What can companies learn about you by analyzing your voice & manner of speaking (e.g., in voice commands/messages/calls/memos)? In this thread, I summarize our study on the largely overlooked privacy impacts of modern voice & speech analysis. #privacy #datamining #IoT #ethics 1/n

Link to paper (open access): link.springer.com/content/pdf/10…. The paper provides a structured overview of personal information that can be inferred from voice recordings by using machine learning techniques. 2/n

Through the lens of data analytics, certain speech characteristics can carry more information than the words themselves (e.g., accent, dialect, sociolect, lexical diversity, patterns of word use, speaking rate & rhythms, intonation, pitch, loudness, formant frequencies). 3/n
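To make a few of these features concrete, here is a minimal Python sketch. It is not the method used in the paper or in any product. It computes textbook versions of four features: lexical diversity as a type-token ratio, speaking rate in words per second, loudness as RMS amplitude, and pitch via a simple autocorrelation peak search. All function names are hypothetical. The sketch assumes a plain transcript string and mono audio as a list of float samples. The 75 to 400 Hz pitch search range is an assumed range for typical voices.

```python
import math


def type_token_ratio(transcript: str) -> float:
    """Lexical diversity: number of distinct words divided by total words."""
    words = [w.strip(".,!?;:").lower() for w in transcript.split()]
    words = [w for w in words if w]
    return len(set(words)) / len(words) if words else 0.0


def speaking_rate(transcript: str, duration_s: float) -> float:
    """Words per second over the whole recording (pauses included)."""
    if duration_s <= 0:
        raise ValueError("duration must be positive")
    return len(transcript.split()) / duration_s


def rms_loudness(samples: list[float]) -> float:
    """Root-mean-square amplitude, a crude proxy for perceived loudness."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def estimate_pitch(samples: list[float], sample_rate: int,
                   fmin: float = 75.0, fmax: float = 400.0) -> float:
    """Crude fundamental-frequency estimate: choose the lag with the largest
    (unnormalized) autocorrelation inside an assumed voice range."""
    min_lag = int(sample_rate / fmax)  # shortest period considered
    max_lag = int(sample_rate / fmin)  # longest period considered
    best_lag, best_corr = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        corr = sum(samples[i] * samples[i + lag]
                   for i in range(len(samples) - lag))
        if corr > best_corr:
            best_lag, best_corr = lag, corr
    return sample_rate / best_lag


if __name__ == "__main__":
    # Synthetic 0.1 s "voice": a 200 Hz sine wave sampled at 16 kHz.
    sr = 16000
    signal = [0.5 * math.sin(2 * math.pi * 200 * i / sr) for i in range(1600)]
    print(type_token_ratio("The cat saw the dog."))
    print(speaking_rate("the cat saw the dog", 2.0))
    print(rms_loudness(signal))
    print(estimate_pitch(signal, sr))
```

Real systems use far more robust feature extractors and learned models on top of them. The point is only that each of these features is a cheap numeric summary of a recording, which can then serve as input to a classifier.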

Drawing on patents & literature from diverse disciplines, our paper shows that such features can reveal a speaker’s biometric identity, body features, sex, age, personality traits, mental & physical health, emotions, drug habits, geographical origin, and socioeconomic status. 4/n

Let me give some examples. Based on certain speech parameters, such as loudness, roughness, hoarseness or nasality and indicative sounds like sniffles, coughs or sneezes, voice recordings can contain rich information about a speaker’s state of health. 5/n

This includes not only information about voice & communication disorders, but also conditions beyond speech production (e.g., asthma, Alzheimer’s, Huntington's, Parkinson's, ALS, respiratory tract infections caused by the common cold or flu) and overall health/fitness. 6/n

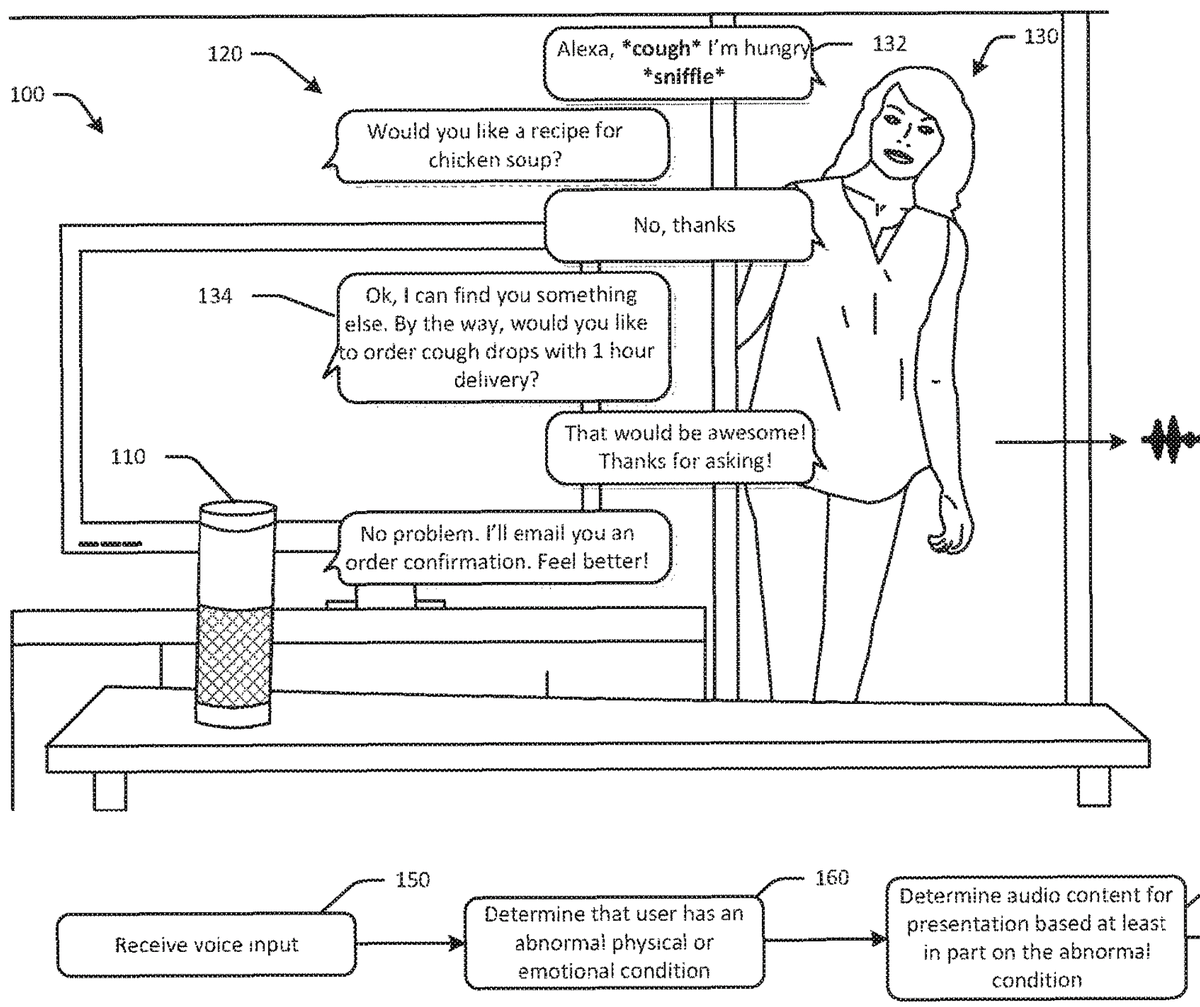

One interesting example is that Amazon has patented a system to analyze voice commands recorded by a smart speaker to assess the user’s health: patents.google.com/patent/US10096… 7/n

Certain voice & speech characteristics can also reveal a speaker’s drug habits, both in terms of acute intoxication (e.g., alcohol, MDMA) and long-term drug use & addiction (e.g., voice-based smoker detection). 8/n

Methods exist to automatically estimate geographical origin & “degree of nativeness” based on a person's accent. Non-native speakers can be detected even when they are fluent in the spoken language and have lived in the respective host country for several years. 9/n

Based on tone of voice, use of filler words, fluency of speaking, etc., recorded speech may also reveal how a speaker tends to be perceived by other people (e.g., in terms of charisma, intelligence, character traits). 10/n

Automated approaches have been proposed to predict a person's age range (child/adolescent/adult/senior) or even estimate the actual year of birth based on speech parameters alone. 11/n

Besides human speech, voice recordings often contain ambient noise. Algorithms can analyze the environment in which an audio sequence was recorded and classify audio events (e.g., daily activities, media consumption, the type of food a person is eating). 12/n

We conducted a survey and found that most people, including data protection and IT professionals, are not aware of the detailed personal information that companies can infer from voice recordings (study available here: doi.org/10.2478/popets…). 13/n

Inference methods are mostly developed & deployed behind closed doors, subject to non-disclosure agreements. Judging by their R&D investments, some companies likely have far greater capabilities than what is known from published research. 14/n

Of course, drawing inferences from speech data is not trivial & inference methods are never faultless. However, for many attacks and profiling purposes, 100% accuracy isn’t needed. Inaccurate methods will be used nonetheless, causing additional discriminatory side-effects. 15/n

Internet-connected mics have become ubiquitous (in phones, tablets, laptops, cameras, smartwatches, connected cars, voice-enabled remotes, intercoms, baby monitors, smart speakers, etc.). The number of smart speakers in use is forecast to reach 640 million globally by 2024. 16/n

Mics & other embedded sensors improve our lives in many important ways, no question about that. The same is true for inferential analytics. I see countless beneficial applications. But to realize them in a socially acceptable manner, adequate privacy protection is needed. 17/n

Existing technical & legal countermeasures don’t offer reliable protection against undesired inferences. Considering the ubiquity of microphones and the soaring popularity of voice-based technology (e.g., voice assistants), more effective safeguards are urgently needed. 18/n

Of course, the inference problem goes far beyond audio recordings, encompassing countless other sensors and data sources. In recent work, we have also examined the privacy-invading potential of eye tracking data: link.springer.com/content/pdf/10… ... 19/n

… and accelerometer data (= fine-grained device motion recorded by almost all mobile devices & wearables): dl.acm.org/doi/pdf/10.114…. 20/n

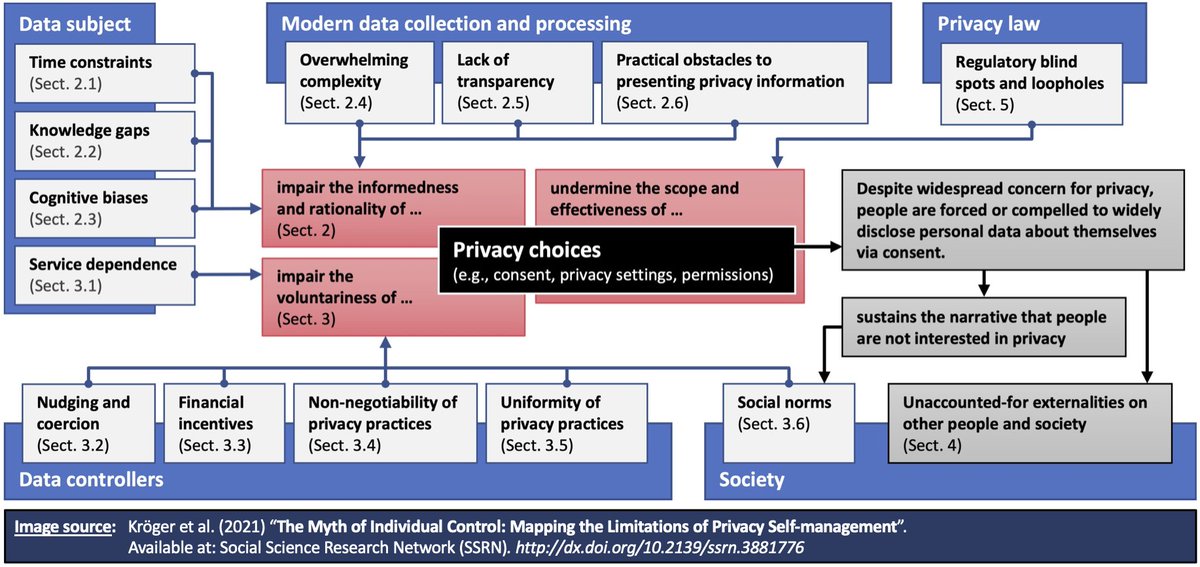

Given the subject’s complexity, users cannot be expected to “defend themselves” or manage their privacy in a truly informed manner, calling into question the prevalent legal paradigm of notice-and-consent (a.k.a. privacy self-management). 21/n

Even in much less complex settings, people’s privacy choices are typically irrational, involuntary and/or circumventable due to human limitations, corporate tricks and legal loopholes – as we discuss in-depth in other recent work: ssrn.com/abstract=38817… 22/n

Thus, the issue discussed in this thread is yet another reminder that we urgently need to challenge the misleading notion of “informed consent” when it comes to our privacy choices. The prevalent data protection paradigm of notice-and-choice is completely dysfunctional. 23/n

We have outlined alternative approaches here (under Sect. 7): ssrn.com/abstract=38817…. Note that most existing ideas in this area are still vague and hypothetical. To arrive at actionable policy recommendations, further research on this issue is urgently needed. 24/n

Thank you so much for reading. I will post more information/updates soon.

Feel free to share your thoughts and ideas!