Hi all,

You might have noticed my short-term projections for the NSW outbreak yesterday were pretty nuts. It turns out this projection was unrealistic, due to some approximations in my code that are no longer valid now that growth rates are higher. 1/🧵

https://twitter.com/Chrisbilbo/status/1471243060759515136

So first, apologies. These issues are fixed now and today's projection ought to be more realistic. Secondly, gory details below. There were two problems. 2/🧵

Problem 1: smoothing. Many have noticed my "smoothed cases" line didn't actually go through the middle of the data. This is not incorrect per se: it's what correct smoothing with a symmetric smoothing window looks like when the data has a nonzero second derivative. 3/🧵

What's incorrect is to use that "smoothed cases" line as an estimate of the current caseload. Because the smoothing was in a sense bringing some of the influence of future large caseloads to the present, this made the starting point for projections a few days ahead of reality. 4/🧵

I'm now doing the smoothing in log space: taking the log of case numbers, smoothing, then exponentiating again. Exponentials are straight lines in log space, with zero second derivative, so the problem goes away. 5/🧵

This kind of smoothing doesn't preserve the total case numbers, which is unappealing, but seems like the better compromise to make. 6/🧵
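The effect can be reproduced on synthetic data. This is a minimal sketch, not the code behind these projections: the Gaussian kernel, the 3-day width, the 0.2/day growth rate and the helper name `gaussian_smooth` are all assumptions chosen for illustration.

```python
import math

def gaussian_smooth(values, sigma=3.0):
    """Symmetric Gaussian smoothing; weights are renormalised at each point."""
    n = len(values)
    smoothed = []
    for i in range(n):
        weights = [math.exp(-0.5 * ((i - j) / sigma) ** 2) for j in range(n)]
        total = sum(weights)
        smoothed.append(sum(w * v for w, v in zip(weights, values)) / total)
    return smoothed

# Synthetic, purely exponential "case numbers" (assumed growth of 0.2 per day).
cases = [100.0 * math.exp(0.2 * day) for day in range(41)]
mid = 20  # centre of the series, so the window is exactly symmetric here

# Smoothing the raw counts: the curvature of the exponential pulls the line up.
linear_smoothed = gaussian_smooth(cases)

# Smoothing in log space: log, smooth, exponentiate.
log_smoothed = [math.exp(v) for v in gaussian_smooth([math.log(c) for c in cases])]

linear_ratio = linear_smoothed[mid] / cases[mid]
log_ratio = log_smoothed[mid] / cases[mid]
print(f"linear-space smoothed / true: {linear_ratio:.3f}")
print(f"log-space smoothed / true:    {log_ratio:.3f}")
```

On this synthetic series the linear-space line sits roughly 20% above the true value at the midpoint, while the log-space line passes through it. Real data is noisy and not perfectly exponential, so the difference will be less clean in practice.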

Problem 2: generation distributions. I estimate R_eff as the 5-day growth factor in new cases. This approximates that everyone transmits after exactly 5 days, when in reality, that's just an average. That itself wouldn't be too bad; this is probably an OK estimate of R_eff. 7/🧵

The problem is that the short-term projection comes not from merely extrapolating that same growth rate, but from feeding it to an SIR model. In (simple) SIR models, transmissions are exponentially distributed in time. 8/🧵

Exponential distributions have more probability at shorter times. Transmissions that occur earlier have more time to go on to transmit again, such that an SIR model given an R_eff of 3 and 5-day mean generation time will result in a 5-day growth factor *larger* than 3.

9/🧵

This didn't matter when R_eff was closer to 1.0, but is now a massive error. Feeding R_eff = 3 to an SIR model with exponentially distributed transmissions would have resulted in a 5-day growth factor of about 7.4 (e^2).

10/🧵

This is likely why the projection was blowing up to 30k cases in only a week. That's not realistic at all for the timescale of a week. 11/🧵
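The size of that overshoot can be checked with a toy model. The sketch below Euler-integrates a textbook SIR model and measures how much daily new infections grow over one 5-day mean generation time. It is an illustration under stated assumptions, not the projection code: the function name `sir_growth_factor`, the population size, the step size and the single seed infection are all hypothetical choices.

```python
import math

def sir_growth_factor(r_eff, tau=5.0, days=5.0, dt=0.001, population=1e9):
    """Growth in daily new infections over `days` for a simple SIR model.

    tau is the mean generation time; infectious periods are exponentially
    distributed with rate gamma = 1 / tau. The population is huge, so
    depletion of susceptibles is negligible over this window.
    """
    gamma = 1.0 / tau
    beta = r_eff * gamma
    infectious = 1.0
    susceptible = population - infectious
    incidence_start = beta * susceptible * infectious / population
    for _ in range(round(days / dt)):
        new_infections = beta * susceptible * infectious / population * dt
        recoveries = gamma * infectious * dt
        susceptible -= new_infections
        infectious += new_infections - recoveries
    incidence_end = beta * susceptible * infectious / population
    return incidence_end / incidence_start

for r_eff in (1.1, 3.0):
    print(f"R_eff = {r_eff}: 5-day growth factor = {sir_growth_factor(r_eff):.2f}")
```

In the early phase the result matches exp(R_eff - 1): close to R_eff itself when R_eff is near 1, but well above it when R_eff is 3.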

I've resolved this by converting R_eff before feeding it to the SIR model, such that the result has the same 5-day growth factor. The formula is simple: R' = 1 + log(R), where log is the natural log, R' is what I'm feeding to the SIR model, and R is what's obtained from the 5-day growth factor. 12/🧵
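As a quick check of the conversion, here is a small sketch. The function names are hypothetical, and it assumes the early-phase SIR relationship implied by the formula: a model fed R' grows by a factor of exp(R' - 1) over one mean generation time.

```python
import math

def to_sir_r(r_growth):
    """Convert a 5-day growth factor R into the R' fed to the SIR model."""
    return 1.0 + math.log(r_growth)

def sir_five_day_growth(r_sir):
    """Early-phase 5-day growth factor of an SIR model with 5-day mean generation time."""
    return math.exp(r_sir - 1.0)

for r in (1.1, 3.0):
    r_sir = to_sir_r(r)
    print(f"R = {r}: R' = {r_sir:.3f}, "
          f"growth with R' = {sir_five_day_growth(r_sir):.2f}, "
          f"growth without conversion = {sir_five_day_growth(r):.2f}")
```

For R = 1.1 the conversion barely changes anything (R' is about 1.095), which is consistent with the error going unnoticed at low growth rates. For R = 3 it gives R' of about 2.10, and the converted value reproduces the intended 5-day growth factor of 3.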

I got this conversion formula from the following paper that discusses these distinctions: ncbi.nlm.nih.gov/pmc/articles/P…

13/🧵

I also only twigged this issue was a possibility because someone on twitter pointed it out months ago. I don't remember who they were, but thanks and hello if you're reading! I didn't fix it at the time because it didn't matter unless R was large, and who expected that? 14/🧵

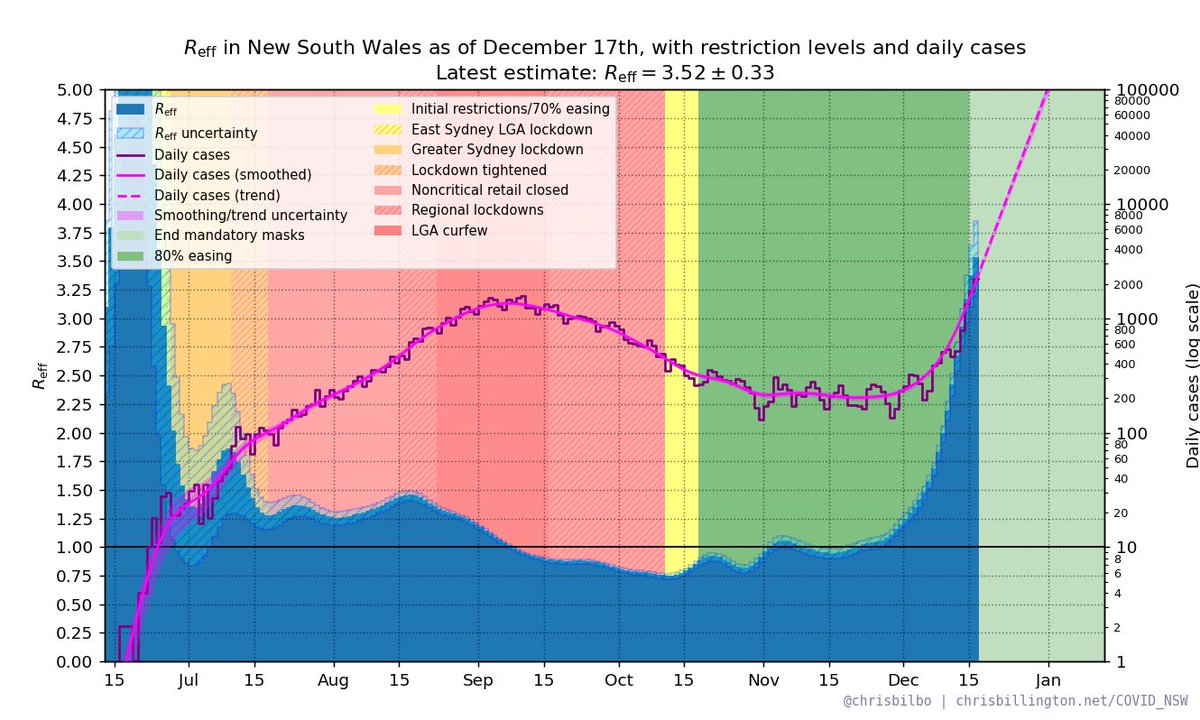

With these two issues resolved the short-term projection is more realistic:

| Day | Cases | 68% range |
|-----|-------|-----------|
| Fri | 1873 | 1557–2209 |
| Sat | 2283 | 1878–2727 |
| Sun | 2789 | 2266–3368 |
| Mon | 3401 | 2727–4158 |
| Tue | 4141 | 3286–5137 |
| Wed | 5032 | 3932–6329 |
| Thu | 6117 | 4716–7788 |

15/🧵

Apologies again for putting out dodgy numbers, and appreciate the feedback that helps find issues like these. Please share the fact that this was wrong with anyone you hear quoting the stupid numbers I put out yesterday. 16/16.
