Let's build something new: a screenshot repo with a custom domain. Datastore is S3, DNS is CloudFlare. Eeny meeny miney Pulumi. @PulumiCorp, you're up.

They have a handy "S3 static site" tutorial option. It's in JavaScript, with a link to the Python code. Nice!

The first command errors. Less than nice.

(It wants `pulumi new` first).

They offer sample code on GitHub. This is why I have @cassido's keyboard handy.

Important to set a region. I'm in San Francisco but I pick the further-away us-west-2 @awscloud region because while I do reasonably well, I don't make us-west-1 kind of money.

I like the compromise here between "here's a painful level of detail" and "it'll be fine, trust us. It's only production!"

The only resource is the stack because I forgot to save the file. I fix that, rerun `pulumi up` and run into the bane of all CLI tools: a stack trace that breaks immersion.

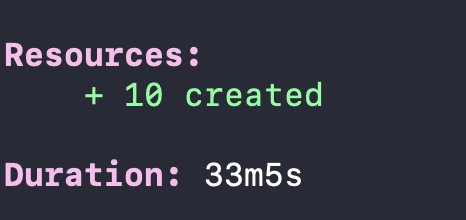

`pip install pulumi_aws` and a rerun results in a successful outcome. Huzzah.

By default it named the bucket "s3-bucket" plus a random string. This is less than ideal.

Pulumi may be the first thing I've seen that deleted an S3 bucket and its contents without requiring manual action from me.

Of course, @awscloud begins predictably losing its mind about the open bucket despite the fact that I've only permitted access from @CloudFlare's proxy IP ranges. I wish there were a better way to establish trust between the two providers.
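For anyone following along, here's a rough sketch of what "only permit the proxy's IP ranges" can look like as a bucket policy. Everything named here is an assumption for illustration: the function name, the bucket name, and especially the CIDR blocks, which are RFC 5737 documentation ranges and not CloudFlare's real ones (pull those from CloudFlare's published list).

```python
import json

# Placeholder CIDR blocks (RFC 5737 documentation ranges).
# These are NOT CloudFlare's real proxy ranges; substitute the published list.
EXAMPLE_PROXY_RANGES = ["192.0.2.0/24", "198.51.100.0/24"]


def ip_restricted_read_policy(bucket_name, cidr_blocks):
    """Build a bucket policy dict allowing s3:GetObject only from the given CIDRs."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowReadFromProxyRanges",
                "Effect": "Allow",
                "Principal": "*",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
                # Requests from any other source IP do not match this Allow.
                "Condition": {"IpAddress": {"aws:SourceIp": list(cidr_blocks)}},
            }
        ],
    }


if __name__ == "__main__":
    # "example-screenshots" is a hypothetical bucket name.
    policy = ip_restricted_read_policy("example-screenshots", EXAMPLE_PROXY_RANGES)
    print(json.dumps(policy, indent=2))
```

The resulting JSON is what you'd hand to whatever tool attaches the bucket policy.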

Oops. Clearly a copy/paste failure, @cassidoo.

https://twitter.com/QuinnyPig/status/1491135430863253504

It looks like having an S3 bucket fronted by CloudFlare is an exercise in frustration with regard to bucket naming, etc.

New plan. CloudFlare holds DNS, which will point to CloudFront in order to cache the images.

"Oh, you want to use CloudFront + ACM + Route 53 + S3? No worries, that's just 200 lines of Python away!"

github.com/pulumi/example…

Sheesh, 200 lines and it doesn't even lock the S3 bucket down to the CloudFront origin, so @awscloud is still gonna scream its head off.

Pulumi has been lightning fast, but the ACM verification delay and the CloudFront distribution delay team up to make the initial deployment look like one of the providers is "open a service ticket with the helpdesk."

Okay, I'm not nuts; there's a @pulumicorp doc bug. Trying to lock down the S3 bucket to only allow the CloudFront distribution to access it. The only other reference I see to this on GitHub has a "This is broken" comment attached. Looks like a Terraform translation issue.

(The fact that I can't find any examples of someone locking down an S3 bucket via Pulumi is uh... not indicative of positive findings.)
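For reference, the general shape of "only CloudFront may read this bucket" is a bucket policy whose principal is the distribution's origin access identity. A minimal sketch, assuming an OAI-based setup; the function name, bucket name, and OAI ID below are made up, and this is not taken from any Pulumi example.

```python
import json


def cloudfront_only_policy(bucket_name, oai_id):
    """Build a bucket policy dict granting s3:GetObject to one CloudFront OAI."""
    principal_arn = (
        "arn:aws:iam::cloudfront:user/"
        f"CloudFront Origin Access Identity {oai_id}"
    )
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowCloudFrontRead",
                "Effect": "Allow",
                "Principal": {"AWS": principal_arn},
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            }
        ],
    }


if __name__ == "__main__":
    # Both arguments are hypothetical placeholders.
    print(json.dumps(cloudfront_only_policy("example-screenshots", "EXAMPLEOAIID"), indent=2))
```

With a policy like this in place and public access blocked, direct requests to the bucket should be denied while the distribution can still fetch objects.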

It's not purely about speed; it's also about "has someone else on the internet attempted to do the thing I'm trying to get to work."

Terraform to its credit has that in spades.

https://twitter.com/karnauskas/status/1491227094546018304

And now I've stumbled into "we can't even spit out an error that corresponds to the mess you've made in your code" territory, because I'm lucky like that. It's probably time to call it a night.

Er, I was unclear here. It deleted the bucket when told to destroy it without stopping to ask me if I was REALLY sure, or making me jump through hoops. It’s what I wanted, not a bug!

https://twitter.com/quinnypig/status/1491139762434248704

All credit to @briggsl; his gist got me sorted out. Onward!

https://twitter.com/briggsl/status/1491238518756364288

Ugh. Save me from the S3 API. After messing around with Pulumi's intelligent tiering configuration for too long, it uh... only adjusts the archive settings. The way to enable Intelligent Tiering is via lifecycle policy.

#awswishlist: Let me specify a default storage class for everything placed into a bucket. Don't make me eat a lifecycle transition fee for all of them.
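To make the lifecycle route concrete: a sketch of a lifecycle configuration that transitions every object to Intelligent-Tiering, in the dict shape the S3 lifecycle API takes. The function name and rule ID are my own inventions, and the zero-day default is an assumption about what you'd want, not what my stack necessarily uses.

```python
import json


def intelligent_tiering_lifecycle(days=0):
    """Build a lifecycle configuration moving all objects to Intelligent-Tiering."""
    return {
        "Rules": [
            {
                "ID": "move-to-intelligent-tiering",  # hypothetical rule name
                "Status": "Enabled",
                "Filter": {"Prefix": ""},  # empty prefix matches every object
                "Transitions": [
                    {"Days": days, "StorageClass": "INTELLIGENT_TIERING"}
                ],
            }
        ]
    }


if __name__ == "__main__":
    print(json.dumps(intelligent_tiering_lifecycle(), indent=2))
```

Each object still gets moved by a transition after upload, which is exactly the per-object fee the wishlist item above is complaining about.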

And thanks to @DropshareApps my new "shitposting.pictures" screenshot domain is secure, intelligently tiered, offers Twitter preview cards, and is managed via @PulumiCorp.

shitposting.pictures/FumTHzAec1Hr9

So my takeaways on Pulumi:

Overall I like it. It's got promise. I think that it relies overly much on users having familiarity with Terraform (I don't have much, for reference).

"This is better/faster/easier in Terraform" overlooks the target audience for something like Pulumi. That's forgivable; I think that Pulumi itself hasn't clearly articulated whether it's targeting developers or folks with ops backgrounds.

Remember how messed up it seemed when Amazon started shipping things next day? You'd click the button, a box showed up tomorrow. It felt suspiciously fast.

That's how Pulumi feels. I make a change, it's live less than ten seconds later.

Also, and I recognize that this is wildly controversial, maybe try to sell me something at some point in this process?

Further, I see big opportunity here for product advancement. I have this thing deploying to production, the end. Build out a tiered dev structure. Build out CI/CD for me. Be prescriptive for those of us without existing systems.

And @funcOfJoe's post here is great, but... please build this in. :-) "Specifying set of tags" is the sort of thing that a LOT of people will benefit from; make it a Pulumi feature directly. Just rolled this out, it was too much manual work.

pulumi.com/blog/automatic…
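The core of "specify a set of tags once" is just a merge. Here's a sketch of only that merge logic in plain Python; it is not the code from the linked post and doesn't touch Pulumi's API. The function name and the example tag keys are hypothetical.

```python
def with_default_tags(props, default_tags):
    """Return a copy of resource props with default tags merged in.

    Tags already set on the resource win over the defaults.
    """
    merged = dict(default_tags)
    merged.update(props.get("tags") or {})
    return {**props, "tags": merged}


if __name__ == "__main__":
    defaults = {"owner": "me", "env": "dev"}  # hypothetical default tags
    bucket_props = {"bucket": "example-screenshots", "tags": {"env": "prod"}}
    print(with_default_tags(bucket_props, defaults))
```

Wiring something like this in so it runs for every taggable resource is the manual part I'd love to see built in.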
