I've done a lot of yowling about high cardinality -- what it is, why you can't have observability without it.

I haven't made nearly as much noise about ✨high dimensionality✨. Which is unfortunate, because it is every bit as fundamental to true observability. Let's fix this!

If you accept my definition of observability (the ability to understand any unknown system state just by asking questions from the outside; it's all about the unknown-unknowns), then you understand why o11y is built out of arbitrarily-wide structured data blobs.

If you want to brush up on any of this, here are some links on observability:

* honeycomb.io/blog/so-you-wa…

* thenewstack.io/observability-…

* charity.wtf/2020/03/03/obs…

and on wide events:

* charity.wtf/2019/02/05/log…

* kislayverma.com/programming/pu…

These wide events consist of rich context and metadata, collected into one event per request per service. You can also think of them as "spans". They have lots of key/value pairs (and simple data structures) and some dedicated fields for trace id, span id, request id, etc.
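To make "one event per request per service" concrete, here's a minimal sketch in Python. `WideEvent`, its methods, and every field name (`trace.trace_id`, `http.route`, and so on) are hypothetical, made up for illustration; this is not any vendor's SDK. The pattern: open an event when the request starts, append whatever context you learn along the way, and send it once when the request finishes.

```python
import json
import time
import uuid
from typing import Any, Dict, Optional


class WideEvent:
    """Hypothetical helper: accumulates one wide event per request per service."""

    def __init__(self, service: str, trace_id: str, parent_id: Optional[str] = None) -> None:
        self._start = time.monotonic()
        # Dedicated tracing fields; these key names are illustrative, not a standard.
        self.fields: Dict[str, Any] = {
            "service": service,
            "trace.trace_id": trace_id,
            "trace.span_id": uuid.uuid4().hex[:16],
            "trace.parent_id": parent_id,
            "request_id": uuid.uuid4().hex,
        }

    def add(self, key: str, value: Any) -> None:
        # Append any detail that might be useful later; nothing is declared up front.
        self.fields[key] = value

    def finish(self) -> Dict[str, Any]:
        # Record the duration and hand back the whole event, ready to be sent once.
        self.fields["duration_ms"] = (time.monotonic() - self._start) * 1000.0
        return self.fields


# Example: one request to the API service.
event = WideEvent("api", trace_id=uuid.uuid4().hex)
event.add("http.method", "GET")
event.add("http.route", "/export")
event.add("user.id", "abc")
event.add("http.status", 403)
print(json.dumps(event.finish(), indent=2))
```

The event is just a flat dict, so adding a field is one line of code at the point where you learn something interesting.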

When we talk about high cardinality for observability, we mean that any key in these key/value pairs can take a kajillion possible values, like query strings or UUIDs.

Here's where some tools get tricksy with the marketing language. They say they support high cardinality... but it comes with lots of hidden costs or limitations. Like, you can have a FEW high-cardinality dimensions, but only a limited number, and you have to choose them in advance.

Or they won't actually let you break down or group by that one-in-a-million UUID; they'll only let you append it as a tag. Or maybe they'll let you add some number of high-cardinality dimensions, but the cost goes up linearly with each and every one.

These are good clues that you're being sold a bunch of patches on top of a last-generation tool, not real observability.

If cardinality refers to the values, dimensionality refers to the keys. "High dimensionality" means the tool supports extremely wide events: many hundreds of k/v pairs and structs per event.
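One way to see the values-vs-keys distinction: count the distinct values seen for each key (cardinality) and count the keys themselves (dimensionality). This is a toy in-memory sketch over a handful of made-up events, not how any real storage engine computes it; the function name `cardinality_by_key` is hypothetical.

```python
import json
from collections import defaultdict
from typing import Any, Dict, Iterable, Set


def cardinality_by_key(events: Iterable[Dict[str, Any]]) -> Dict[str, int]:
    """Count distinct values per key; the number of keys is the dimensionality."""
    distinct: Dict[str, Set[str]] = defaultdict(set)
    for event in events:
        for key, value in event.items():
            # Serialize so nested structs (dicts/lists) can be compared as set members.
            distinct[key].add(json.dumps(value, sort_keys=True, default=str))
    return {key: len(values) for key, values in distinct.items()}


events = [
    {"host": "xyz", "user_id": "u1", "status": 200},
    {"host": "xyz", "user_id": "u2", "status": 500},
    {"host": "abc", "user_id": "u3", "status": 200, "feature_flag": "new_checkout"},
]
cardinality = cardinality_by_key(events)
print(len(cardinality))  # dimensionality: 4 keys
print(cardinality)       # {'host': 2, 'user_id': 3, 'status': 2, 'feature_flag': 1}
```

With real traffic, `user_id` keeps climbing toward one value per user (high cardinality), while the number of keys climbs every time someone instruments a new detail (high dimensionality).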

The wider the event, the richer the context, the more powerful the tool.

Say you have a schema that defines six high-cardinality dimensions per event:

* time

* app

* host

* user

* endpoint

* status

This allows you to slice and dice and look for any combination of results or outliers. Like, "all of the errors in that spike were for host xyz", or "all of those 403s were to the /export endpoint by user abc", or "all of the timeouts to the /payment endpoint were on host blah".
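Here's a rough sketch of what those questions look like as queries: filter on any fields, then group by any combination of fields. The `breakdown` function and the sample events are hypothetical; a real tool needs a storage engine built to do this fast over billions of events, and this only shows the query semantics.

```python
from collections import Counter
from typing import Any, Dict, Iterable, Sequence


def breakdown(
    events: Iterable[Dict[str, Any]],
    where: Dict[str, Any],
    group_by: Sequence[str],
) -> Counter:
    """Keep events matching every `where` predicate, then count by any combination of keys."""
    counts: Counter = Counter()
    for event in events:
        if all(event.get(key) == value for key, value in where.items()):
            # Missing keys group as None instead of raising.
            counts[tuple(event.get(key) for key in group_by)] += 1
    return counts


events = [
    {"endpoint": "/export", "user": "abc", "host": "h1", "status": 403},
    {"endpoint": "/export", "user": "abc", "host": "h2", "status": 403},
    {"endpoint": "/export", "user": "def", "host": "h1", "status": 200},
    {"endpoint": "/payment", "user": "ghi", "host": "blah", "status": 504},
]
# "All of those 403s were to the /export endpoint by user abc."
print(breakdown(events, where={"status": 403}, group_by=["endpoint", "user"]))
# Counter({('/export', 'abc'): 2})
# "All of the timeouts to /payment were on host blah."
print(breakdown(events, where={"endpoint": "/payment", "status": 504}, group_by=["host"]))
# Counter({('blah',): 1})
```

Nothing here privileges any particular key: `where` and `group_by` accept any fields, so stringing along five, ten, or twenty dimensions is just a longer dict or list.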

Super useful, right?

The wider the event, the richer the context, and the more powerful and high-precision your scalpel becomes.

Now imagine that instead of six basic dimensions, you can toss in literally any detail, value, counter, string, etc. that seems like it might be useful at some future date.

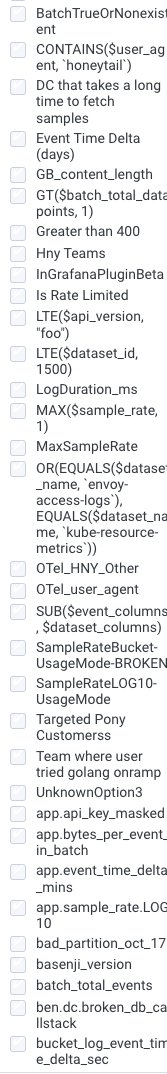

(below is a kludgey screencap of some of the dimensions captured for our API service)

(below is a kludgey screencap of some of the dimensions captured for our API service)

It's effectively free if you toss a few more bytes onto the event -- effectively free for us to store, that is; we charge by the event so it's LITERALLY free for you.

And you can slice and dice, mix and match at will. String along five, ten, twenty or more dimensions in a query.

But who can keep track of a schema with 300+ dimensions in it?? Great question; nobody can. 🙃

That's why we say "arbitrarily-wide" events: because the "schema" is inferred. You just append details whenever something seems useful, and don't send it when it's not.
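A minimal sketch of what "the schema is inferred" can mean: nobody declares fields up front; the schema is just the union of whatever keys have actually shown up, plus the types observed for each. `infer_schema` and the sample events are hypothetical, and this is an illustration of the idea rather than how any particular tool implements it.

```python
from typing import Any, Dict, Iterable, Set


def infer_schema(events: Iterable[Dict[str, Any]]) -> Dict[str, Set[str]]:
    """Build a schema from whatever keys actually show up, recording observed types."""
    schema: Dict[str, Set[str]] = {}
    for event in events:
        for key, value in event.items():
            # A key enters the schema the first time any event carries it.
            schema.setdefault(key, set()).add(type(value).__name__)
    return schema


events = [
    {"endpoint": "/export", "duration_ms": 12.5},
    {"endpoint": "/payment", "duration_ms": 230.1, "cart.items": 3},
    {"endpoint": "/login", "auth.method": "sso"},
]
schema = infer_schema(events)
print(sorted(schema))        # ['auth.method', 'cart.items', 'duration_ms', 'endpoint']
print(schema["cart.items"])  # {'int'}
```

Events that don't carry `cart.items` simply don't have it; a query filtering on that key just won't match them, so sparse fields cost nothing to the events that skip them.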

With modern systems, it's not enough to gather a few trusty rusty dimensions about your user requests.

You need to gather incredibly rich detail about everything happening at the intersection of users and code. More dimensions equals more context. And then you need a scalpel that can pick apart traffic at the individual request level, identifying outliers based on version numbers, feature flags, and any/every combination of details about your headers, environment, devices, queries, storage internals, user metadata, and more.
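One simple way to sketch that scalpel: split events into the "interesting" ones (slow, erroring) and everything else, then rank every key/value pair by how much more common it is among the interesting events. The function `rank_differences`, its scoring rule, and the sample data are all hypothetical, a plain illustration of comparing a subset against a baseline rather than any product's actual algorithm.

```python
from collections import Counter
from typing import Any, Callable, Dict, Iterable, List, Tuple

Event = Dict[str, Any]


def rank_differences(
    events: List[Event],
    is_interesting: Callable[[Event], bool],
    ignore: Iterable[str] = (),
) -> List[Tuple[float, str, Any]]:
    """Rank (key, value) pairs by how over-represented they are in the interesting subset.

    Assumes scalar (hashable) values; nested structs would need flattening first.
    """
    skip = set(ignore)
    selected = [e for e in events if is_interesting(e)]
    baseline = [e for e in events if not is_interesting(e)]
    if not selected or not baseline:
        return []

    def frequencies(group: List[Event]) -> Counter:
        counts: Counter = Counter()
        for event in group:
            for key, value in event.items():
                if key not in skip:
                    counts[(key, value)] += 1
        return counts

    selected_counts = frequencies(selected)
    baseline_counts = frequencies(baseline)
    results = []
    for (key, value), n in selected_counts.items():
        # Share of interesting events with this value minus share of baseline events with it.
        diff = n / len(selected) - baseline_counts[(key, value)] / len(baseline)
        results.append((diff, key, value))
    # Sort on the score only, so mixed value types never get compared on ties.
    results.sort(key=lambda r: r[0], reverse=True)
    return results


events = [
    {"version": "v2", "flag.new_cache": True, "duration_ms": 900},
    {"version": "v2", "flag.new_cache": True, "duration_ms": 850},
    {"version": "v1", "flag.new_cache": False, "duration_ms": 40},
    {"version": "v1", "flag.new_cache": True, "duration_ms": 35},
    {"version": "v1", "flag.new_cache": False, "duration_ms": 50},
]
slow = rank_differences(events, lambda e: e["duration_ms"] > 500, ignore=["duration_ms"])
for diff, key, value in slow:
    print(f"{key}={value}: {diff:+.2f}")
# version=v2: +1.00
# flag.new_cache=True: +0.67
```

The field used to define "interesting" is passed in `ignore`, since it would otherwise trivially top the list. Every other key is a candidate, which is exactly why wider events give the scalpel more to work with.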

There are a million different definitions of observability floating around, and I'm probably responsible for more than my fair share of them. But as a user, the key functionality to watch for is:

* high-cardinality

* high-dimensionality

* explorability

Accept no substitutes. 🐝