im looking to start an interest group crossing over @full_stack_dl + @ml_collective!

we'll work through long-form content (h/t @chipro + @sh_reya) first, w sync discussions weekly to keep us on track

async folks can chat on discord, contribute to a wiki, + catch the recordings

this follows the format of really successful MLC interest groups in e.g. NLP (notion.so/MLC-NLP-Paper-…) and Computer Vision (notion.so/MLC-Computer-V…)

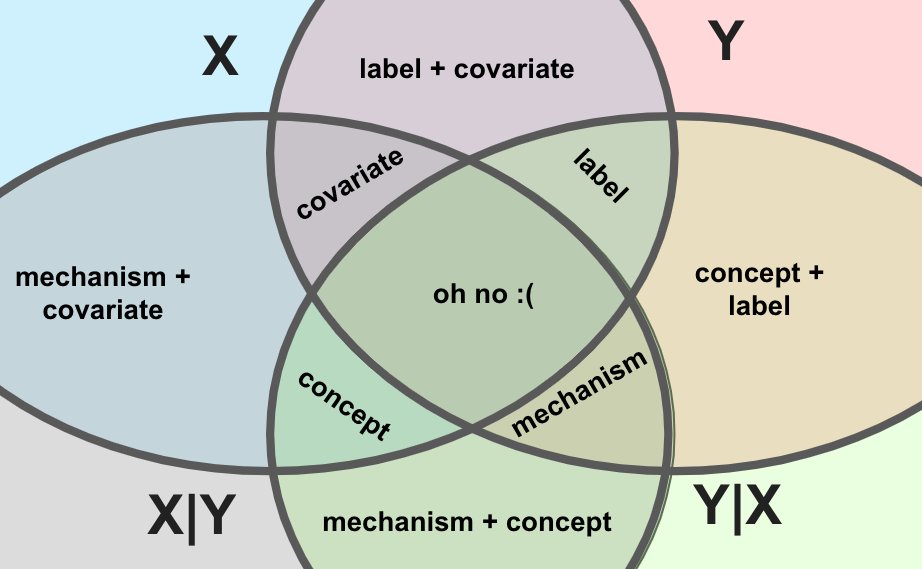

this group will focus on problems in production ML, like building datasets, monitoring models, and designing robust systems

we're organizing through the MLCollective Open Collab discord. won't link it on Twitter because bots + griefers

it's towards the bottom of the page here: mlcollective.org

come thru and drop some 🥞 if yr tryna!

(ps the post about the group is in the #general channel. you can ask questions there!)
