This is the literal step-by-step process I use for #keywordresearch and to determine what to write about next on my sites.

For this you'll need

✅ @ahrefs

✅ Google Sheets

✅ A small bit of Python (my script + instructions are provided below)

#nichesites #seo #contentsites

🧵🪡

First, start with a super broad keyword that is relevant to your site and load it into Ahrefs.

I'm using a random word as an example for this thread.

Next, I export this list and import it into Google Sheets, in a tab called Ahrefs.

Then copy all the keywords from column B and paste them into a file called keywords.txt.
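If you'd rather not copy and paste, a few lines of Python can pull the keyword column out of the Ahrefs export for you. This is a minimal sketch, assuming the export is a CSV whose header row names the keyword column `Keyword`; the function names and the file name you pass in are hypothetical, so check them against your own export before relying on it.

```python
import csv
import io
from pathlib import Path


def extract_keywords(csv_text: str, column: str = "Keyword") -> list[str]:
    """Return the non-empty values of one column from exported CSV text.

    Assumption: the export has a header row that contains `column`.
    """
    reader = csv.DictReader(io.StringIO(csv_text, newline=""))
    keywords = []
    for row in reader:
        # row.get(...) can be None for short rows, so fall back to ""
        value = (row.get(column) or "").strip()
        if value:
            keywords.append(value)
    return keywords


def write_keywords_file(export_csv: str, out_txt: str = "keywords.txt") -> int:
    """Read the export file, write one keyword per line, and return the count."""
    # utf-8-sig also strips a byte-order mark if the export starts with one
    text = Path(export_csv).read_text(encoding="utf-8-sig")
    keywords = extract_keywords(text)
    Path(out_txt).write_text("".join(k + "\n" for k in keywords), encoding="utf-8")
    return len(keywords)
```

For example, `write_keywords_file("ahrefs_export.csv")` would write keywords.txt in the current folder and return how many keywords it wrote.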

Now move that keywords.txt file into a folder called Google Counts.

Then head to this Gist page and copy the Python code for a script I've created.

In the Google Counts folder, paste this script into a file called main.py.

gist.github.com/shanejones/8ca…

This script uses Requests to fetch the search URLs it builds (mimicking a normal Google search) and Beautiful Soup to parse the results page HTML and scrape the specific parts of the page we want.
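To make the scraping step concrete, here is a minimal sketch of pulling the result count out of a results page. It is not the script from the Gist: it swaps Beautiful Soup for Python's built-in `html.parser` so it runs without extra installs (with Beautiful Soup the lookup would be `soup.find(id="result-stats")`), and it assumes the count sits in an element with `id="result-stats"` and reads like "About 259,000 results". Google's markup changes often, so treat that id and the English-only pattern as assumptions.

```python
import re
from html.parser import HTMLParser

# Tags that never have a closing tag, so they must not change the nesting depth
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class ResultStatsParser(HTMLParser):
    """Collect the text inside the element whose id is `target_id`."""

    def __init__(self, target_id: str = "result-stats"):
        super().__init__()  # convert_charrefs=True turns &nbsp; into "\u00a0"
        self.target_id = target_id
        self.depth = 0  # > 0 while we are inside the target element
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self.depth:
            self.depth += 1
        elif dict(attrs).get("id") == self.target_id:
            self.depth = 1

    def handle_endtag(self, tag):
        if self.depth and tag not in VOID_TAGS:
            self.depth -= 1

    def handle_data(self, data):
        if self.depth:
            self.chunks.append(data)


def parse_result_count(html: str) -> int:
    """Return the result total shown on the page, or 0 if it can't be found."""
    parser = ResultStatsParser()
    parser.feed(html)
    text = "".join(parser.chunks)
    # First number directly followed by "result"/"results", e.g. "About 259,000 results"
    match = re.search(r"(\d[\d,.\u00a0 ]*)\s*results?\b", text)
    if match is None:
        return 0  # element missing or count hidden
    return int(re.sub(r"\D", "", match.group(1)))
```

Returning 0 when nothing is found keeps the output numeric, but it also means a missing count and a genuine zero look the same.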

ℹ️ NOTE: You may need to install Requests and Beautiful Soup 4 if you don't already have them.

A quick search for "how to install Requests in Python" or "how to install Beautiful Soup 4 in Python" should help you out with this.
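A quick way to see what's missing is to ask Python whether it can find each module. Note that Beautiful Soup 4 is imported as `bs4` but installed from PyPI as `beautifulsoup4`, so on most setups `pip install requests beautifulsoup4` covers both. The helper name below is just for illustration.

```python
import importlib.util


def missing_modules(names: tuple[str, ...] = ("requests", "bs4")) -> list[str]:
    """Return the import names from `names` that Python cannot find."""
    # find_spec returns None for a top-level module that isn't installed
    return [name for name in names if importlib.util.find_spec(name) is None]
```

`missing_modules()` returns an empty list when both libraries are installed.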

This Python script looks for the keywords.txt file in the same folder, performs three Google searches for each keyword, and returns the number of results for:

➡️ Standard search of search term

➡️ Search with quoted "search term"

➡️ An allintitle:Search Term
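The three searches differ only in how the query is written. A minimal sketch of building them, assuming a plain `https://www.google.com/search?q=...` URL; the Gist may build its URLs differently, and the function names here are hypothetical.

```python
from urllib.parse import urlencode


def build_queries(keyword: str) -> dict[str, str]:
    """Return the standard, quoted and allintitle query strings for one keyword."""
    return {
        "search": keyword,
        "quoted": f'"{keyword}"',
        "allintitle": f"allintitle:{keyword}",
    }


def search_url(query: str) -> str:
    """Build a search URL; urlencode escapes quotes, colons and spaces."""
    return "https://www.google.com/search?" + urlencode({"q": query})
```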

Run the script with:

python3 main.py

Your console will then start churning through the list.

⚠️ NOTE: Google doesn't like automated searches like this and may stop giving you results after about 200 keywords, so run this in batches of 200 keywords and maybe switch VPNs between batches to get around this :)
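One way to handle the 200-keyword limit is to split the list into batches and pause between them so you can switch VPN before continuing. This is a sketch under stated assumptions: `fetch_counts` is a hypothetical stand-in for whatever performs the three searches for one keyword, and the 5-second delay between requests is my assumption, not something from the original script; only the batch size of 200 comes from this thread.

```python
import time
from typing import Callable, Iterator, Sequence

Counts = tuple[int, int, int]  # (search, quoted, allintitle) for one keyword


def batches(items: Sequence[str], size: int = 200) -> Iterator[Sequence[str]]:
    """Yield consecutive chunks of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def wait_for_enter(batch_number: int) -> None:
    """Default pause: lets you switch VPN before the next batch starts."""
    input(f"Batch {batch_number} done. Switch VPN if needed, then press Enter... ")


def run_in_batches(
    keywords: Sequence[str],
    fetch_counts: Callable[[str], Counts],
    size: int = 200,
    delay_seconds: float = 5.0,
    between_batches: Callable[[int], None] = wait_for_enter,
) -> dict[str, Counts]:
    """Fetch counts for every keyword, pausing between batches of `size`."""
    results: dict[str, Counts] = {}
    chunks = list(batches(keywords, size))
    for number, chunk in enumerate(chunks, start=1):
        for keyword in chunk:
            results[keyword] = fetch_counts(keyword)
            time.sleep(delay_seconds)  # space requests out a little
        if number < len(chunks):  # no pause needed after the last batch
            between_batches(number)
    return results
```

Passing `fetch_counts` and `between_batches` in as arguments keeps the batching logic separate from the scraping, which also makes it easy to try out with a fake fetcher.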

When the script finishes, it outputs a CSV in your folder with the search totals alongside each search term.
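The CSV only needs one row per keyword with the three totals. A minimal sketch, assuming a hypothetical header of `keyword,search_results,quoted_results,allintitle_results` (the Gist's own column names may differ):

```python
import csv
import io
from typing import Mapping

HEADER = ["keyword", "search_results", "quoted_results", "allintitle_results"]


def results_to_csv(results: Mapping[str, tuple[int, int, int]]) -> str:
    """Render {keyword: (search, quoted, allintitle)} as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)  # default line terminator is "\r\n"
    writer.writerow(HEADER)
    for keyword, (search, quoted, allintitle) in results.items():
        writer.writerow([keyword, search, quoted, allintitle])
    return buffer.getvalue()
```

To save it, open the file with `newline=""` so the csv module controls the line endings: `with open("results.csv", "w", newline="", encoding="utf-8") as f: f.write(results_to_csv(results))`.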

Once you have this CSV, come back to Google Sheets and import the file into a new tab called Google Results.

Then, back in your main Ahrefs tab, add three new columns:

- Search Results

- Quoted Results

- Allintitle Results

Then create a VLOOKUP in each of these columns that uses the keyword to look up the matching value from the Google Results tab.
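If you prefer to do the lookup outside Sheets, the same exact-match lookup can be sketched in Python. This assumes the Google Results CSV uses the hypothetical header `keyword,search_results,quoted_results,allintitle_results` and that each Ahrefs row is a dict with a `Keyword` column. It keeps the first match and ignores letter case, which is how an exact-match VLOOKUP behaves, and leaves `None` where Sheets would show #N/A.

```python
import csv
import io


def lookup_counts(ahrefs_rows, google_csv_text, key="Keyword"):
    """Add the three Google counts to each Ahrefs row, like an exact-match VLOOKUP."""
    table = {}
    for row in csv.DictReader(io.StringIO(google_csv_text, newline="")):
        # casefold(): VLOOKUP's exact match ignores letter case too;
        # setdefault keeps the first match, as VLOOKUP does
        table.setdefault(row["keyword"].strip().casefold(), row)

    merged = []
    for row in ahrefs_rows:
        match = table.get(row[key].strip().casefold())
        merged.append({
            **row,
            "Search Results": int(match["search_results"]) if match else None,
            "Quoted Results": int(match["quoted_results"]) if match else None,
            "Allintitle Results": int(match["allintitle_results"]) if match else None,
        })
    return merged
```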

ℹ️ NOTE: Some keywords may return 0 across all three searches. Since using this script, I've noticed that some search pages hide the search result total.
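Because a hidden total shows up as 0, a row with zeros in all three columns may be a missing count rather than a genuinely untouched keyword, so it's worth setting those rows aside for a manual check instead of treating them as easy wins. A minimal sketch, with a hypothetical function name and the three column names from the Ahrefs tab:

```python
COUNT_COLUMNS = ("Search Results", "Quoted Results", "Allintitle Results")


def split_hidden_counts(rows):
    """Split rows into (usable, recheck); recheck holds rows where all three counts are 0."""
    usable, recheck = [], []
    for row in rows:
        if all(row.get(col) == 0 for col in COUNT_COLUMNS):
            recheck.append(row)  # possibly a hidden total: look at the SERP by hand
        else:
            usable.append(row)
    return usable, recheck
```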

You now have a complete data sheet where you can look for opportunities.

Here is the completed sheet I created for this thread, with a little conditional formatting to help me spot things more easily:

docs.google.com/spreadsheets/d…

I use these basic criteria when searching for opportunities in this keyword sheet:

✅ Under 1 million search results

✅ Under 1,000 quoted results

✅ Low or no allintitle results

✅ Any search volume

Keywords that tick all of these boxes should bring in easy traffic.
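Those criteria translate directly into a filter. A minimal sketch: the thresholds for total and quoted results come from the list above, but "low or no allintitle results" is vague, so the cut-off of 10 here is my assumption. Rows where all three counts are 0 are skipped because that can mean the total was hidden rather than that there are no results. The `KeywordRow` type is hypothetical.

```python
from dataclasses import dataclass

MAX_SEARCH_RESULTS = 1_000_000  # "Under 1 million search results"
MAX_QUOTED_RESULTS = 1_000      # "Under 1,000 quoted results"
MAX_ALLINTITLE = 10             # "Low or no allintitle results": 10 is an assumed cut-off


@dataclass
class KeywordRow:
    keyword: str
    volume: int
    search_results: int
    quoted_results: int
    allintitle_results: int


def is_opportunity(row: KeywordRow) -> bool:
    """Apply the thread's criteria; volume is deliberately ignored ("any search volume")."""
    if row.search_results == row.quoted_results == row.allintitle_results == 0:
        return False  # all zeros may be a hidden total, so check these by hand
    return (row.search_results < MAX_SEARCH_RESULTS
            and row.quoted_results < MAX_QUOTED_RESULTS
            and row.allintitle_results <= MAX_ALLINTITLE)
```

With the thread's own example, `is_opportunity(KeywordRow("what is borderline ecg sinus rhythm", 100, 259_000, 2, 1))` passes all three checks.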

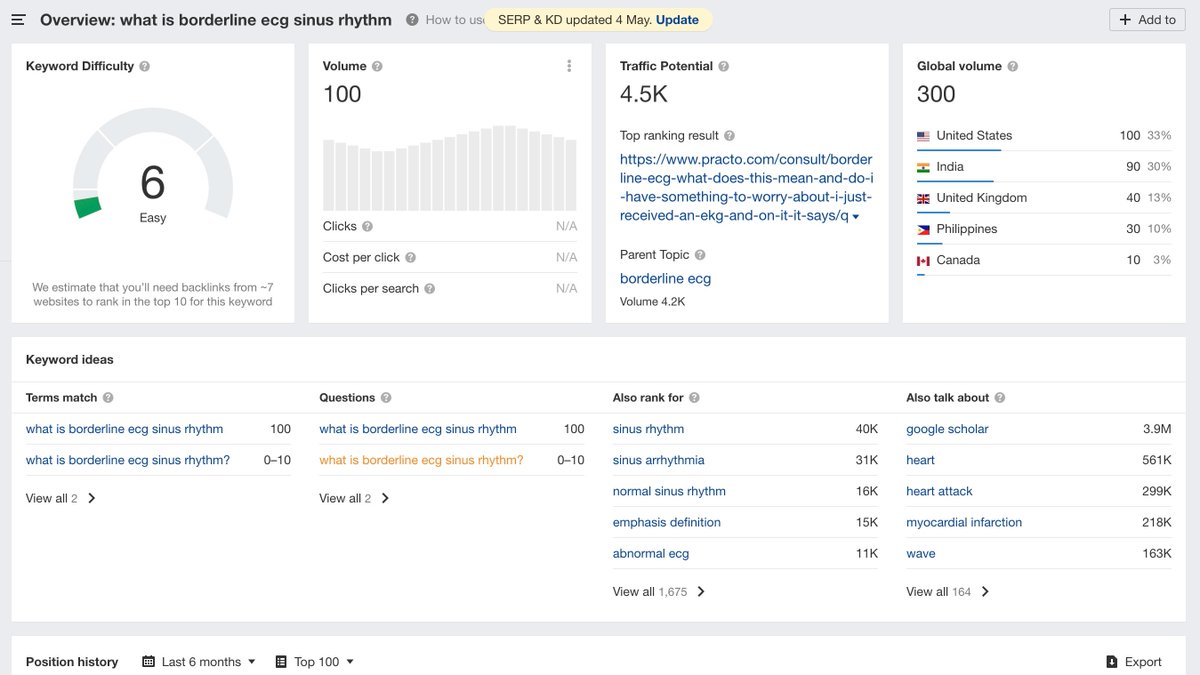

As an example, we have "what is borderline ecg sinus rhythm".

This has 100 searches per month, 259,000 results, 2 quoted results and 1 allintitle result.

From here, I manually look at the SERPs to check the top 3 to 5 positions, and check a mix of quoted and allintitle searches too.

Allintitle shows you who is going after that keyphrase, as every word of it appears in their page title.

You can also research a specific SERP in Ahrefs too.

This quick bit of research helps me determine how much content to create before adding a ticket to my content board in Notion, ready for when I next go on a writing binge 😄

I've also worked out a potential parent page for this, which could be about ECG rhythms.

We can now do some more research around ECG Rhythms to populate that parent page and to discover other potential child pages too.

This is where the process can repeat continuously.

Now all you need to do is write the content and get it published. That's the easy part, right?

🥇 Using this exact method I've had posts reach Page 1 in as little as 2 weeks to a month.

Let me know how you get on too.

If you liked this thread, I'm currently doing these about once a week, so be sure to give me a follow so you don't miss the next one, and maybe give the original thread tweet a RT too. Thanks!

twitter.com/shanejones
