Created with #deforum #stablediffusion from a walking animation I made in #UnrealEngine5 with #realtime clothing and hair on a #daz model. Combined the passes in Premiere. Breakdown thread 1/8 @UnrealEngine @daz3d #aiart @deforum_art #MachineLearning #aiartcommunity #aiartprocess

I started with this animation I rendered in #UE5 @UnrealEngine using a @daz3d model with #realtime clothing simulated by the @triMirror plugin. The walk was from the #daz store and the hair was from the Epic Marketplace. Went for a floaty, underwater vibe with the hair settings. 2/8 #aiartprocess

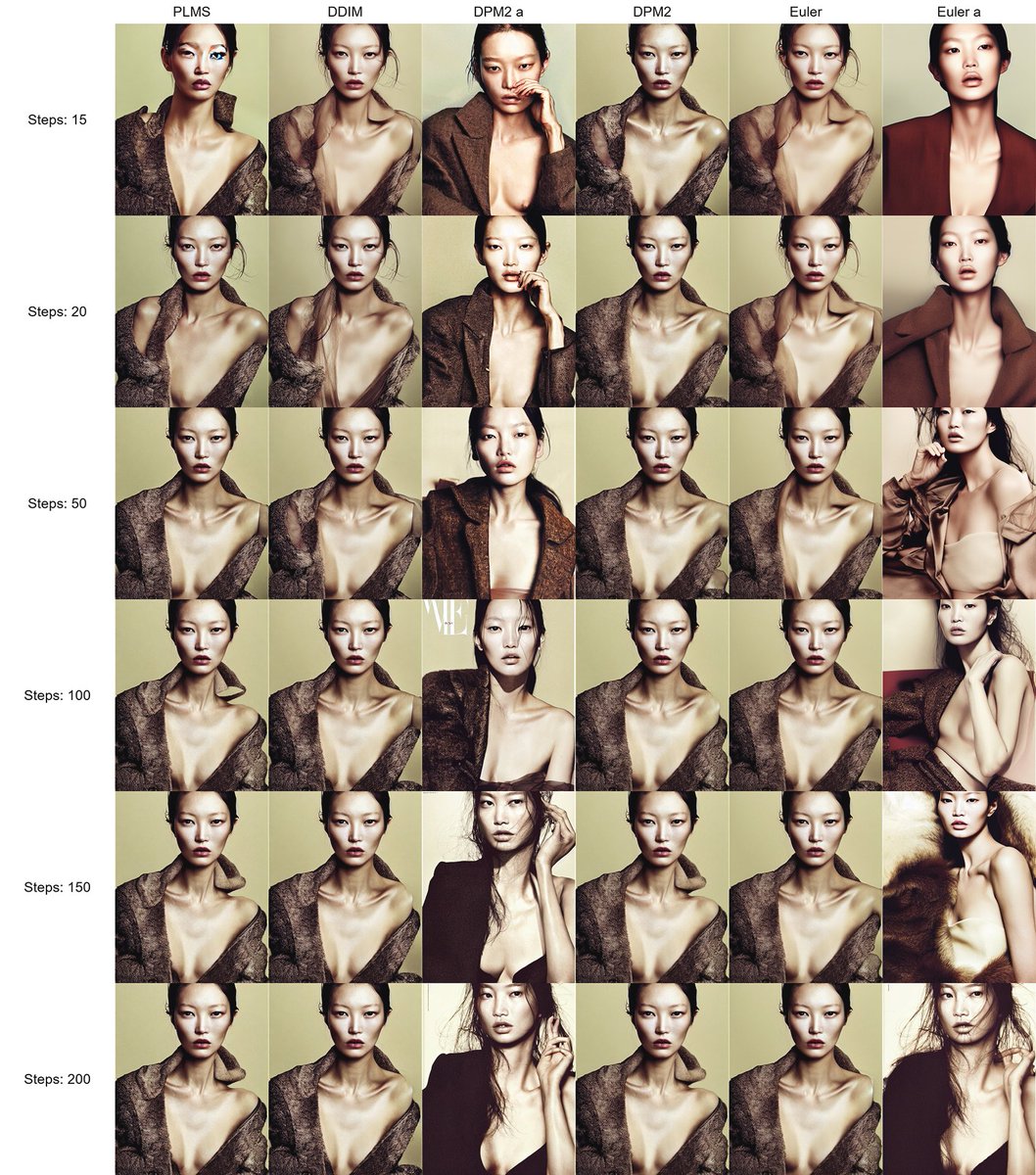

Used the #UE5 video as input into #deforum #stablediffusion. Adjusted the settings to keep the results very close to the input frames. @deforum_art 3/8 #aiartprocess

I felt the #deforum animation lost some of the body and fluidity in the hair, and the hand artifacts were too much, so I decided to layer and blend the Deforum video on top of the original #ue5 render in Premiere Pro. 4/8 #aiartprocess

I also added a blurred layer for a slight glowing effect on the skin.

I liked the mix of fluidity and flickering in the aesthetic. I also decided not to fix the artifacts at the top of the head. Something about the imperfection was interesting to me. 5/8 #aiartprocess

I then upscaled the video to 4K with @topazlabs Video Enhance AI. That gave me room to animate camera moves within a 1080p frame in Premiere Pro. 6/8 #aiartprocess

I generated 3 img2img backgrounds in #stablediffusion. I took CC0 images I found online, edited them in Photoshop to get roughly matching horizon levels, and used them as init images. Then I upscaled them to 4K with @topazlabs. 7/8 #aiartprocess

I animated and layered the background plates together in Premiere. Keyed out the blue background in the walk video and laid it on top. Then made slight adjustments to color/contrast as she walked through the different environments. 8/8 #aiartprocess