Sharing additional thoughts on #StableDiffusion to create #gaming content & #game assets 🧵

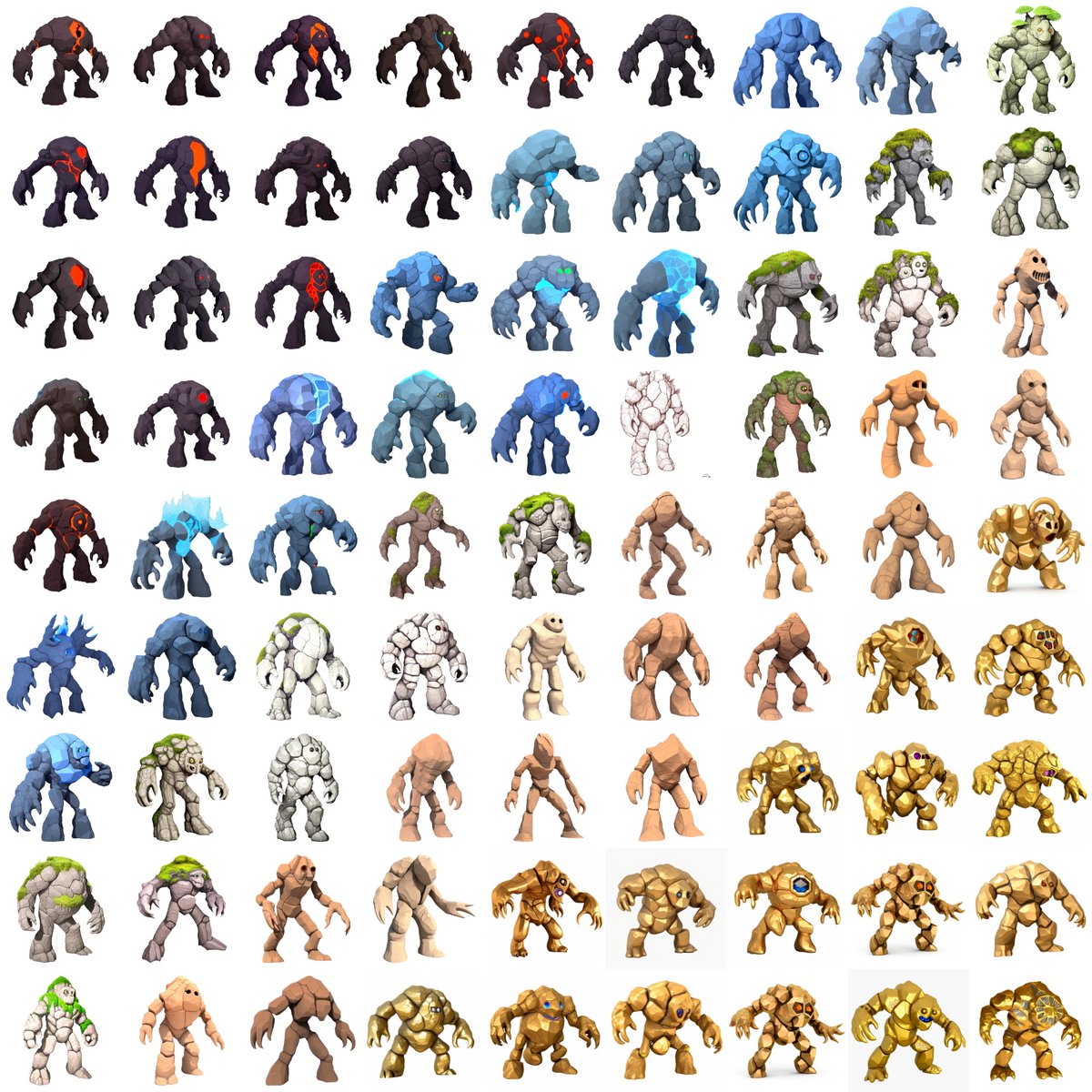

My last exploration was on golems; this one is with "Space Marines-like" heavy infantry.

This was a fun creation with lots of learnings 👨💻. Feel free to like/RT if you find it useful 🚀

My last exploration was on golems; this one is with "Space Marines-like" heavy infantry.

This was a fun creation with lots of learnings 👨💻. Feel free to like/RT if you find it useful 🚀

1/ First (as mentioned before -

Once the model is ready, compare different generic prompts (e.g. "low-poly," "3D rendering“, "ultra-detailed," "pixel art," etc.)

https://twitter.com/emmanuel_2m/status/1588854017140330497) > curate a training dataset (such as pictures of figurines) to feed a #Dreambooth finetune.

Once the model is ready, compare different generic prompts (e.g. "low-poly," "3D rendering“, "ultra-detailed," "pixel art," etc.)

2/ Once a prompt looks good, keep the modifiers (in this case: "3D rendering, highly detailed, trending on Artstation").

And start iterating around variations, such as colors (or pose). Don't over-engineer it, to keep the consistency. You should get this:

And start iterating around variations, such as colors (or pose). Don't over-engineer it, to keep the consistency. You should get this:

4/ Now you can get creative and add more colors (I just prompted "rainbow space marine, colorful" in the set below)

5/ It's not just about colors. The look or style of the models can be altered with other modifiers.

Such as "a dark Halloween zombie space marine". Now all the models have been zombified 🧟♂️💀🧟♀️. They could also melt or glow in the dark.

Such as "a dark Halloween zombie space marine". Now all the models have been zombified 🧟♂️💀🧟♀️. They could also melt or glow in the dark.

6/ I randomly found that one of the soldiers looked like a baby, so I prompted "a cute baby space marine" and made everyone look younger, in seconds:

7/ Because the shape/silhouette of the "baby space marine" was interesting, I kept it and used #img2img to generate pixel art variations, in different colors:

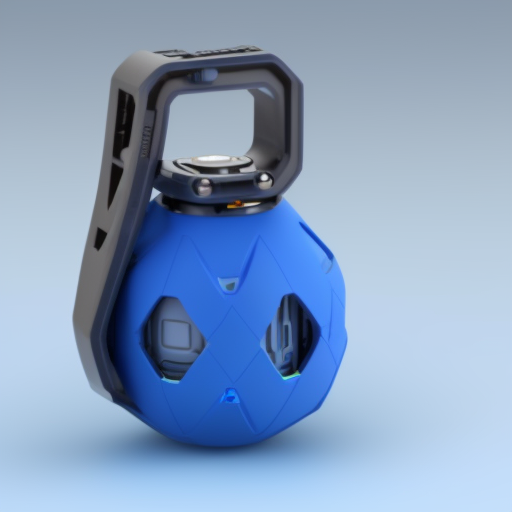

8/ img2img is very powerful when combined with Dreambooth. I went back to my previous tweet (link -

https://twitter.com/emmanuel_2m/status/1588854015819132928), and selected a "cyclopean golem", to resembling space soldiers, like this

9/ Same story below, but with a different (white) golem.

Also, I added "no gun" as a negative prompt. None of the soldiers has the machine gun they held before.

Also, I added "no gun" as a negative prompt. None of the soldiers has the machine gun they held before.

10/ As a bonus, you can have more fun with your finetune and generate other storytelling visuals.

For example, "urban warfare background" or "a group of space marines dancing in a night club"

For example, "urban warfare background" or "a group of space marines dancing in a night club"

11/ Or even find the image of a tank and then run #img2img to generate tank-like space robots/creatures 🪖

12/ I'm hoping this somehow demonstrates the power of having your own "finetune(s)"

Training lets you iterate around a specific concept, style, or object. You get consistent results, faster, and possibly using your training data. No more endless random prompting. What else? :)

Training lets you iterate around a specific concept, style, or object. You get consistent results, faster, and possibly using your training data. No more endless random prompting. What else? :)

end/ I've had some great discussions about it with the gaming community (artists or studios) over the past few days. If you have questions or would like to have a chat, please comment or DM me; happy to connect with innovative game creators.

#AI #GenAI #Gaming #StableDiffusion

#AI #GenAI #Gaming #StableDiffusion

(11/06 update) - I've done a similar pass on a dwarf character set. Check it out.

https://twitter.com/emmanuel_2m/status/1589418134880292865

• • •

Missing some Tweet in this thread? You can try to

force a refresh