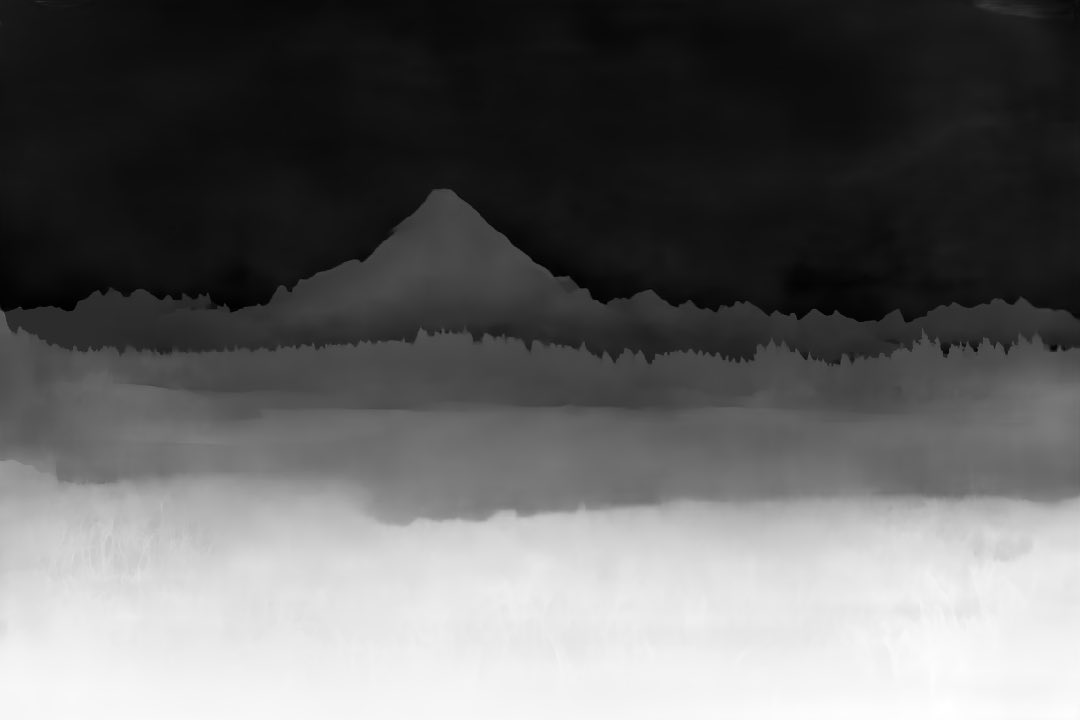

Used the #stablediffusion2 #depth2img model to render a more photoreal layer on top of a walking animation I made in #UnrealEngine5 with #realtime clothing and hair on a #daz model. Breakdown thread 1/6 @UnrealEngine @daz3d #aiart #MachineLearning #aiartcommunity #aiartprocess #aiia

Reused this animation from a post I made a few months ago. Rendered in #UE5 @UnrealEngine using a @daz3d model with #realtime clothing from the @triMirror plugin. The walk was from the #daz store. The hair was from the Epic Marketplace. 2/6 #aiartprocess

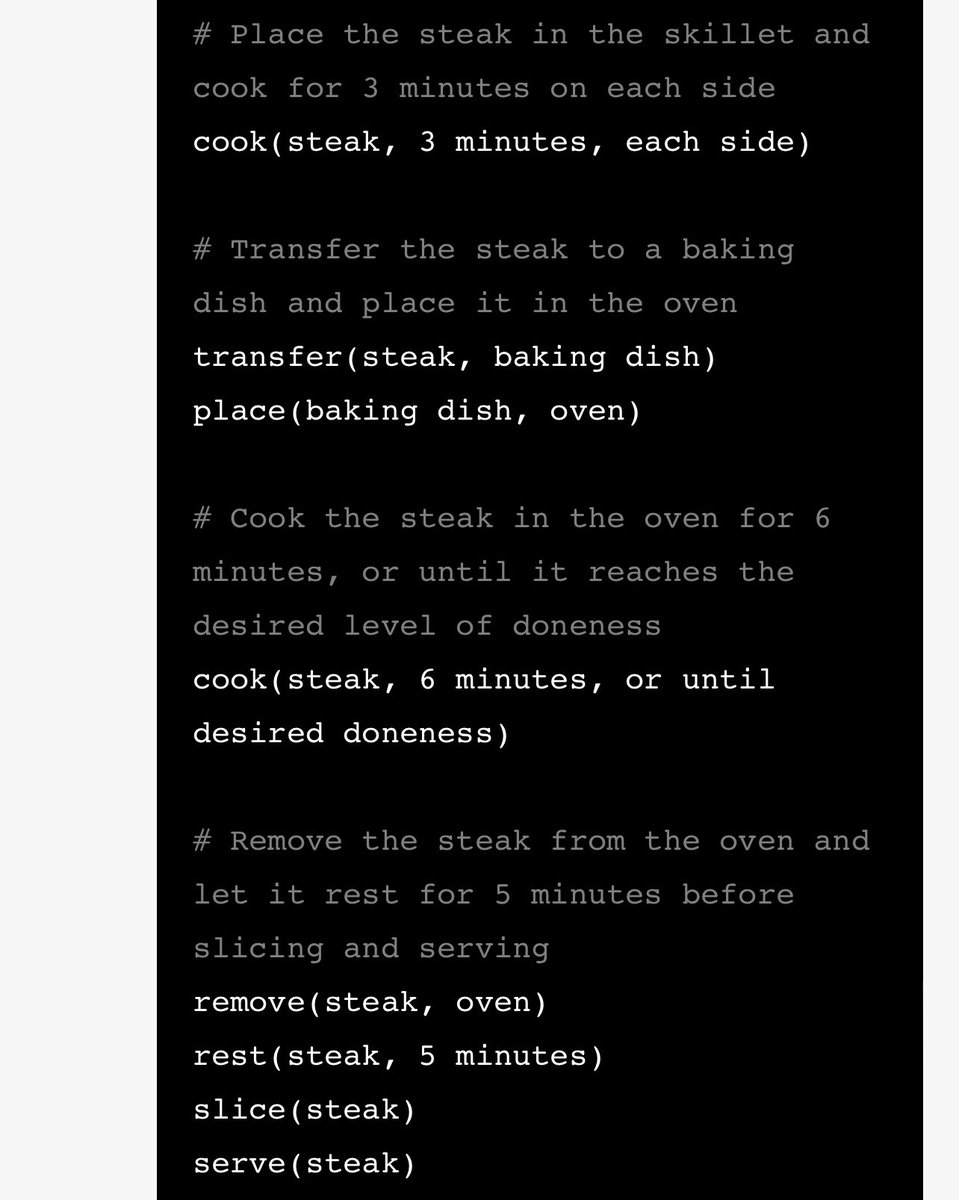

Used SD2’s #depth2img model running locally in Automatic1111. Thanks to @TomLikesRobots for the help getting it working, and for showing how the model retains more consistency than normal img2img. I basically ran an img2img batch process on the image sequence. 3/6 #aiartprocess
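For anyone who wants to script the batch step instead of using the Automatic1111 UI, here is a minimal sketch of the same idea. It is not what I ran: it assumes the Hugging Face `diffusers` library with its `StableDiffusionDepth2ImgPipeline`, a CUDA GPU, and zero-padded frame filenames so that sorting by name gives playback order. The prompt, the folder names, and the choice to reuse one fixed seed for every frame are hypothetical choices for illustration.

```python
from pathlib import Path
from typing import Callable


def process_sequence(
    in_dir: str,
    out_dir: str,
    stylize: Callable[[Path, Path], None],
    pattern: str = "*.png",
) -> list[Path]:
    """Run `stylize(src, dst)` on every frame, in filename order.

    Output frames keep their input filenames so the sequence can be
    re-imported into an editor unchanged.
    """
    src = Path(in_dir)
    dst = Path(out_dir)
    dst.mkdir(parents=True, exist_ok=True)
    written = []
    # Assumes zero-padded names (frame_0001.png, ...) so sorted() == frame order.
    for frame in sorted(src.glob(pattern)):
        target = dst / frame.name
        stylize(frame, target)
        written.append(target)
    return written


def make_depth2img_stylizer(prompt: str, strength: float = 0.25, seed: int = 0):
    """Build a per-frame stylizer backed by diffusers (an assumed backend)."""
    # Imported lazily so process_sequence stays usable without a GPU stack.
    import torch
    from diffusers import StableDiffusionDepth2ImgPipeline
    from PIL import Image

    pipe = StableDiffusionDepth2ImgPipeline.from_pretrained(
        "stabilityai/stable-diffusion-2-depth", torch_dtype=torch.float16
    ).to("cuda")

    def stylize(src: Path, dst: Path) -> None:
        # Re-seeding per frame is an illustrative choice, not from the thread.
        generator = torch.Generator(device="cuda").manual_seed(seed)
        image = Image.open(src).convert("RGB")
        result = pipe(
            prompt=prompt, image=image, strength=strength, generator=generator
        ).images[0]
        result.save(dst)

    return stylize
```

Usage would look something like `process_sequence("frames_in", "frames_out", make_depth2img_stylizer("photo of a woman walking"))`, with both folder names and the prompt being placeholders.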

With the #depth2img model I’m able to use a low denoising strength of 0.25 but still get a noticeable shift in aesthetics toward photorealism. There’s still flickering in the anatomy but it’s much better than before. 4/6 #aiartprocess
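A rough way to see why a low denoising strength changes so little of each frame: in img2img-style pipelines the strength typically sets how much of the sampling schedule is actually run on the noised input. The sketch below mirrors the rule used by the `diffusers` img2img-style pipelines, which is an assumption here. Automatic1111 can behave differently depending on its settings, and the step counts are hypothetical since the thread does not state one.

```python
def effective_steps(num_inference_steps: int, strength: float) -> int:
    """Number of denoising steps actually run for a given strength.

    Mirrors the diffusers img2img convention (assumed, not the thread's
    exact setup): only the last `strength` fraction of the schedule is used.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    return min(int(num_inference_steps * strength), num_inference_steps)


# Hypothetical step counts, using the 0.25 strength from the thread.
print(effective_steps(20, 0.25))  # 5
print(effective_steps(50, 0.25))  # 12
```

So at 0.25 only about a quarter of the schedule touches the frame, which is why most of the original render's structure survives from frame to frame.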

Finally, I added some film grain in Premiere Pro and simulated the retro glow of a Black Pro-Mist filter. I thought it would be interesting to see how it might add to the realism. 5/6 #aiartprocess

While you can still see instability in the shoulders and collarbones, I’m really excited to see that we’re not that far from creating photorealistic video on top of a 3D scaffold rendered in #UnrealEngine5. We just need more consistency from frame to frame. 6/6 #aiartprocess
