When George Hayward was a Facebook data scientist, his bosses ordered him to run a #NegativeTest, updating Messenger to deliberately drain users' batteries to determine how power-hungry various options were. Hayward refused. FB fired him. He sued:

nypost.com/2023/01/28/fac… 1/

If you'd like an essay-formatted version of this thread to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

pluralistic.net/2023/02/05/bat… 2/

Hayward balked because he knew that among the 1.3 billion people who use Messenger, some would be placed in harm's way if Facebook deliberately drained their batteries. 3/

They could be physically stranded, unable to communicate with loved ones experiencing emergencies, or locked out of their identification, payment methods, and all the other functions served by mobile phones. 4/

As Hayward told Kathianne Boniello at The @NYPost, "Any data scientist worth his or her salt will know, 'Don’t hurt people...' I refused to do this test. It turns out if you tell your boss, 'No, that’s illegal,' it doesn’t go over very well." 5/

Negative testing is standard practice at #Facebook, and Hayward was given a document called "How to run thoughtful negative tests," about which he said, "I have never seen a more horrible document in my career." 6/

We don't know much else, because Hayward's employment contract included a non-negotiable #BindingArbitration waiver, which means that he surrendered his right to sue his former employer in court. 7/

Instead, his claim will be heard by an #arbitrator - that is, a fake corporate judge who is paid by Facebook to decide if Facebook was wrong. 8/

Even if the arbitrator finds in Hayward's favor - something that arbitrators do far less frequently than real judges do - the judgment, and all the information that led up to it, will be confidential, meaning we won't get to find out more:

pluralistic.net/2022/06/12/hot… 9/

One significant element of this story is that the malicious code was inserted into Facebook's app. Apps, we're told, are more secure than real software. 10/

Under the #CuratedComputing model, you forfeit your right to decide what programs run on your devices, and the manufacturer keeps you safe. But in practice, apps are just software, only worse:

pluralistic.net/2022/06/23/pee… 11/

Apps are part of what #BruceSchneier calls #FeudalSecurity. In this model, we defend ourselves against the bandits who roam the internet by moving into a warlord's fortress. 12/

So long as we do what the warlord tells us to do, his hired mercenaries will keep us safe from the bandits:

locusmag.com/2021/01/cory-d… 13/

But in practice, the mercenaries aren't all that good at their jobs. They let all kinds of #badware into the fortress, like the #PigButchering apps that snuck into the two major mobile app stores:

arstechnica.com/information-te… 14/

It's not merely that the app stores' masters make mistakes - it's that when they screw up, we have no recourse. You can't switch to an app store that pays closer attention, or that lets you install low-level software that monitors and overrides the apps you download. 15/

Indeed, #Apple's Developer Agreement bans apps that violate other services' terms of service, and they've blocked apps like #OGApp that block Facebook's surveillance and other #enshittification measures. 16/

Apple sided with Facebook against Apple device owners who asserted the right to control how they interact with the company:

pluralistic.net/2022/12/10/e2e… 17/

When a company insists that you must be rendered helpless as a condition of protecting you, it sets itself up for ghastly failures. 18/

Apple's decision to prevent every one of its Chinese users from overriding its decisions led inevitably and foreseeably to the Chinese government ordering Apple to spy on those users:

pluralistic.net/2022/11/11/for… 19/

Apple isn't shy about thwarting Facebook's business plans, but Apple uses that power selectively - they blocked Facebook from spying on iPhone users (yay!). 20/

But then Apple covertly spied on its customers in exactly the same way as Facebook, for exactly the same purpose, and lied about it:

pluralistic.net/2022/11/14/lux… 21/

The ultimate, irresolvable problem of Feudal Security is that the warlord's mercenaries will protect you against anyone - except the warlord who pays them. 22/

When Apple or Google or Facebook decides to attack its users, the company's security experts will bend their efforts to preventing those users from defending themselves, turning the fortress into a prison:

pluralistic.net/2022/10/20/ben… 23/

Feudal security leaves us at the mercy of giant corporations - fallible and just as vulnerable to temptation as any of us. Both binding arbitration and feudal security assume that the benevolent dictator will always be benevolent, and never make a mistake. 24/

Time and again, these assumptions are proven to be nonsense. 25/

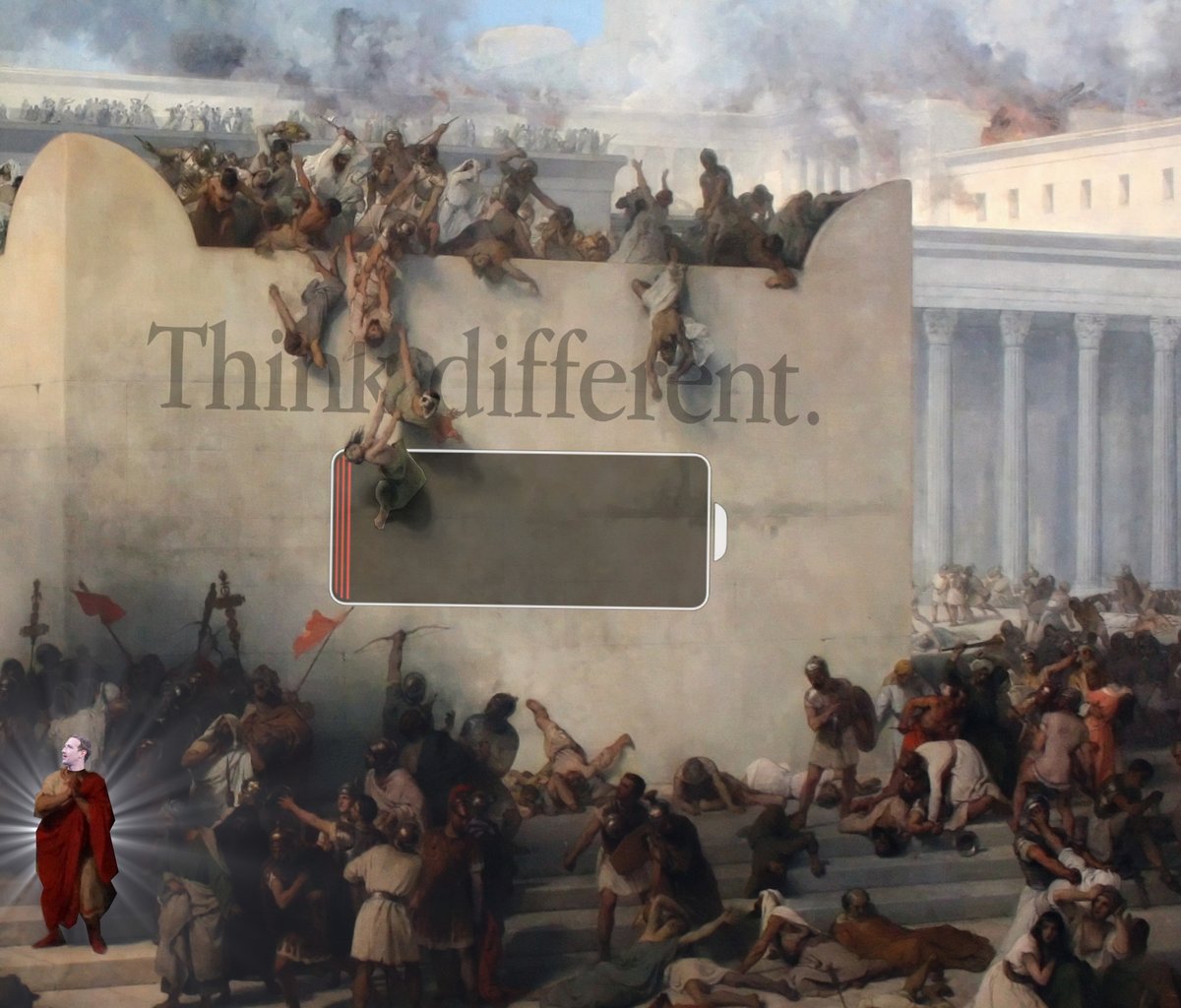

Image:

Anthony Quintano (modified)

commons.wikimedia.org/wiki/File:Mark…

CC BY 2.0:

creativecommons.org/licenses/by/2.… 26/