A recent Valent investigation found that large sums of money have been spent on an online effort to undermine support for #ULEZ before the #Uxbridge by-election, which observers say @UKLabour lost because of the clean air policy championed by @mayorofLondon #sadiqkhan. Check it out 👇 1/27

@UKLabour @MayorofLondon The study set out to establish whether the ULEZ policy was being targeted by online manipulation (SPOILER: It is). We did not seek to identify the actors behind the manipulation, or to definitively establish its impact on voting intention. That would require a deeper dive 2/27

@UKLabour @MayorofLondon The investigation is part of our regular effort to examine the role of online technical manipulation in key areas of public debate. The study was not funded or commissioned by any other party. 3/27

@UKLabour @MayorofLondon We analysed 13k tweets and 8k retweets from 8,583 Twitter accounts. The findings outlined in this thread are likely just the tip of the iceberg. More extensive activities are likely taking place on bigger platforms, e.g. Facebook, Instagram, Telegram, TikTok 4/27

@UKLabour @MayorofLondon The methodology we observed uses a multilevel digital architecture, where different types of accounts undertake specific roles in a coordinated way. Instead of crude hashtag spamming, it aims to manipulate the way high-end influencer accounts are prioritised by Twitter 5/27

@UKLabour @MayorofLondon This results in 1,000s of accounts acting in a way that tricks Twitter's algorithms into placing content from anti-ULEZ influencers onto users’ timelines. Additionally, the lowest-quality accounts are the most at risk of detection, but they are also the easiest to replace 6/27

@UKLabour @MayorofLondon To be clear, there is significant genuine anti-ULEZ sentiment and influencers leading it may not even be aware that their content is being amplified through algorithmic manipulation 7/27

@UKLabour @MayorofLondon Before ULEZ was first launched in 2019 in central London, registration of accounts mentioning ULEZ aligned with Twitter-wide registration trends. After 2019, registration of ULEZ-related accounts surged far beyond the general trend, suggesting an orchestrated campaign 8/27

@UKLabour @MayorofLondon In Oct 2021, the mayor announced #ULEZExpansion across #London. From November of that year, ULEZ account generation increased significantly. Of the total 8,583 Twitter accounts that mention ULEZ, 4,114 (~48%) were created since Nov 2022 9/27

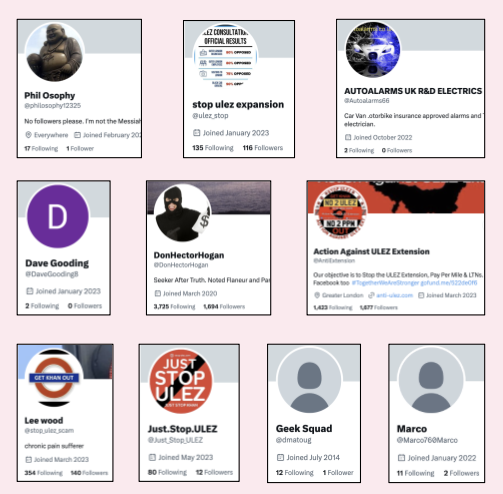

@UKLabour @MayorofLondon Of those 4,114 accounts, we stripped out those with the largest follower numbers (10% of the total) since those appear to be authentic anti-ULEZ talking heads. We call these accounts “seeders” since their main role seems to be to introduce talking points 10/27

@UKLabour @MayorofLondon The seeder accounts seem largely authentic, with characteristics including clear attribution to a real person or group, long-standing account registration, unique posts, low retweeting ratio etc 11/27

@UKLabour @MayorofLondon After removing genuine seeder accounts, we were left with 3,702. Amongst these, we found clear evidence of inauthenticity (e.g. newly registered, use of fake names, high retweet and duplication ratio, focused solely on attacking ULEZ). We call these accounts “spreaders” 12/27
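The seeder/spreader split described in the last few tweets can be sketched in code. This is a minimal illustration, not Valent's actual method: the `Account` fields, the `split_seeders` and `looks_inauthentic` functions, the rounding rule, and the thresholds are all hypothetical, chosen only to mirror signals the thread names (follower rank, recent registration, high retweet ratio).

```python
from dataclasses import dataclass
from datetime import date
import math


@dataclass
class Account:
    handle: str
    created: date
    followers: int
    tweets: int      # original posts
    retweets: int    # retweets of other accounts


def split_seeders(accounts, top_fraction=0.10):
    """Treat the top `top_fraction` of accounts by follower count as seeders."""
    ranked = sorted(accounts, key=lambda a: a.followers, reverse=True)
    # Rounding up is an assumption; the thread does not say how the cut was made.
    n_seeders = math.ceil(len(ranked) * top_fraction)
    return ranked[:n_seeders], ranked[n_seeders:]


def looks_inauthentic(account, cutoff=date(2022, 11, 1), max_original_ratio=0.2):
    """Hypothetical heuristic: recently registered and mostly retweeting."""
    total = account.tweets + account.retweets
    original_ratio = account.tweets / total if total else 0.0
    return account.created >= cutoff and original_ratio <= max_original_ratio
```

With 4,114 accounts and a 10% cut, rounding up gives 412 seeders and leaves 3,702 accounts, which matches the figures in the thread. Whether the study rounded this way is not stated.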

@UKLabour @MayorofLondon Spreader accounts are relatively low quality. They mostly retweet and/or like content posted by seeders, and rarely tweet original content 13/27

@UKLabour @MayorofLondon We suspect that these spreader accounts are being controlled (or semi-controlled) by automated software that can direct multiple accounts simultaneously. A number of entities claim to have illicit software that can do this 14/27

@UKLabour @MayorofLondon A @Guardian investigation from February revealed an Israeli company marketing a software tool called AIMS that can control up to 30,000 accounts across Twitter, LinkedIn, Facebook, Telegram, Gmail and Instagram 15/27 https://t.co/IZhPQcppHu theguardian.com/world/2023/feb…

@UKLabour @MayorofLondon @guardian The Guardian report says the Israeli outfit quoted Cambridge Analytica $160,000 in 2015, but was turned down. The company is by no means unique. Dozens of others based all over the world advertise similar tools, using euphemisms such as “multi-accounting” 16/27

@UKLabour @MayorofLondon @guardian The 3,702 ULEZ spreader accounts we examined have 380 followers each on average. The follower accounts are clearly inauthentic, with large following-to-follower ratios, stolen/fake profile pics and recent creation dates. We call these accounts “validators” 17/27

@UKLabour @MayorofLondon @guardian Disreputable online vendors generate and maintain validator accounts so that they can offer potential customers the ability to quickly add more “followers” to their accounts. Twitter’s algorithms then treat the accounts with more followers as more important 18/27

@UKLabour @MayorofLondon @guardian The number of “followers” purchased doesn't necessarily equate to an equal number of unique accounts because one account can follow multiple other accounts 19/27
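The distinction between purchased follows and unique follower accounts can be shown with a toy example. The handles and follow pairs below are invented purely for illustration and are not data from the study.

```python
# Hypothetical follow relationships: (validator, account it follows).
follows = [
    ("validator_a", "spreader_1"),
    ("validator_a", "spreader_2"),
    ("validator_b", "spreader_1"),
    ("validator_b", "spreader_2"),
    ("validator_b", "spreader_3"),
]

# Each follow relationship counts as one purchased "follower".
purchased_followers = len(follows)

# The number of distinct accounts doing the following can be much smaller.
unique_validators = len({validator for validator, _ in follows})

print(purchased_followers, unique_validators)  # prints: 5 2
```

Here five "followers" are delivered by only two accounts, which is why a follower count is an upper bound on the number of validator accounts rather than a measure of it.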

@UKLabour @MayorofLondon @guardian Our analysis shows it would cost roughly £44,000 to buy an average of 380 followers for each of the 3,702 spreader accounts in this study. This figure is based on a costing of £31 (converted from $40) for 1,000 followers per account (bought in bulk) 20/27
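The arithmetic behind the £44,000 estimate can be reproduced from the figures in the thread. The sketch below assumes the price scales linearly with the number of followers bought, which the thread implies but does not spell out; the variable names are ours.

```python
ACCOUNTS = 3_702            # accounts with purchased followers (from the thread)
AVG_FOLLOWERS = 380         # average followers per account (from the thread)
PRICE_PER_1000_GBP = 31     # £31 per 1,000 followers (from the thread)

total_followers = ACCOUNTS * AVG_FOLLOWERS
cost_gbp = total_followers / 1000 * PRICE_PER_1000_GBP

print(f"{total_followers:,} followers, about £{cost_gbp:,.0f}")
```

That works out to 1,406,760 follows and a little under £44,000, consistent with the rounded figure quoted above.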

@UKLabour @MayorofLondon @guardian Although seeders may express genuine anti-ULEZ sentiment, their online voices are being amplified through Twitter manipulation. Without this amplification, their talking points would gain less traction and, therefore, attention 21/27

@UKLabour @MayorofLondon @guardian To reiterate: the seeders may not even be aware that their content is being falsely amplified. Amongst those we see benefitting from inauthentic amplification are some of the biggest anti-ULEZ voices such as @ronin19217435, @sophielouisecc and @GarethBaconMP 22/27

@UKLabour @MayorofLondon @guardian @ronin19217435 @sophielouisecc @GarethBaconMP The cost of the activity we observed during this study would include the use of AIMS-style software to direct the spreader accounts, and the purchase of false followers provided by shadowy online providers. Our rough calculation of these costs is as follows: 23/27

@UKLabour @MayorofLondon @guardian @ronin19217435 @sophielouisecc @GarethBaconMP $160,000 (£124,000) for AIMS-style software (as per the 2015 prices quoted by the Guardian) and £44,000 for the false followers attached to the 3,702 spreaders. This brings the total to £168,000. This is a highly indicative figure; the actual figure will include other costs 24/27
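The total can be checked directly from the two components in the thread. The exchange rate below is not stated anywhere in the thread; it is simply the rate implied by the $160,000 to £124,000 conversion, and the variable names are ours.

```python
SOFTWARE_USD = 160_000    # 2015 quote reported by the Guardian (from the thread)
SOFTWARE_GBP = 124_000    # the thread's sterling conversion of that quote
FOLLOWERS_GBP = 44_000    # rounded cost of purchased followers (from the thread)

implied_rate = SOFTWARE_GBP / SOFTWARE_USD   # pounds per dollar, derived not stated
total_gbp = SOFTWARE_GBP + FOLLOWERS_GBP

print(f"implied rate: {implied_rate:.3f} GBP/USD, total: £{total_gbp:,}")
# prints: implied rate: 0.775 GBP/USD, total: £168,000
```

The implied rate of 0.775 is the same one behind the £31 (from $40) follower price, so the two conversions in the thread are consistent with each other.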

@UKLabour @MayorofLondon @guardian @ronin19217435 @sophielouisecc @GarethBaconMP Online manipulation techniques are evolving rapidly. The anti-ULEZ effort we investigated here is amongst the most advanced we have seen in nearly four years of examining such activity in Africa, the Middle East and Europe. 25/27

@UKLabour @MayorofLondon @guardian @ronin19217435 @sophielouisecc @GarethBaconMP Clearly the resource dedicated to conducting manipulation is significant and often far outstrips the targets’ spending on their communications efforts. At the same time, AI is making these sorts of operations cheaper 26/27

@UKLabour @MayorofLondon @guardian @ronin19217435 @sophielouisecc @GarethBaconMP In terms of ULEZ, further investigation is required to identify who is behind this manipulation effort, the cost that they incurred, and to investigate the potential manipulation on the larger platforms (e.g. Facebook, TikTok, Telegram). 27/27
