Medical AI safety.

Director of Research @ nRAH Medical Imaging. Senior Research Fellow, Australian Institute for Machine Learning.

She/her 🏳️‍⚧️🏳️‍🌈

https://twitter.com/DrLaurenOR/status/1533556511913689088

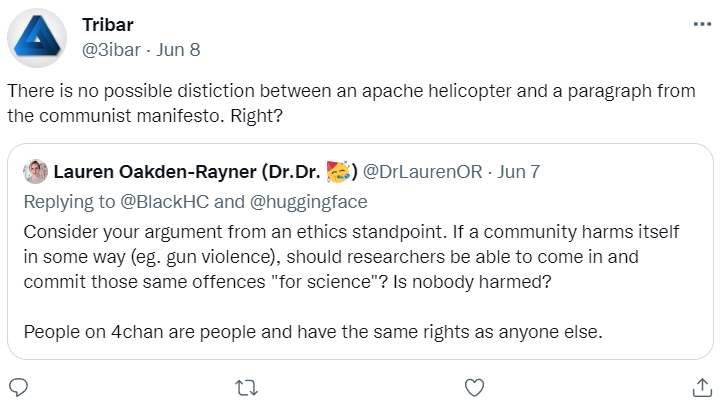

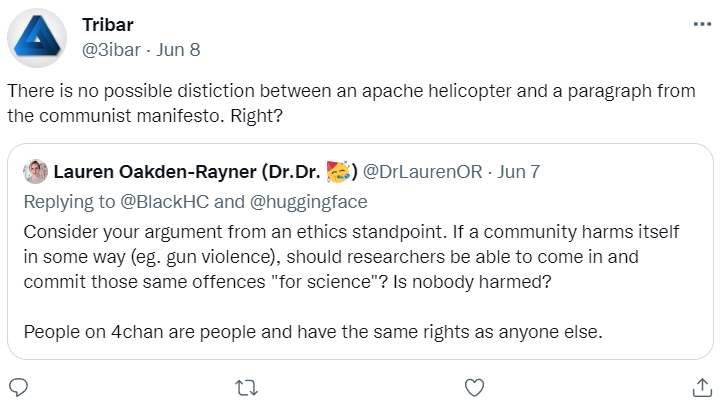

Here's some more. If anyone doesn't understand why all these statements are explicitly transphobic ... well, it is because you don't face it. These are all extremely hurtful.

https://twitter.com/ykilcher/status/1532751551869108227

@huggingface, as the model custodian (an interesting new concept), should implement an #ethics review process to determine the harm that hosted models may cause, and gate harmful models behind approval/usage agreements.

#Medical #AI has a problem. Preclinical testing, including regulatory testing, does not accurately predict the risks that AI models pose once they are deployed in clinics.

https://twitter.com/VickersBiostats/status/1295489610139738112

First, we need to ask: is there a difference?

https://twitter.com/laure_wynants/status/1288131085797294080

The argument against using a threshold to determine an action, at a basic level, seems to be:

https://twitter.com/pranavrajpurkar/status/1234772132514553856

I personally suspect the biggest problem is automation bias, which is where the human over-relies on the model output.

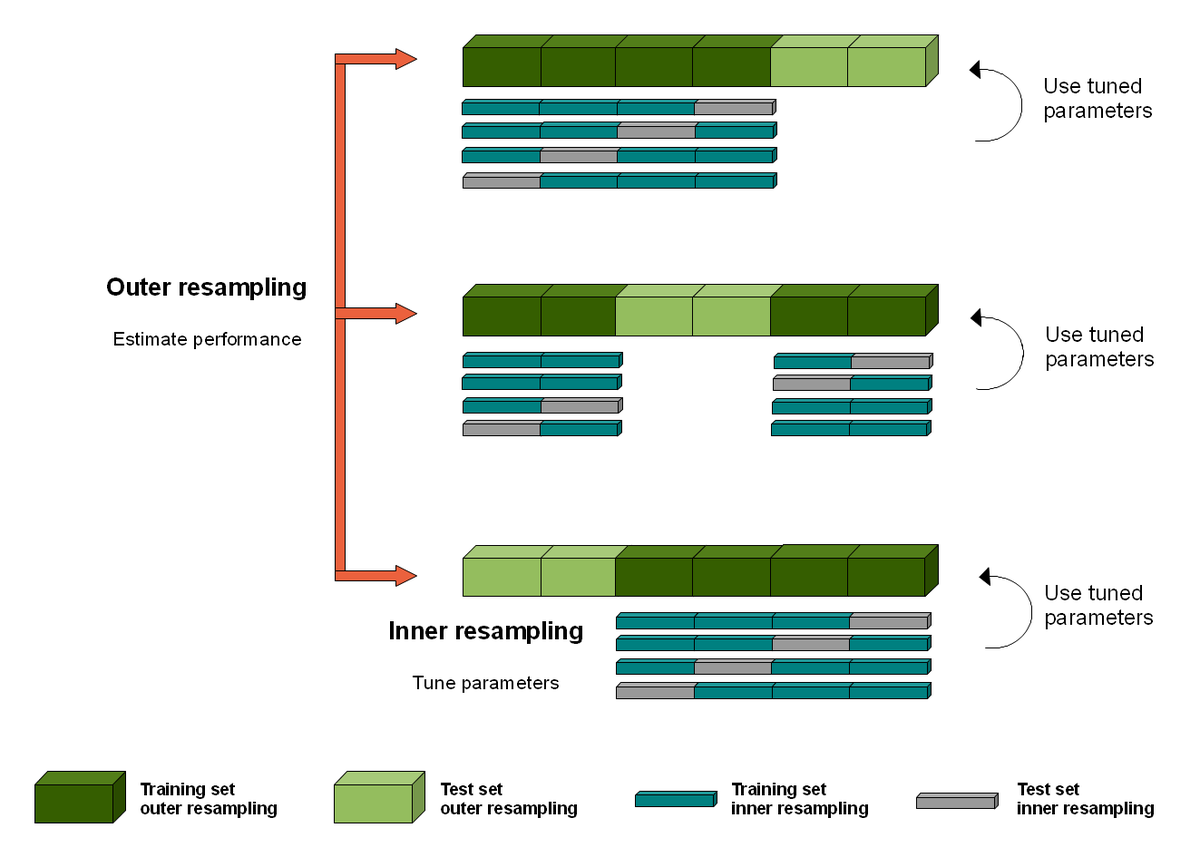

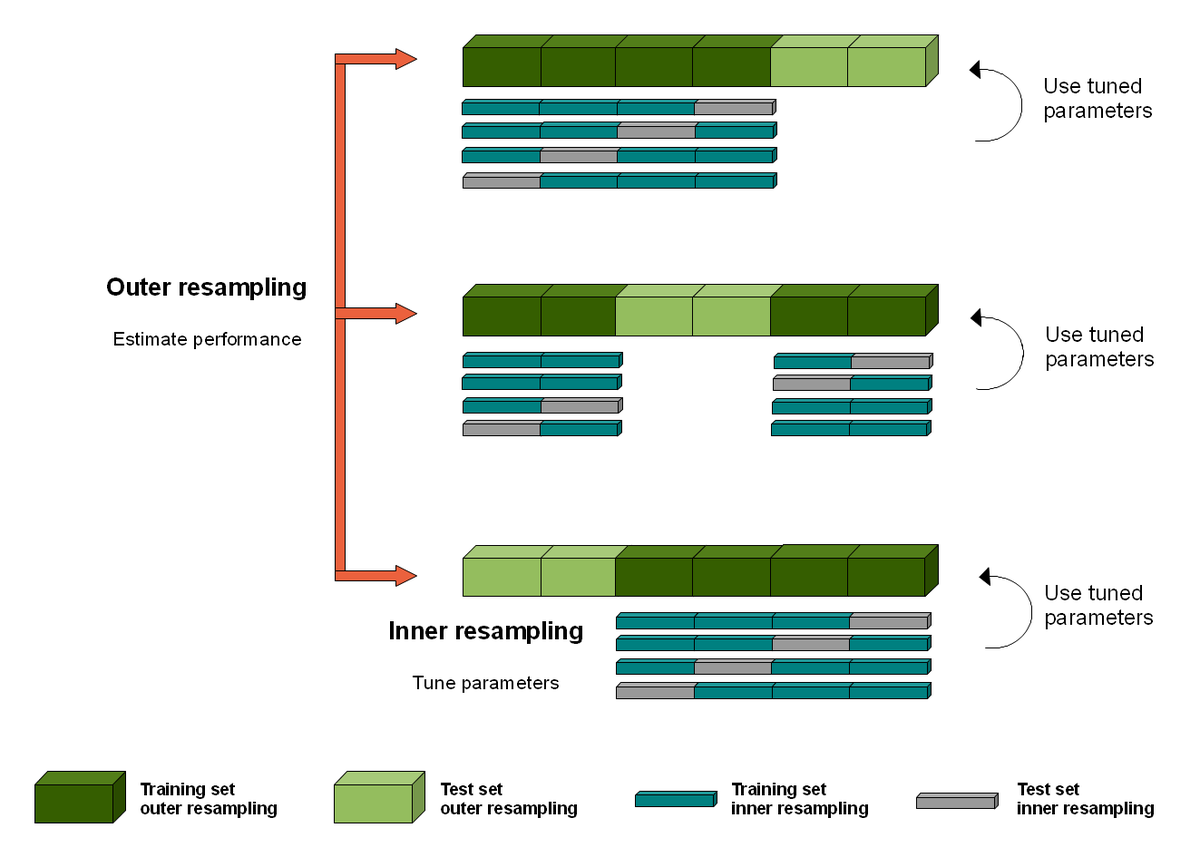

@weina_jin The weird thing about cross-validation (CV) in AI is that you don't actually end up with a single model. You end up with k different models and sets of hyperparameters.
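To make the point concrete, here is a minimal sketch of k-fold cross-validation, assuming a toy one-feature linear regression on synthetic data (the helper names `k_fold_indices`, `fit_line` and `cross_validate` are hypothetical, written for illustration only). Each fold trains its own model, so the procedure yields k different parameter sets, and the reported CV score is an average over those k models rather than the error of any single one.

```python
import random
from statistics import mean


def k_fold_indices(n, k, seed=0):
    """Shuffle indices 0..n-1 and split them into k roughly equal folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a*x + b; returns (a, b)."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    a = sxy / sxx
    return a, my - a * mx


def cross_validate(xs, ys, k=5, seed=0):
    """Train one model per fold; return the k fitted models and their held-out MSEs."""
    folds = k_fold_indices(len(xs), k, seed)
    models, errors = [], []
    for held_out in folds:
        held = set(held_out)
        train = [i for i in range(len(xs)) if i not in held]
        a, b = fit_line([xs[i] for i in train], [ys[i] for i in train])
        models.append((a, b))  # a distinct model for every fold
        errors.append(mean((ys[i] - (a * xs[i] + b)) ** 2 for i in held_out))
    return models, errors


# Synthetic data (an assumption for illustration): y = 2x + 1 plus Gaussian noise.
rng = random.Random(42)
xs = [i / 10 for i in range(50)]
ys = [2.0 * x + 1.0 + rng.gauss(0, 0.5) for x in xs]

models, errors = cross_validate(xs, ys, k=5)
for fold, ((a, b), err) in enumerate(zip(models, errors)):
    print(f"fold {fold}: slope={a:.3f} intercept={b:.3f} held-out MSE={err:.3f}")
print("mean CV MSE:", round(mean(errors), 3))
```

The five printed slope/intercept pairs differ slightly from each other, which is exactly the issue raised above: the mean CV error describes the procedure, not one deployable model. A common (but not universal) practice is to refit a final model on all the data after choosing settings via CV, and that final model was itself never evaluated by the CV loop.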

https://twitter.com/ten_photos/status/1170732067887484928

2/ The deep learning models are tiny (4 conv layers), justified on the grounds that this works for MNIST. Everything works for MNIST! Linear regression works for MNIST!

https://twitter.com/DrLukeOR/status/1006804796785950720

The paper claims "Most dermatologists were outperformed by the CNN", a bold statement. The relevant part of the paper is pictured.