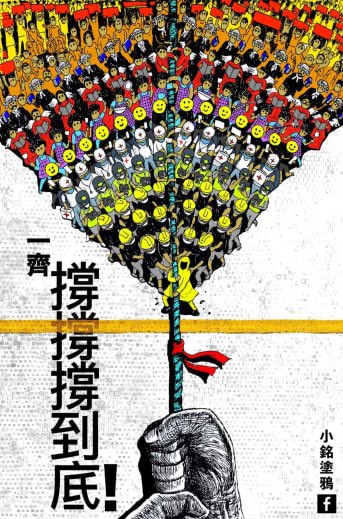

Poster art of the #HongKongProtests

Typeface used by a city’s public transport system becomes representative of the city. Here is an experiment by an artist where they swapped the fonts between Hong Kong MTR map and Tokyo Metro:

https://twitter.com/riensnk0813/status/1132573470120763392?s=21

Calligraphy or Graffiti?

https://twitter.com/elson_tong/status/1165536079216201729?s=21

@cathaypacific The designers behind the #HongKongProtests poster art

@StanChartHelp @HKWORLDCITY Hong Kong explained as GitHub activity

「HK原本是從CN fork出來的,後來被UK push了好多feature,現在要merge回CN,conflict太多了⋯⋯」 🤣

「HK原本是從CN fork出來的,後來被UK push了好多feature,現在要merge回CN,conflict太多了⋯⋯」 🤣

fine arts students @CUHKofficial crafting large banner

https://twitter.com/rachel_cheung1/status/1167094464218714112?s=21

Sculptures produced by art students @CUHKofficial

https://twitter.com/kaming/status/1167669779336978436?s=21

“Hong Kong democracy goddess” statue is the result of a crowded funded project, where HKD200k (~USD25k) was raised within 6 hours.

The details of the design was also decided via public voting, so democratic values is embedded in the art creation process.

The details of the design was also decided via public voting, so democratic values is embedded in the art creation process.

• • •

Missing some Tweet in this thread? You can try to

force a refresh