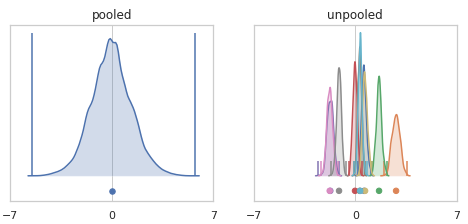

So why has 'internal covariate shift' remained controversial to this day?

Thread 👇

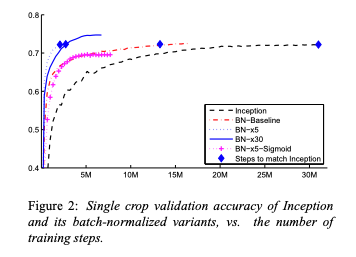

...but it doesn't explain why BN works!

We shall even define what we mean by the term! @alirahimi0

In the olden days it was understood that training deep nets is a hard optimisation problem.

(Things have since gotten simpler, so it’s easy to forget!)

(@ylecun et al ’98) yann.lecun.com/exdb/publis/pd…

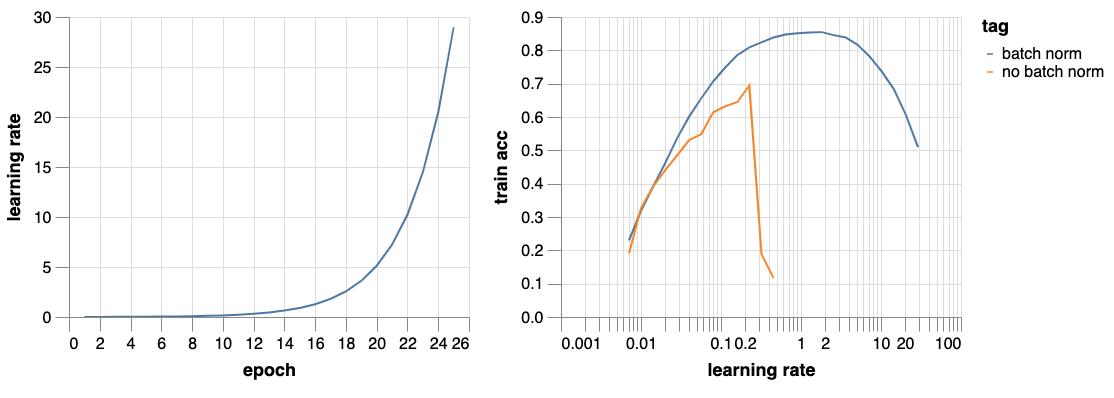

For a single layer, centering the inputs improves the conditioning of the Hessian of the loss, which permits higher learning rates.

(@ylecun et al ’90) papers.nips.cc/paper/314-seco…
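A minimal numpy sketch of the single-layer claim follows. It assumes a linear unit with a bias, trained on squared error, because in that setting the Hessian is just the second-moment matrix of the inputs (augmented with a constant 1 for the bias). The input distribution (mean 3, unit variance per feature) and the helper names `hessian_linear_mse` and `condition_and_max_lr` are hypothetical choices for illustration, not taken from the cited paper.

```python
import numpy as np


def hessian_linear_mse(X: np.ndarray) -> np.ndarray:
    """Hessian of L(w, b) = mean((X @ w + b - y) ** 2) / 2 with respect to (w, b).

    For a single linear unit on squared error the Hessian does not depend on the
    targets y or on the current (w, b): it is the second-moment matrix of the
    inputs, augmented with a constant 1 for the bias.
    """
    n = X.shape[0]
    X_aug = np.hstack([X, np.ones((n, 1))])  # append the bias "input"
    return X_aug.T @ X_aug / n


def condition_and_max_lr(X: np.ndarray) -> tuple[float, float]:
    """Return (condition number of the Hessian, largest stable GD learning rate)."""
    eig = np.linalg.eigvalsh(hessian_linear_mse(X))  # eigenvalues in ascending order
    lam_min, lam_max = eig[0], eig[-1]
    # On a quadratic loss, plain gradient descent diverges once lr > 2 / lam_max.
    return float(lam_max / lam_min), float(2.0 / lam_max)


rng = np.random.default_rng(0)
n, d = 10_000, 5
X_raw = rng.normal(size=(n, d)) + 3.0       # hypothetical inputs: mean 3, unit variance
X_centered = X_raw - X_raw.mean(axis=0)     # subtract each feature's mean

for name, X in [("raw", X_raw), ("centered", X_centered)]:
    kappa, lr_max = condition_and_max_lr(X)
    print(f"{name:9s} condition number {kappa:9.1f}   max stable lr {lr_max:.3f}")
```

With these settings the raw inputs should give a condition number in the thousands and a maximum stable learning rate of roughly 0.04, because the shared mean creates one large eigenvalue (about the squared norm of the augmented mean vector). The centered inputs give a condition number close to 1 and tolerate a learning rate near 2.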

(If that output is a ‘cat’ neuron, a change in mean can lead to predicting ‘cat’ on every example!)
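To make the parenthetical concrete, here is a toy numpy sketch. It assumes a single hypothetical 'cat' output unit with fixed random weights reading zero-mean incoming activations. A drift Δμ in the incoming means moves every example's logit by the same amount, w · Δμ. The sketch takes the worst case where each unit's mean drifts by 2 in the direction of its weight; the weights, drift size and the `frac_cat` helper are all illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
n, d = 1_000, 20
w = rng.normal(size=d) / np.sqrt(d)   # hypothetical fixed weights of the 'cat' output unit
b = 0.0                               # bias of the 'cat' unit

h = rng.normal(size=(n, d))           # incoming activations, zero mean per unit
shift = 2.0 * np.sign(w)              # hypothetical drift in each incoming unit's mean


def frac_cat(acts: np.ndarray) -> float:
    """Fraction of examples whose 'cat' logit is positive."""
    logits = acts @ w + b             # one logit per example
    return float(np.mean(logits > 0))


print("before shift:", frac_cat(h))
print("after shift: ", frac_cat(h + shift))
```

Before the shift roughly half the examples are classified as 'cat'; after it almost all of them are, even though no weight has changed.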

(@leventsagun et al) arxiv.org/abs/1611.07476, arxiv.org/abs/1706.04454

(Papyan) arxiv.org/abs/1811.07062

(@_ghorbani et al) arxiv.org/abs/1901.10159 compare the Hessian eigenvalue spectrum with and without BN.

...matching the intuition of the original paper!