We go on a tour of bad inits, degenerate networks and spiky Hessians, all in a Colab notebook:

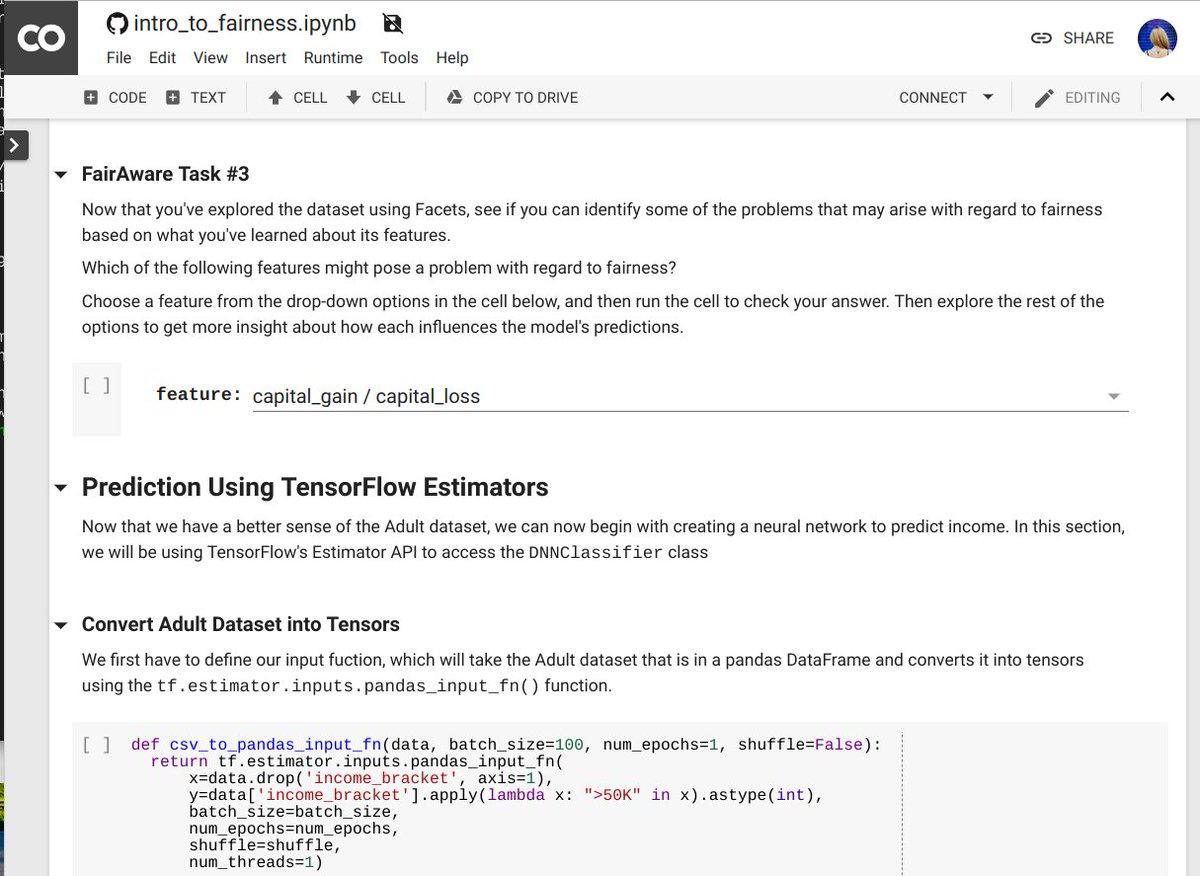

colab.research.google.com/github/davidcp…

Summary 👇

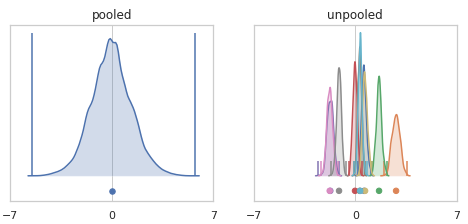

We learn that deep ReLU nets with He init, but no batch norm, basically ignore their inputs: deep in the network, different inputs produce almost the same activations. (Check out arxiv.org/abs/1902.04942 by Luther and @SebastianSeung for background.)
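To see the collapse numerically, here is a minimal NumPy sketch. It is not the notebook's code: it assumes a fully connected ReLU net (equal widths, no biases, no batch norm) with He-normal weights (std `sqrt(2 / fan_in)`) and random Gaussian inputs, and tracks the mean cosine similarity between the pre-activations of different inputs, layer by layer. The function name `mean_cosine_by_depth` and all sizes are hypothetical.

```python
import numpy as np

def mean_cosine_by_depth(depth=50, width=512, n_inputs=16, seed=0):
    """Mean pairwise cosine similarity of pre-activations at each layer.

    Illustrative assumptions (not the notebook's setup): fully connected layers
    of equal width, no biases, no batch norm, He-normal weights, Gaussian inputs.
    """
    rng = np.random.default_rng(seed)
    a = rng.standard_normal((n_inputs, width))   # distinct random inputs
    off_diag = ~np.eye(n_inputs, dtype=bool)     # mask selecting pairs i != j
    sims = []
    for _ in range(depth):
        # He init: std sqrt(2 / fan_in) preserves E[h^2] through a ReLU layer
        W = rng.standard_normal((width, width)) * np.sqrt(2.0 / width)
        h = a @ W                                 # pre-activations, (n_inputs, width)
        u = h / np.linalg.norm(h, axis=1, keepdims=True)
        sims.append((u @ u.T)[off_diag].mean())   # average over all input pairs
        a = np.maximum(h, 0.0)                    # ReLU
    return np.array(sims)

sims = mean_cosine_by_depth()
for layer in (1, 2, 5, 10, 25, 50):
    print(f"layer {layer:2d}: mean cosine similarity {sims[layer - 1]:.3f}")
```

With these settings the similarity should start near 0 at the first layer and end close to 1 by layer 50: every input gets mapped to nearly the same direction, which is what "ignoring the input" looks like.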

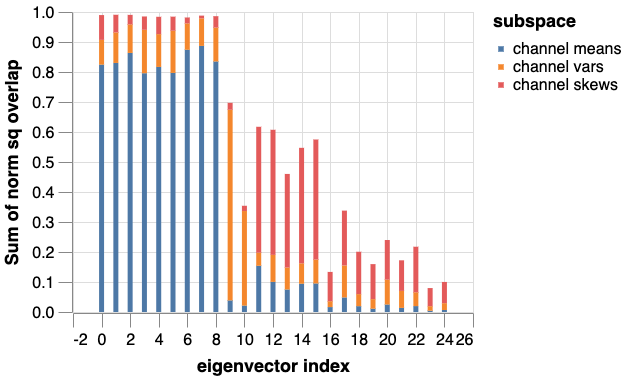

This is easy to miss if you compute activation statistics pooled across channels:
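A companion sketch of why pooling hides the problem, under the same hypothetical setup (units of a fully connected layer stand in for conv channels). By the law of total variance, the variance pooled over inputs and units equals the mean per-unit variance across inputs plus the variance of the per-unit means. He init keeps the pooled second moment roughly constant in expectation, but with depth more and more of it sits in the per-unit means, which do not depend on the input. The name `variance_profile` is ours.

```python
import numpy as np

def variance_profile(depth=50, width=512, batch=256, seed=0):
    """Per-unit vs pooled variance of pre-activations at each layer.

    Illustrative assumptions: fully connected ReLU net, He-normal init,
    no biases, no batch norm, random Gaussian inputs.
    """
    rng = np.random.default_rng(seed)
    a = rng.standard_normal((batch, width))
    per_unit, pooled = [], []
    for _ in range(depth):
        W = rng.standard_normal((width, width)) * np.sqrt(2.0 / width)
        h = a @ W
        # Variance of each unit across the batch (the input-dependent signal),
        # averaged over units
        per_unit.append(h.var(axis=0).mean())
        # Variance over batch and units together: also counts the spread of the
        # per-unit means, which is the same for every input
        pooled.append(h.var())
        a = np.maximum(h, 0.0)
    return np.array(per_unit), np.array(pooled)

per_unit, pooled = variance_profile()
ratio = per_unit / pooled
for layer in (1, 10, 50):
    i = layer - 1
    print(f"layer {layer:2d}: pooled {pooled[i]:.2f}  "
          f"per-unit {per_unit[i]:.3f}  ratio {ratio[i]:.3f}")
```

The ratio `per_unit / pooled` should start near 1 and fall towards 0 with depth, while the pooled variance on its own gives no hint that anything is wrong.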

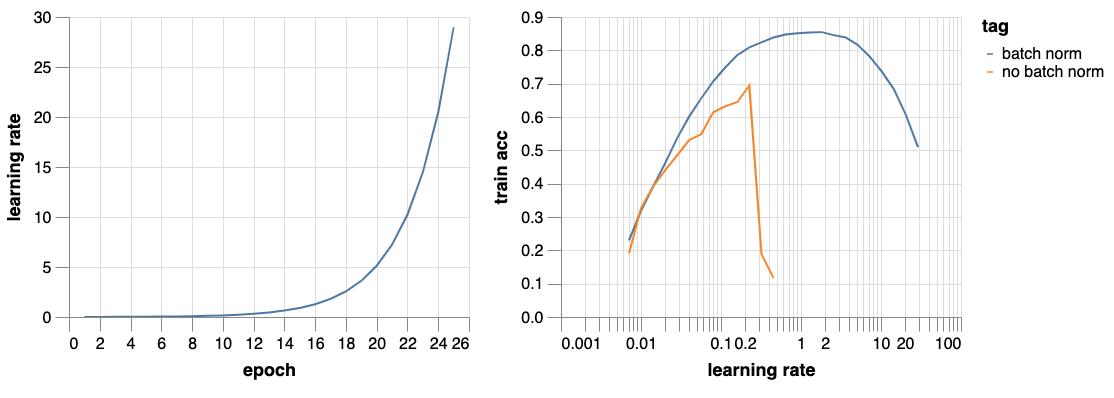

Finally, we connect this to the instability of SGD and to the outlying eigenvalues of the Hessian (found by @leventsagun, Bottou and @ylecun).
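Why an outlying eigenvalue matters for stability can be seen in the simplest setting, full-batch gradient descent on a quadratic (SGD adds gradient noise on top of this, which the sketch ignores). On `L(w) = 0.5 * w @ H @ w` each eigendirection of `H` is multiplied by `1 - lr * lam` per step, so the iteration diverges once `lr > 2 / lam_max`: a single outlier sets the learning-rate ceiling for the whole model. The spectrum here (a bulk in [0.5, 1.5] plus one eigenvalue at 100) is made up for illustration, and `gd_final_norm` is a hypothetical helper.

```python
import numpy as np

def gd_final_norm(H, lr, steps=200):
    """Full-batch gradient descent on L(w) = 0.5 * w @ H @ w.

    Starts from the all-ones vector and returns ||w|| after `steps` updates.
    """
    w = np.ones(H.shape[0])
    for _ in range(steps):
        w = w - lr * (H @ w)  # the gradient of 0.5 * w @ H @ w is H @ w
    return np.linalg.norm(w)

# Hypothetical Hessians: a bulk of 49 eigenvalues in [0.5, 1.5], with and without an outlier
bulk = np.linspace(0.5, 1.5, 49)
H_spiky = np.diag(np.concatenate([bulk, [100.0]]))
H_bulk = np.diag(bulk)

lam_max = np.linalg.eigvalsh(H_spiky).max()        # the outlier, 100
stable = gd_final_norm(H_spiky, lr=1.9 / lam_max)   # just below 2 / lam_max
unstable = gd_final_norm(H_spiky, lr=2.1 / lam_max) # just above 2 / lam_max

lam_max_bulk = np.linalg.eigvalsh(H_bulk).max()     # 1.5 without the outlier
fast = gd_final_norm(H_bulk, lr=1.9 / lam_max_bulk)

print(f"with outlier,    lr = 1.9/lam_max: ||w|| = {stable:.3g}")
print(f"with outlier,    lr = 2.1/lam_max: ||w|| = {unstable:.3g}")
print(f"without outlier, lr = 1.9/1.5:     ||w|| = {fast:.3g}")
```

With the outlier, a step just above 2/100 blows up; without it, steps near 2/1.5, about 100/1.5 ≈ 67 times larger, converge.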

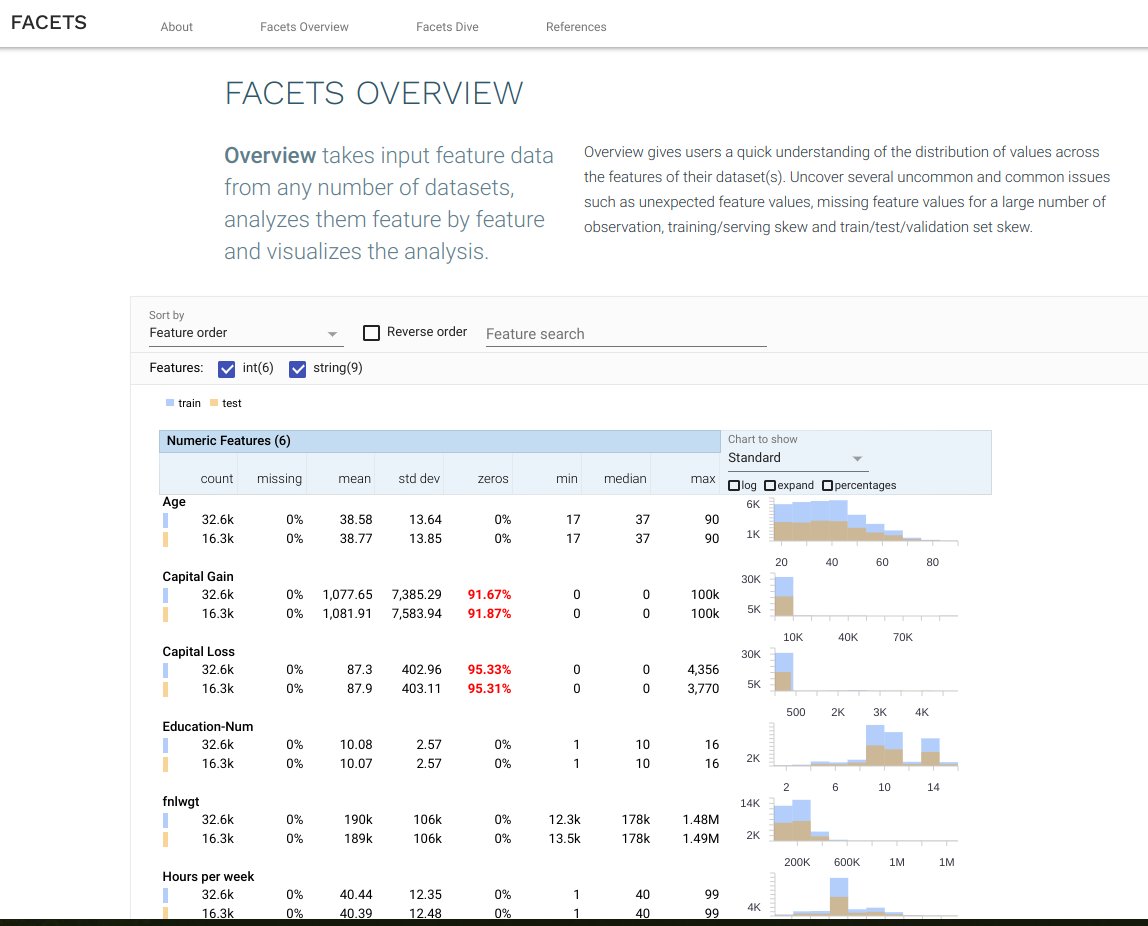

The mystery of the spiky Hessian is resolved along with the secrets of batch norm!
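One plausible mechanism, offered as our own assumption rather than the notebook's explanation: for a linear least-squares readout, the Hessian with respect to the weights is the uncentered second-moment matrix `A.T @ A / N` of the layer's activations `A`. That matrix equals the covariance plus `mu mu^T`, where `mu` is the vector of per-unit means. ReLU outputs are non-negative, so their means are positive and this rank-one term adds a spike that grows with the number of units. Subtracting per-unit means, the first step of batch norm (the sketch ignores the rescaling and learned affine parts), removes it. The features below are hypothetical independent ReLU units; real activations are correlated, so treat the numbers as illustrative only.

```python
import numpy as np

def hessian_spectrum(features):
    """Eigenvalues, largest first, of the Hessian of 0.5 * mean((features @ w - y)**2) in w.

    For a linear least-squares readout this Hessian is features.T @ features / N,
    independent of the targets y and of w.
    """
    n = features.shape[0]
    return np.linalg.eigvalsh(features.T @ features / n)[::-1]

rng = np.random.default_rng(0)
N, d = 2000, 100
# Hypothetical layer: independent ReLU units, each with a positive mean (~0.4)
feats = np.maximum(rng.standard_normal((N, d)), 0.0)
centered = feats - feats.mean(axis=0)  # per-unit mean subtraction, as in batch norm

raw_eigs = hessian_spectrum(feats)
cen_eigs = hessian_spectrum(centered)
print(f"raw:      top {raw_eigs[0]:.2f}, next {raw_eigs[1]:.2f}")
print(f"centered: top {cen_eigs[0]:.2f}, next {cen_eigs[1]:.2f}")
print(f"max stable GD step 2/lam_max: {2 / raw_eigs[0]:.3f} -> {2 / cen_eigs[0]:.3f}")
```

In this toy setting the top eigenvalue should drop from about 16 (roughly `d` times the squared ReLU mean, 100 × 0.16) to the edge of the bulk, around 0.5, loosening the gradient-descent stability bound `2 / lam_max` by a factor of about 30.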