Since it is Monday, it's time for the 'metrics paper of the week! #MetricsMonday

Today I want to highlight "Bounds on Distributional Treatment Effect Parameters using Panel Data with an Application on Job Displacement", by Brantly Callaway.

Link here: bcallaway11.github.io/files/DTE/dte1…

Brant's paper provides a set of tools that allow us to better understand heterogeneous effects in diff-in-diff setups.

Brant is particularly interested in the distribution *of* the treatment effect for the treated units, and the quantiles *of* the treatment effect for the treated.

These parameters are much harder to identify than the popular ATT.

In fact, even if you have data from a *perfect* RCT, you will not be able to point identify these distributional parameters without relying on additional restrictions on the DGP.
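To see why, here is a minimal Python sketch with made-up numbers (nothing here comes from the paper, and all variable names are hypothetical). An RCT pins down the two marginal distributions of potential outcomes, but not how they are paired across units. The two pairings below share the same marginals and the same ATT, yet imply different distributions *of* the treatment effect.

```python
import numpy as np

# Made-up potential outcomes for four treated units (illustration only).
y0 = np.array([1.0, 2.0, 3.0, 4.0])         # untreated potential outcomes
y1_values = np.array([2.0, 3.0, 4.0, 5.0])  # marginal distribution of treated potential outcomes

# Pairing A: rank preservation (lowest Y(0) is paired with lowest Y(1)).
te_rank_preserving = np.sort(y1_values) - np.sort(y0)

# Pairing B: rank reversal (lowest Y(0) is paired with highest Y(1)).
te_rank_reversing = np.sort(y1_values)[::-1] - np.sort(y0)

# Both pairings have the same marginals, so the ATT is the same.
att_a = te_rank_preserving.mean()
att_b = te_rank_reversing.mean()

# The share of treated units hurt by treatment differs across pairings.
share_hurt_a = (te_rank_preserving < 0).mean()
share_hurt_b = (te_rank_reversing < 0).mean()

print(att_a, att_b)                # 1.0 1.0
print(share_hurt_a, share_hurt_b)  # 0.0 0.25
```

Since the data alone cannot tell pairing A from pairing B, something extra is needed to say anything about the share of units hurt by treatment.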

Brant notes that the extra conditions needed for point identification can be too strong in many diff-in-diff applications.

To avoid these drawbacks, he then proposes alternative assumptions that you can use to *bound* these distributional parameters.

Brant also makes life a bit easier for us by providing an easy-to-use #R package that implements his proposed tools: bcallaway11.github.io/csabounds/

I find the paper very nice and well motivated.

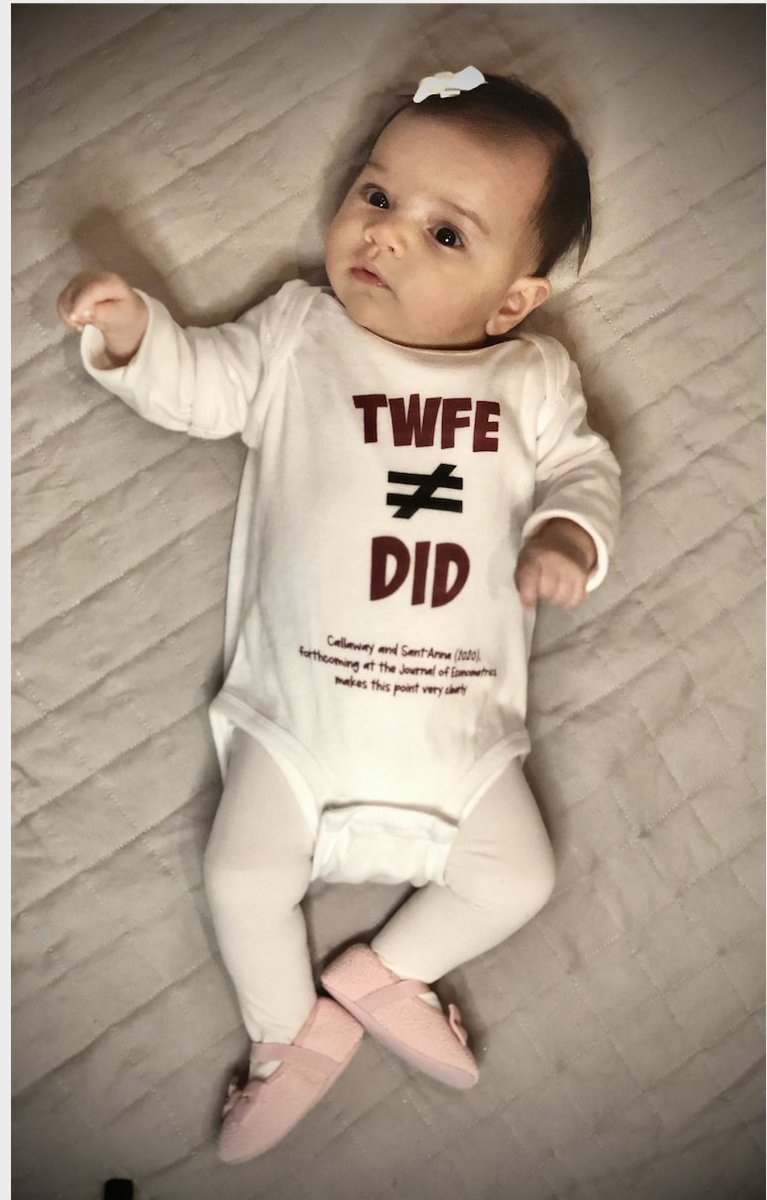

But that should not be too much of a surprise as I always learn a lot from Brant, either by co-authoring with him or just reading his papers!
