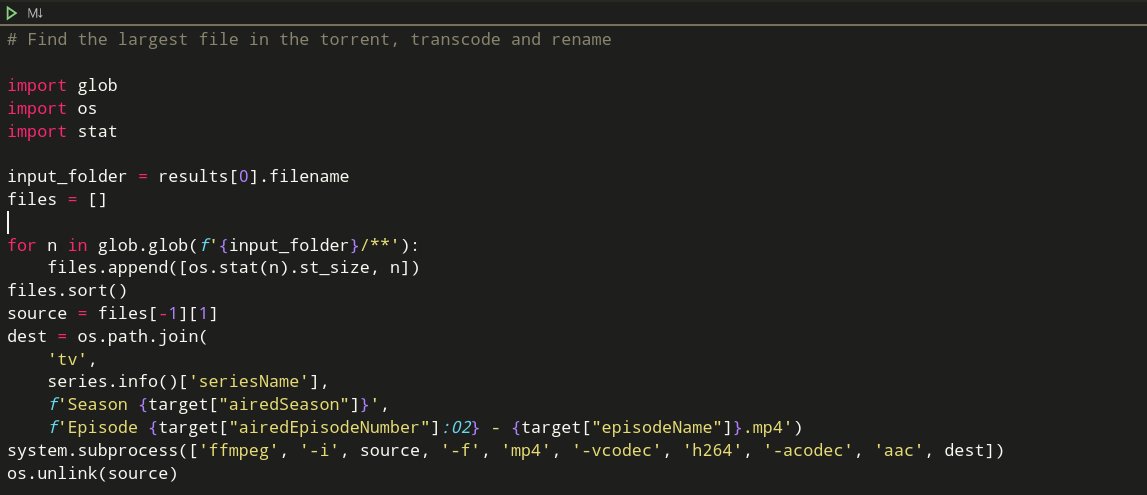

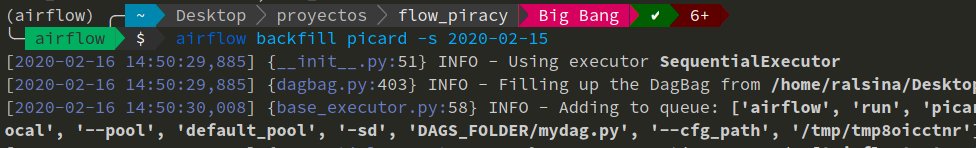

While the others take a couple of seconds to run, this one may take hours or days.

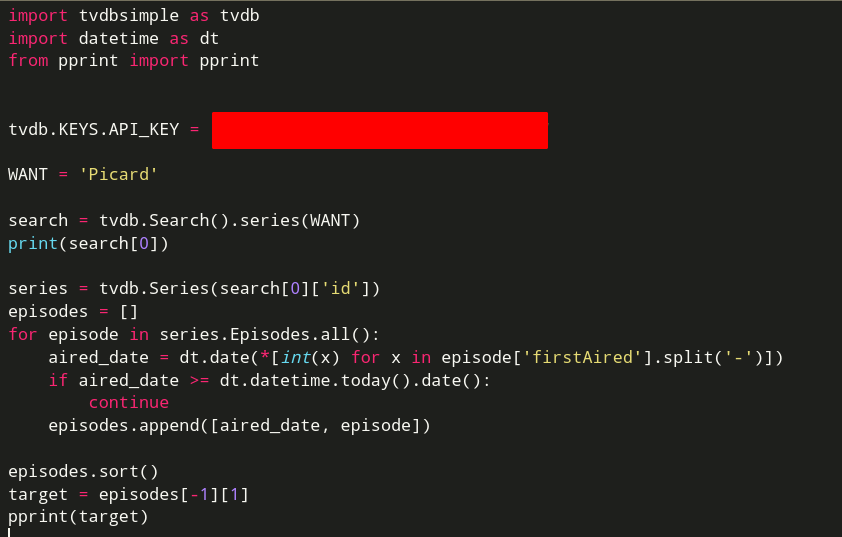

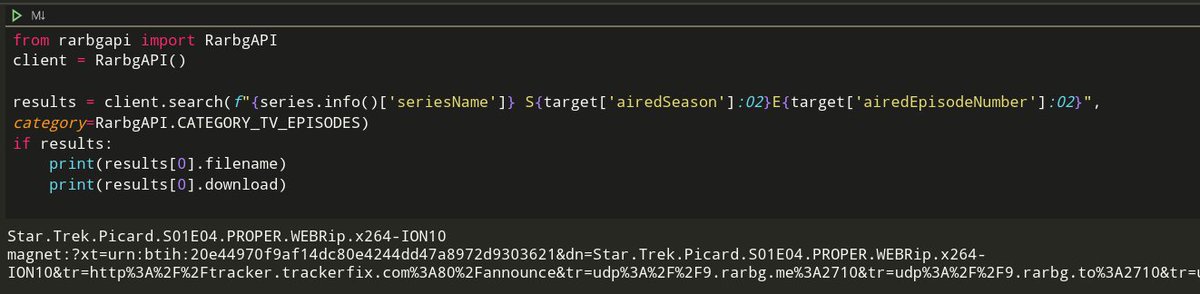

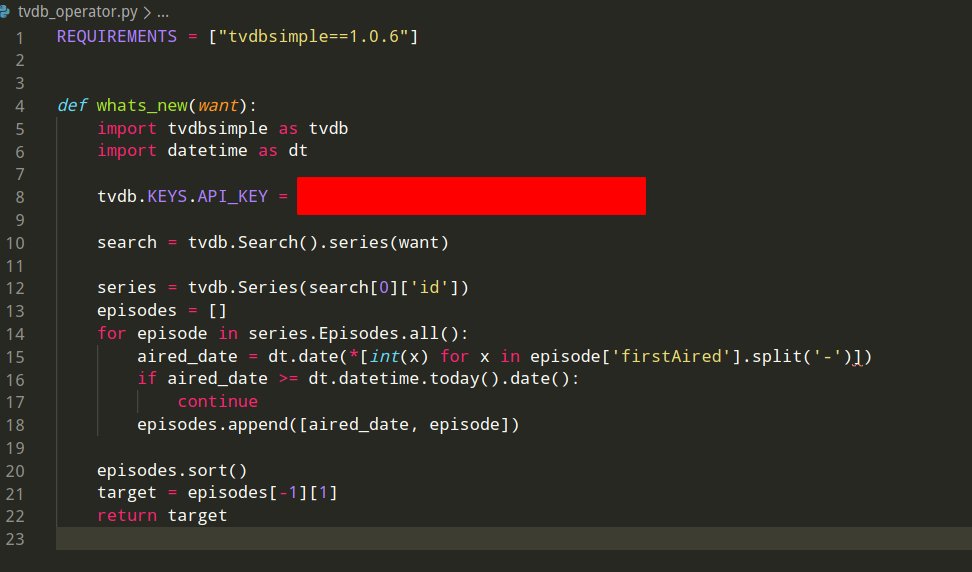

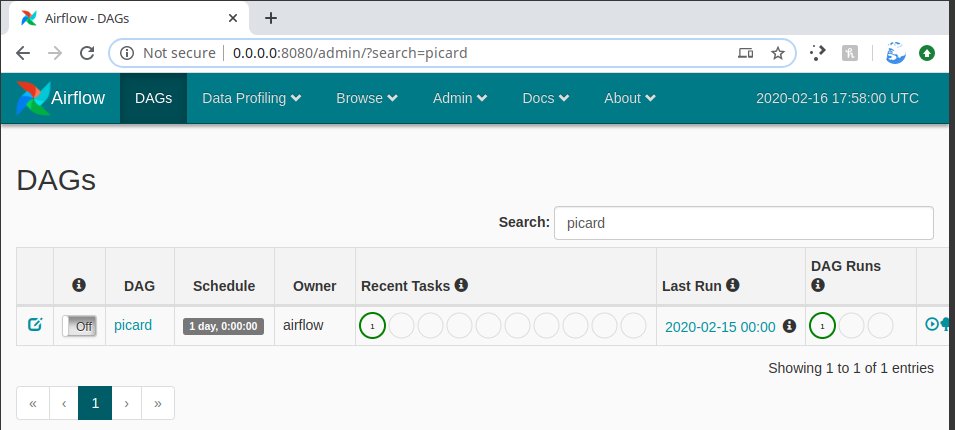

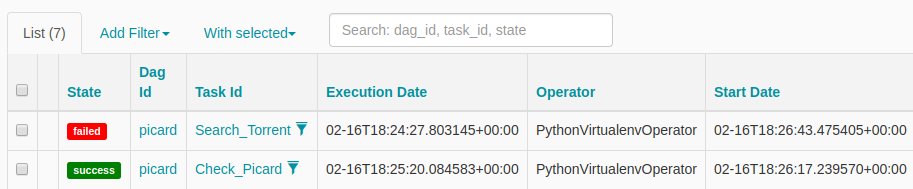

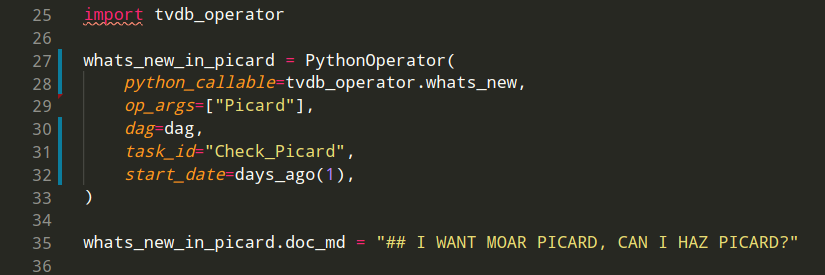

Basically: it's easy to automate "I want to watch the latest episode of Picard", but:

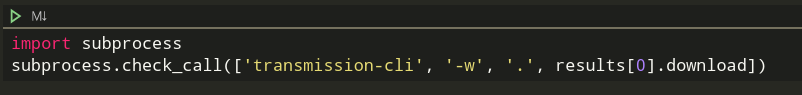

1) You have to go and tell it to get it

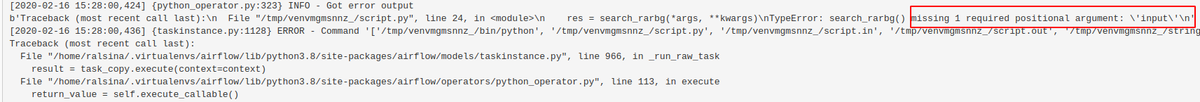

2) It takes hours

3) It may fail, and then you have to do it again

4) It will take hours again
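Point 3 is the kind of chore a scheduler can take off your hands: Airflow operators accept a `retries` argument (plus `retry_delay`). Below is a stdlib-only sketch of what such a setting does. It is not Airflow code, and `run_with_retries` and `flaky_download` are hypothetical stand-ins for the slow download step.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_retries(step: Callable[[], T], retries: int = 3, delay_seconds: float = 0.0) -> T:
    """Call `step`; if it raises, try again up to `retries` more times."""
    attempt = 0
    while True:
        try:
            return step()
        except Exception as exc:  # a real pipeline would catch narrower errors
            attempt += 1
            if attempt > retries:
                raise  # out of retries: re-raise the last error
            print(f"attempt {attempt} failed ({exc}); retrying in {delay_seconds}s")
            time.sleep(delay_seconds)


# Hypothetical flaky step: fails twice, then "finishes the download".
calls = {"n": 0}


def flaky_download() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("download interrupted")
    return "picard_latest_episode.mkv"


result = run_with_retries(flaky_download, retries=3)
print(result)
```

Retrying doesn't make the second attempt any faster (point 4); it only removes the need to notice the failure and restart it by hand.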

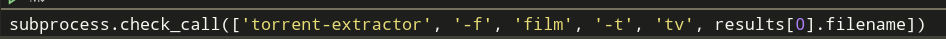

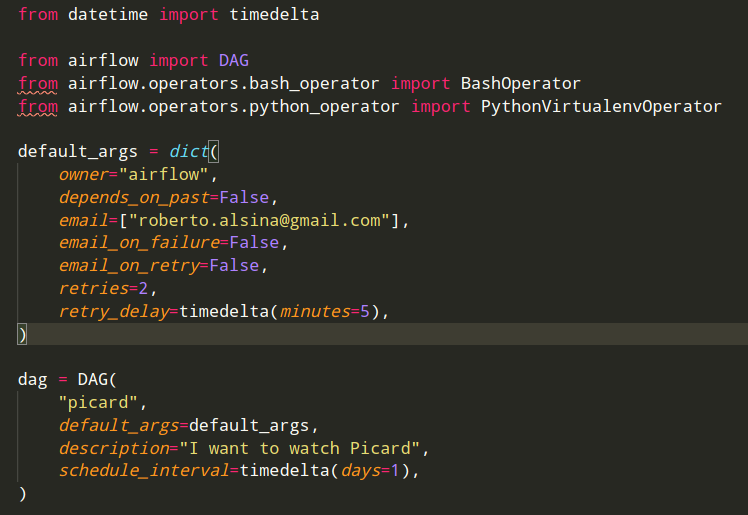

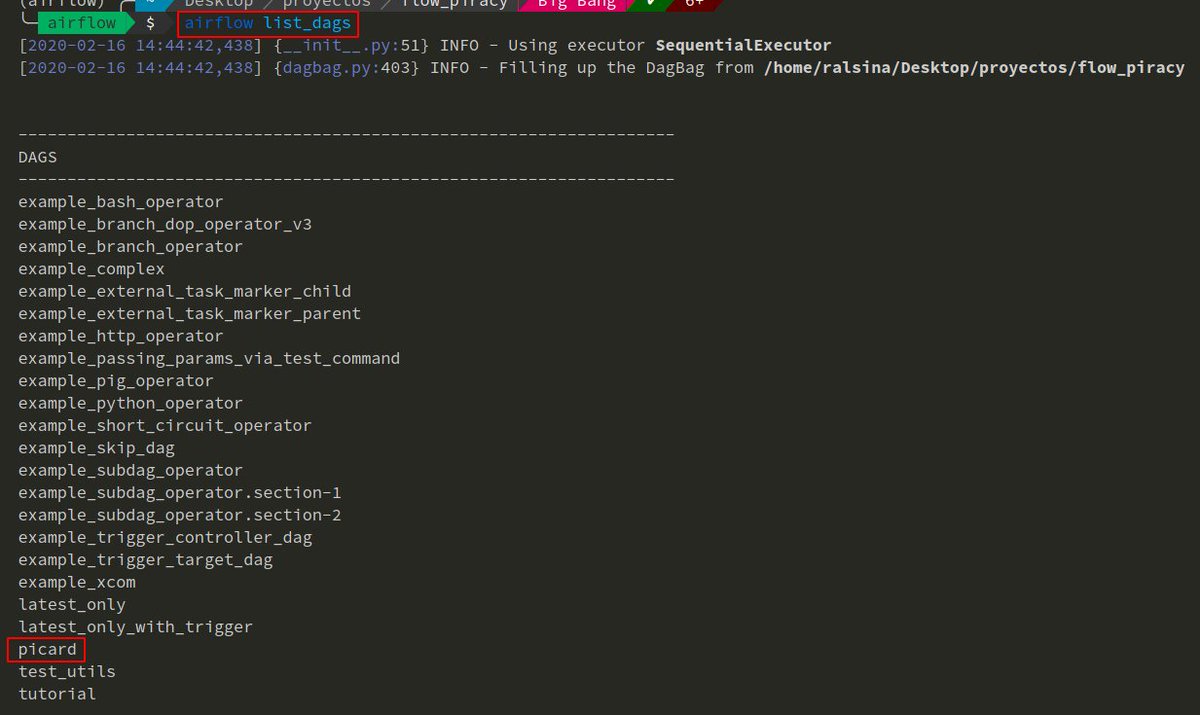

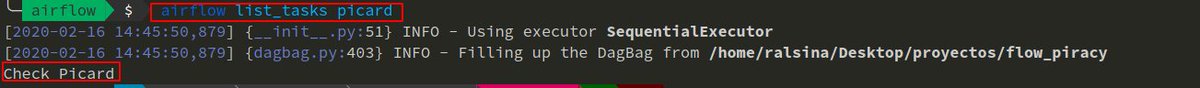

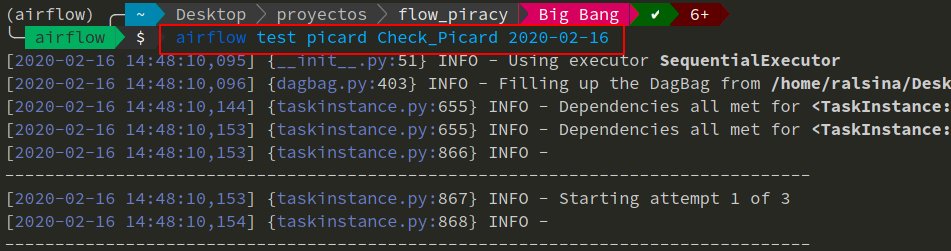

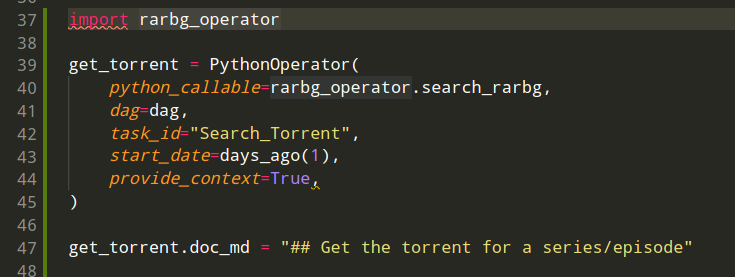

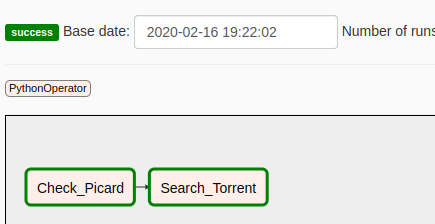

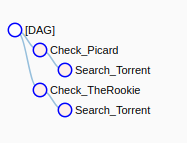

If you have a script made of steps that depend on one another and run in order, you can convert it into a very simple Airflow DAG.

airflow.apache.org/docs/stable/_a…
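For a script like that, the scheduler's job is mostly bookkeeping: run each step only after the steps it depends on. A stdlib-only sketch of that idea using Python's `graphlib.TopologicalSorter` (not Airflow code; the three step names are hypothetical stand-ins for the parts of the original script):

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set

# Each hypothetical step just records that it ran.
log: List[str] = []


def find_episode() -> None:
    log.append("find_episode")


def download_episode() -> None:
    log.append("download_episode")


def move_to_library() -> None:
    log.append("move_to_library")


steps: Dict[str, Callable[[], None]] = {
    "find_episode": find_episode,
    "download_episode": download_episode,
    "move_to_library": move_to_library,
}

# Each key depends on the tasks in its set: a linear chain, like a >> b >> c.
dependencies: Dict[str, Set[str]] = {
    "find_episode": set(),
    "download_episode": {"find_episode"},
    "move_to_library": {"download_episode"},
}

# static_order() yields every step after all of its dependencies.
for name in TopologicalSorter(dependencies).static_order():
    steps[name]()

print(log)
```

In Airflow, each function would become a task (for instance a `PythonOperator`), and the dependency map would be written as `find >> download >> move`.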

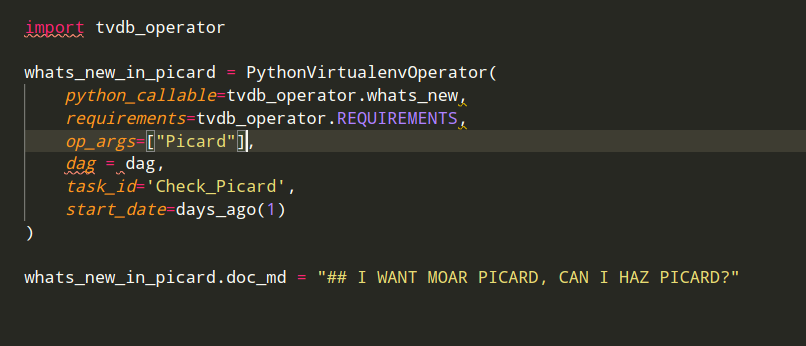

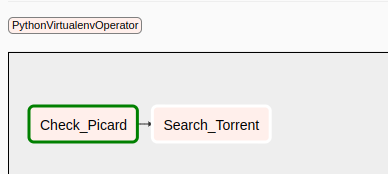

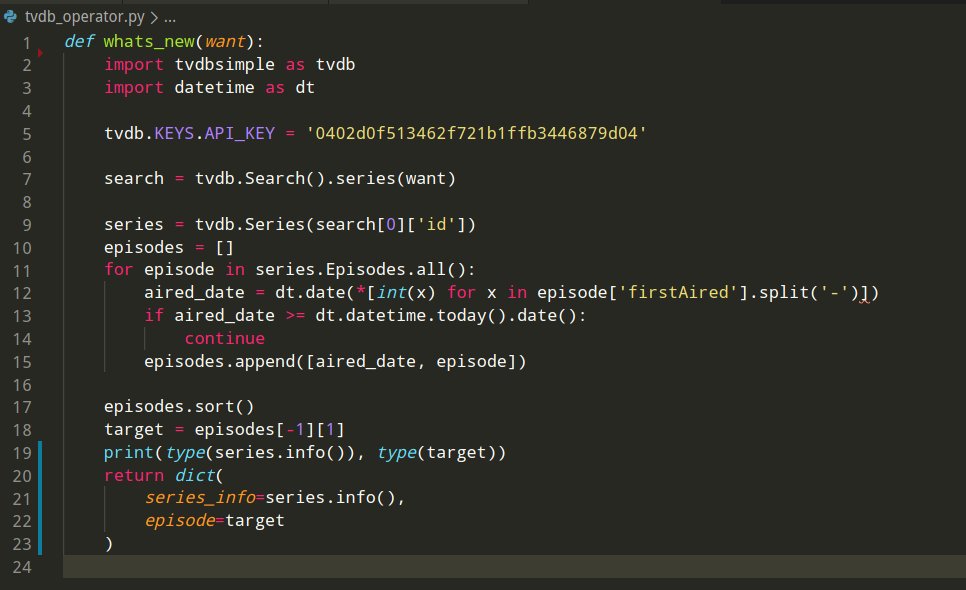

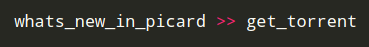

But of course a single task is a lame DAG, so let's make it a bit more interesting.

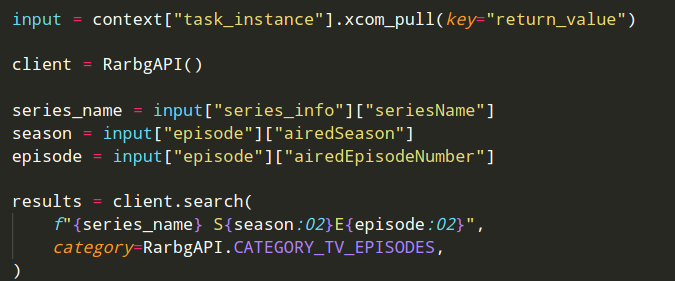

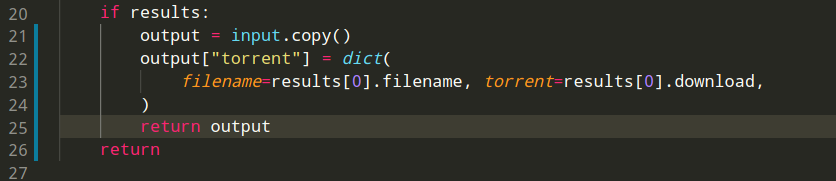

It's based on this:

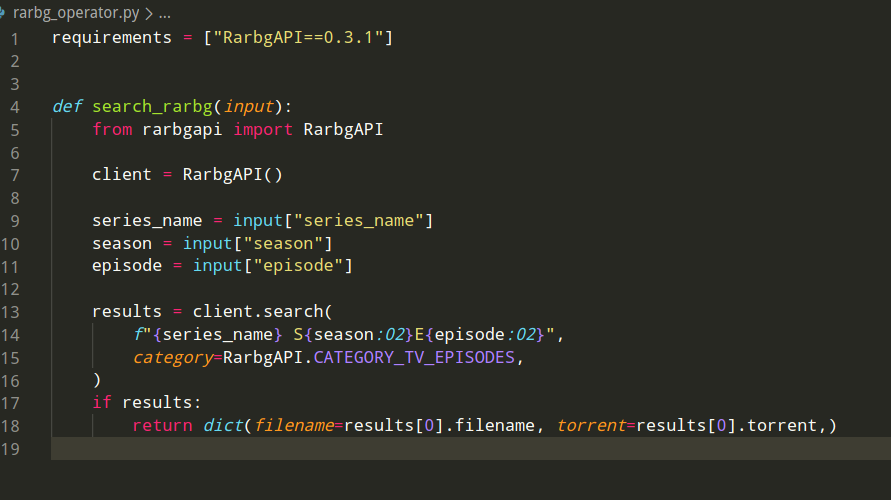

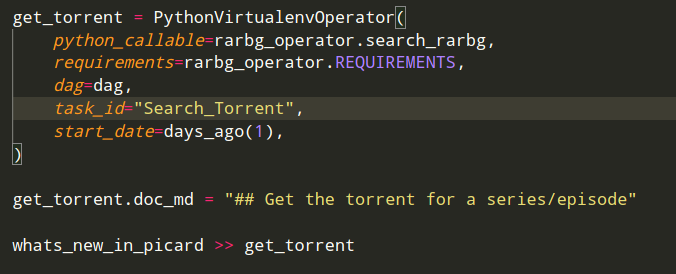

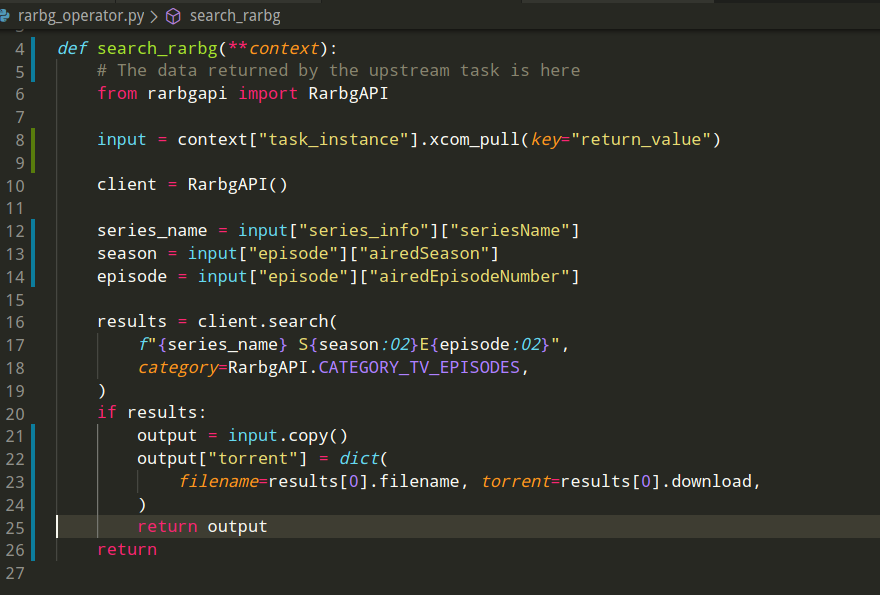

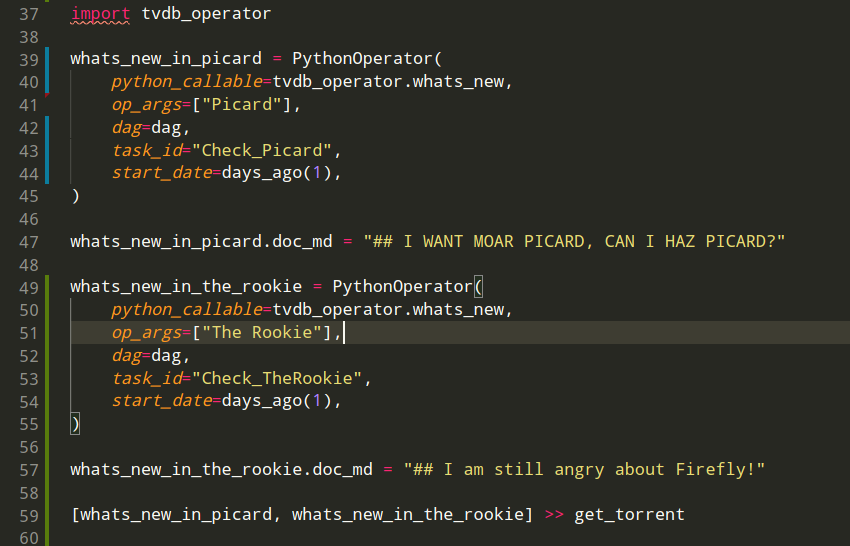

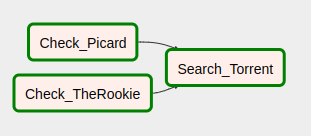

So, we need to do 2 things:

1) Connect the two operators

2) Pass data from one to the other
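Both have direct Airflow counterparts: `>>` sets the order between operators, and XComs carry small values between tasks (a `PythonOperator` pushes its return value as an XCom by default). The toy below is not Airflow, just a stdlib sketch of those two mechanics; `Task`, `run`, the task names, and the URL are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class Task:
    """Toy task: an id, a callable, and the tasks that run after it."""
    task_id: str
    func: Callable[[Dict[str, Any]], Any]
    downstream: List["Task"] = field(default_factory=list)

    def __rshift__(self, other: "Task") -> "Task":
        # Mimics `a >> b` (b runs after a); returning `other` allows chaining.
        self.downstream.append(other)
        return other


def run(root: Task, xcom: Dict[str, Any]) -> None:
    """Run a linear chain from `root`, storing each return value under its task_id."""
    task = root
    while True:
        xcom[task.task_id] = task.func(xcom)
        if not task.downstream:
            break
        task = task.downstream[0]  # linear chains only, to keep the toy small


# 1) Connect the two tasks. 2) The second one reads what the first returned.
find = Task("find_episode", lambda xcom: "https://example.com/picard-latest.torrent")
download = Task("download_episode", lambda xcom: f"downloading {xcom['find_episode']}")
find >> download

store: Dict[str, Any] = {}
run(find, store)
print(store["download_episode"])
```

In real Airflow the second task would call `ti.xcom_pull(task_ids="find_episode")` instead of reading a dict, and XComs are meant for small values like a URL or a path, not the video file itself.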

This **will** be slower than just running the scripts by hand.

Shame, Airflow, shame.

Suck it up!

So, what happens if I also want to watch ... "The Rookie"?
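Copying the whole pipeline once per show doesn't scale. One common pattern (an assumption here, not necessarily where this thread goes next) is a factory function called in a loop, where each result gets its own id; in Airflow, that means building one DAG per show, each with a distinct `dag_id`. A stdlib sketch of the factory shape, with hypothetical names throughout:

```python
from typing import Callable, Dict, List

# Hypothetical list of shows; adding one is a one-line change.
SHOWS = ["Picard", "The Rookie"]


def make_pipeline(show: str) -> List[Callable[[], str]]:
    """Build the same find -> download steps, parametrized by show name."""

    def find() -> str:
        return f"find latest {show}"

    def download() -> str:
        return f"download latest {show}"

    return [find, download]


# One pipeline id per show, similar to generating one DAG per show in a loop.
pipelines: Dict[str, List[Callable[[], str]]] = {
    f"get_{show.lower().replace(' ', '_')}": make_pipeline(show) for show in SHOWS
}

for pipeline_id, steps in pipelines.items():
    print(pipeline_id, [step() for step in steps])
```

Because `show` is a parameter of `make_pipeline`, each pipeline's closures capture their own value, avoiding the classic late-binding bug you'd get by defining the functions directly inside the loop.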

Senior Python Dev, eng mgmt experience, remote preferred, based near Buenos Aires, Argentina.

ralsina.me/weblog/posts/l…