mayoclinicproceedings.org/pb/assets/raw/… or

COVID-19 Testing: The Threat of False-Negative Results sciencedirect.com/science/articl… #EBM

@MayoProceedings Also note ja.ma/3ct4UPD from @marcottl @JoshuaLiaoMD

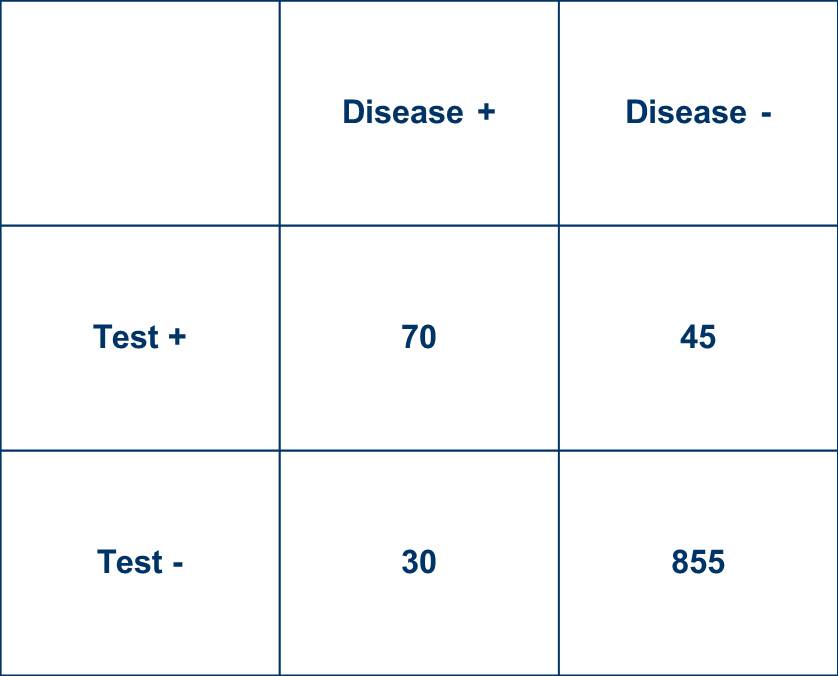

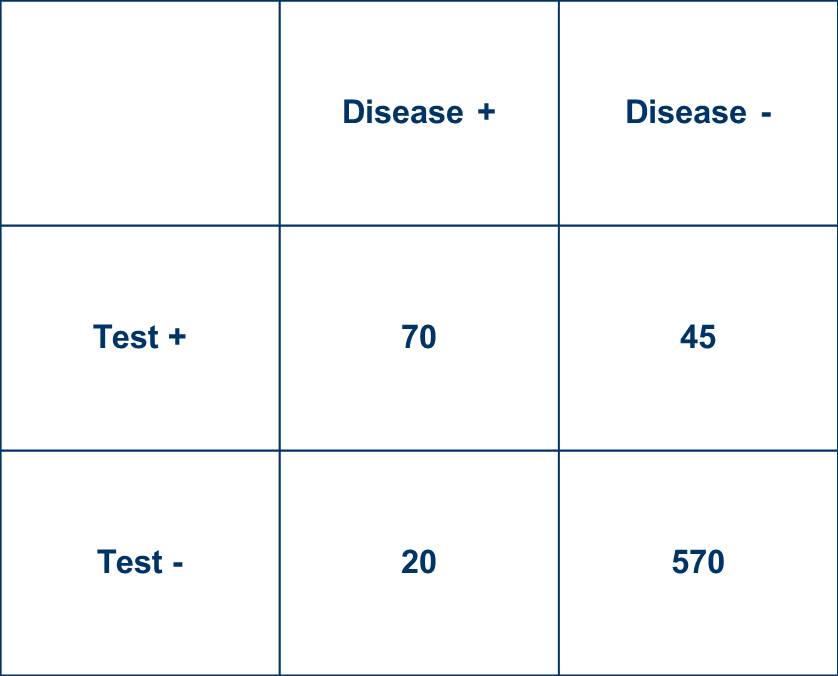

Sensitivity = 70%

Specificity = 95%

LR+ = 14

LR- = 0.32
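For anyone checking the arithmetic, here is a minimal sketch of how the two likelihood ratios follow from the sensitivity and specificity above. The function names are illustrative, and the 50% pre-test probability in the last step is a hypothetical value chosen only for the example; it does not come from the linked articles.

```python
def likelihood_ratios(sensitivity, specificity):
    """Return (LR+, LR-) from sensitivity and specificity given as fractions."""
    lr_pos = sensitivity / (1 - specificity)   # true-positive rate / false-positive rate
    lr_neg = (1 - sensitivity) / specificity   # false-negative rate / true-negative rate
    return lr_pos, lr_neg


def post_test_probability(pre_test_probability, likelihood_ratio):
    """Convert a pre-test probability to a post-test probability via odds."""
    pre_odds = pre_test_probability / (1 - pre_test_probability)
    post_odds = pre_odds * likelihood_ratio
    return post_odds / (1 + post_odds)


lr_pos, lr_neg = likelihood_ratios(0.70, 0.95)
print(round(lr_pos, 2), round(lr_neg, 2))  # 14.0 0.32

# Hypothetical pre-test probability of 50% (an assumption, not from the source).
p_after_negative = post_test_probability(0.50, lr_neg)
print(round(p_after_negative, 2))  # 0.24
```

Under that assumed 50% pre-test probability, a negative result would still leave roughly a 1-in-4 chance of disease, which is the false-negative concern in a nutshell.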

These sensitivity and specificity values are not dissimilar to the #COVID19 test data floating around, although those figures are hardly universally agreed upon.