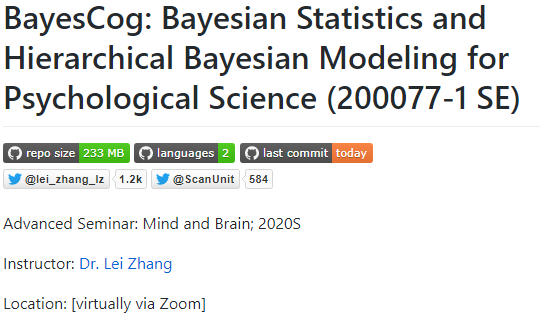

L01 Intro

L02 R (P1)

L03 R (P2)

L04 Prob & Bayes'

L05 Binomial

L06 MCMC

L07 Stan

L08 Regression

L09 Rescorla-Wagner

L10 RW in Stan

L11 HBA

L12 Model comparison

L13 Stan debugging

There are indeed lots of @mcmc_stan tutorials already, but from a cognitive modeling perspective, you will find nowhere else that covers @mcmc_stan optimization and debugging.

And don't forget the upcoming @neuromatch Academy! I will also participate.

repo: github.com/lei-zhang/Baye…

Thanks to @ClausLamm @ScanUnit @univienna for the support during this time!

And apparently, open teaching shows commitment to #openscience.

Fin.