#OSINT Tool Tuesday

It’s time for another round of OSINT tools to help you improve your efficiency and uncover new information. A quick overview:

[+] Reversing Information

[+] Automating Searches with #Python

[+] Testing/Using APIs

RT for Reach! 🙏

(1/5) 👇

The first #OSINT tool is called Mitaka. It’s a browser extension that runs reverse searches on multiple data points (IPs, domains, hashes, and more) right from your browser. Right-click the item you want to look up and Mitaka will show you which sources are available. Improve your efficiency!

github.com/ninoseki/mitaka

(2/5) 👇

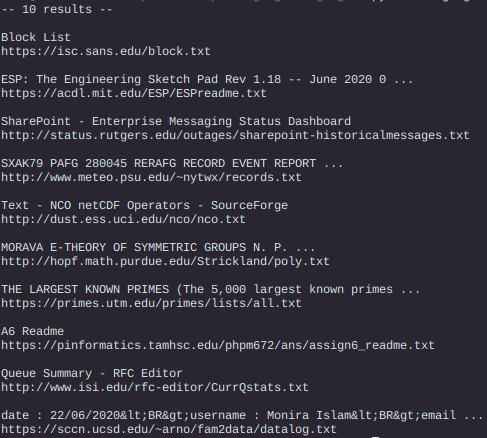

The second #OSINT tool is called Sitedorks from @zarcolio. It’s a #Python tool to automate your Google queries. Enter a query and it’ll open 25+ tabs, checking your parameters across a variety of website categories.

github.com/Zarcolio/sited…

(3/5)☝️👇
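
To make the idea concrete, here is a minimal Python sketch of the general technique: turning one query into several `site:`-restricted Google searches. It is not Sitedorks' actual code. The category list, `build_dork_urls`, and `open_in_tabs` are hypothetical names made up for illustration.

```python
import webbrowser
from urllib.parse import urlencode

# Hypothetical category-to-site mapping, for illustration only.
SITES_BY_CATEGORY = {
    "code": ["github.com", "gitlab.com"],
    "paste": ["pastebin.com"],
    "social": ["reddit.com", "twitter.com"],
}


def build_dork_urls(query, categories=None):
    """Return one Google search URL per site, restricted with the site: operator."""
    selected = categories or list(SITES_BY_CATEGORY)
    urls = []
    for category in selected:
        for site in SITES_BY_CATEGORY[category]:
            params = urlencode({"q": f"site:{site} {query}"})
            urls.append(f"https://www.google.com/search?{params}")
    return urls


def open_in_tabs(urls):
    """Open each URL in a new tab of the default browser."""
    for url in urls:
        webbrowser.open_new_tab(url)


if __name__ == "__main__":
    # Print instead of opening tabs so the sketch is safe to run anywhere.
    for url in build_dork_urls('"acme corp"', categories=["code"]):
        print(url)
```

Call `open_in_tabs(build_dork_urls(...))` to get the many-tabs behavior. Keeping URL building separate from tab opening lets you review the queries first.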

The third #OSINT tool is @getpostman. I recently ran a poll, and 46% of the OSINT professionals who responded aren’t using or testing APIs in their workflow. Postman has streamlined how I test and manage APIs. Start by importing a curl command from anywhere.

postman.com

(4/5) ☝️👇
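
For a feel of what importing a curl command involves, here is a rough Python sketch that breaks a simple curl command into method, URL, headers, and body. It is not how Postman does it. `parse_curl` is a hypothetical helper that only understands a small subset of curl flags, and the example URL is a placeholder.

```python
import shlex


def parse_curl(command):
    """Parse a small subset of curl syntax: URL, -X/--request, -H/--header, -d/--data."""
    tokens = shlex.split(command)
    if not tokens or tokens[0] != "curl":
        raise ValueError("expected a command starting with 'curl'")

    request = {"method": None, "url": None, "headers": {}, "data": None}
    i = 1
    while i < len(tokens):
        token = tokens[i]
        if token in ("-X", "--request"):
            request["method"] = tokens[i + 1]
            i += 2
        elif token in ("-H", "--header"):
            name, _, value = tokens[i + 1].partition(":")
            request["headers"][name.strip()] = value.strip()
            i += 2
        elif token in ("-d", "--data"):
            request["data"] = tokens[i + 1]
            i += 2
        elif token.startswith("-"):
            # Assumption: any other flag takes no value; this sketch skips it.
            i += 1
        else:
            request["url"] = token
            i += 1

    # Like curl, default to POST when a body is present, otherwise GET.
    if request["method"] is None:
        request["method"] = "POST" if request["data"] is not None else "GET"
    return request


if __name__ == "__main__":
    example = "curl -H 'Accept: application/json' 'https://api.example.com/v1/search?q=osint'"
    print(parse_curl(example))
```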

Remember #OSINT != tools. Tools help you plan and collect data, but the end result of that tool is not OSINT. You have to analyze, receive feedback, refine, and produce a final, actionable product of value before you can call it intelligence.

Thanks for reading!

(5/5)☝️

As a quick bonus, my #OSINT bookmarklet Forage now checks Reddit and YouTube after extracting the username from the URL bar of most popular social media platforms. Give it a spin and let me know what you think.

github.com/jakecreps/fora…
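
The username pivot can be sketched in a few lines of Python. This is not Forage's actual code, and Forage itself is a bookmarklet that runs in the browser. The sketch assumes the username is the first path segment of the profile URL, which holds for many platforms but not all. `extract_username` and `pivot_urls` are hypothetical names.

```python
from urllib.parse import urlsplit


def extract_username(profile_url):
    """Return the first path segment of a profile URL, without a leading '@'.

    Assumption: the platform puts the username first in the path,
    e.g. https://twitter.com/<username>. Returns None if there is no path.
    """
    segments = [s for s in urlsplit(profile_url).path.split("/") if s]
    if not segments:
        return None
    return segments[0].lstrip("@")


def pivot_urls(username):
    """Build Reddit and YouTube profile URLs to check for the same username."""
    return {
        "reddit": f"https://www.reddit.com/user/{username}",
        "youtube": f"https://www.youtube.com/@{username}",
    }


if __name__ == "__main__":
    name = extract_username("https://twitter.com/jakecreps")
    if name:
        for platform, url in pivot_urls(name).items():
            print(platform, url)
```

A matching username on another platform is only a lead, not confirmation that the same person owns both accounts.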
