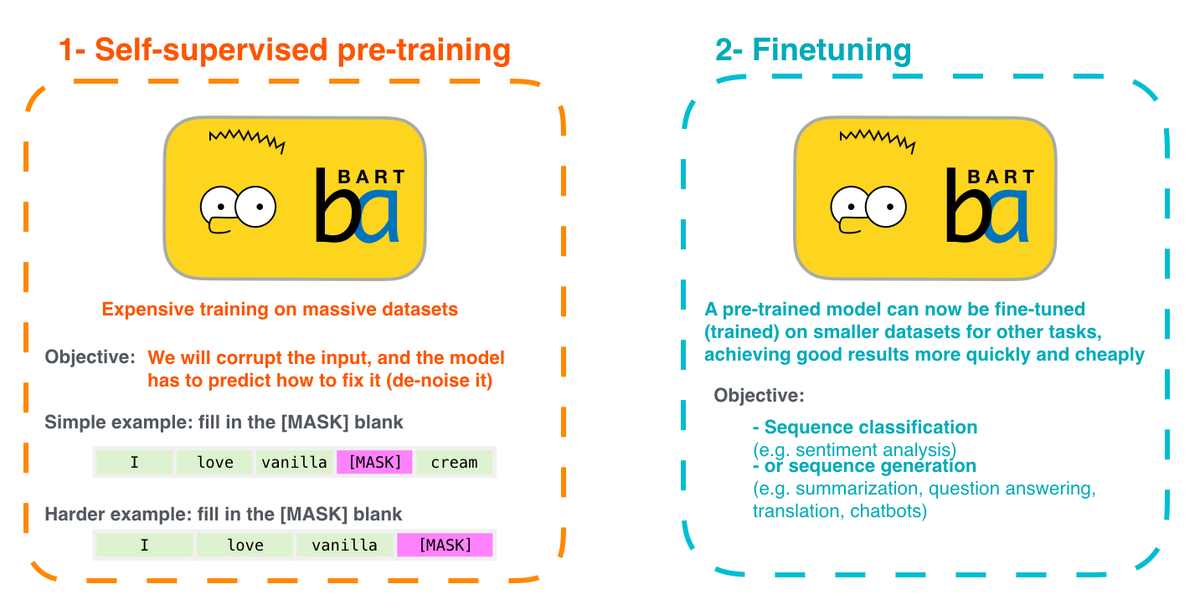

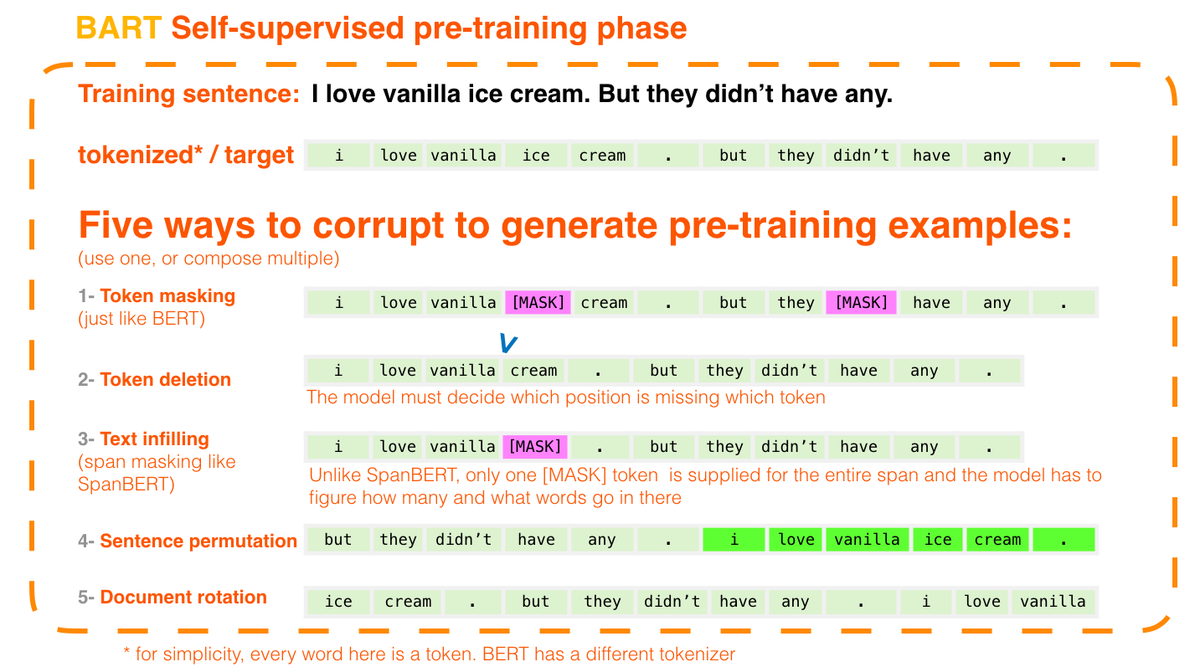

The covariance matrix is an essential tool for analyzing relationships in data. In numpy, you can use the np.cov() function to calculate it (numpy.org/doc/stable/ref…).
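A minimal sketch of the call, assuming a tiny made-up dataset: the first row uses the values 1 and 4 that appear later in the thread, and the second row is hypothetical, chosen only so the covariance comes out negative.

```python
import numpy as np

# Hypothetical data: each ROW is one variable, each column is one observation.
data = np.array([[1, 4],
                 [3, 2]])

# By default np.cov treats rows as variables (rowvar=True)
# and divides by N - 1 (sample covariance).
cov = np.cov(data)
print(cov)
# [[ 4.5 -1.5]
#  [-1.5  0.5]]
```

The result is square and symmetric: one row and one column per row of the dataset.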

Here's a shot at visualizing the elements of the covariance matrix and what they mean:

1/5

Along the diagonal are the variances of each row. Variance indicates how far the values (1 and 4 in this case) spread from their average.
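To make that diagonal entry concrete, here is a rough by-hand version for the values 1 and 4. It assumes the sample variance (dividing by N - 1), which is what np.cov uses by default; the variable names are just for illustration.

```python
import numpy as np

row = np.array([1, 4])  # the two values mentioned above

mean = row.mean()  # 2.5
# Sum of squared distances from the mean, divided by N - 1.
manual_var = ((row - mean) ** 2).sum() / (len(row) - 1)

print(manual_var)           # 4.5
print(np.var(row, ddof=1))  # 4.5, same calculation via numpy
print(np.cov(row))          # 4.5, np.cov of a single row is just its variance
```

Note that np.var defaults to ddof=0 (dividing by N), so it only matches the diagonal of np.cov when you pass ddof=1.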

2/5

Another variance calculation along the diagonal. You can also calculate it with Wolfram Alpha: wolframalpha.com/input/?i=varia…

3/5

The actual covariance values are off-diagonal.

Covariance indicates the relationship between two lists/sequences/variables. The sign tells you the direction (negative in this case, so when the teal list increases, the pink list decreases).

It's indexed like this.
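A small sketch of the off-diagonal calculation and its indexing. The names teal and pink follow the colors in the thread, but the pink values here are hypothetical, picked so that pink goes down while teal goes up.

```python
import numpy as np

teal = np.array([1, 4])
pink = np.array([3, 2])  # hypothetical values

# Multiply the paired distances from each mean, sum, and divide by N - 1.
manual_cov = ((teal - teal.mean()) * (pink - pink.mean())).sum() / (len(teal) - 1)
print(manual_cov)  # -1.5

cov = np.cov(np.stack([teal, pink]))

# cov[0, 1] and cov[1, 0] both hold the teal/pink covariance.
print(cov[0, 1], cov[1, 0])  # -1.5 -1.5
```

The negative sign is the "teal up, pink down" relationship; the same value shows up twice because the matrix is symmetric.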

4/5

It's easy to confuse what the columns mean in the covariance matrix (especially without labels). Remember, both row and column indices in the covariance matrix correspond to ROWS of the dataset.

Here's how that looks in a 3 by 3 matrix.

Wolfram: wolframalpha.com/input/?i=covar…
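Here is one way to check the row/column rule yourself, using a hypothetical dataset with 3 rows and 4 columns (the numbers are made up for illustration).

```python
import numpy as np

# Hypothetical dataset: 3 variables (rows), 4 observations each (columns).
data = np.array([[1, 2, 3, 4],
                 [2, 4, 6, 9],
                 [9, 7, 4, 1]])

cov = np.cov(data)
print(cov.shape)  # (3, 3): one row AND one column per dataset row

# Entry [i, j] is the covariance between dataset row i and dataset row j.
pair = np.cov(data[0], data[2])  # 2x2 matrix for just rows 0 and 2
print(np.isclose(cov[0, 2], pair[0, 1]))  # True

# If your variables are in columns instead, pass rowvar=False.
print(np.cov(data, rowvar=False).shape)  # (4, 4)
```

The size of the covariance matrix depends only on the number of variables, not on how many observations each one has.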

5/5
