CLIP + StyleGAN + #mylittlepony: a thread 🧵 starting with @ElvisPresley

"A pony that looks like Elvis Presley"

#AI #art #NLP #ML

Halftime shoutout: Props to @l4rz @AydaoAI @pbaylies @Norod78 for ideas and starting notebooks. Go check them out if you find this stuff interesting (signal boost activated).

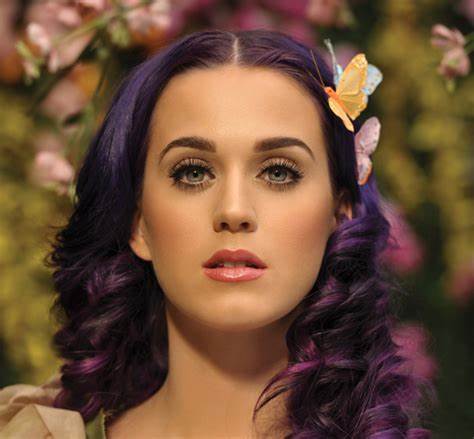

Here's the crazy bit: these were optimized using *only* the text; the photo is just for reference.

Side thought: if this somehow included corn, it could be worked into a @SwiftOnSecurity fanfic
