How we ask questions is important.

Some questions are *standardized* (e.g., surveys, scripts, instructions) and require reading out loud, word for word.

In business, research, law, medicine, etc., do people "just read them out"?

TL;DR: No. And there are consequences.

1. 🧵

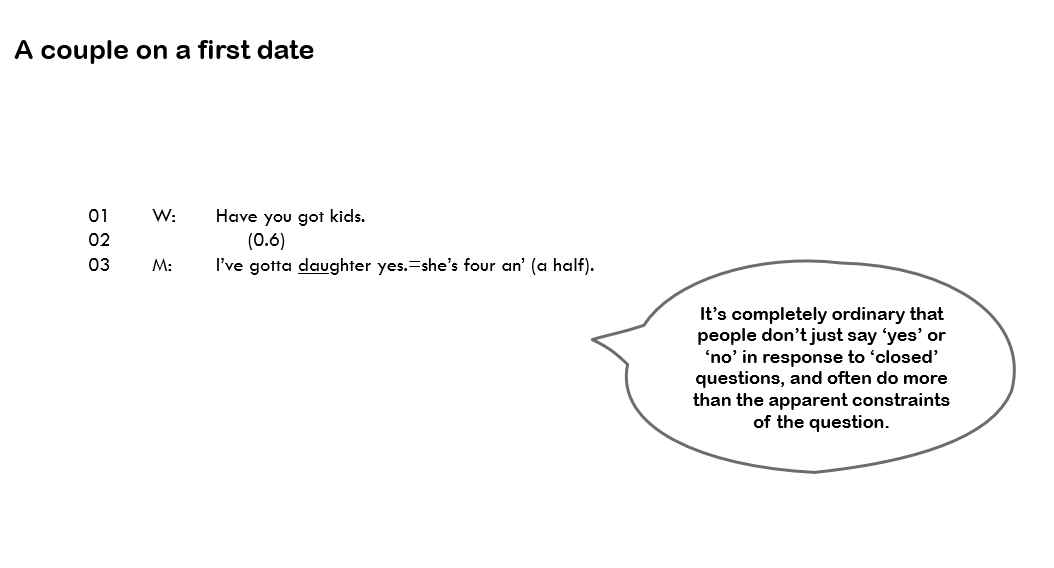

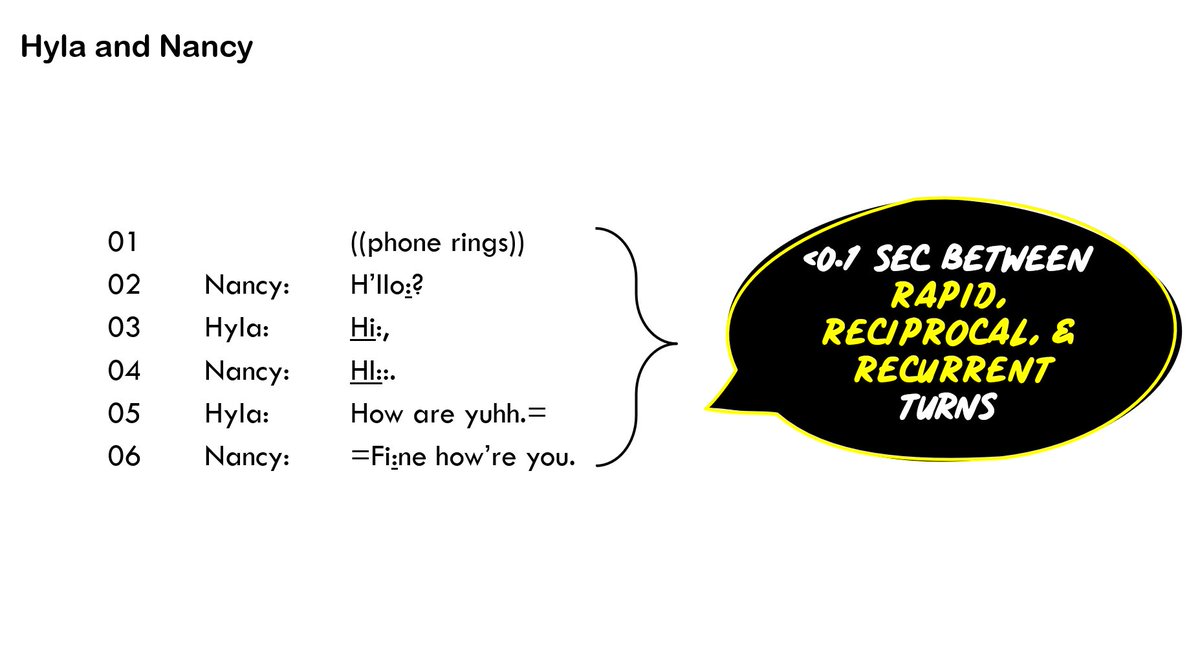

2. We might take it for granted that, when 'standardized', questions will be the same whether spoken or written. The examples in the thread will show they're not.

Without examining actual interaction, we won't know the clinical, diagnostic, legal, etc. consequences either way.

3. Let's start with research published in @rolsi_journal on the significant consequences of the way diagnostic instruments about #QualityOfLife are delivered in talk, compared to how they're written on the page.

4. Maynard and Schaeffer's extensive research on standardized survey tools shows that, and how, for example, "elaborations on answers... significantly affects what the interviewer does to register a (response) code in the computer."

5. The standardized questions on the Quality of Life questionnaire each have THREE response options. The instructions for "reading the items" are to "pay close attention to the exact wording." But items are often reformulated into yes/no questions with a positive tilt.

6. Here's a study by @sue_wilkinson showing the tension between standardization and 'recipient design' in the case of asking about 'ethnicity of caller': "the ethnicity question is asked and responded to, and then transformed into entries on a coding sheet."

7. When interviewing vulnerable victims, written guidance for police ('Achieving Best Evidence') states that, sometimes, witnesses should *demonstrate* their understanding of "truth and lies", but *only* at the start of interviews. @Richardson_Emm et al. find considerable deviation from this guidance.

8. Milgram's classic obedience experiments have generated much debate about ethics since their publication, but Stephen Gibson's modern classic showed that *negotiation* between experimenter and participant led to "radical departures from the standardized experimental procedure."

9. In another classic, Robin Wooffitt showed that experimenters do not simply stick to experimental standardization: the way the "experimenter acknowledges the research participants’ utterances may be significant for the trajectory of the experiment."

10. And here's a paper that compares "formalized communication guidance for interviewing victims, particularly vulnerable adult victims,... to what actually happens in interviews between these victims and police officers." @Richardson_Emm

11. We rarely get to scrutinize the conversations in which standardized questions for research consent are delivered. Sue Speer & I found that, in psychiatric consultations, written questions were delivered without yes/no options and were tilted towards a ‘yes’ response.

12. In @Dr_JoeFord, @RoseMcCabe2 et al.'s analysis of how GPs diagnose depression, with and without the nine-item Patient Health Questionnaire (PHQ-9), they show that the PHQ-9 is not used verbatim, and/but that deviations from the wording work in favour of diagnosis and treatment.

13. Two more studies showing how standardized questions as written differ from the same questions as spoken, and with what consequences: @ClaraIversen (in social work), and @EricaSandlund & @LNyroos (in performance appraisals).

14. Summary:

- Standardization is assumed to happen, but if we don't look we don't know.

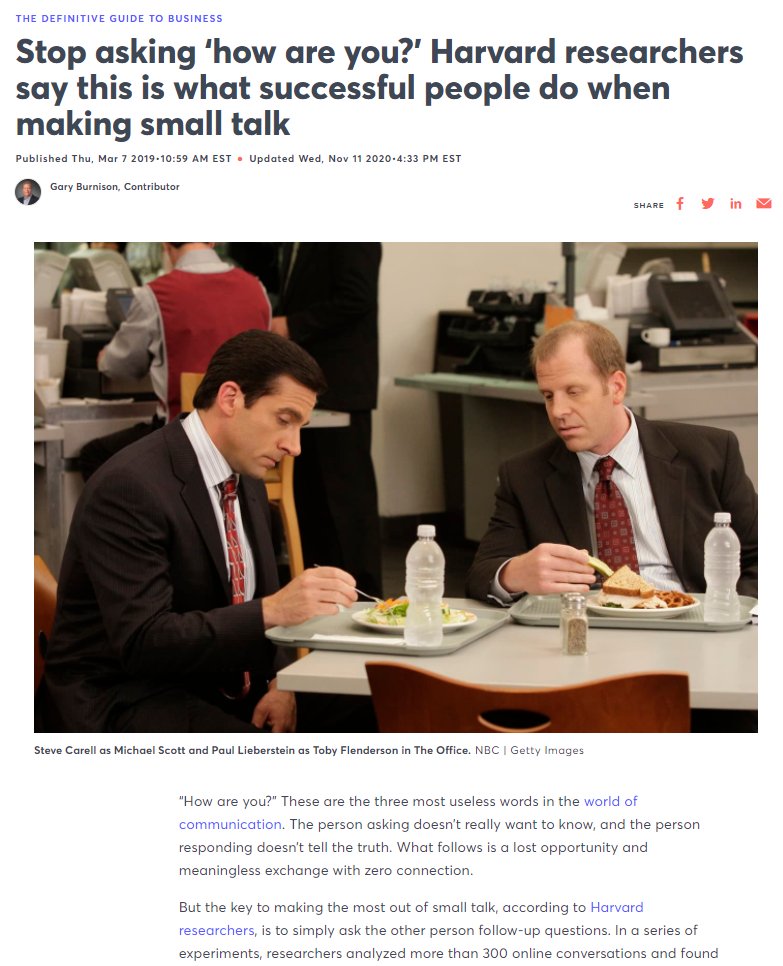

- Some questions are not always well thought through for spoken delivery.

Everything is different when you study 'the world as it happens' (Boden, 1990)

#OpenScience end 🧵

PS. Thank you @Fi_Contextual for kicking off this thread with a question about the potential invalidity of surveys. I'm sure there are many more studies in #EMCA.