1/ THREAD covering a #NeuroRights gathering co-sponsored by @FBRealityLabs & @Columbia_NTC's @neuro_rights called "Noninvasive Neural Interfaces: Ethical Considerations Conference."

They'll be looking at the ethical implications of XR technologies from a neuroscience POV.

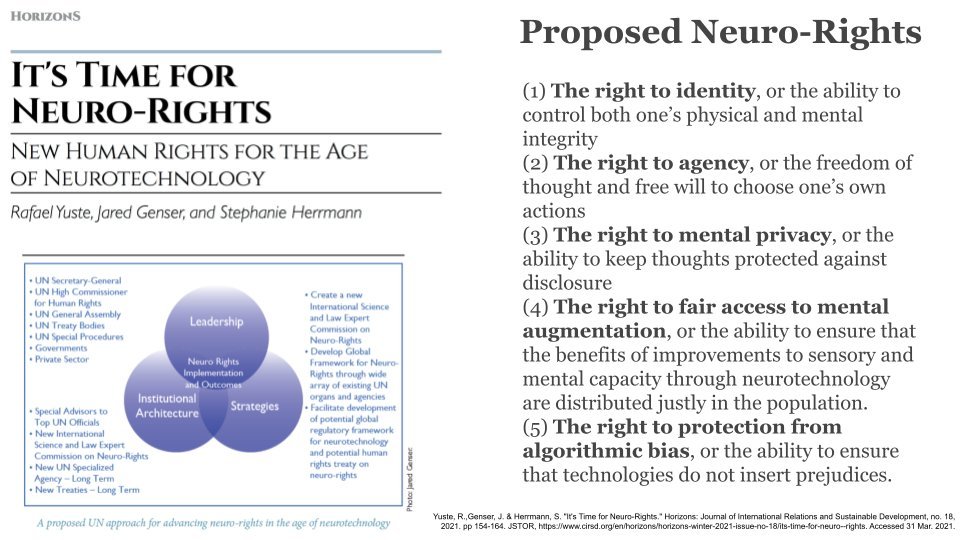

2/ Here's the #NeuroEthics Human Rights Framework by @yusterafa, @JaredGenser, & @SRHerrm published in March:

https://twitter.com/kentbye/status/1377291980632289281

3/ The @FBRealityLabs Co-organizers are Stephanie Naufel (@snaufel) & Carlos Hernández (@cxhrndz)

I'm really glad to see Facebook catalyzing these conversations between ethicists, academics, and the bleeding edge of industry.

Link to the Schedule:

ntc.columbia.edu/wp-content/upl…

4/ Opening keynote from @carmeartigas, Government of Spain, Secretary of State for AI, talking about their approach to regulation & digital rights.

How do we preserve right to Privacy, Identity, Speech?

Spain is the first country to include a right not to be discriminated against by AI.

5/ I believe @carmeartigas covered the Barcelona Charter of Citizens' Rights in the Digital Era:

digitalrightsbarcelona.org/la-carta/?lang…

Includes a number of digital rights like digital identity, intimacy, self-determination, security of data, psychological integrity, etc.

6/ Pablo Quintanilla works on Responsible Innovation at @FBRealityLabs.

Says RI is a skillset & mindset that needs to be taught at all levels to anticipate & mitigate harm.

Led future foresight workshops with neural interface teams on the implications of using neural data.

7/ Quintanilla suggests consulting experts early & listening to the lived experiences of employees.

Held a Multi-Stakeholder Immersion roundtable discussion featuring neuroscience, anthropology, law, & civil rights to navigate different perspectives.

Need to explain science to stakeholders.

8/ Neural interfaces are progressing so quickly that tech companies have a responsibility to educate stakeholders so they can recognize how neural technologies have advanced & some of the ethical implications.

9/ Quintanilla's Four Responsible Innovation steps:

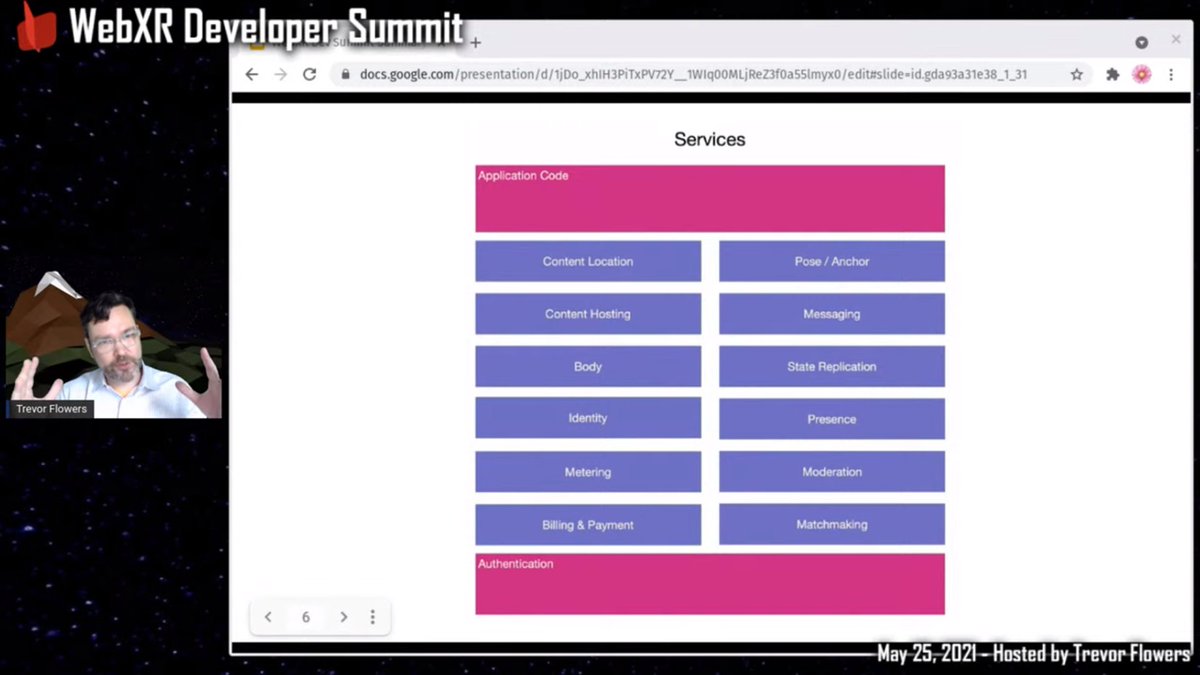

1. Build foresight muscles

2. Consult diverse perspectives early & often

3. Explain science & involve the public

4. Standardize & embed a decision-making framework into the research, design, & development phases

10/ Me: I'm glad to see @FBRealityLabs give a bit more of a nuanced take on Responsible Innovation than their four RI principles, which seem aimed more at the public than at internal use.

Hear more of @AnthroPunk & @CatherineFlick's critiques 👇

https://twitter.com/kentbye/status/1388329509372391424

11/ Stephanie Naufel (@snaufel) talking about @FBRealityLabs' "Ethical engagement in early-stage BCI [Brain-Computer Interface] Research."

They're focusing primarily on optical approaches to BCI like Functional near-infrared spectroscopy (fNIRS).

Talking Industry-Academic collabs

12/ @snaufel announced a new @FBRealityLabs RFP: "Request for proposals on engineering approaches to responsible neural interface design"

Deadline July 14, 2021

research.fb.com/programs/resea…

She then shared quotes from other researchers who weren't present today.

13/ Jack Gallant, (UC Berkeley) @gallantlab

Neuroscience capacity is limited by brain measurement, not computation.

Anything in working memory brain circuits is potentially decodable.

We don't yet know how long-term memory is encoded.

Brain reading easier than brain writing.

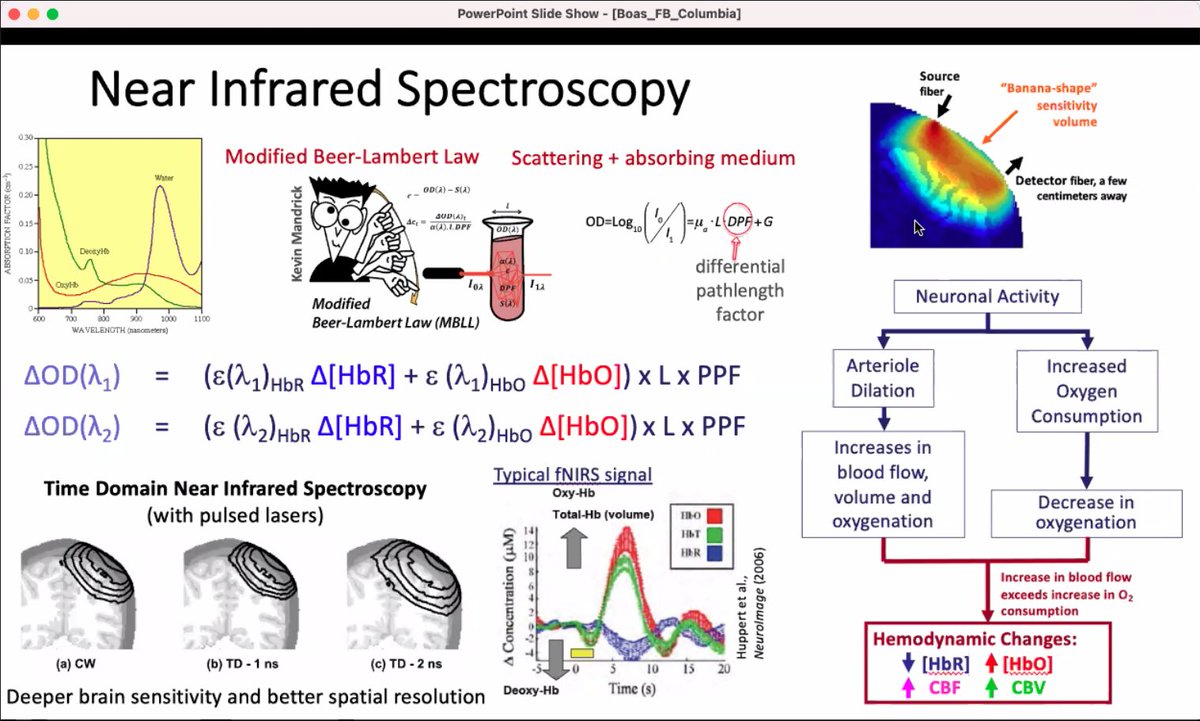

14/ David Boas (Boston U), @BoasDavid

Doing a deep dive into the scientific foundations of Functional Near Infrared Spectroscopy & Neuroimaging in Everyday World Settings.

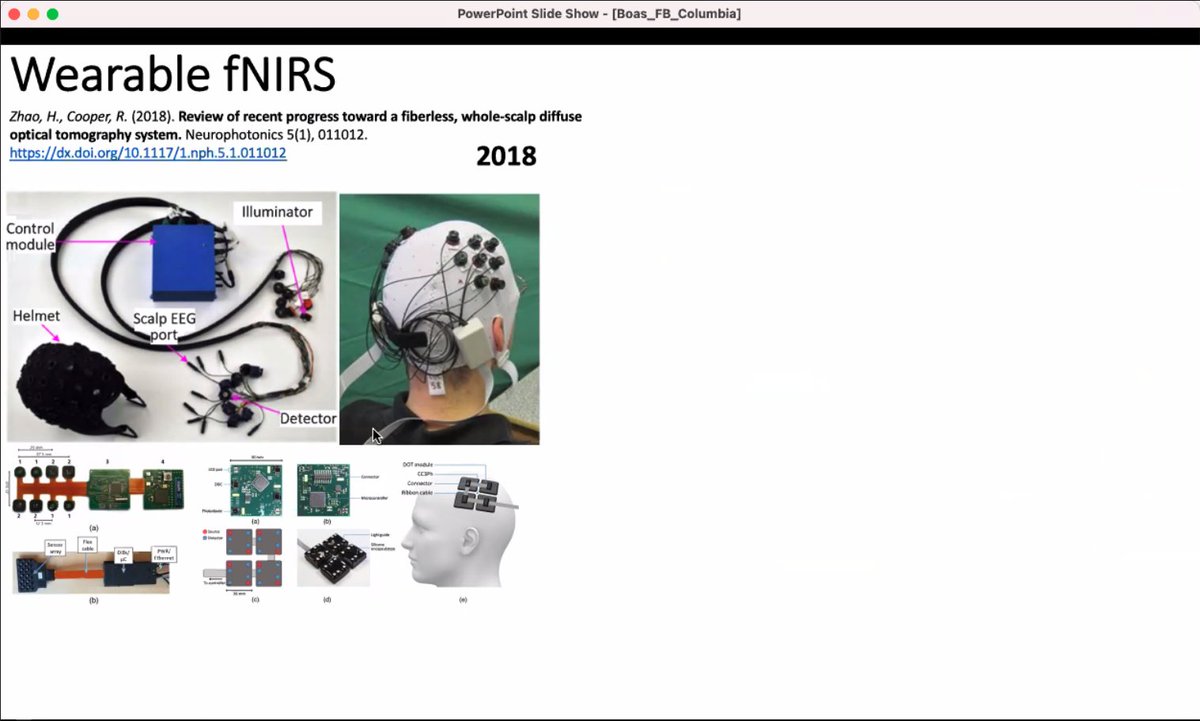

15/ One last slide from @BoasDavid covering the latest research on optical brain imaging technologies

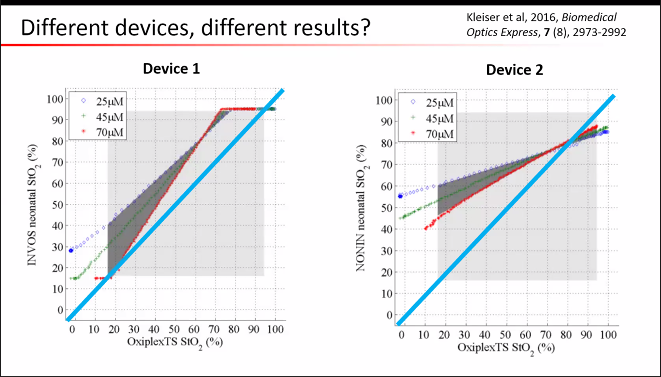

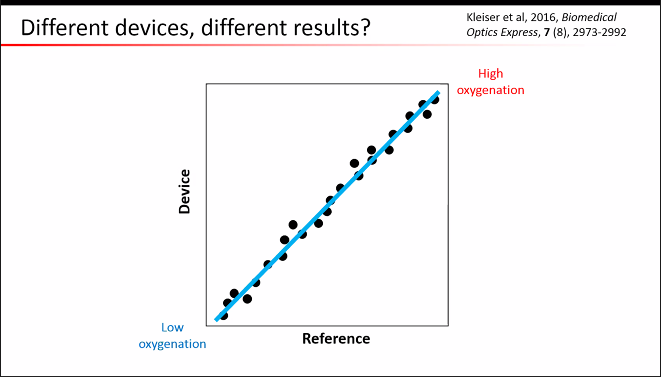

16/ Felix Scholkmann (U Zurich), @fscholkmann

More on optical neuroimaging, including Near-Infrared Spectroscopy-Based Cerebral Oximetry (NIRSCO).

18/ Philipp Kellmeyer (FRIAS), @neuroastics is now leading a discussion on the ethical implications of BCI.

@boasdavid: In the next 5 years, we'll be able to measure the whole head & brain, increase the bitrate, & combine it with EEG. Open question: what info is gained from sensor fusion?

19/ Electrocorticography (ECoG) is an invasive technique of putting electrodes on the brain, and @boasdavid speculated as to whether it'll ever be possible to do a non-invasive, optical ECoG.

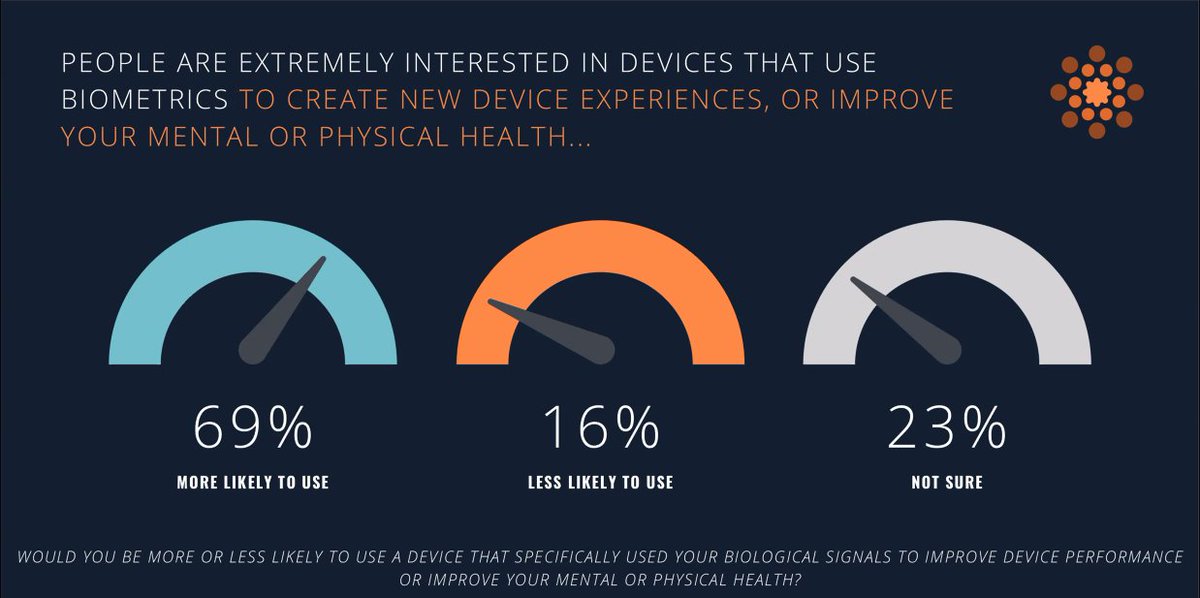

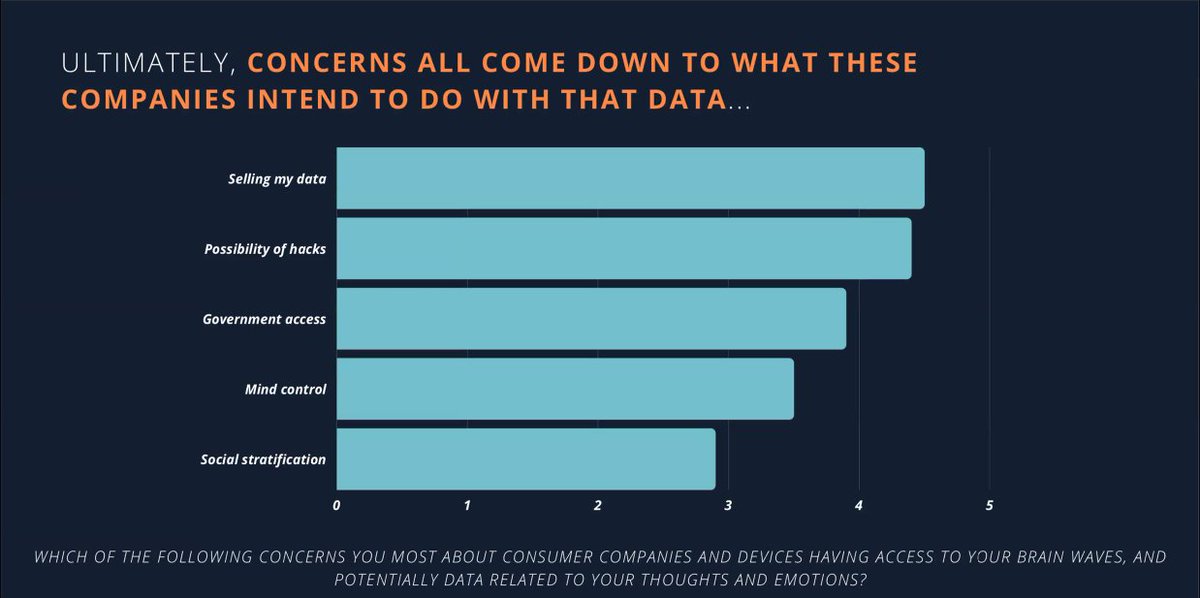

20/ @fscholkmann says some of the most promising applications of neuroimaging will be in Neurocritical Care.

@gallantlab says some brain measurement technologies may conflict with each other when combined.

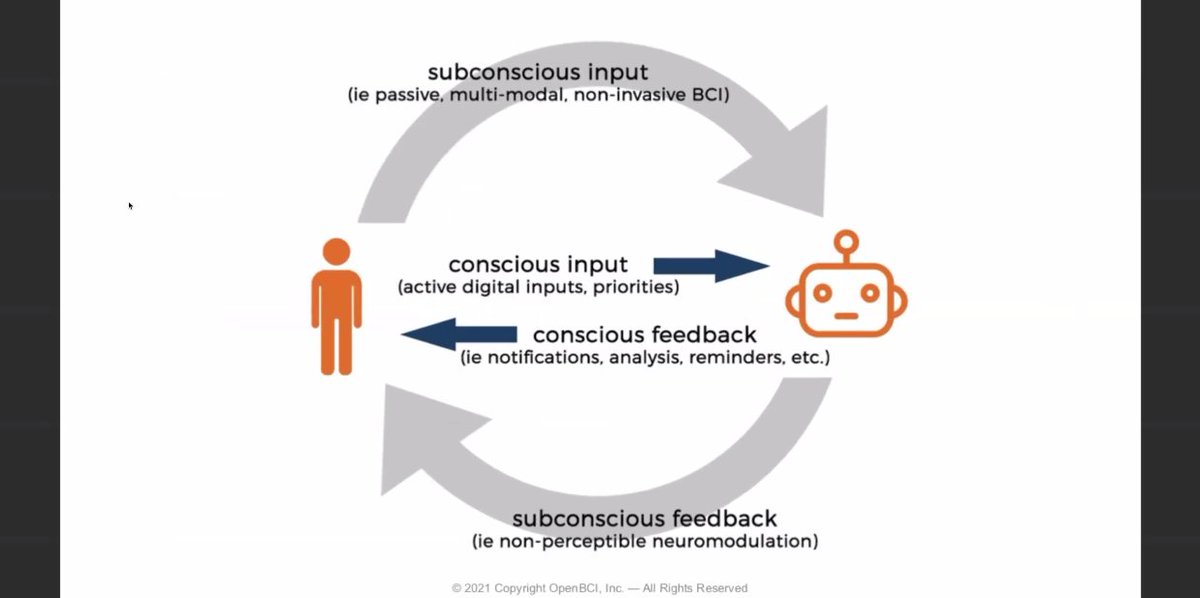

21/ @snaufel says @FBRealityLabs BCI use-case is to convert neural activity to text.

Goal is to be transparent about it.

Ethical considerations she thinks about: Diversity & Inclusion, which is the RFP focus.

Privacy obviously a huge risk.

[Me: Risk to Right to Mental Privacy]

22/ via the Zoom chat is a paper on "Imaging the human hippocampus with optically-pumped magnetoencephalography"

sciencedirect.com/science/articl…

23/ @gallantlab cautioning about the hype vs reality of cognitive neuroscience or BCI claims. Everything in neuroscience is measurement-limited, which limits the usefulness of machine learning & deep learning.

24/ @snaufel says she'd like to include open access provisions in future Open Science Contracts.

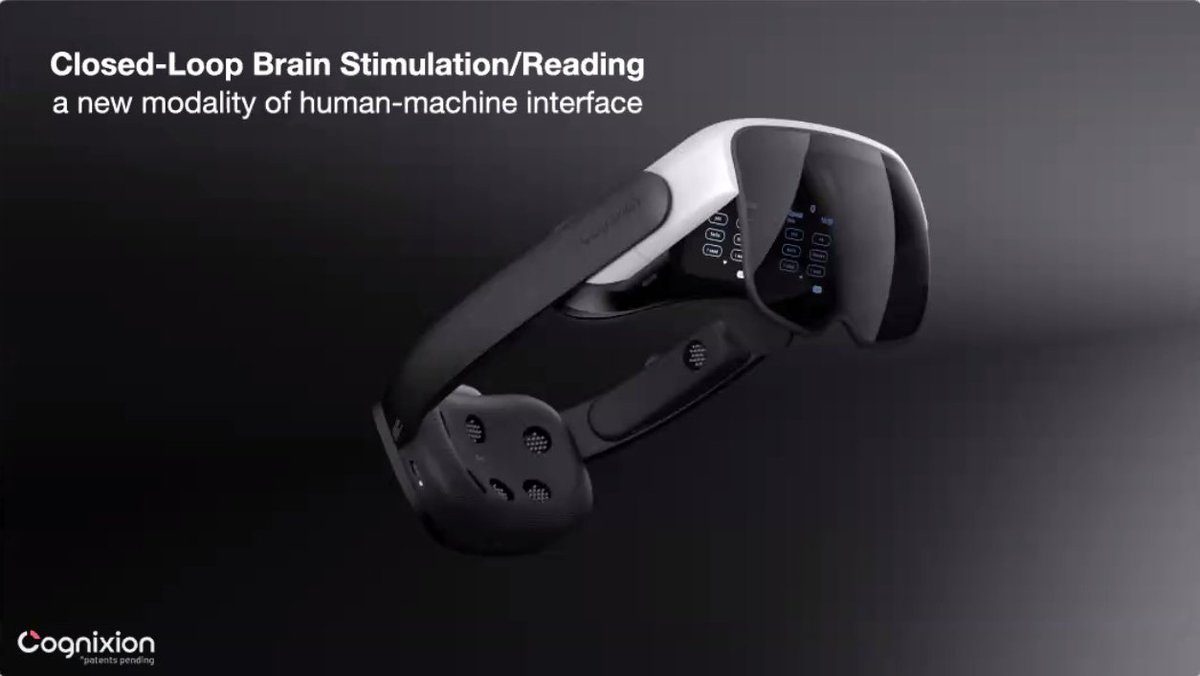

Publications are currency of academia, but not industry.

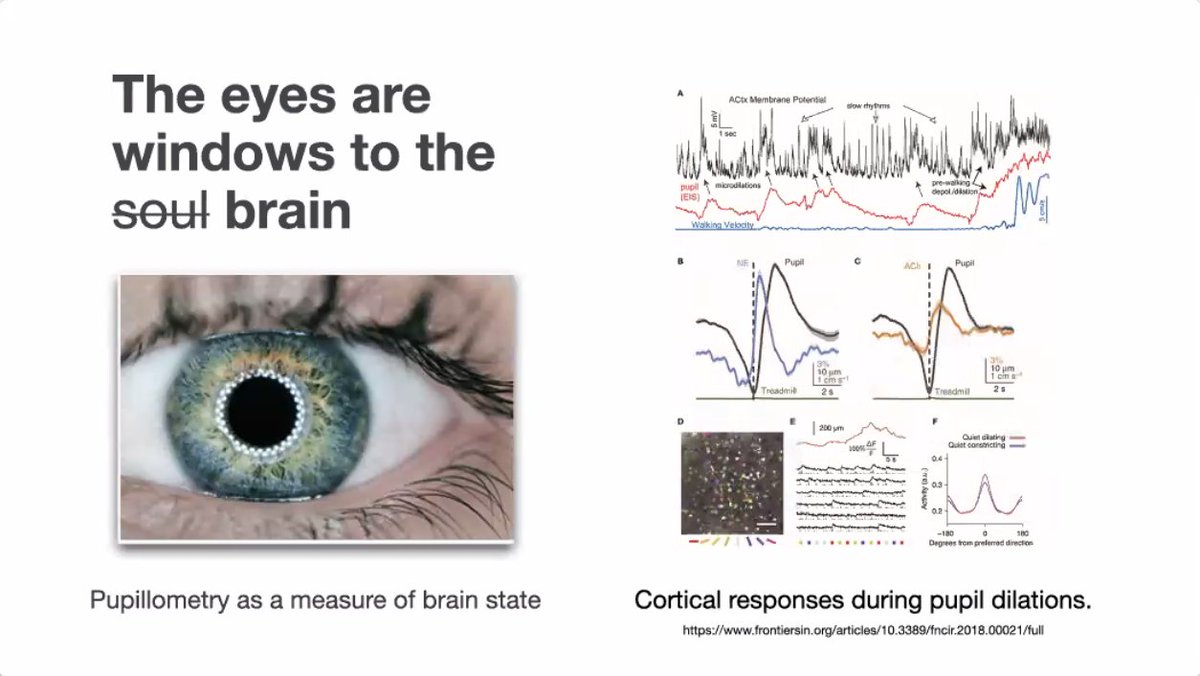

The way to manage hype is by grounding results in science & replication.

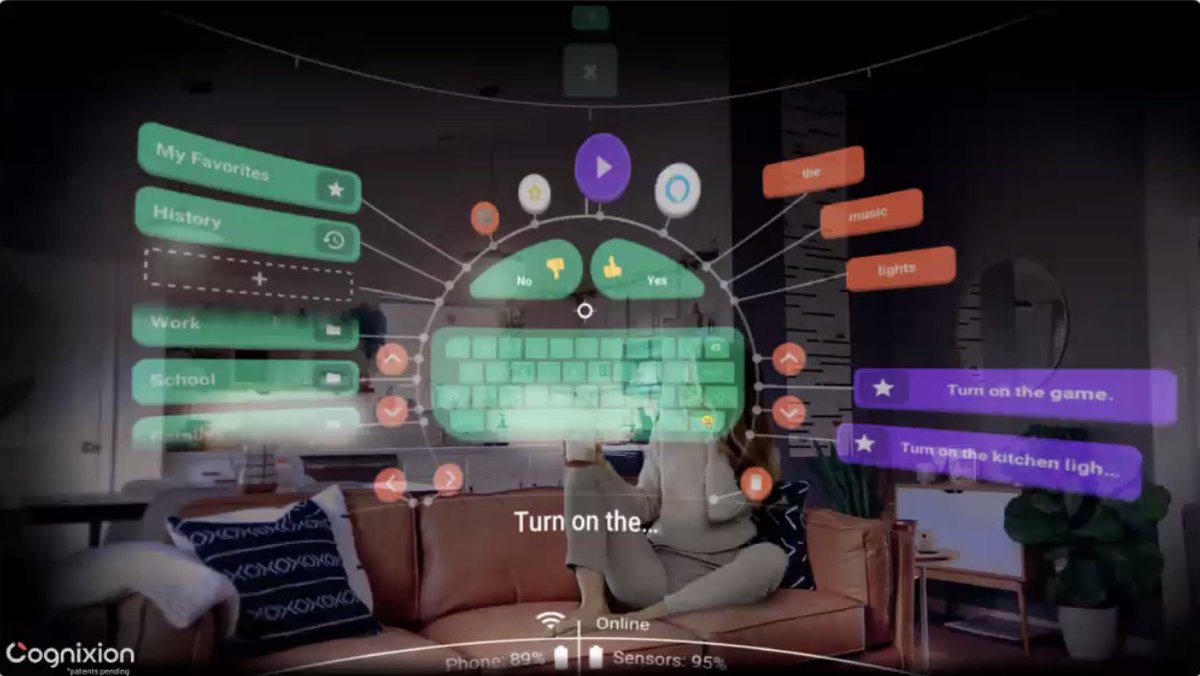

Include an ethics statement that the study was done under IRB approval.

25/ Me: The Right to Mental Privacy seems to be the most threatened right here. If FB can read our thoughts, then how do you weigh the tradeoffs of contextually-appropriate use vs recording data & the security risks of that data getting into the hands of a government or foreign adversary?

26/ @snaufel: FB doesn't have official stance on Neuro-Rights or regulation.

Some companies don't want neuro-regulation.

Her personal opinion is companies should make clear how the data are being used.

Need feedback on federated learning & differential privacy approaches.

27/ Regarding the risks of privacy, @gallantlab says the risks are a lot worse than most people are talking about. There's a lot of personally identifiable information in this brain data, which has big research implications for how that data are treated.

28/ The neuroscientists on this panel are focused on fundamental research aimed at clinical applications, & they're pretty skeptical of consumer uses like mental wellness neurofeedback.

They don't seem to be aware of @FBRealityLabs' BCI or how it could be used in an XR context.

29/ @snaufel says @FBRealityLabs Research is currently targeting the speech motor cortex & that wake words will be really important.

Interested in a federated learning approach that does the ML work on chip or on device, so that the raw data don't need to be sent back to FB servers.

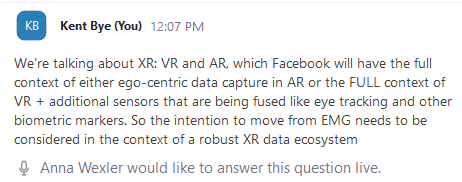
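The on-device approach described above is commonly implemented as federated averaging (FedAvg): each device trains on its own raw data and only updated model weights are sent to the server, which averages them. The sketch below is a minimal toy illustration under loud assumptions: a 1-D linear model stands in for a neural decoder, the per-device data are hypothetical, and nothing here reflects how @FBRealityLabs actually implements it.

```python
"""Toy federated averaging (FedAvg) sketch.

Assumption: a 1-D linear model y = w * x stands in for a neural decoder.
All data below are hypothetical; this is not Facebook Reality Labs' system.
"""
from typing import List, Tuple

Sample = Tuple[float, float]  # (feature, target); never leaves the device


def local_update(w: float, data: List[Sample], lr: float = 0.01, epochs: int = 5) -> float:
    """Run plain gradient descent on one device's private data."""
    for _ in range(epochs):
        # Gradient of mean squared error for the prediction w * x
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_round(w_global: float, devices: List[List[Sample]]) -> float:
    """One FedAvg round: devices train locally and send back only weights."""
    updates = [(local_update(w_global, d), len(d)) for d in devices]
    total = sum(n for _, n in updates)
    # The server sees model weights only, weighted by local dataset size
    return sum(w * n for w, n in updates) / total


if __name__ == "__main__":
    # Hypothetical per-device data generated from y = 3x
    devices = [
        [(1.0, 3.0), (2.0, 6.0)],
        [(0.5, 1.5), (1.5, 4.5), (3.0, 9.0)],
    ]
    w = 0.0
    for _ in range(50):
        w = federated_round(w, devices)
    print(round(w, 3))
```

Only weights leave each device, but shared weights can still leak information about the training data, which is why differential privacy is often layered on top of federated learning.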

30/ @boasdavid claims that what you can get from brain data is not really that different from the type of medical information folks are already revealing on social networks.

Me: This vastly underestimates what will be possible when it's combined w eye tracking & biometrics in XR.

31/ @gallantlab says people have a poor understanding of privacy.

@snaufel has been exploring ethical frameworks, & @yusterafa told her that there are ~80 AI ethics frameworks, & we need to converge on a smaller set of frameworks that work in different use cases.

32/ Overall this first panel was heavy on brain science & light on the ethical implications or frameworks to deal with them.

No human rights experts or neuroethicists were on the panel to articulate some of the deeper concerns or questions about where XR technology is headed.

33/ For example, the discourse around privacy was still centered on identity, but in XR, companies already know who you are. The real privacy challenges are around the real-time biometric inferences that @brittanheller calls biometric psychography:

https://twitter.com/kentbye/status/1380223778827857923

34/ One of the major efforts happening at @FBRealityLabs is the fusion of data sources to gain insight into the user's context towards contextually-aware AI.

At IEEE VR, FBL extrapolated eye gaze via head & hand pose.

They'll be combining all data sources.

https://twitter.com/kentbye/status/1376409069406220290

35/ @yusterafa passed along the Spanish version of the Digital Rights that @carmeartigas was covering:

portal.mineco.gob.es/RecursosArticu…

I think that this is an English translation of the "Charter of Digital Rights" from Spain (3 Dec 2020):

nri.ntc.columbia.edu/sites/default/…

https://twitter.com/kentbye/status/1397575071997198340

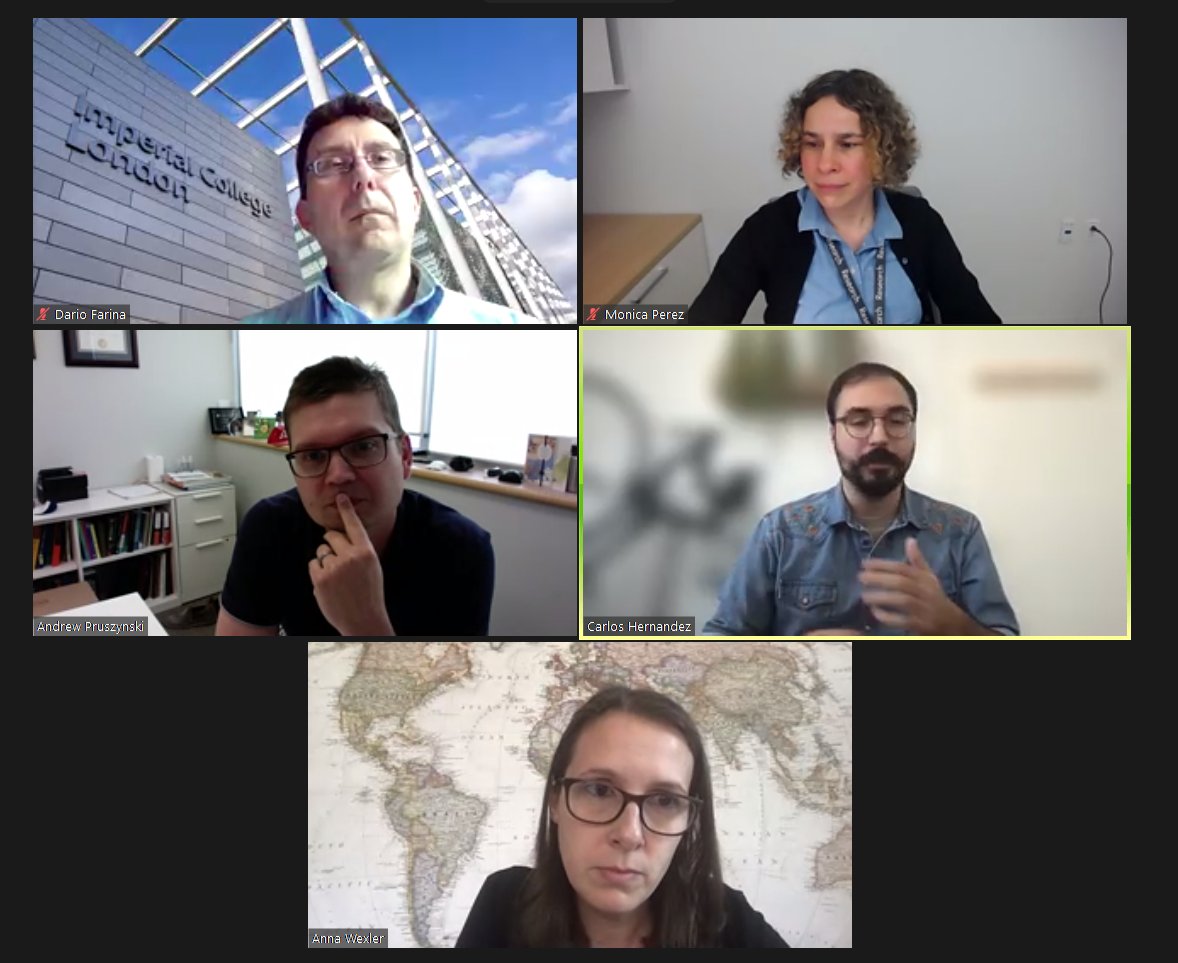

36/ The chair of the afternoon session on EMG, Anna Wexler (UPenn) @anna_wexler, is kicking things off now.

Gives a shout out to @FBRealityLabs for hosting this discussion on ethics.

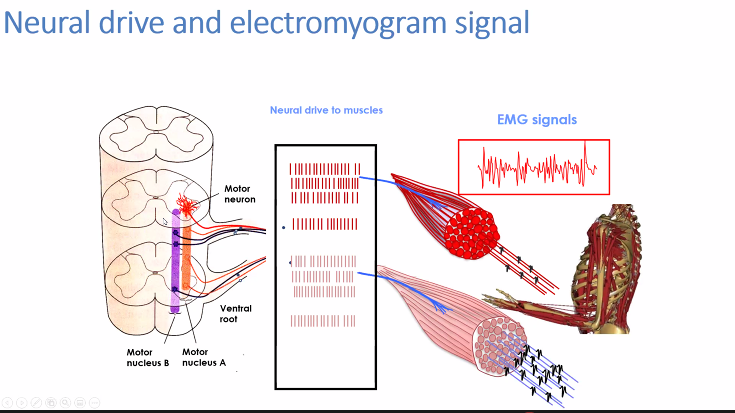

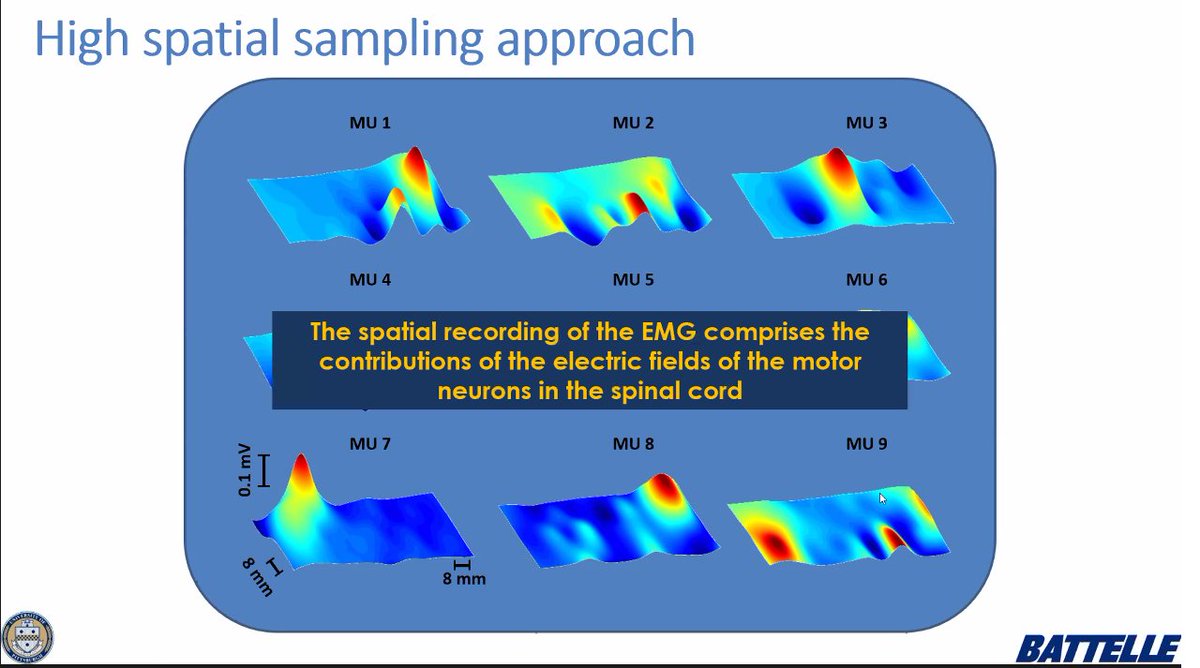

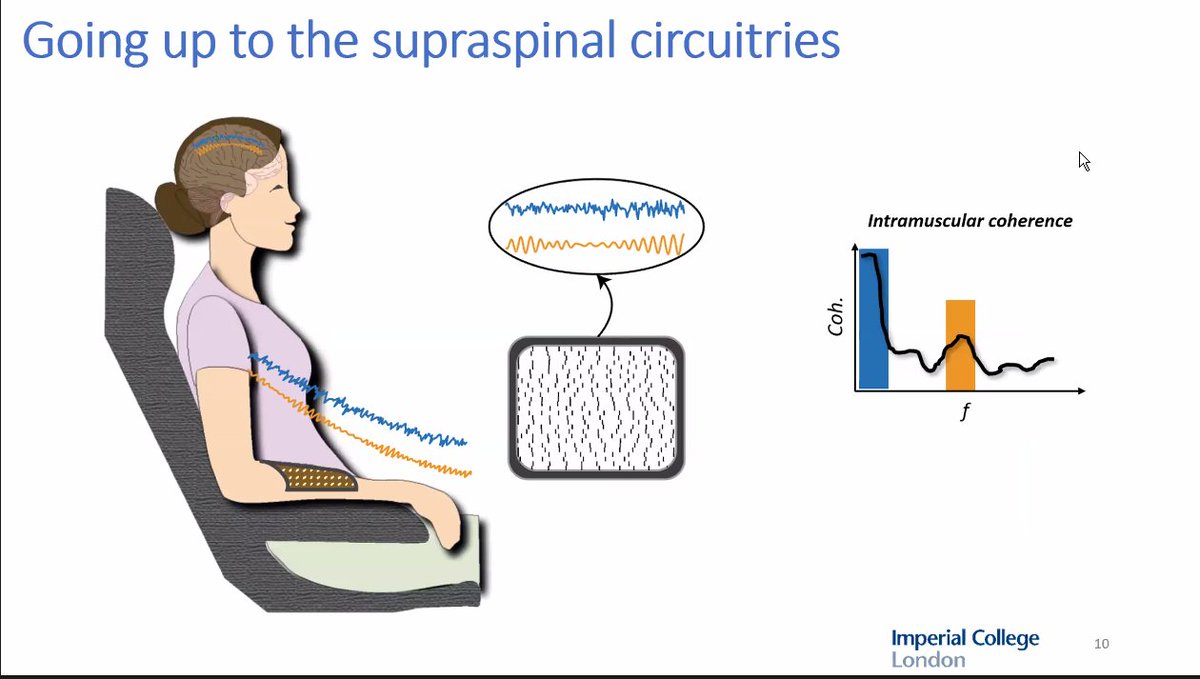

37/ Dario Farina (Imperial College London) @NeuroCon_ICL giving the history & scientific context of EMG in a talk called "About Surface Electromyography: From Muscle Electrophysiology to Neural Recording & Interfacing."

A motor neuron + the muscle fibers it innervates = a motor unit.

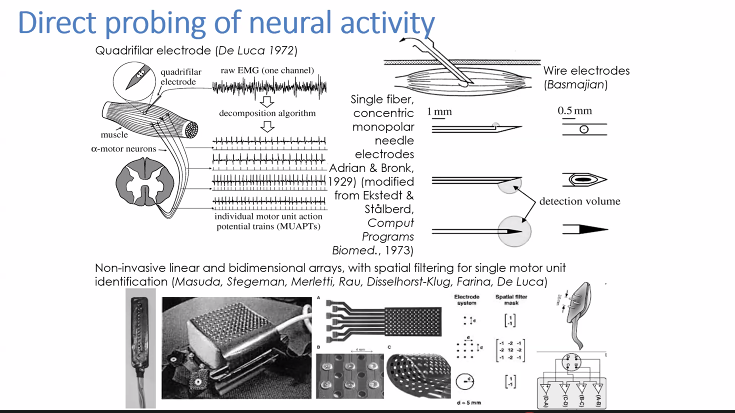

38/ De Luca's 1972 direct probing of neural activity was an EMG breakthrough that's still used today.

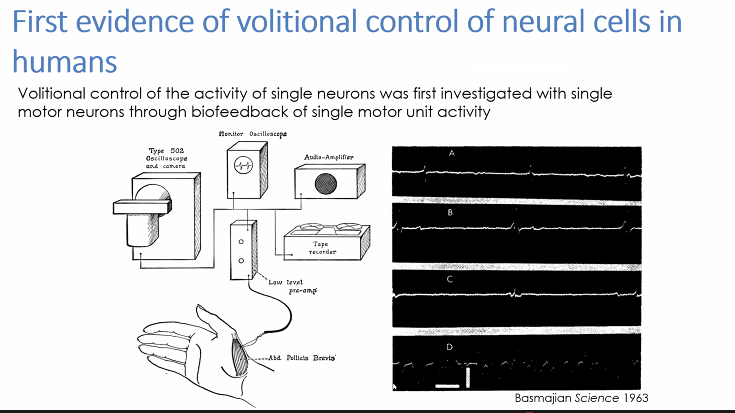

Basmajian's 1963 Science paper found first evidence of volitional control of neural cells in the spinal cord. This was the first neural interface, which was invasive.

Motor Unit electric fields

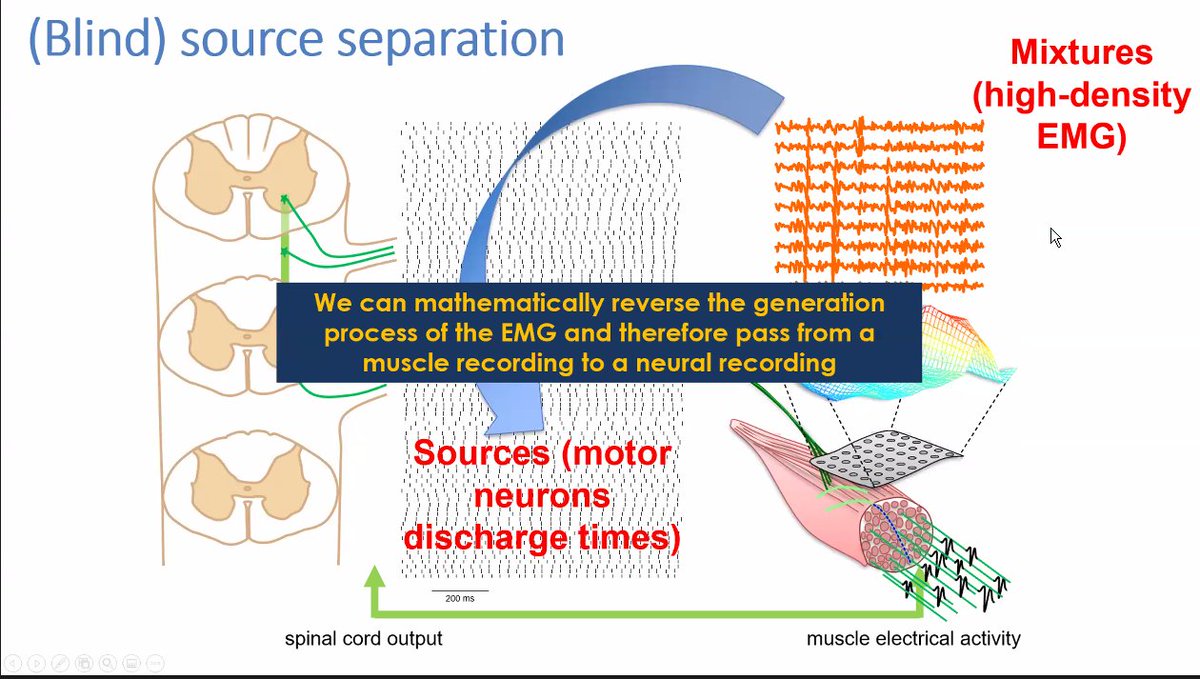

39/ Talking about the mathematics to go from the muscle interface to the neural interface, allowing the jump from the peripheral level to the motor neurons in the spinal cord.

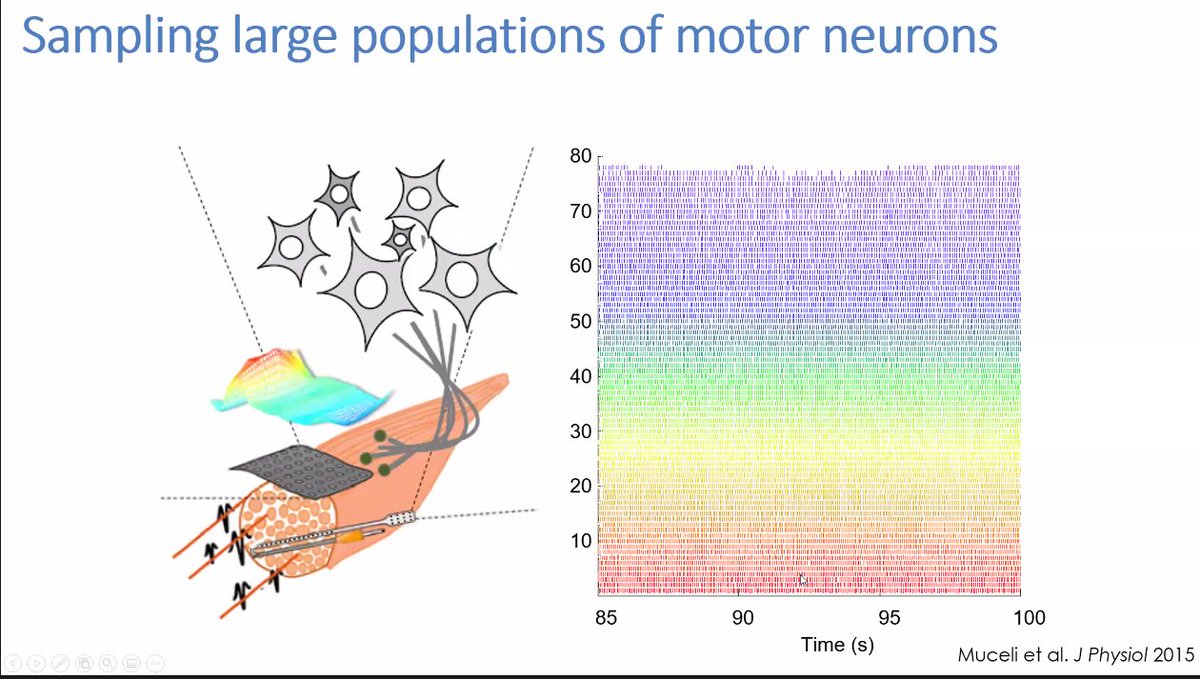

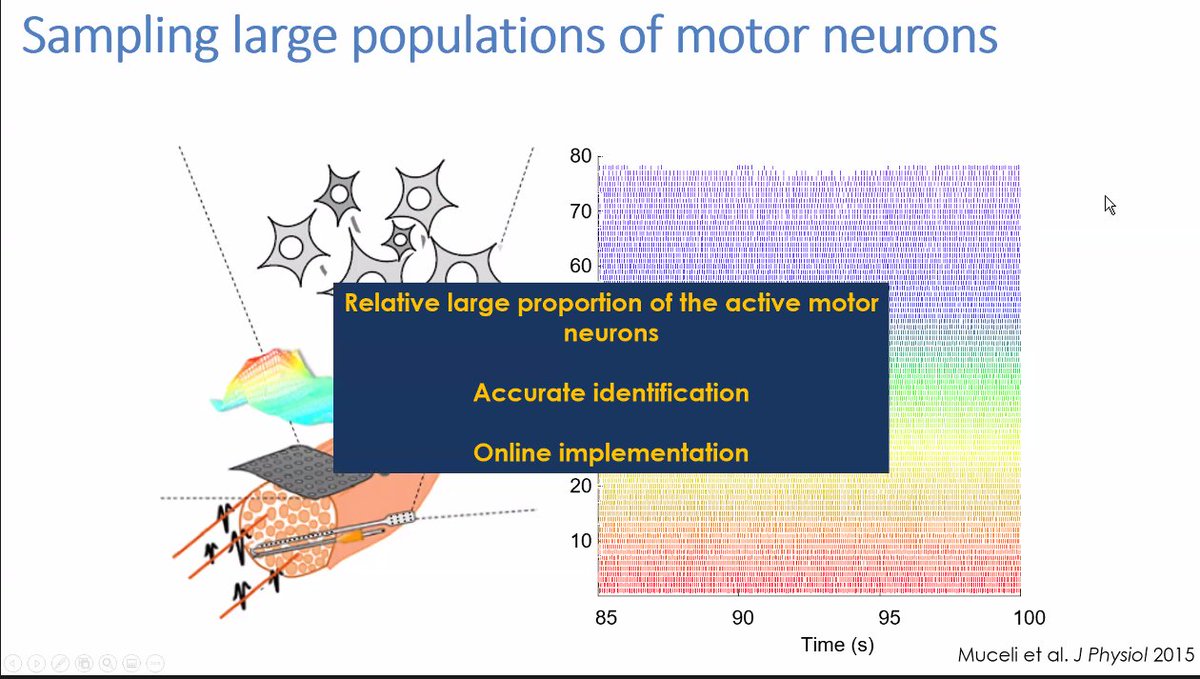

40/ Motor neurons are the only neurons we can directly access non-invasively.

Also able to correlate the motor units with other data input signals.

41/ Digging into some of @NeuroCon_ICL's research on using neurofeedback of neuron activity for neural input devices.

42/ Conclusions: EMG gives access to spinal cord circuits.

Motor neurons give the ultimate neural view into the neural control of movement.

You can have a brain interface with non-invasive EMG.

43/ For more context, I did a deep dive into a lot of the neuroscience of EMG for neural interfaces with @FBRealityLabs's Director of Neuromotor Interfaces @thomasreardon5 in this interview:

https://twitter.com/kentbye/status/1377044447179857920

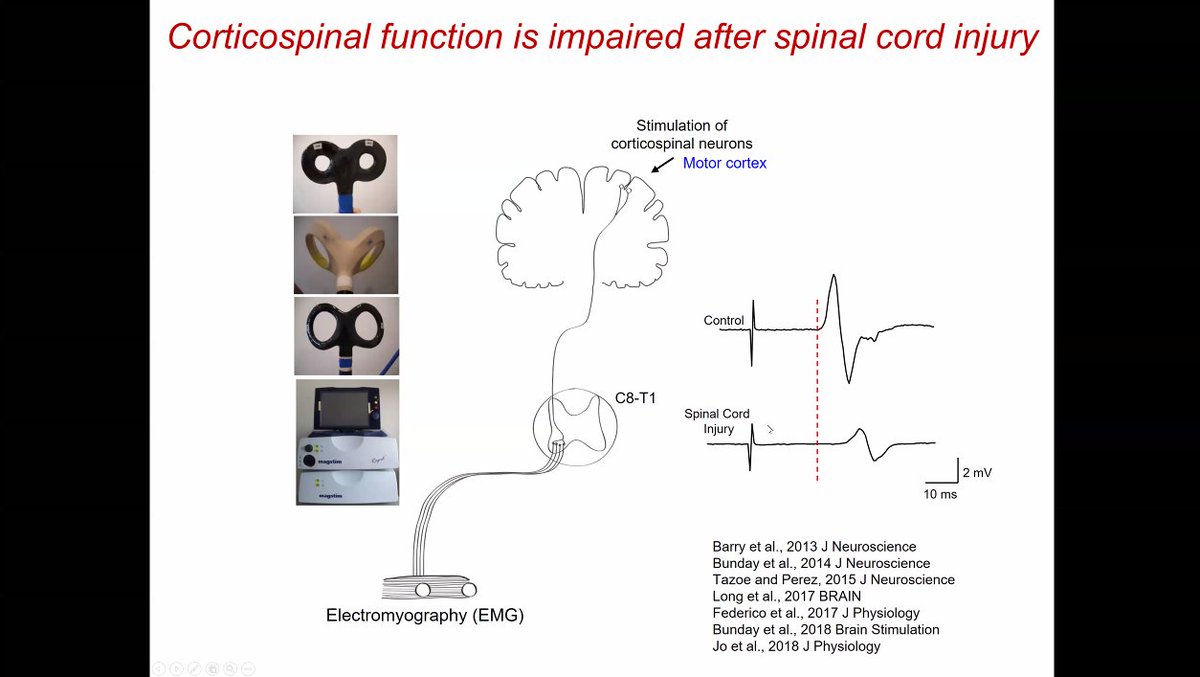

44/ Monica Perez (Shirley Ryan @AbilityLab) now talking about "Central & Peripheral Stimulation in Humans" including:

Motor Evoked Potential (MEP)

Cervicomedullary Motor Evoked Potential (CMEP)

Cervical Root Response

Motor Response

45/ Perez showed a video demonstrating how they're able to stimulate via EMG & trigger different twitch responses. [I will try to track it down later as there was an interesting non-invasive device put onto the head]

Evoking stretch reflexes

46/ EMG can detect symmetries in the context of medical rehabilitation.

38 people with spinal cord injuries studied in "corticospinal-motoneuronal plasticity" for exercise-mediated recovery with EMG stimulation.

EMG stimulation could be critical for neuro-rehabilitation contexts.

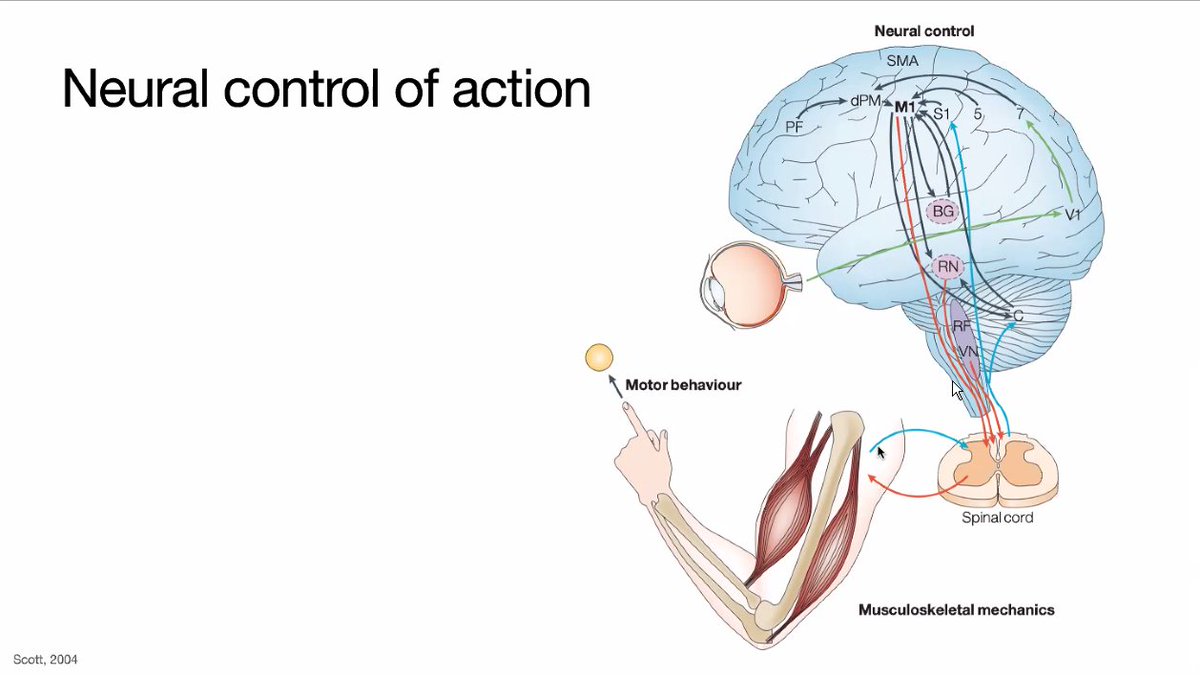

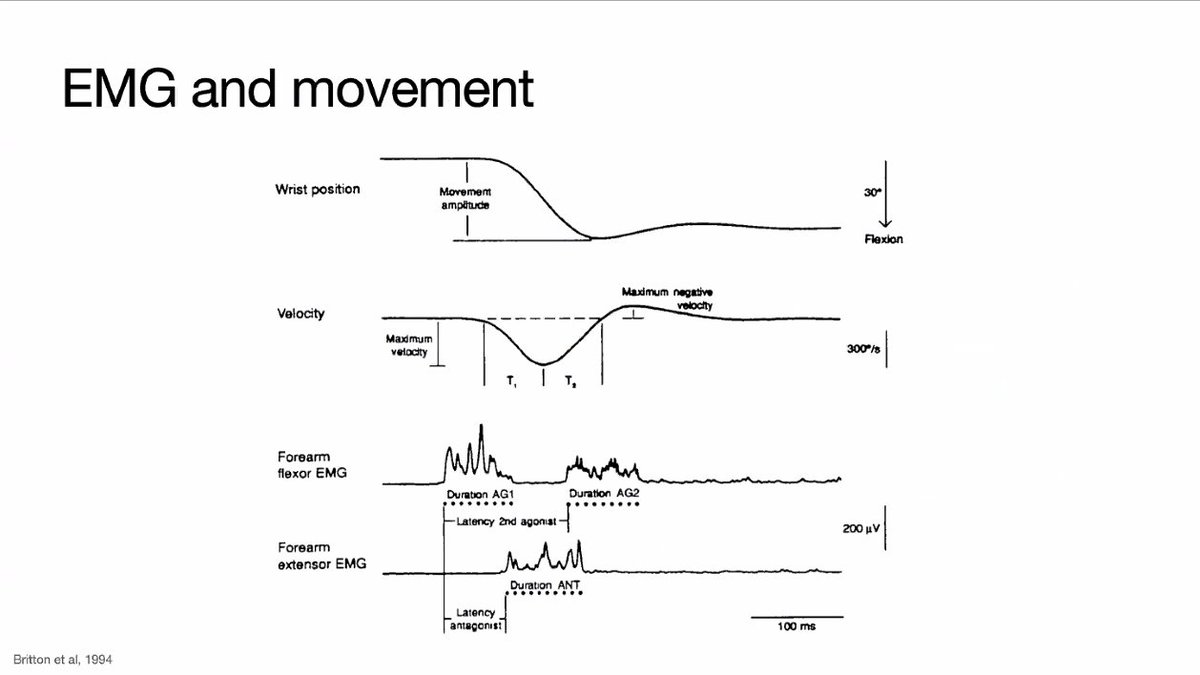

48/ Andrew Pruszynski (UWO) @andpru now talking about "EMG in Action, Seen & Unseen."

Importance of the final common pathway to motor neurons to effect behavior, learn to control the motor units.

EMG is not just about movement, but also can measure isometric muscle force.

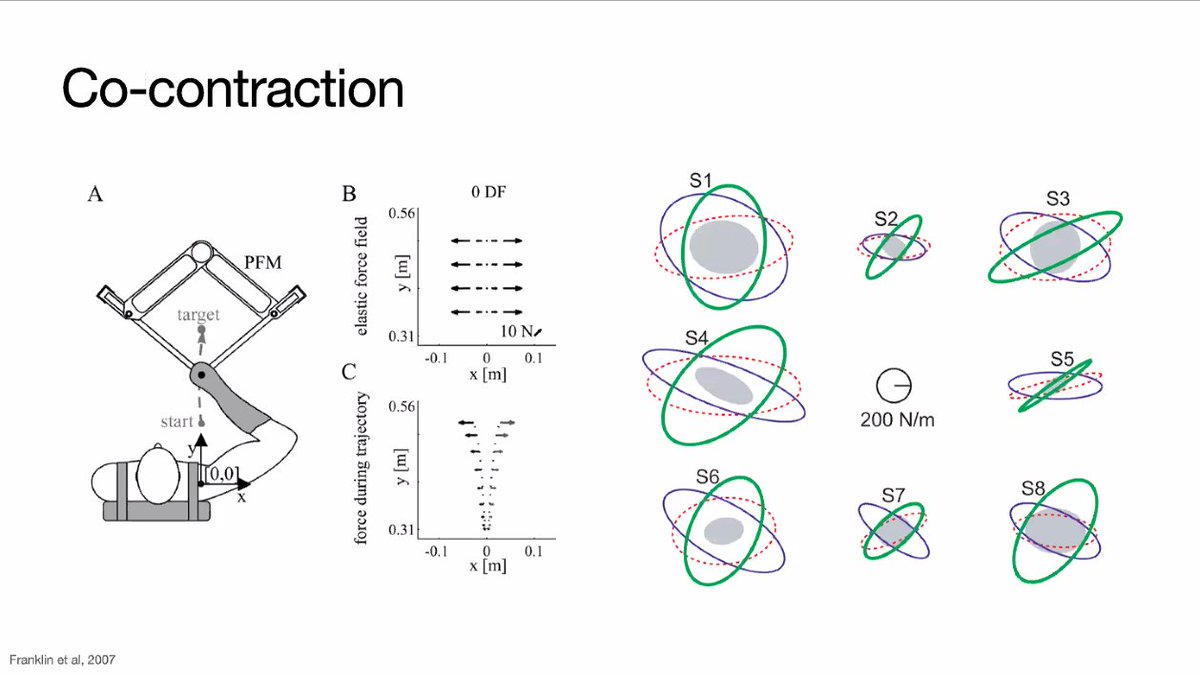

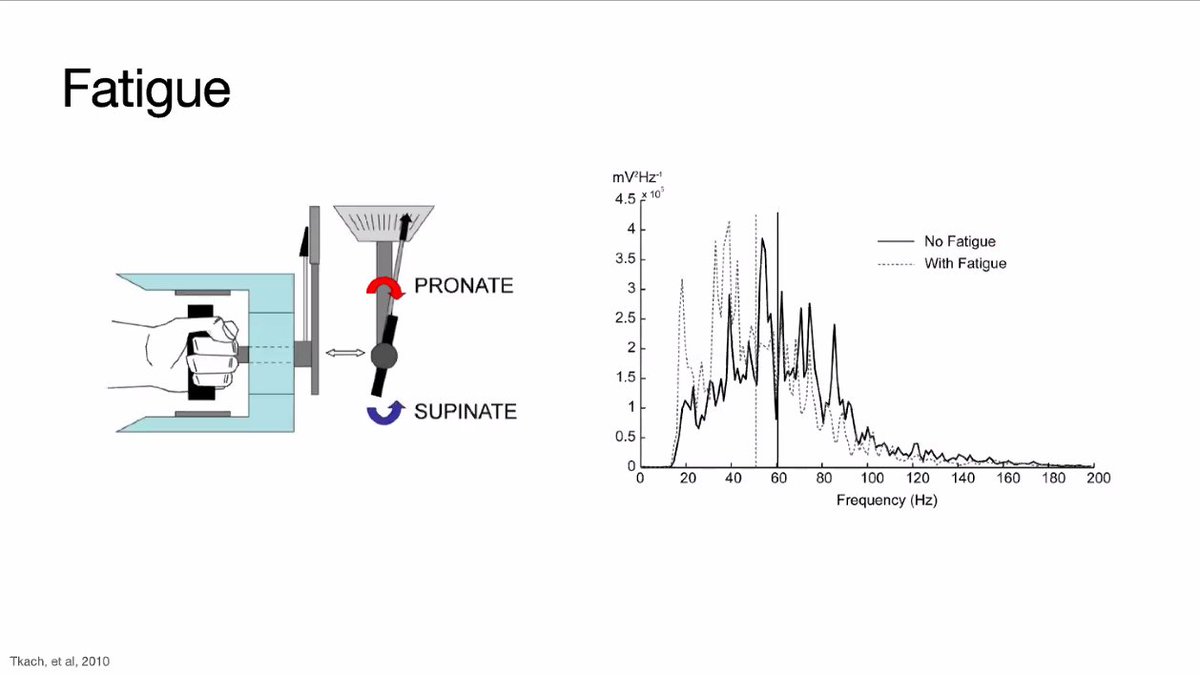

49/ @andpru talking about using EMG to look at co-contraction of muscles as well as to measure muscle fatigue.

EMG could also detect covert attention, i.e., paying attention to things beyond your eye gaze.

52/ Able to detect different gestures, control of a single motor neuron, & the intention to move without any motion.

Neural interfaces are going to be a key part of the future of XR.

53/ Demo of opening menus using low-energy gestures.

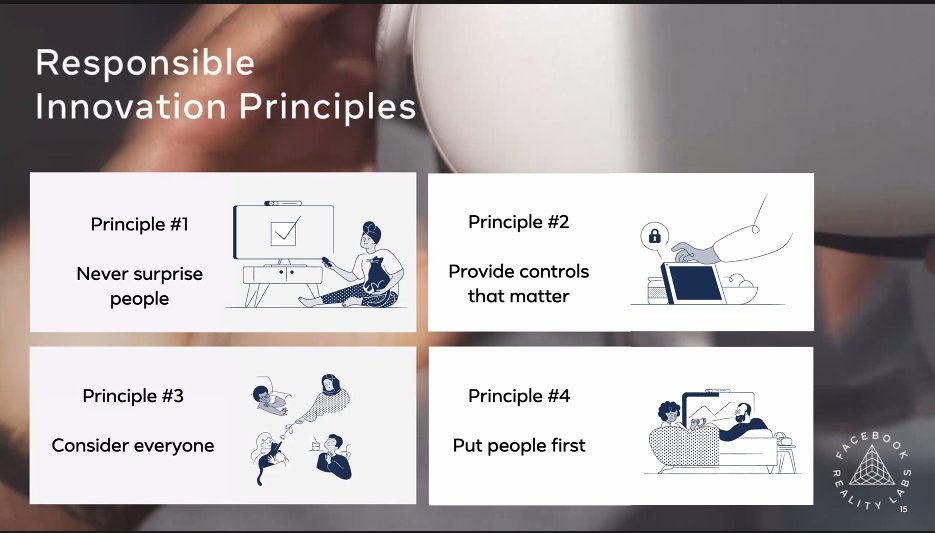

Now talking about @FBRealityLabs's Responsible Innovation Principles:

1. Never Surprise People

2. Provide Controls that Matter

3. Consider Everyone

4. Put People First.

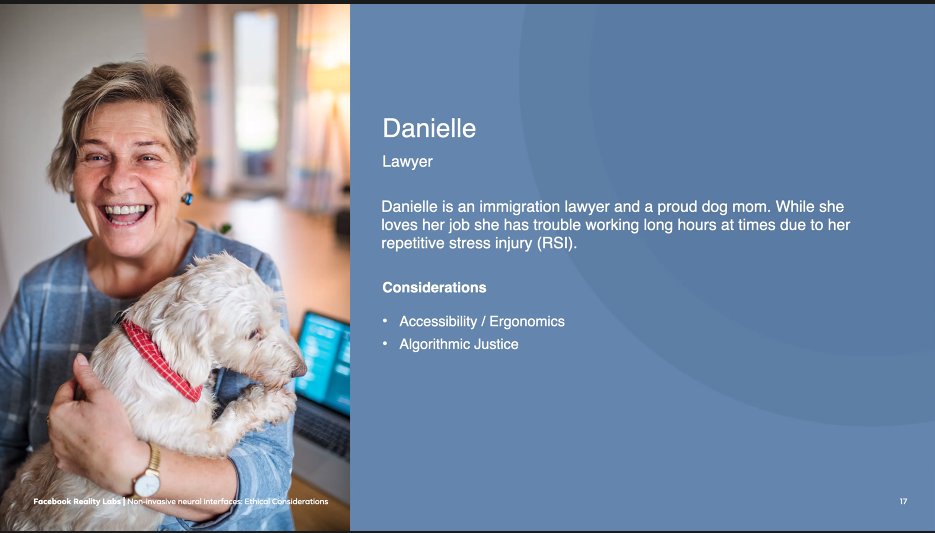

54/ @FBRealityLabs looks at different personas for who could benefit from EMG & neural inputs, like repetitive stress injury.

Inclusion is a major research area.

55/ EMG ethics discussion:

What can we infer with EMG?

Simple gestures.

Motionless control of things (intention to move).

Can't fully control robotic, prosthetic arms with EMG yet (virtual limbs are another question).

Patients' assessments of voluntary vs involuntary vs spasm EMG

56/ Where are the limits of EMG?

What's resolvable down to the level of muscle?

Can you decouple motor units from the intention to move or not?

Used to be looking only at 1-5 motor units, but now scale is a lot bigger with high-channel count recordings.

57/ @andpru is skeptical that you're going to extrapolate useful information about covert attention in a "real-world situation."

Again, XR has a rich data ecosystem of egocentric data capture, biometrics, situational awareness, & contextually-aware AI in VR & AR environments.

58/ Again, the majority of the discussions around EMG privacy have been centered around identity & the PII nature of the data.

@andpru says it's too early to imagine the full scope of the privacy implications of the central nervous system & what could be decoded from muscle data.

59/ @anna_wexler asked @cxhrndz my question about EMG data used in the context of other XR data. He doesn't see EMG alone raising any additional privacy concerns in an XR ecosystem, & says it could be used to enhance user privacy & communication without giving away motion intention.

60/ @FBRealityLabs' @cxhrndz & @snaufel have both emphasized Federated Learning again & again as a tech privacy architecture.

Me: This is a potentially good mitigation against the identifiable nature of data, but it doesn't address data fusion or biometric psychographic risks.

61/ Some attendees skeptical about @FBRealityLabs involvement in ethics & whether they're engaged in ethics washing. Moderator @anna_wexler giving some benefit of the doubt.

@cxhrndz emphasized being engaged in conversation with ethicists & outside experts like this conference.

62/ In terms of equity for EMG, not everyone gets the same signal quality from EMG. They mentioned the space between tissues, and I'm not sure if that meant variance in weight affects signal quality or if it's an independent variable.

63/ A question was asked about the XR implications for Facebook in light of Cambridge Analytica, which cultivated psychographic profiles.

@cxhrndz emphasized that they will have proper controls in place, & ensure that users will be able to maintain their agency when using EMG devices.

64/ @andpru emphasizing the need for regulatory intervention on privacy, since it's easy for these things to get out of control, & it's already gone too far with our cell phones.

65/ @cxhrndz agrees regulation is needed, but says it needs to be done thoughtfully & not stifle innovation.

FB is lowering barriers to access.

Can augment everyone in their lives.

Don't know sum of possibilities.

Balance privacy & agency + paint path forward for businesses to innovate.

66/ Marcello Ienca (ETHZ) @marcelloienca starting the last section on "Neural Data Ecosystems" featuring talks from:

Rafael Yuste (Columbia U) @yusterafa

@KernelCo's Ryan Field @ryanmfield

@OpenBCI's @ConorRussomanno

Yannick Roy @NeurotechX

67/ Rafael Yuste (Columbia U) @yusterafa talking about the threats to mental privacy with non-invasive neural tech.

The more neuroscience advances, the more we'll be able to decode the brain.

Not only conscious data, but also unconscious data in brain & spinal cord.

68/ With invasive techniques: can see what a mouse is looking at and can artificially stimulate a mouse's visual cortex to induce visual hallucinations and "run him like a puppet."

Eddie Chang has published on synthesizing speech & decoding handwritten characters with invasive tech.

69/ @yusterafa: Using non-invasive techniques on the auditory system during storytelling, researchers can predict listeners' interpretations of stories with an ambiguous ending. There are also attempts at decoding mental images.

We already have a mental privacy risk today.

No reason to wait because it's only getting worse.

70/ @yusterafa cited stats from the Q&A where only 30% of BCI data is non-personalizable & 70% of brain data is PII.

With mice, able to detect arousal state & extrapolate what's happening in other parts of the brain.

Mental Privacy a serious problem & is an issue of Human Rights.

71/ @yusterafa is skeptical about relying purely on technological architectures like federated learning, homomorphic encryption, & on-chip processing to preserve the human right of mental privacy.

It's under third-party control, and these tech solutions don't go far enough.

72/ @yusterafa laid out a number of approaches:

1. Only use neural data for Scientific & Medical Uses

2. Authorize the selling & buying of brain data as long as it's not personalizable.

3. Treat brain data legally as if it were a body organ, which prevents the monetization of brain data.

73/ Other approaches for neural data:

4. Treat brain data like genetic data

5. Take the lax position that treats neural data the same as personal data, which @yusterafa thinks would be a mistake.

Other considerations for how to deal with neural data from children

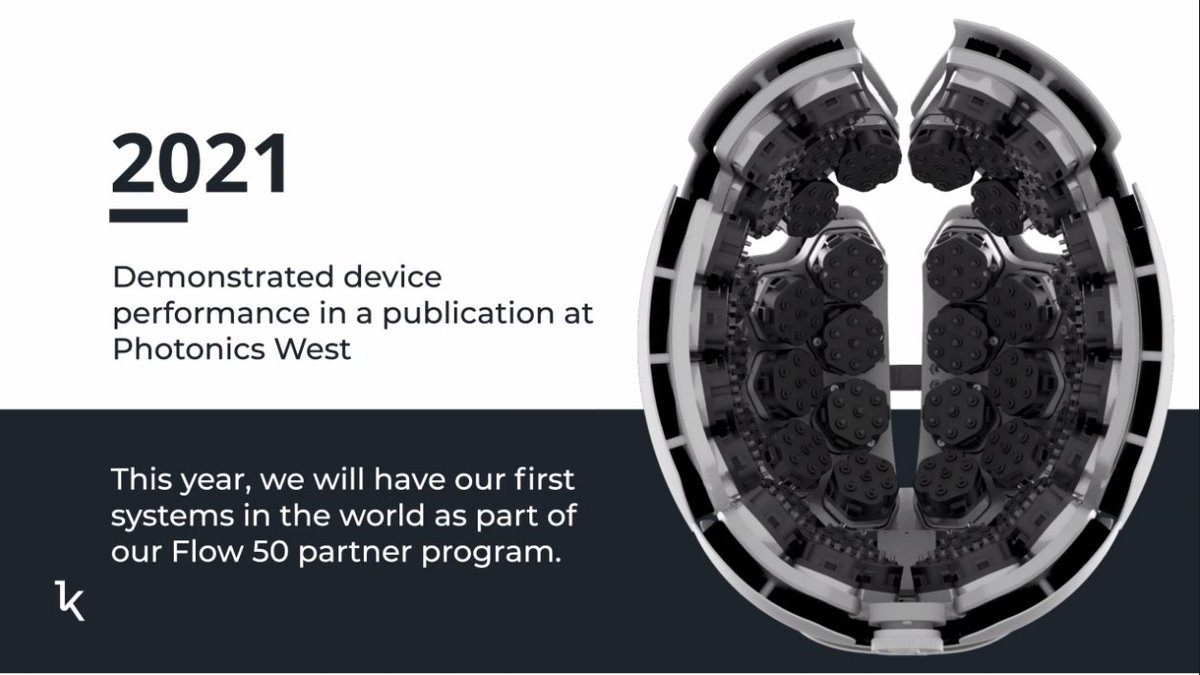

74/ @KernelCo's Ryan Field @ryanmfield talking about Kernel Flow & "Individual Consent & Control."

Demo'ed Kernel Flow's performance at the Photonics West conference: it can capture 50 billion photons per second.

Dream: the first time a neuroimaging device of this quality will be mass-produced.

75/ @ryanmfield: most neural data is used in the context of science with specialized knowledge about the brain, but it could have applications for individual growth [Me: via quantified self & consciousness hacking].

How to ensure that individuals maintain data control.

76/ @ryanmfield: Foundations of a healthy ecosystem:

* Transparent privacy policy

* App-based consent & control

* End-to-end encryption & security

* Trusted compute for 3rd party analysis.

Building everything around the principle of "the individual is at the center."

77/ @OpenBCI's @ConorRussomanno on "Closing the Loop: Delivering on the promise of BCI while Protecting Brain Privacy"

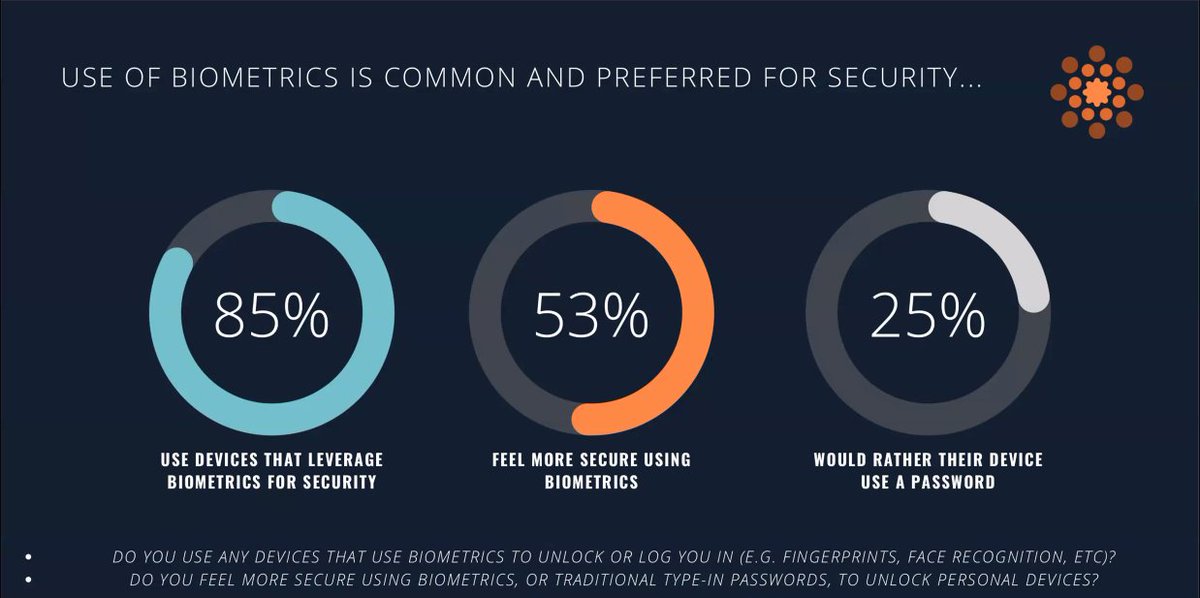

78/ @ConorRussomanno found that surveyed people were most skeptical of Facebook & Google with their data, & that skepticism increases when it comes to neural data.

79/ @ConorRussomanno: selling data was the top concern, while mind control ranked lower.

[Me: that'll no doubt go up as consumer neural tech is released.]

80/ @ConorRussomanno: We're headed to a world where neural tech will be receiving subconscious feedback for AI to do non-perceptible neuro-modulation.

Unfortunately, Conor's slides stopped advancing and chat was disabled with no way to communicate it back.

81/ @ConorRussomanno showing the Galea prototype that has a lot of different neural input devices that they're collaborating with Valve on.

Unfortunately, I missed the rest of his slide deck after his first slide.

[Reuploaded higher res photo]

82/ @NeurotechX's Yannick Roy talking wearable neurotech devices.

Experiment gone wrong with BrainCo EEG in China.

scmp.com/tech/start-ups…

We're not currently ready for neurotech's privacy implications; to prepare, we need more neuroethics literacy on perception, deception, & education.

83/ Some calls for deeper regulation for data privacy.

@KernelCo says they encrypt all data on chip where the neural photons are detected.

@ConorRussomanno says protecting the inferences you're drawing from neural data matters more than obscuring the raw data. [Me: YES! 100%]

84/ @yusterafa has been spending a lot of time with lawyers. There's currently no legislation anywhere in the world that they've found that protects mental privacy.

What's happening in Chile could provide precedents.

Terms need to be defined legally by teams of scientists & lawyers.

85/ @yusterafa again pointing to the pioneering work on data privacy happening in Chile.

Not sure if he's referring to this "Data Protection Overview."

dataguidance.com/notes/chile-da…

More context here:

https://twitter.com/yusterafa/status/1384987441828929539

86/ @yusterafa is pointing to medical ethics as a potential standard baseline for how neural data are treated. Another option is to treat neural data as medical data that'd be regulated through existing medical regulations.

87/ @ConorRussomanno emphasizing that the full set of electrophysiological & biometric data goes way beyond the "mental privacy" context of data.

If the types of medical regulations for neural data existed like @yusterafa suggested, then companies like @OpenBCI & @KernelCo wouldn't exist.

88/ @KernelCo started as an invasive neural interface company, but the medical regulations proved to be prohibitive & they pivoted to non-invasive neural technology.

89/ Some of the @OpenBCI survey data on biometrics & neural data presented by @ConorRussomanno can be found here:

openbci.com/community/clos…

90/ @ConorRussomanno's dream of the future data ecosystem:

#1: We need to own our own data.

#2: The AI becomes a virtual Jiminy Cricket that keeps your interest in mind protected by corporate interests, unless you grant permission. But it's an uphill battle to get there.

91/ Yannick Roy talking about owning & selling own data to change the incentive structures using insights from decentralized blockchain systems like NFTs (but could be different than current implementations).

92/ @yusterafa sees a world where neural data increases the bandwidth of communication, with a humanistic touch & approach to technology via the human rights principles of beneficence, justice, & dignity.

93/ @KernelCo's @ryanmfield would like to see a future where brain data is in complete control of the individual, without ambiguity about how it's used outside of the individual, which is precisely the opposite of the existing surveillance capitalism model.

Start with an empowered user.

94/ I misquoted @ConorRussomanno. He said protected FROM, not protected BY:

#2: The AI becomes a virtual Jiminy Cricket that keeps your interest in mind protected FROM corporate interests, unless you grant permission. But it's an uphill battle to get there.

https://twitter.com/kentbye/status/1397667175226232832

95/ @Cognixion_AI's Tom Gruber talking about the combination of AI with BCI for Human Augmentation.

Able to extrapolate lots of additional information from sensors like vital signs monitoring.

96/ Gruber is giving a tour of some of the AI companies working with biometrics, including Migraine AI:

hospitalonmobile.com

Pupillometry as a measurement of brain state.

@Mindstrong: Digital Brain Biomarkers Inferred from Smartphone Usage Data.

mindstrong.com

97/ @Cognixion_AI is creating an AR headset with high-bandwidth EEG & neural data for inferring brain intention via ML & "Visually-Evoked Potentials."

98/ @Cognixion_AI has a demo of how its AR + BCI headset is being used as assistive technology.

"The world's first brain computer interface with augmented reality wearable speech™ generating device. Featuring Mai Ling Chan & Chris Benedict- DJ of Ability."

99/ Gruber looks at the range of AI applications & the resulting AI ethical issues around cognitive enhancement, cognitive displacement, optimization around human wellness & monetizable engagement, & then at the range of AI Assistants from Big Brother to Big Mother.

100/ @Cognixion_AI's Tom Gruber is talking about including human wellness metrics within AI objective functions, using human biometrics from these BCI, neural data, & XR technologies. If you can sense brain state, then you can detect dysregulated brain states & operationalize that in AI algorithms.

101/ Final thoughts from "Noninvasive Neural Interfaces: Ethical Considerations Conf" co-organized by @FBRealityLabs' @snaufel & @cxhrndz & @Columbia_NTC's @neuro_rights @yusterafa.

Yuste says we need more stakeholders at the table, like ethicists & lawyers [Me: & privacy advocates].

102/ More context on why @yusterafa says Chile is leading the world: "[Chilean] Constitutional Reform project recognizes mental data as Neuro-Rights, grants them the quality of Human Rights, & includes a Bill for Neuroprotection."

nri.ntc.columbia.edu/news/unanimous…

https://twitter.com/kentbye/status/1397661486562963456

103/ My biggest takeaway from the conference is that the threat to mental privacy is still the largest existential threat of XR, BCI & other non-invasive neural technologies.

Most presenters were not ramped up on the legal history or regulation challenges.

https://twitter.com/kentbye/status/1397660336652656640

104/ It's really easy for researchers or industry to say they want more regulation around privacy issues, but it's another thing to dig into the regulatory landscape for tech policy & discover how US regulators are 10-20 years behind tech.

See @joejerome chat:

voicesofvr.com/951-privacy-pr…

105/ Aside from a few industry folks, the expertise of the brain imaging researchers seemed narrowly focused on their own domain.

Many weren't aware of just how much contextual info these companies will have -- like here's eye-tracking biometric inferences:

https://twitter.com/JL_Kroger/status/1392789775569018881

106/ Also, people's concept of privacy is stuck in a static model of saving & storing data.

We're way beyond that with XR & neuro-tech.

Spatial computing is a paradigm shift into real-time processing & biometric inferences of data.

See @brittanheller:

https://twitter.com/kentbye/status/1380223778827857923

107/ @yusterafa has been doing the most in-depth thinking on preserving mental privacy through the lens of human rights & is in dialogue with lawyers.

But nearly all of his suggestions were resisted by neuro-tech industry start-ups.

cirsd.org/en/horizons/ho…

https://twitter.com/kentbye/status/1397648299797012480

108/ At the end of it all, the most likely outcome is the last option @yusterafa presented: "Take the lax position that treats neural data the same as personal data," which he sees as a mistake.

How should we protect our rights to mental privacy?

https://twitter.com/kentbye/status/1397648577011195904

END/ If you find this type of coverage valuable, then please consider supporting me on Patreon.

patreon.com/voicesofvr

Still lots of open questions to be explored here for how to preserve our Neuro-Rights in the context of XR.

Great first steps, but still a long way to go.