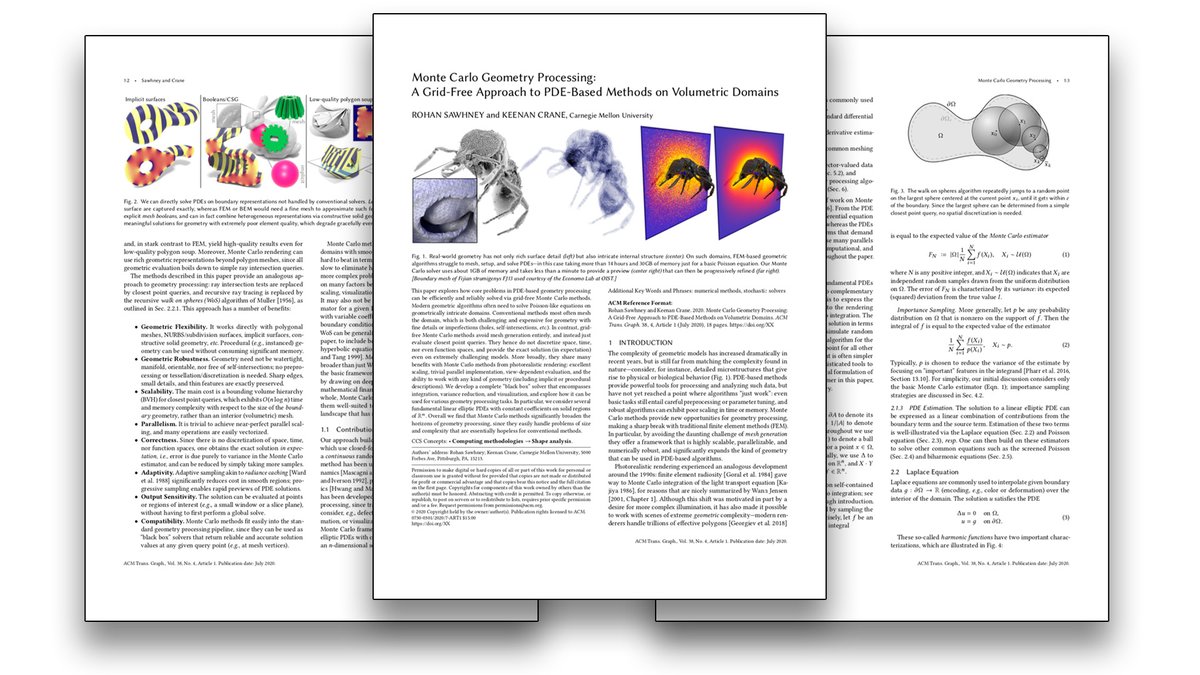

Think knots are easy to untangle?

As a companion to our recent paper on "Repulsive Curves," we're releasing a dataset of hundreds of *extremely* difficult knots: cs.cmu.edu/~kmcrane/Proje…

In each case, a knotted & canonical embedding is given. Can you recover the right knot? 1/5


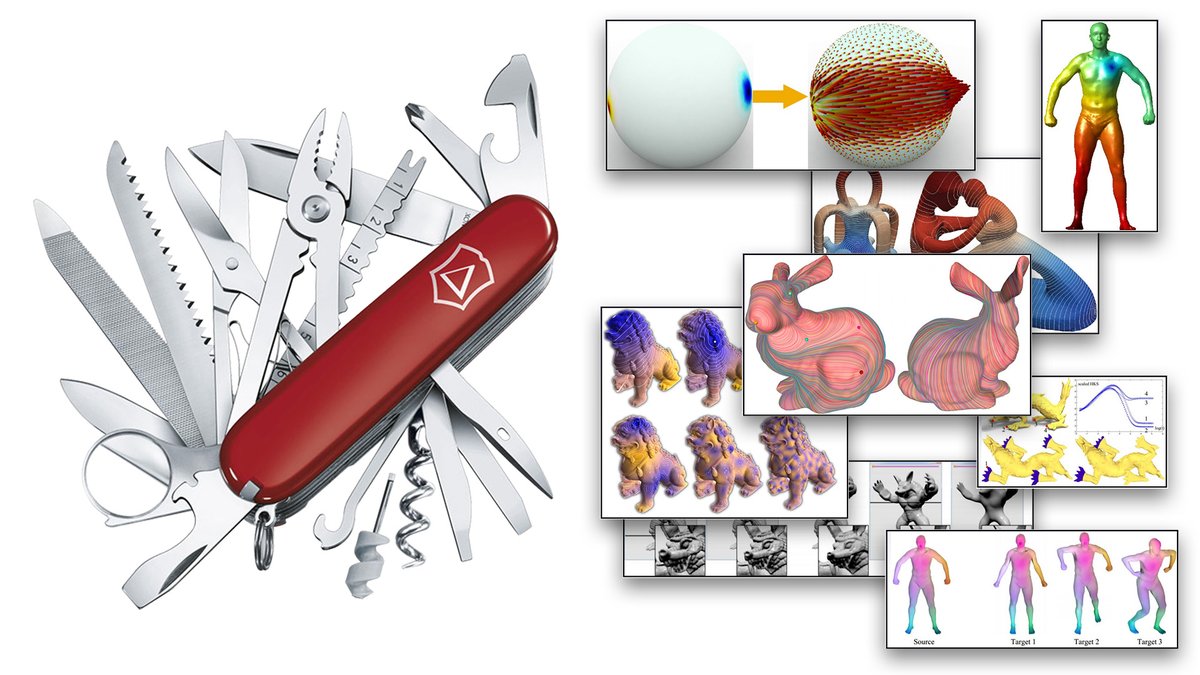

We've already tried a few dozen methods—from classics like "KnotPlot", to baselines like L-BFGS, to bleeding-edge algorithms like AQP, BCQN, etc.

For many knots, most of these methods get "stuck" and don't reach a nice embedding.

Even our method doesn't hit 100%—but is close! 2/5


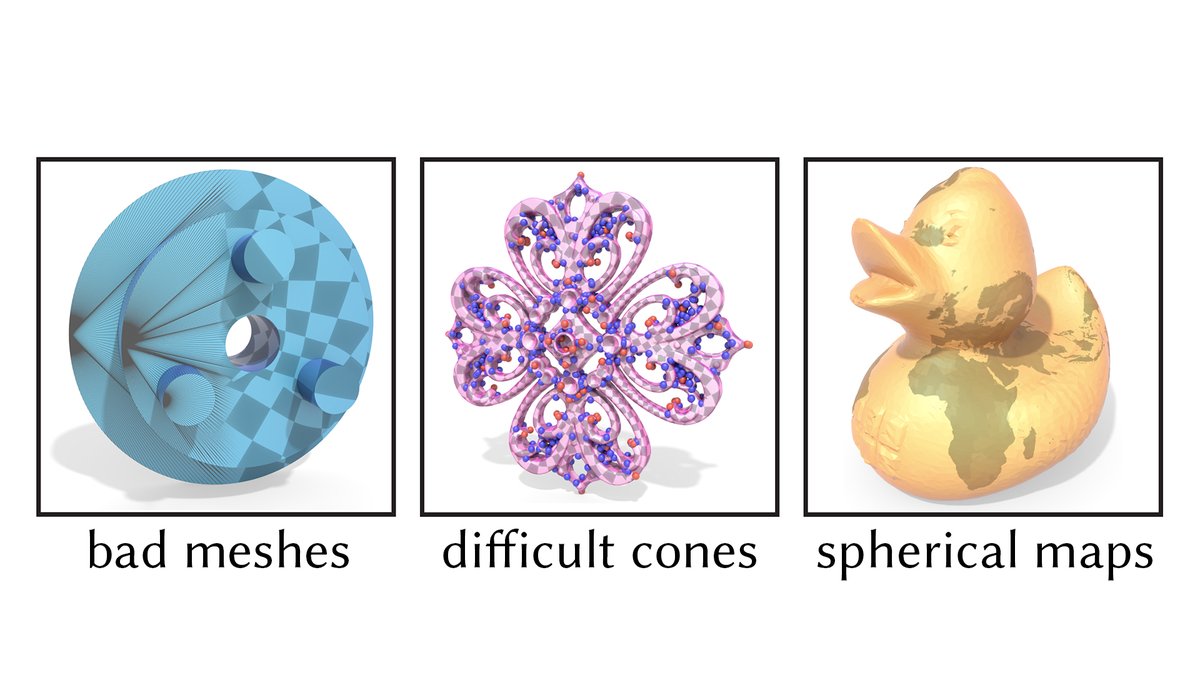

But this is a pretty unique problem relative to "standard" energies in geometry processing.

For one thing, the energy considers all O(n²) pairs of points, rather than just neighbors in a mesh.

For another, you can't just *avoid* collisions—you have to maximize your distance from them. 3/5

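To make the O(n²) point concrete, here's a minimal Python sketch of a discrete tangent-point-style repulsive energy on a closed polygon. The kernel |T × (p − q)|^α / |p − q|^β, the exponents α = 2 and β = 4.5, the edge-midpoint discretization, and the rule of skipping edges that share a vertex are all assumptions for illustration, not necessarily the settings or code behind the paper. Every edge interacts with every other edge, and the kernel blows up as two strands approach each other, so lowering the energy pushes strands apart instead of merely keeping them from touching.

```python
import math


def tangent_point_energy(points, alpha=2.0, beta=4.5):
    """Discrete tangent-point-style energy of a closed polygon.

    points: list of (x, y, z) vertices; the last vertex connects back to the first.
    alpha, beta: kernel exponents (illustrative assumptions, not the paper's values).
    """
    n = len(points)
    # Per-edge midpoint, length, and unit tangent (edge i joins vertex i to i+1).
    mids, lens, tans = [], [], []
    for i in range(n):
        p, q = points[i], points[(i + 1) % n]
        e = [q[k] - p[k] for k in range(3)]
        length = math.sqrt(sum(c * c for c in e))
        mids.append([(p[k] + q[k]) / 2 for k in range(3)])
        lens.append(length)
        tans.append([c / length for c in e])

    energy = 0.0
    # Sum over ALL edge pairs, not just neighbors: this is the O(n^2) part.
    for i in range(n):
        for j in range(n):
            # Skip an edge paired with itself or with an edge sharing a vertex.
            if (i - j) % n in (0, 1, n - 1):
                continue
            d = [mids[i][k] - mids[j][k] for k in range(3)]
            t = tans[i]
            cross = [t[1] * d[2] - t[2] * d[1],
                     t[2] * d[0] - t[0] * d[2],
                     t[0] * d[1] - t[1] * d[0]]
            num = math.sqrt(sum(c * c for c in cross)) ** alpha
            den = math.sqrt(sum(c * c for c in d)) ** beta
            # Kernel weighted by both edge lengths (midpoint quadrature).
            energy += num / den * lens[i] * lens[j]
    return energy


def ellipse(a, b, n=200):
    """Hypothetical test curve: an ellipse in the xy-plane, sampled at n vertices."""
    return [(a * math.cos(2 * math.pi * k / n),
             b * math.sin(2 * math.pi * k / n),
             0.0) for k in range(n)]


if __name__ == "__main__":
    print("circle:  ", tangent_point_energy(ellipse(1.0, 1.0)))
    print("squashed:", tangent_point_energy(ellipse(1.0, 0.1)))
```

A squashed ellipse, whose two long sides run close together, should score much higher than a circle. Rescaling a curve by s multiplies this energy by s^(2 + α − β), so with these exponents, bigger curves have lower energy.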

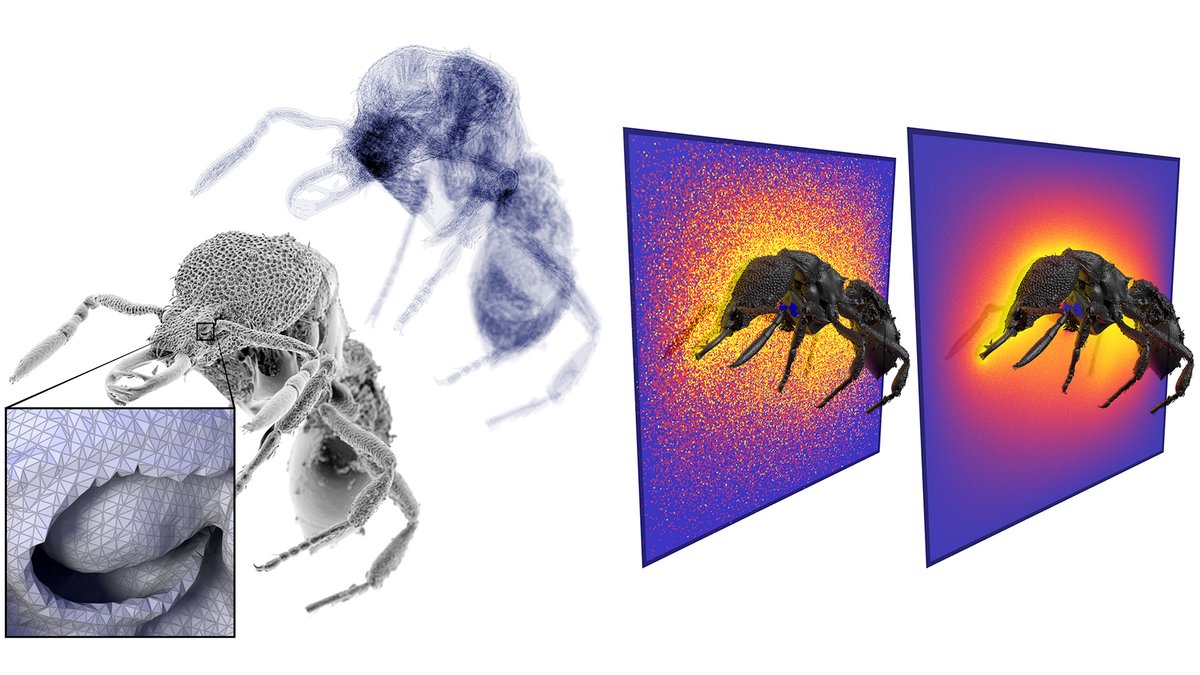

It's also an interesting challenge for #MachineLearning: given a knot as a sequence of points in R³, classify it as one of several knot types

…Or even just decide if it's the unknot!

I'd guess no current architecture works well for this problem, but I'd be glad to be surprised! 4/5

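For the learning problem, the input really is just a closed polyline. The hypothetical helpers below sample the standard trefoil parametrization (x = sin t + 2 sin 2t, y = cos t − 2 cos 2t, z = −sin 3t) and count crossings in its projection onto the xy-plane. This is an illustration of why the task is hard, not a classifier: the crossing count of one projection depends on the viewing direction and only bounds the crossing number from above, so even a tangled unknot can project with many crossings.

```python
import math


def trefoil(n=200):
    """Sample the standard trefoil parametrization as a closed polyline in R^3."""
    pts = []
    for k in range(n):
        t = 2 * math.pi * k / n
        pts.append((math.sin(t) + 2 * math.sin(2 * t),
                    math.cos(t) - 2 * math.cos(2 * t),
                    -math.sin(3 * t)))
    return pts


def _orient(a, b, c):
    """Sign of the 2D cross product (b - a) x (c - a), using only x and y."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])


def projected_crossings(points):
    """Count crossings of the closed polyline's projection onto the xy-plane."""
    n = len(points)
    count = 0
    for i in range(n):
        a, b = points[i], points[(i + 1) % n]
        # j starts at i + 2 so that edge i is never tested against itself or edge i + 1.
        for j in range(i + 2, n):
            if i == 0 and j == n - 1:
                continue  # edges 0 and n-1 share vertex 0
            c, d = points[j], points[(j + 1) % n]
            # Proper crossing: each segment's endpoints lie strictly on opposite
            # sides of the other segment's line.
            if (_orient(a, b, c) * _orient(a, b, d) < 0 and
                    _orient(c, d, a) * _orient(c, d, b) < 0):
                count += 1
    return count


if __name__ == "__main__":
    print(projected_crossings(trefoil()))
```

For this sampling the projection should show the familiar three-crossing trefoil diagram; viewed from another direction, the same point sequence can show more crossings.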

Anyway, have fun! 5/5

@KangarooPhysics I'd be *very* interested if your approach to knot untangling works well for these "tough knots." You always seem to have simple and elegant solutions that beat the pants off more fancy-mathy stuff. 😅
