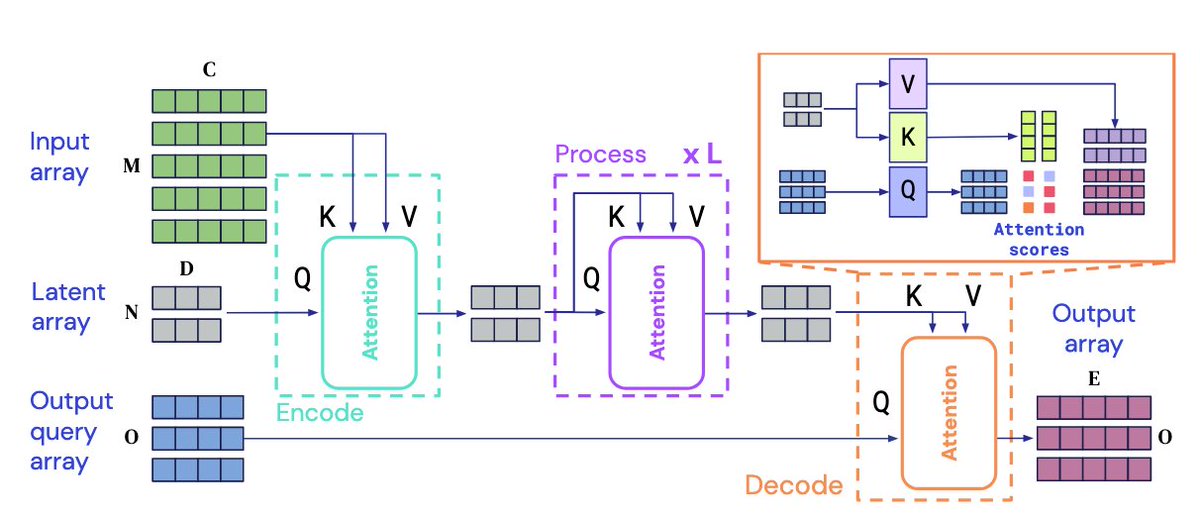

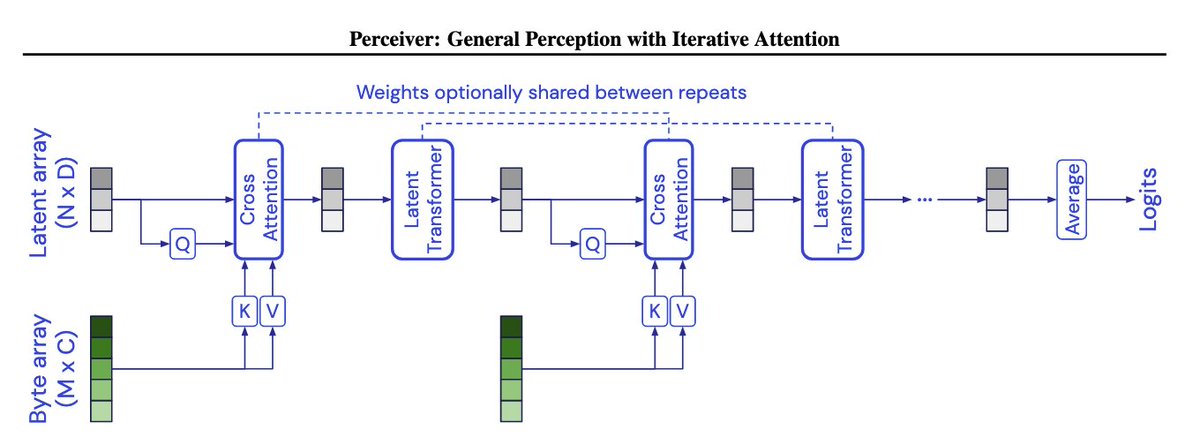

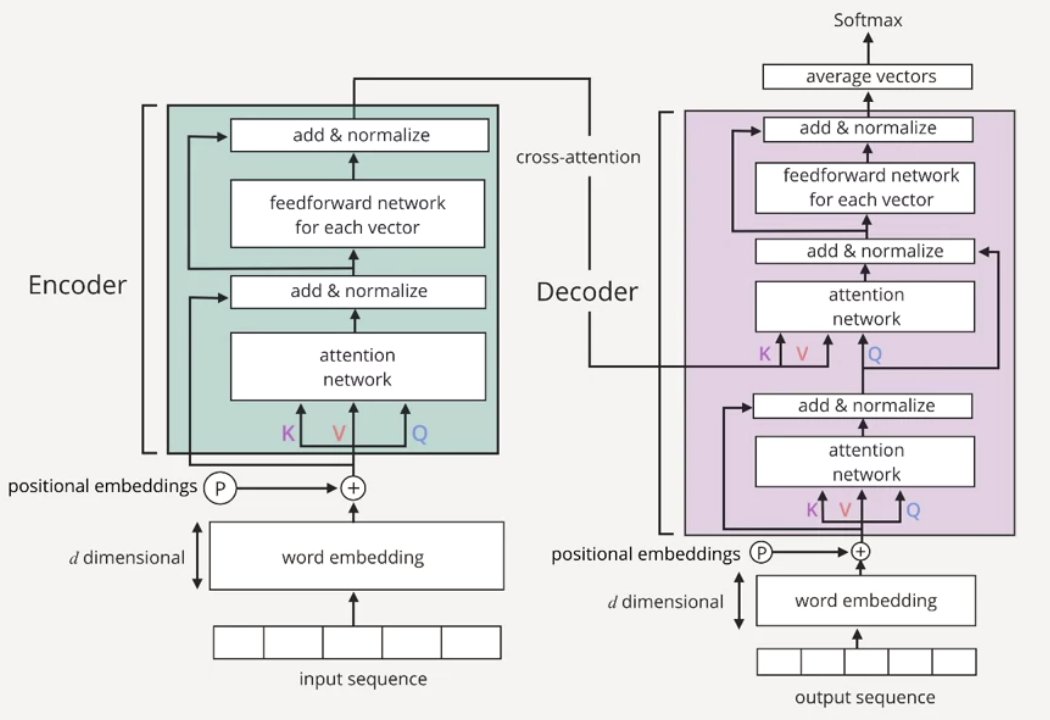

Note how the input array (in green) in the previous diagram is fed back into multiple layers through "cross attention." This cross attention is similar to how you would tie an encoder to a decoder in the standard transformer model.
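Here is a minimal NumPy sketch of that cross attention. It is not the Perceiver reference implementation. It assumes a single head and omits layer norm, residual connections and the MLP. The shapes and the weight names `w_q`, `w_k`, `w_v` are hypothetical. The queries come from the small latent array, and the keys and values come from the input array. This mirrors how a transformer decoder takes its queries from itself and its keys and values from the encoder output.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along `axis`
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(latent, inputs, w_q, w_k, w_v):
    """Latent array (M, D) queries the input array (N, C); returns (M, H).

    w_q: (D, H), w_k: (C, H), w_v: (C, H) are hypothetical projection weights.
    """
    q = latent @ w_q                          # queries come from the latent array
    k = inputs @ w_k                          # keys come from the input array
    v = inputs @ w_v                          # values come from the input array
    scores = q @ k.T / np.sqrt(q.shape[-1])   # (M, N) latent-to-input similarity
    return softmax(scores, axis=-1) @ v       # one row per latent position

rng = np.random.default_rng(0)
M, N, D, C, H = 8, 1000, 16, 3, 16            # hypothetical sizes: 8 latents, 1000 inputs
latent = rng.normal(size=(M, D))
inputs = rng.normal(size=(N, C))
out = cross_attention(latent, inputs,
                      rng.normal(size=(D, H)),
                      rng.normal(size=(C, H)),
                      rng.normal(size=(C, H)))
print(out.shape)  # (8, 16)
```

However many inputs there are, the output has one row per latent. That is why the rest of the network can stay small.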

The Perceiver-IO block differs from the Perceiver block in one respect. It adds an "output query array," which is merged in through an additional cross-attention block. The innovation is that this extra block preserves the richness of the outputs: the output can take whatever size and structure the queries ask for.
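Below is a rough sketch of the output side, under the same simplifying assumptions: a single head, no norms or MLP, and hypothetical shapes and weights. The output query array supplies the queries, and the latent array supplies the keys and values. As a result, the number of output queries sets the shape of the output, not the latent size.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along `axis`
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def decode(output_queries, latent, w_q, w_k, w_v):
    """Output queries (O, E) attend to the latent array (M, D); returns (O, H)."""
    q = output_queries @ w_q                  # queries come from the output query array
    k = latent @ w_k                          # keys come from the latent array
    v = latent @ w_v                          # values come from the latent array
    scores = q @ k.T / np.sqrt(q.shape[-1])   # (O, M)
    return softmax(scores, axis=-1) @ v       # one row per requested output

rng = np.random.default_rng(0)
M, D, E, H = 8, 16, 4, 32                     # hypothetical sizes
latent = rng.normal(size=(M, D))
w_q = rng.normal(size=(E, H))
w_k = rng.normal(size=(D, H))
w_v = rng.normal(size=(D, H))

out_cls = decode(rng.normal(size=(1, E)), latent, w_q, w_k, w_v)     # e.g. one label
out_dense = decode(rng.normal(size=(50, E)), latent, w_q, w_k, w_v)  # e.g. 50 positions
print(out_cls.shape, out_dense.shape)  # (1, 32) (50, 32)
```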

Let me explain this in semiotic terms. In simple semiotics, there are three kinds of signs: icons, indexes and symbols. In a conventional feedforward network, similarity is computed between the inputs and the weights. That is, an object is compared with its icon.
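Here is a small illustration of "similarity between the inputs and the weights," using arbitrary toy values. A dense layer takes the dot product of the input with each unit's weight vector. A unit therefore responds most strongly to inputs that resemble its weights.

```python
import numpy as np

# Each row of W is one unit's weight vector (toy values).
W = np.array([[1.0, 0.0, 1.0],
              [0.0, 1.0, 0.0]])
x = np.array([1.0, 0.0, 1.0])        # input that matches the first row exactly

scores = W @ x                       # dot product of the input with each weight row
activations = np.maximum(scores, 0)  # ReLU keeps only positive matches
print(scores)  # [2. 0.]
```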

A transformer block is more complex. The key and query inputs each undergo an iconic transformation. This is followed by a correlation between these two iconic representations. That correlation is then compared with internal weights to produce the final output.
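The steps in this paragraph map roughly onto single-head scaled dot-product self-attention. The sketch below is one possible reading of that mapping, with random hypothetical weights and no norms or MLP. The query and key projections play the role of the two transformations, and `q @ k.T` is the correlation. The resulting weights are then applied to value vectors and an output weight matrix.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along `axis`
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x, w_q, w_k, w_v, w_o):
    # 1. Project the same input into query and key representations.
    q = x @ w_q
    k = x @ w_k
    # 2. Correlate the two representations: (T, T) scaled dot products.
    scores = q @ k.T / np.sqrt(q.shape[-1])
    weights = softmax(scores, axis=-1)        # each row sums to 1
    # 3. Mix the value vectors by those weights, then apply learned output weights.
    return (weights @ (x @ w_v)) @ w_o, weights

rng = np.random.default_rng(1)
T, D = 5, 8                                   # hypothetical: 5 tokens of width 8
x = rng.normal(size=(T, D))
out, attn = self_attention(x, *(rng.normal(size=(D, D)) for _ in range(4)))
print(out.shape, np.allclose(attn.sum(axis=1), 1.0))  # (5, 8) True
```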

In semiotic logic, a transformer block is an implementation of a natural proposition. More specifically, it is a collection of parallel natural propositions that lead to a final argument.

But how do we explain what is going on in the Perceiver-IO in terms of semiotic terminology?

The implementation advantage of the Perceiver architecture is that it works in a latent space much smaller than its input. This keeps each layer cheap, which allows for deeper pipelines and hence more semiotic transformations. It maintains its integrity by syncing back, via cross attention, with the original object.
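Some back-of-the-envelope arithmetic shows why a small latent array permits deeper pipelines. The sketch counts only query-key attention scores and ignores heads and feature width. The sizes (a 224 × 224 image, 512 latents, 6 layers) are hypothetical choices. It follows the O(MN + LM²) form given in the Perceiver paper for a single cross-attention. Each additional cross-attention back to the input adds another M·N.

```python
def transformer_cost(n, depth):
    # Self-attention over all N inputs: N * N query-key scores per layer.
    return depth * n * n

def perceiver_cost(n, m, depth):
    # One cross-attention (M latents x N inputs) plus `depth` latent self-attention layers (M x M).
    return m * n + depth * m * m

N = 224 * 224   # pixels in a 224 x 224 image
M = 512         # latent array size (hypothetical choice)
L = 6           # number of layers (hypothetical choice)

print(transformer_cost(N, L))   # 15105785856
print(perceiver_cost(N, M, L))  # 27262976
# Budget of one input-level self-attention layer, measured in latent self-attention layers:
print((N * N) // (M * M))       # 9604
```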

The consequence of this is that each layer of the Perceiver can attend to different aspects of the original object without having to carry features across the entire semiotic pipeline.

Expressing this differently, a Perceiver network attends back to the original object to capture information that it may not have captured in earlier layers. It is an interactive form of perception, quite reminiscent of saccades.
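Here is a minimal sketch of this "re-reading" loop. It assumes the input and latent arrays share the same feature width and omits all learned projections, norms and MLPs. In each block, the latent array first cross-attends back to the unchanged input. It then refines itself with latent self-attention. The function and parameter names are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along `axis`
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attend(queries, context):
    # Unprojected single-head attention: rows of `context` mixed by similarity to `queries`
    scores = queries @ context.T / np.sqrt(queries.shape[-1])
    return softmax(scores, axis=-1) @ context

def perceiver_encode(inputs, latent, num_blocks=4, self_attn_per_block=2):
    # Hypothetical minimal encoder: each block re-reads `inputs` before latent processing.
    for _ in range(num_blocks):
        latent = latent + attend(latent, inputs)      # cross-attend back to the original input
        for _ in range(self_attn_per_block):
            latent = latent + attend(latent, latent)  # latent self-attention
    return latent

rng = np.random.default_rng(0)
inputs = rng.normal(size=(1000, 16))   # large input array, never modified
latent = rng.normal(size=(8, 16))      # small latent array
encoded = perceiver_encode(inputs, latent)
print(encoded.shape)  # (8, 16)
```

Because `inputs` is never overwritten, every block sees the full original input. Only the small latent array is carried from block to block.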

The utility of the Perceiver network is that it works across all kinds of sensory input. Specialized architectures such as CNNs need a hardwired network structure to exploit invariances in the input data. The Perceiver does not.
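Part of what makes this universality practical is that any input can be flattened into an array of shape (N, C) before cross attention. The helper below is a hypothetical sketch. It appends normalized grid coordinates so that position is not lost. The Perceiver itself uses Fourier-feature position encodings instead.

```python
import numpy as np

def to_array(data):
    """Flatten a (..., C) tensor into an (N, C + k) array, where k is the number of index axes."""
    *grid, c = data.shape
    # Normalized coordinates in [-1, 1] for every index axis (simplified position encoding).
    coords = np.meshgrid(*[np.linspace(-1.0, 1.0, n) for n in grid], indexing="ij")
    pos = np.stack([g.reshape(-1) for g in coords], axis=-1)   # (N, k)
    return np.concatenate([data.reshape(-1, c), pos], axis=-1)

image = np.zeros((32, 32, 3))   # H x W x RGB
audio = np.zeros((16000, 1))    # samples x 1 channel
print(to_array(image).shape)    # (1024, 5)
print(to_array(audio).shape)    # (16000, 2)
```

The same code handles an image and an audio clip. Only the number of coordinate columns changes.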

The Perceiver-IO network inherits all these features but adds a twist. Instead of only iteratively syncing back to an external object, it also syncs with an internal sign.

In other words, it is performing a semiotic process that is driven by an internal symbolic language.

Summarized in this blog entry: medium.com/intuitionmachi…
