In theory, you can model any function using a neural network with a single hidden layer.

However, deep networks are much more efficient than shallow ones.

Can you explain why?

However, deep networks are much more efficient than shallow ones.

Can you explain why?

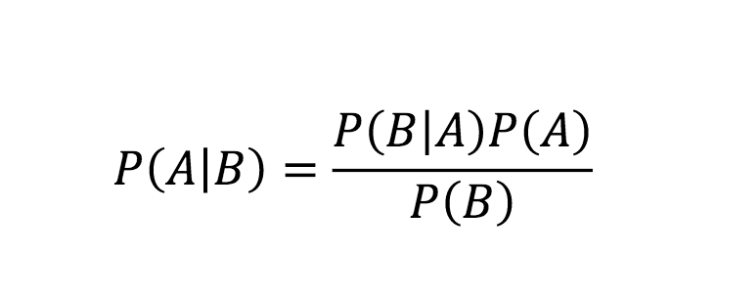

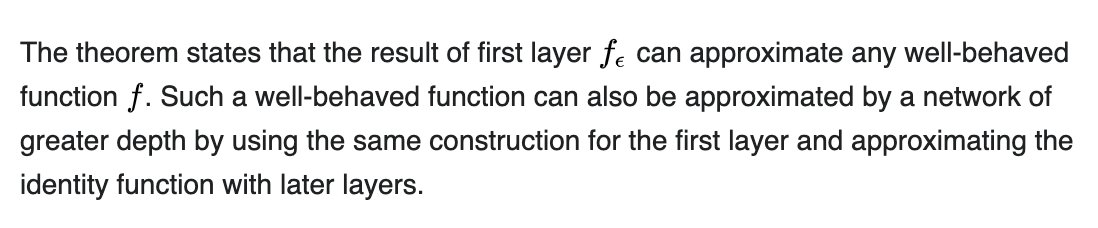

If my first claim gives you pause, I'm talking about the Universal approximation theorem.

You can find more about it online, but the attached paragraph summarizes the relevant part very well.

You can find more about it online, but the attached paragraph summarizes the relevant part very well.

Informally, we usually say that we can model any function with a single hidden layer neural network.

But there are a couple of caveats with this statement.

But there are a couple of caveats with this statement.

First caveat:

By "model," we mean "approximate."

A single hidden layer neural network might not exactly reproduce any function, but it can approximate it close enough to a point where the differences aren't relevant.

By "model," we mean "approximate."

A single hidden layer neural network might not exactly reproduce any function, but it can approximate it close enough to a point where the differences aren't relevant.

Second caveat:

Notice how the excerpt about the theorem talks about "well-behaved functions." This refers to continuous functions, not discontinuous.

Notice how the excerpt about the theorem talks about "well-behaved functions." This refers to continuous functions, not discontinuous.

With these two caveats out of the way, we can focus on my second claim:

Deeper networks are much more efficient than shallower networks.

In other words, while all we need is a single hidden layer, in theory, we are usually better off with more layers in practice.

Deeper networks are much more efficient than shallower networks.

In other words, while all we need is a single hidden layer, in theory, we are usually better off with more layers in practice.

The simplest explanation here is about the ability of deep networks to learn hierarchies of concepts.

With multiple layers, each one can focus on capturing knowledge at a specific level of abstraction and pass it on to the next layer.

With multiple layers, each one can focus on capturing knowledge at a specific level of abstraction and pass it on to the next layer.

Classic example: image classification.

Earlier layers focus on abstract concepts like edges, colors, shadows.

Later layers use those concepts to learn about more concrete representations like specific shapes and complex objects.

Earlier layers focus on abstract concepts like edges, colors, shadows.

Later layers use those concepts to learn about more concrete representations like specific shapes and complex objects.

A single hidden layer network would have to focus on every pixel individually.

It will probably need a ridiculous amount of neurons to extract the same knowledge that we can easily do with a deep network.

And even then, we have no idea how to make it happen.

It will probably need a ridiculous amount of neurons to extract the same knowledge that we can easily do with a deep network.

And even then, we have no idea how to make it happen.

In addition to this, we can also talk about how much more flexibility we can afford if we aren't limited to a single hidden layer.

@AlejandroPiad talked about this in his answer:

@AlejandroPiad talked about this in his answer:

https://twitter.com/AlejandroPiad/status/1439179885386338304?s=20

As a recap, I'll quote @michael_nielsen:

"(...) universality tells us that neural networks can compute any function; and empirical evidence suggests that deep networks are the networks best adapted to learn the functions useful in solving many real-world problems."

"(...) universality tells us that neural networks can compute any function; and empirical evidence suggests that deep networks are the networks best adapted to learn the functions useful in solving many real-world problems."

For an excellent (visual) explanation about this, check out Chapter 4 of the "Neural Networks and Deep Learning" book.

It's free and online!

neuralnetworksanddeeplearning.com/chap4.html

It's free and online!

neuralnetworksanddeeplearning.com/chap4.html

• • •

Missing some Tweet in this thread? You can try to

force a refresh