What do you get when you let a monkey randomly smash the buttons on a typewriter?

Shakespeare's Hamlet, of course. And Romeo and Juliet. And every other possible finite string.

Don't believe me? Keep reading. ↓

Let's start at the very beginning!

Suppose that I have a coin that, when tossed, has a 1/2 probability of coming up heads and a 1/2 probability of coming up tails.

If I start tossing the coin and tracking the results, what is the probability of 𝑛𝑒𝑣𝑒𝑟 getting heads?

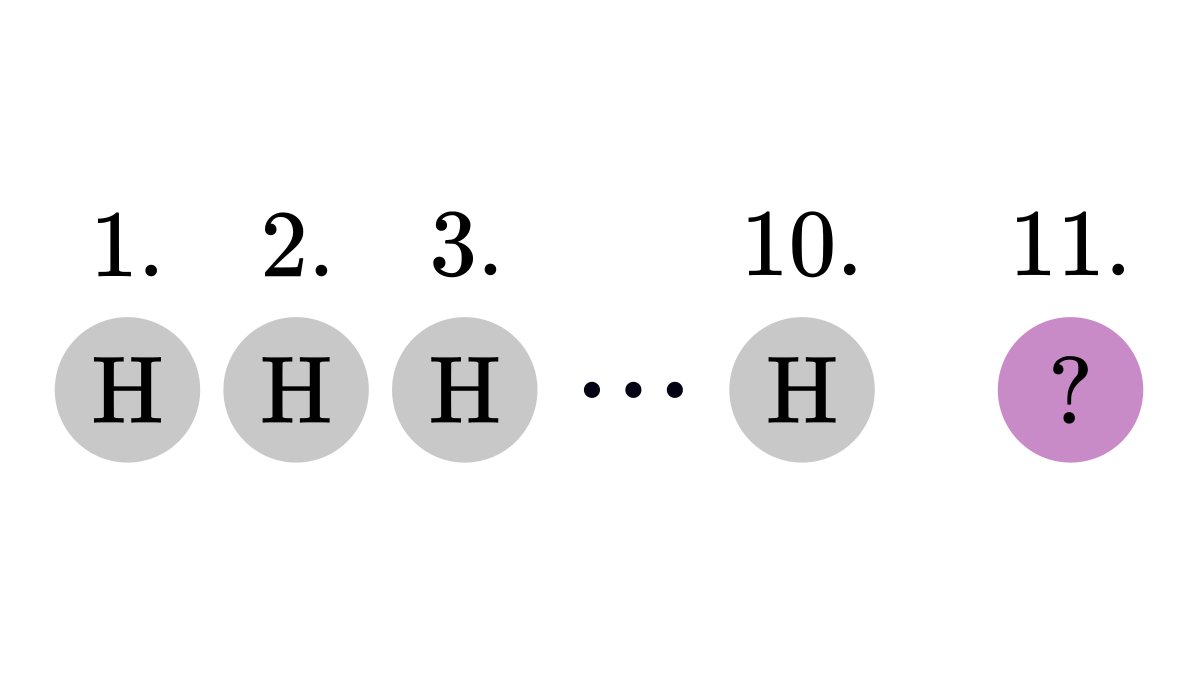

To answer this, first, we calculate the probability of no heads in 𝑛 tosses. (That is, the probability of 𝑛 tails.)

Since tosses are independent of each other, we can just multiply the probabilities for each toss together.
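
Written out, with each toss assumed fair and independent as above, the product looks like this:

$$
P(\text{no heads in } n \text{ tosses}) = \underbrace{\frac{1}{2} \cdot \frac{1}{2} \cdots \frac{1}{2}}_{n \text{ times}} = \left(\frac{1}{2}\right)^{n}
$$

For example, ten tails in a row has probability 1/1024.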

By letting 𝑛 go to infinity, we find that the probability of never tossing heads is zero.

That is, we are going to have heads come up eventually with probability 1.
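
If you want to see this numerically, here is a minimal simulation sketch. The function name `prob_no_heads`, the trial count, and the seed are my own choices for illustration, and the coin is modeled with Python's pseudo-random generator. It estimates the chance of seeing no heads in 𝑛 tosses and prints it next to (1/2)ⁿ.

```python
import random

def prob_no_heads(n_tosses, n_trials=100_000, seed=0):
    """Estimate the probability that n_tosses fair tosses contain no heads."""
    rng = random.Random(seed)
    no_heads_runs = 0
    for _ in range(n_trials):
        # A toss counts as tails when rng.random() >= 0.5.
        # all() stops checking as soon as the first heads shows up.
        if all(rng.random() >= 0.5 for _ in range(n_tosses)):
            no_heads_runs += 1
    return no_heads_runs / n_trials

for n in (1, 2, 5, 10):
    # Columns: number of tosses, simulated estimate, exact value (1/2)^n
    print(n, prob_no_heads(n), 0.5 ** n)
```

The estimates should shrink toward zero as 𝑛 grows, tracking the exact values up to sampling noise.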

Instead of coin tosses, we can talk about arbitrary events.

If an event has a nonzero probability and you get infinitely many independent attempts, 𝑖𝑡 𝑤𝑖𝑙𝑙 ℎ𝑎𝑝𝑝𝑒𝑛 with probability 1.
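
The coin argument carries over in symbols. Assume the attempts are independent and each one succeeds with the same probability 𝑝 > 0 (the symbols 𝑝 and 𝑛 are notation I am introducing here):

$$
P(\text{no success in } n \text{ attempts}) = (1 - p)^{n} \xrightarrow{\; n \to \infty \;} 0,
\qquad
P(\text{at least one success eventually}) = 1.
$$

The coin toss is the special case 𝑝 = 1/2.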

Now, let's apply that to our monkey, infinitely typing away at the typewriter.

What is the probability that six random consecutive keystrokes result in the string "Hamlet"?

First, the probability of each keystroke matching the right character is 1/(number of keys), assuming every key is equally likely.

Because the keystrokes are independent, the probability of a given string is the product of the probabilities for each keystroke matching the individual character.
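
Here is a small sketch of that product for "Hamlet". I am assuming a typewriter with 100 equally likely keys (the key count this thread uses for the full play), and `string_probability` is a name I made up for illustration. Exact fractions avoid floating-point rounding.

```python
from fractions import Fraction

def string_probability(text, num_keys):
    """Probability that len(text) uniform, independent keystrokes spell text."""
    per_key = Fraction(1, num_keys)  # assumption: every key is equally likely
    return per_key ** len(text)      # one factor of 1/num_keys per character

p_hamlet = string_probability("Hamlet", num_keys=100)
print(p_hamlet)         # 1/1000000000000
print(float(p_hamlet))  # 1e-12
```

So six random keystrokes spell "Hamlet" about once in a trillion tries.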

Now, let's calculate the probability that the entire Hamlet play by Shakespeare is typed randomly.

Since the entire play has 194270 characters, and there are 100 possible keys to hit, this probability is extremely small.

Still, it is larger than zero.
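
To put a number on "extremely small", using the character and key counts above and assuming uniform, independent keystrokes:

$$
p = \left(\frac{1}{100}\right)^{194270} = 10^{-2 \cdot 194270} = 10^{-388540} > 0
$$

That is a decimal point followed by 388539 zeros before the first nonzero digit.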

Thus, if our monkey keeps typing forever, the entire Hamlet play will appear somewhere with probability 1. (Along with every other finite string you can imagine.)
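
Hamlet is out of reach for any simulation, but a toy version shows the idea. This is only a sketch: the four-key keyboard "abcd", the short targets, and the function name `keystrokes_until` are hypothetical choices of mine. The monkey types uniformly random keys, and we count keystrokes until the most recent ones spell the target.

```python
import random

def keystrokes_until(target, keys, rng):
    """Type random keys until the last len(target) keystrokes equal target."""
    recent = ""  # sliding window holding the most recent keystrokes
    count = 0
    while recent != target:
        # Append one random key and keep only the last len(target) characters.
        recent = (recent + rng.choice(keys))[-len(target):]
        count += 1
    return count

rng = random.Random(42)
for target in ("a", "ab", "abc"):
    print(target, keystrokes_until(target, "abcd", rng))
```

Every run terminates in practice, and longer targets tend to need many more keystrokes.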

However, this takes a 𝑣𝑒𝑟𝑦 long time on average.

If the probability of a given string occurring in a single attempt is 𝑝 and the attempts are independent, the expected number of attempts to randomly generate it is 1/𝑝.

So, if 𝑝 is as small as randomly typing the entire Hamlet play, then 1/𝑝 is going to be astronomical.
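
The 1/𝑝 rule is easy to check on a toy case. In this sketch (the name `mean_attempts`, the run count, and the seed are my own illustrative choices) each attempt succeeds independently with probability 𝑝, and we average the number of attempts needed for the first success.

```python
import random

def mean_attempts(p, n_runs=20_000, seed=0):
    """Average number of independent attempts until the first success."""
    rng = random.Random(seed)
    total = 0
    for _ in range(n_runs):
        attempts = 1
        while rng.random() >= p:  # this attempt failed, so try again
            attempts += 1
        total += attempts
    return total / n_runs

# Hypothetical toy case: a 2-character string on a 4-key keyboard.
p = (1 / 4) ** 2
print(mean_attempts(p), 1 / p)  # simulated average next to the exact 1/p = 16.0
```

For the full play with 100 keys, the same rule gives 1/𝑝 = 10^388540 expected attempts.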

(If you are not familiar with the concept of expected values, take a look at the simple explanation I posted a while ago.)

https://twitter.com/TivadarDanka/status/1359876189943382017

This result is known as the infinite monkey theorem. It states that given infinite time, a monkey randomly smashing the keys of a typewriter will type any given text with probability 1.

Next time you say, "even a monkey can do it", be careful. Monkeys can do a lot.

Recently, I have been thinking about probability a lot.

In fact, I am writing the probability theory chapters of my book, Mathematics of Machine Learning. Early access is just out, and I publish one new chapter every week.

tivadar.gumroad.com/l/mathematics-…

I post several threads like this every week, diving deep into concepts in machine learning and mathematics.

If you have enjoyed this, make sure to follow me and stay tuned for more!

The theory behind machine learning is beautiful, and I want to show this to you.
