Pandas is a fast, powerful, flexible and open source data analysis and manipulation tool.

A Mega thread 🧵covering 10 amazing Pandas hacks and how to efficiently use it(with Code Implementation)👇🏻

#Python #DataScientist #Programming #MachineLearning #100DaysofCode #DataScience

A Mega thread 🧵covering 10 amazing Pandas hacks and how to efficiently use it(with Code Implementation)👇🏻

#Python #DataScientist #Programming #MachineLearning #100DaysofCode #DataScience

1/ Indexing data frames

Indexing means to selecting all/particular rows and columns of data from a DataFrame. In pandas it can be done using two constructs —

.loc() : location based

It has methods like scalar label, list of labels, slice object etc

.iloc() : Interger based

Indexing means to selecting all/particular rows and columns of data from a DataFrame. In pandas it can be done using two constructs —

.loc() : location based

It has methods like scalar label, list of labels, slice object etc

.iloc() : Interger based

2/ Slicing data frames

In order to slice by labels you can use loc() attribute of the DataFrame.

Implementation —

In order to slice by labels you can use loc() attribute of the DataFrame.

Implementation —

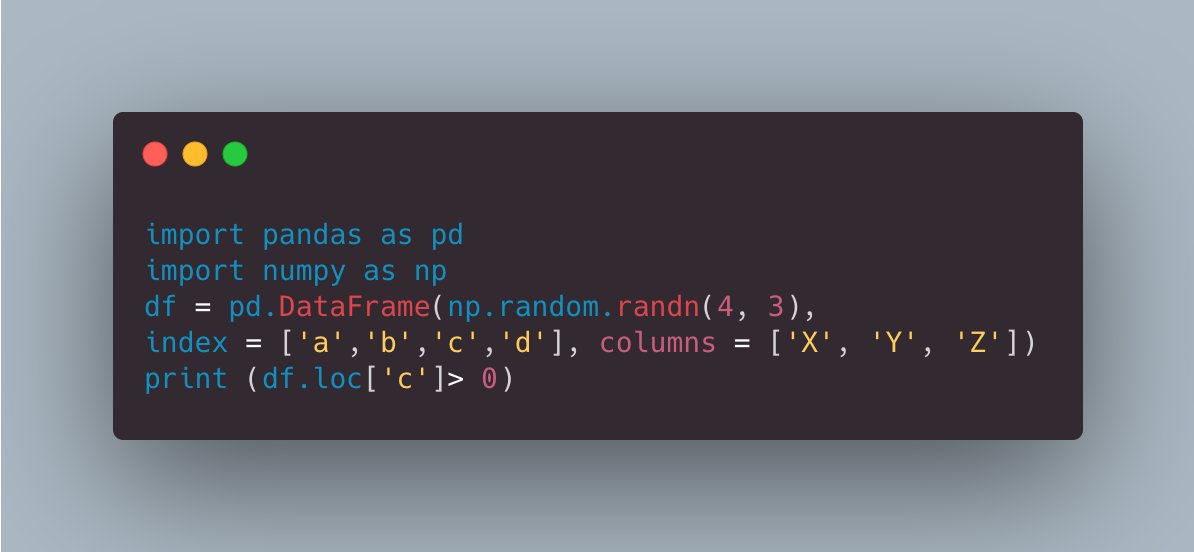

3/ Filtering data frames

Using Filter you can subset rows or columns of dataframe according to labels in the specified index of the data.

Implementation —

Using Filter you can subset rows or columns of dataframe according to labels in the specified index of the data.

Implementation —

4/ Transforming Data Frames

Pandas Transform helps in creating a DataFrame with transformed values and has the same axis length as its own.

Implementation —

Pandas Transform helps in creating a DataFrame with transformed values and has the same axis length as its own.

Implementation —

6. Hierarchical indexing

Hierarchical indexing is the technique in which we set more than one column name as the index. set_index() function is used for when doing hierarchical indexing.

Implementation —

Hierarchical indexing is the technique in which we set more than one column name as the index. set_index() function is used for when doing hierarchical indexing.

Implementation —

8/ Joins —

It helps us merging DataFrames. Types of Joins —

Inner Join :- Returns records that have matching values in both tables.

Left Join :- Returns all the rows from the left table that are specified in the left outer join clause, not just the rows in which the columns match

It helps us merging DataFrames. Types of Joins —

Inner Join :- Returns records that have matching values in both tables.

Left Join :- Returns all the rows from the left table that are specified in the left outer join clause, not just the rows in which the columns match

9/ Right Join :- Returns all records from the right table, and the matched records from the left table.

Full Join :- Returns all records when there is a match in either left or right table.

Cross Join :- Returns all possible combinations of rows from two tables.

Implementation-

Full Join :- Returns all records when there is a match in either left or right table.

Cross Join :- Returns all possible combinations of rows from two tables.

Implementation-

11/ Aggregate Functions

Pandas has a number of aggregating functions that reduce the dimension of the grouped object.

count()

value_count()

mean()

median()

sum()

min()

max()

std()

var()

describe()

sem()

Implementation -

Pandas has a number of aggregating functions that reduce the dimension of the grouped object.

count()

value_count()

mean()

median()

sum()

min()

max()

std()

var()

describe()

sem()

Implementation -

12/ I write quality threads on Data Science, Python, Programming, Machine Learning and AI in my free time. If you like this thread, then give a follow.

13/ Want to learn complete hands on #Python with Code Implementation? Try this ( 89% off) :

udemy.com/course/complet…

udemy.com/course/complet…

14/ 11 Amazing Data Science Techniques You Should Know!

With Code Implementation.

With Code Implementation.

https://twitter.com/NainaChaturved8/status/1438819014130618372

15/ 10 Efficient Code and Optimization techniques for Python with Code Implementation.

https://twitter.com/NainaChaturved8/status/1438236351388733450

16/ Join us in the 60 days of Data Science and Machine Learning journey --

medium.datadriveninvestor.com/day-6-60-days-…

#Python #TensorFlow #DataScientist #Programming #Coding #100DaysofCode #DataScience #AI #MachineLearning #hubofml #Pytorch #Pandas

medium.datadriveninvestor.com/day-6-60-days-…

#Python #TensorFlow #DataScientist #Programming #Coding #100DaysofCode #DataScience #AI #MachineLearning #hubofml #Pytorch #Pandas

• • •

Missing some Tweet in this thread? You can try to

force a refresh