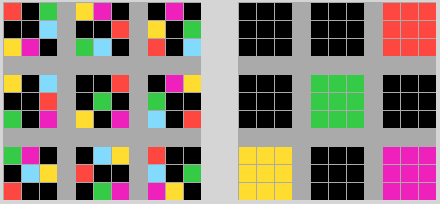

I like the "database layer" developed by DeepMind in their RETRO architecture:

deepmind.com/blog/article/l…

It teaches the model to retrieve text chunks from a vast textual database (by their nearest neighbour match of their BERT-generated embeddings) and use them when generating text
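To make the retrieval idea concrete, here is a minimal sketch of nearest-neighbour lookup over chunk embeddings. It is not DeepMind's code: `embed` is a toy bag-of-words stand-in for the BERT encoder, the cosine similarity metric is an assumption, and the chunks are made up for illustration.

```python
import math
from collections import Counter

def embed(text):
    """Toy stand-in for a BERT encoder (assumption): bag-of-words counts."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, chunks, k=2):
    """Return the k chunks whose embeddings are closest to the query's."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:k]

# Hypothetical database of text chunks.
chunks = [
    "the cat sat on the mat",
    "tennis players compete at the us open",
    "transformers use attention layers",
]
top = retrieve("who won the us open tennis final", chunks, k=1)
print(top)
```

In the real thing the retrieved chunks are fed back into the model while it generates; this sketch only covers the lookup step.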

It's a bit different from the "memory layer" I tweeted about previously, which provides a large learnable memory, without increasing the number of learnable weights. (for ref: arxiv.org/pdf/1907.05242…)

This time, the model learns the trick of retrieving relevant pieces of knowledge from a large corpus of text.

The end result is similar: an NLP model that can do what the big guns can (Gopher, Jurassic-1, GPT3) with a tenth of their learnable weights.

It gives you a couple of nice perks:

1) the text database can be tweaked post-training if you find dodgy stuff in it (bad language, factual inaccuracies, ...)

2) explainability: you can see which snippets of text are pulled from the database and used as a "reference" to generate a given answer!

3) And you can fairly easily add this "database layer" and its associated corpus of text post-training, with a fairly cheap fine-tuning step.

They call it RETRO-fitting an existing pre-trained model 😁.

But the real kicker is: can you add data to the text corpus, for example Tennis US Open results it had never seen before, and ask the model "who won the 2021 women's US Open?"

Since the model can be "RETRO-fitted" with new data fairly easily, you can, and the answer it gives is:

"Emma Raducanu - she defeated Leylah Fernandez 6-4 6-3 ..."

Quite impressive, although the authors note that other models (FiD) can beat it at question answering.
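The perks listed earlier (editing the database after training, seeing which snippets were used) and the US Open example can be sketched with a tiny editable chunk store. Everything here is hypothetical: the `ChunkDatabase` class, the chunk ids, and the word-overlap scoring (standing in for embedding similarity) are illustration only, not RETRO's actual interface.

```python
class ChunkDatabase:
    """Hypothetical editable text store; retrieval returns chunk ids for traceability."""

    def __init__(self):
        self.chunks = {}  # chunk_id -> text

    def add(self, chunk_id, text):
        # New knowledge goes into the database; no model weights change.
        self.chunks[chunk_id] = text

    def remove(self, chunk_id):
        # Dodgy content can be deleted after training.
        self.chunks.pop(chunk_id, None)

    def retrieve(self, query, k=1):
        # Word overlap stands in for nearest-neighbour search (assumption).
        q = set(query.lower().split())

        def overlap(item):
            return len(q & set(item[1].lower().split()))

        ranked = sorted(self.chunks.items(), key=overlap, reverse=True)
        return ranked[:k]  # (chunk_id, text) pairs show which snippets were used

db = ChunkDatabase()
db.add("misc-1", "an unrelated chunk about attention layers")
db.add("us-open-2021", "Emma Raducanu defeated Leylah Fernandez "
                       "in the 2021 women's US Open final")

hits = db.retrieve("who won the 2021 women's US Open")
print(hits[0][0])  # id of the snippet the answer would be grounded on

db.remove("us-open-2021")
hits_after = db.retrieve("who won the 2021 women's US Open")
```

Because the answer is tied to a chunk id, removing or correcting that chunk changes what the model can lean on without touching its weights.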
