Here is a thread about how to gain some permanent backlinks for around the price of a domain name.

I'm not saying you should do this.

Let's just say it's a very creative, hypothetical way to get backlinks though.

#seo #nichesites #contentsites #linkbuilding

🧵🪡

First things first, head to expireddomains.net and set yourself up an account.

Logging in gives you access to more information about the domain names that are being shown on the site.

When you're logged in, you want to head to the Deleted Domains page member.expireddomains.net/domains/combin…

The default view shows you all of the domains that have recently been deleted.

Now let's customise the view so it gives us more useful information. Click Column Manager, pick the columns you want and hit Save.

You can see my columns are more focused on backlinks.

Click back on Deleted Domains at the top and you should see the deleted domains with more data around backlinks.

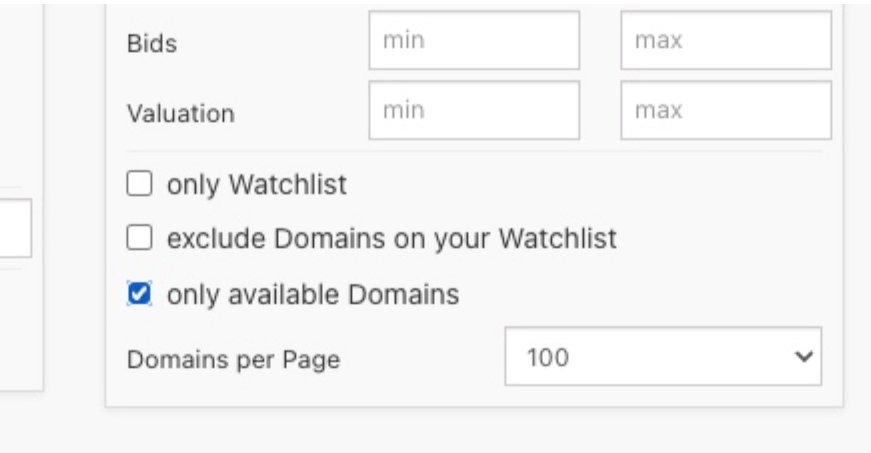

Now we obviously just want to see domains that are available here, so click Show Filter and tick this box on the Common tab.

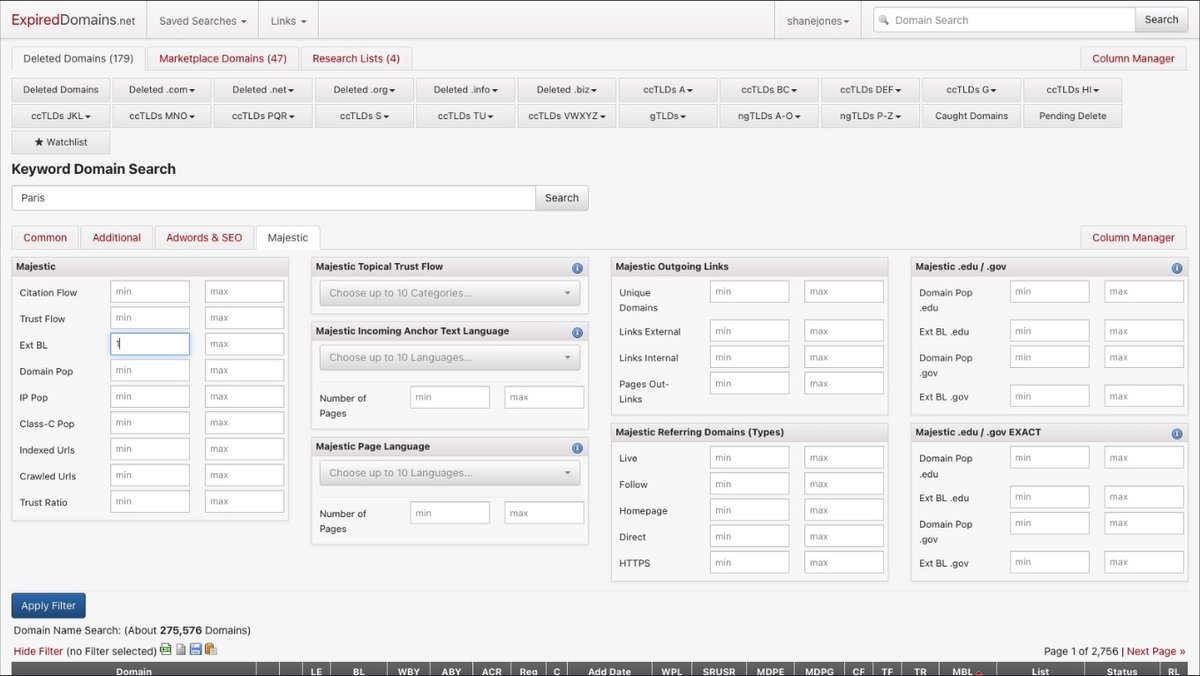

We also want to get rid of any domains that have no backlinks, so click on Majestic, look for Ext BL and set a Min of 1.

You can also search for domains that have .edu or .gov links using this filter too.

Click Apply Filter to see the list of domains update.

Let's say you're in a travel niche looking for a backlink to a Paris landing page.

Search for Paris at top right.

Then sort by MBL to see domains with the most backlinks according to Majestic.

I also have this view set to show just .co.uk and .com domain names using the filter.
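
If you'd rather shortlist from a list of domains in code, the same filtering logic could be sketched roughly like this. It assumes each row is a dict with `domain` and `backlinks` keys, which are hypothetical names and not the site's actual column names, and that the list only contains available domains.

```python
def shortlist_domains(rows, keyword, tlds=(".com", ".co.uk"), min_backlinks=1):
    """Keep keyword matches on the chosen TLDs, most backlinks first.

    `rows` is an iterable of dicts with hypothetical keys:
    "domain" (str) and "backlinks" (int or numeric string).
    """
    keyword = keyword.lower()
    matches = [
        row
        for row in rows
        if keyword in row["domain"].lower()
        and row["domain"].lower().endswith(tuple(tlds))
        and int(row["backlinks"]) >= min_backlinks
    ]
    # Same idea as sorting by backlink count in the table view.
    return sorted(matches, key=lambda row: int(row["backlinks"]), reverse=True)
```

Calling `shortlist_domains(rows, "Paris")` mirrors the search, TLD filter, minimum of 1 backlink and sort described above.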

It's a case of finding a domain that might fit what we're after here.

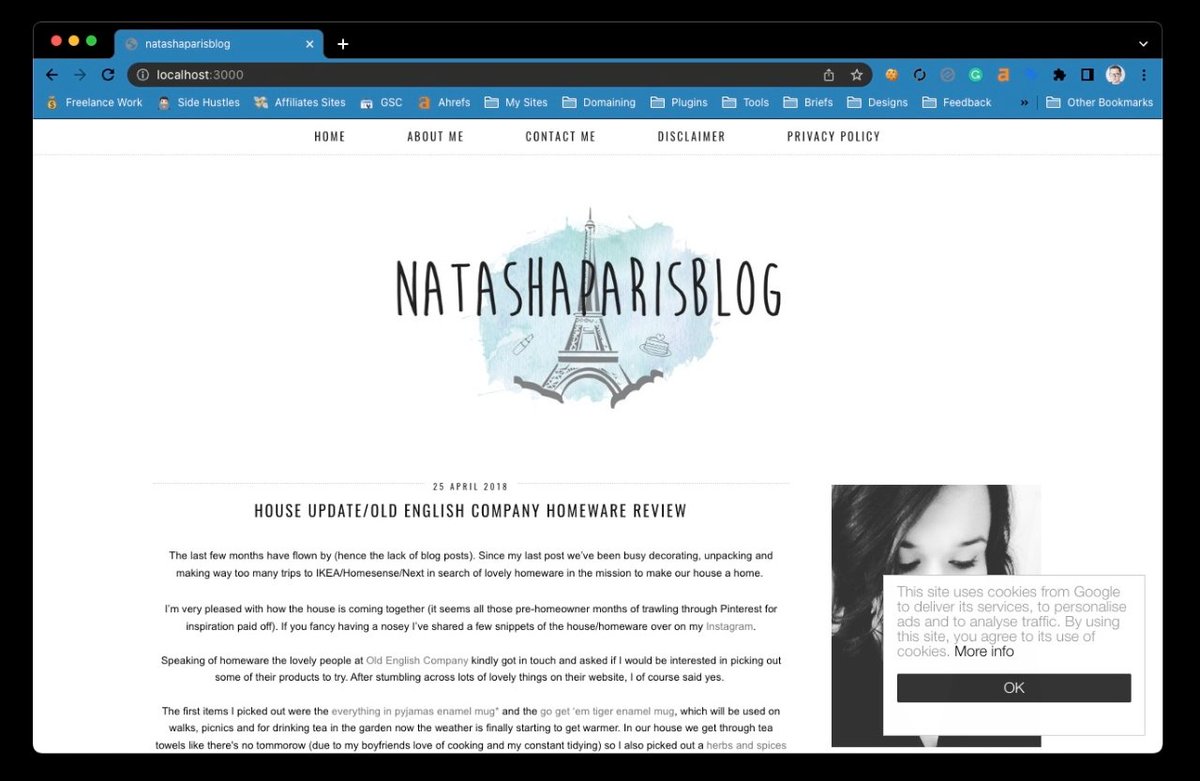

Here we can see a domain that might suit us. If you click the ACR link, we can inspect this site and see what it used to look like on the Wayback Machine.

This site turns out to belong to a blogger, so it could work for us.

The domain expired in 2019, so this blogger has likely moved on to other things and potentially no longer needs a website.

Diving into Ahrefs, you can see how many backlinks to this domain are still active and check further whether it's an OK candidate for what we're looking to do.

Back in Wayback Machine, look for the last date that this blog had content indexed.

When you can see the last best-looking version of the site, grab the URL string and look for the section with web/20180914042141

This is a timestamp, we'll need this later on.
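
If you want to pull that timestamp out in code rather than by eye, here's a minimal sketch. It assumes the usual snapshot URL shape, where a 14-digit `YYYYMMDDhhmmss` value follows `/web/`. The function names are mine.

```python
import re
from datetime import datetime

# A Wayback Machine snapshot URL carries a 14-digit timestamp after "/web/".
_SNAPSHOT = re.compile(r"/web/(\d{14})")


def snapshot_timestamp(url: str) -> str:
    """Return the 14-digit timestamp embedded in a snapshot URL."""
    match = _SNAPSHOT.search(url)
    if match is None:
        raise ValueError(f"no snapshot timestamp found in {url!r}")
    return match.group(1)


def snapshot_datetime(url: str) -> datetime:
    """Parse the timestamp (YYYYMMDDhhmmss) into a datetime."""
    return datetime.strptime(snapshot_timestamp(url), "%Y%m%d%H%M%S")
```

For the example above, the timestamp reads as 14 September 2018, 04:21:41.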

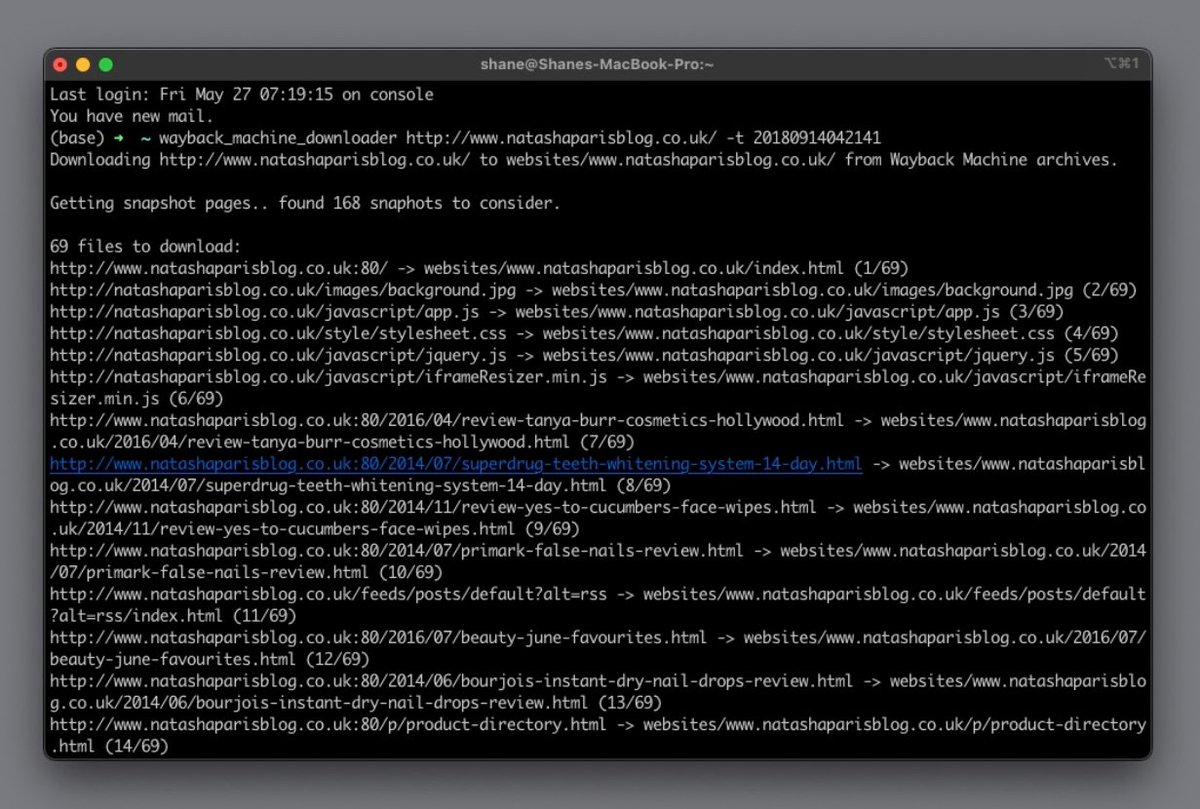

Now we want to scrape this entire website.

As a Mac user, I use github.com/hartator/wayba… to download sites from the Wayback Machine.

There are probably similar tools out there for Windows if you have a search around GitHub.

Follow the install guide and then head to your terminal.

Now let's scrape.

Using the commands in the documentation, add the domain name and that timestamp from WayBackMachine.

The tool will take a site snapshot from that date with all assets and generate this into a static website.
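
This isn't the tool from the thread, but if you want to preview which pages the archive holds up to that timestamp before scraping, a rough sketch is to build a query for the Wayback Machine's CDX search endpoint. The function name and the default `limit` are my own choices, and `example.com` stands in for the real domain.

```python
from urllib.parse import urlencode

CDX_ENDPOINT = "https://web.archive.org/cdx/search/cdx"


def cdx_query_url(domain: str, to_timestamp: str, limit: int = 50) -> str:
    """Build a CDX query listing captured URLs for `domain` up to a timestamp."""
    params = {
        "url": domain,
        "matchType": "host",         # every capture on this host
        "to": to_timestamp,          # nothing newer than the chosen snapshot
        "output": "json",
        "fl": "timestamp,original",  # only return these two fields
        "filter": "statuscode:200",  # skip redirects and errors
        "collapse": "urlkey",        # one row per distinct URL
        "limit": limit,
    }
    return f"{CDX_ENDPOINT}?{urlencode(params)}"
```

Open the URL from `cdx_query_url("example.com", "20180914042141")` in a browser to get a JSON list of captured pages.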

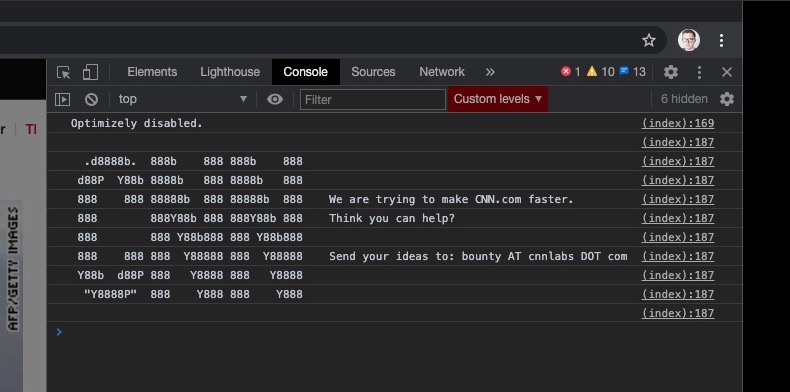

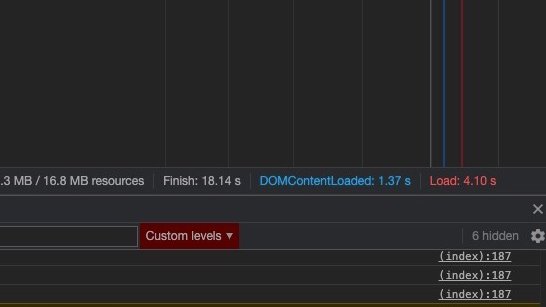

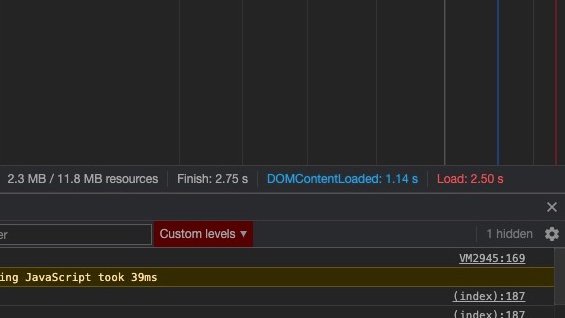

Once all the files have been downloaded, test what the site looks like.

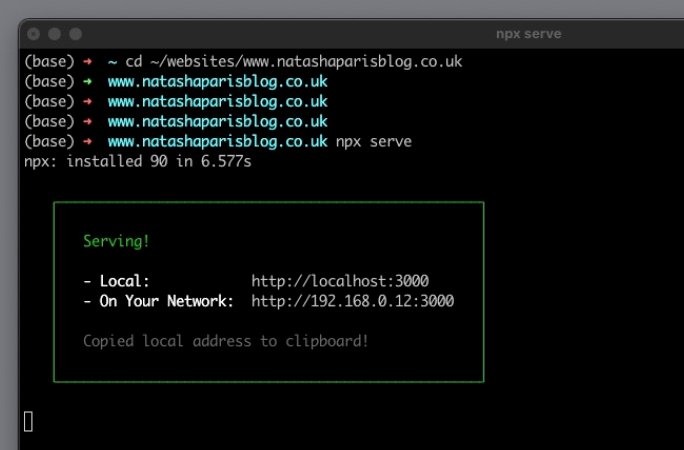

Browse to the folder where your files were downloaded. In my case it was

~/websites/

Go into the folder you downloaded and then run a local server using

npx serve
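
If you don't have Node installed, a similar local preview can be sketched with Python's standard library instead. This is an alternative to `npx serve`, not what the thread uses, and the function name and default port are my own.

```python
from functools import partial
from http.server import HTTPServer, SimpleHTTPRequestHandler


def make_preview_server(folder: str, port: int = 8000) -> HTTPServer:
    """Create a local-only static file server rooted at `folder`."""
    handler = partial(SimpleHTTPRequestHandler, directory=folder)
    # Bind to 127.0.0.1 so the preview is only reachable from this machine.
    return HTTPServer(("127.0.0.1", port), handler)


# Usage (blocks until you press Ctrl+C):
# make_preview_server("path/to/downloaded/site").serve_forever()
```

Then open http://127.0.0.1:8000 in your browser to check the scraped site.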

So we want a backlink on this site.

Using your code editing tool you can open up this site and either add a backlink somewhere or replace some content on the site with more relevant content and include a link back to your site.

Easy link building is easy.
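
If you'd rather script the edit than do it by hand, here's a minimal sketch that drops a link just before the closing body tag of a page. The function name, the example URL and the placement are all assumptions. In practice you'd probably want the link inside relevant content.

```python
import re
from html import escape


def add_backlink(page_html: str, url: str, anchor_text: str) -> str:
    """Insert a paragraph with a link just before the last </body> tag."""
    link = f'<p><a href="{escape(url, quote=True)}">{escape(anchor_text)}</a></p>'
    closing_tags = list(re.finditer(r"</body\s*>", page_html, flags=re.IGNORECASE))
    if not closing_tags:
        # No closing body tag in the scraped page: append at the end.
        return page_html + link
    cut = closing_tags[-1].start()
    return page_html[:cut] + link + page_html[cut:]
```

For example, `add_backlink(html, "https://example.com/paris", "Paris guide")` returns the page with the new link added at the bottom.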

Once you've edited the content, you essentially have a static website ready to host.

Netlify gives you free static hosting.

This will likely be the easiest website you've ever deployed too!

No FTP or SSH needed here!

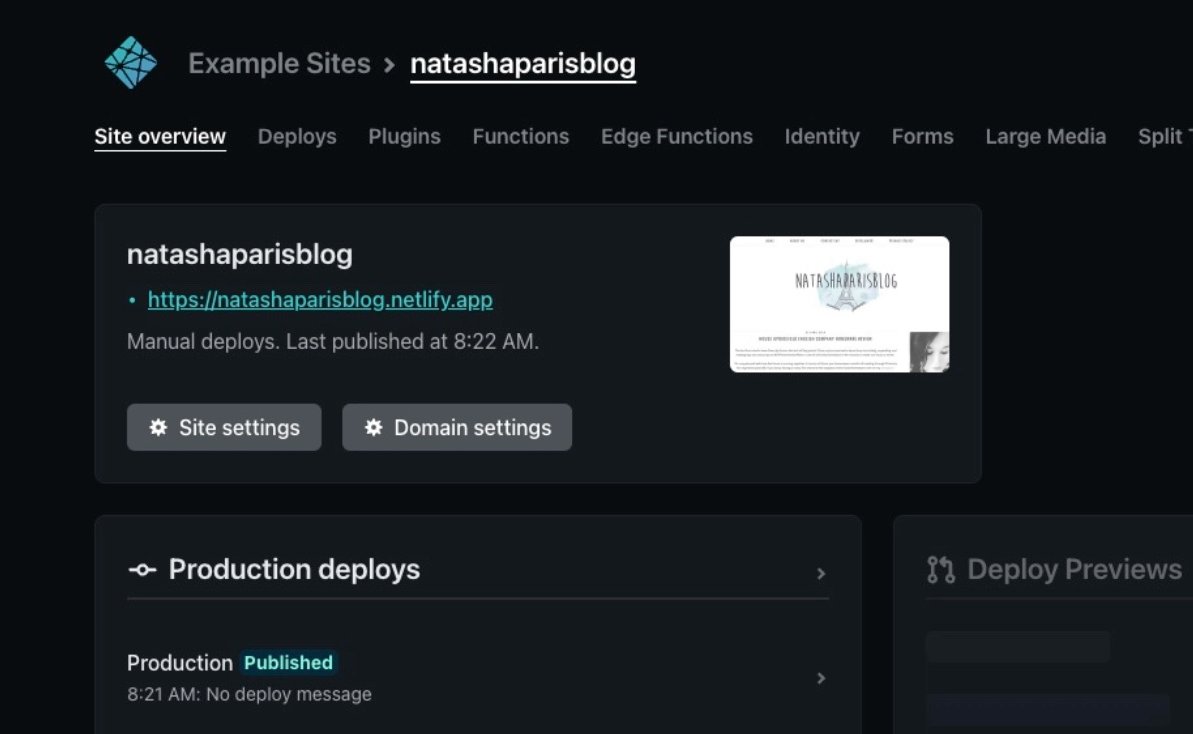

Once you're signed up for Netlify, you should see something like this.

Click browse to upload and select the folder which we want to host.

You should then see something like this. That's it, your site is deployed.

Netlify gives new sites random-string URLs. If you click Site Settings and then Change site name, you can change it to something more relevant.

Now, all we need to do is buy the domain name from your provider and point this to your Netlify instance.

The guide for that is here docs.netlify.com/domains-https/…

Once that is all done and once the search engines pick this site back up, you now have a nice link on an expired domain.

Obviously, there are a few more things you can do next, like optimise the scraped site, add a sitemap.xml and push that into Google Search Console, etc.
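
For the sitemap.xml part, a rough sketch could look like this. It assumes every .html file in the downloaded folder should be listed and that index.html pages are served at their folder URL. The function names and the example domain are placeholders.

```python
from pathlib import Path
from urllib.parse import quote
from xml.sax.saxutils import escape


def html_pages(site_dir: str) -> list[str]:
    """Relative paths (with forward slashes) of every .html file in the folder."""
    root = Path(site_dir)
    return [page.relative_to(root).as_posix() for page in root.rglob("*.html")]


def page_url(base_url: str, relative_path: str) -> str:
    """Map a file path to its public URL, treating index.html as the folder."""
    path = relative_path
    if path == "index.html" or path.endswith("/index.html"):
        path = path[: -len("index.html")]
    return base_url.rstrip("/") + "/" + quote(path)


def sitemap_xml(base_url: str, relative_paths: list[str]) -> str:
    """Build a minimal sitemap in the sitemaps.org 0.9 format."""
    lines = [
        '<?xml version="1.0" encoding="UTF-8"?>',
        '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
    ]
    for path in sorted(relative_paths):
        lines.append(f"  <url><loc>{escape(page_url(base_url, path))}</loc></url>")
    lines.append("</urlset>")
    return "\n".join(lines) + "\n"
```

Write the result of `sitemap_xml("https://example.com", html_pages("path/to/site"))` to sitemap.xml in the site folder before you deploy.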

Then again, you probably shouldn't do this at all.

It's all very dodgy this stuff.

If it's someone's old blog, you should really remove any images and source your own.

Maybe even the content too as this content could have been migrated elsewhere and be duplicated.

If the site you choose turns out to be an old brand's website, they could still own certain assets for that site and you could end up in some hot water, with legal teams sending cease and desists and other things.

Maybe a better approach would be to buy that domain, relaunch it with your own content / assets and redirect old links to new pages and add your links there.

But I guess you'll be slowly heading down the PBN route then.
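
If you did go the relaunch route on Netlify, the redirects for the old links can live in a `_redirects` file at the root of the deployed folder, one `old new status` rule per line. A minimal sketch for generating that file follows. The function name is mine and any paths you pass in are your own mapping.

```python
def netlify_redirects(mapping: dict[str, str]) -> str:
    """Render permanent (301) redirect rules, one per line, for a _redirects file."""
    lines = []
    for old_path, new_target in mapping.items():
        if not old_path.startswith("/"):
            raise ValueError(f"old path must start with '/': {old_path!r}")
        lines.append(f"{old_path} {new_target} 301")
    return "\n".join(lines) + "\n"
```

For example, `netlify_redirects({"/old-post.html": "/paris/"})` gives you a single rule that sends the old URL to the new page.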

So let's say this thread was just for educational purposes and leave it there.

Learning the sketchy #SEO techniques is just as important as the non-sketchy ones.

Give me a follow if you want to see some more threads like this. twitter.com/shanejones

Thanks for coming to my TED talk.

Happy Friday!
