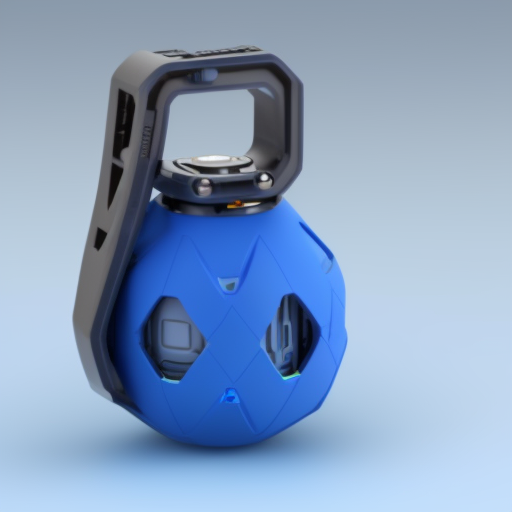

Tonight's dreamboothing is about grenades 💣(!).

I made a #dreambooth finetune from a few Sci-Fi grenade illustrations.

Explosive thread 🧵👇

#AI #StableDiffusion

Green and red? Plus, the elongated "spray shape" is worth trying. Thanks, #img2img 🙏

I was a bit less successful with more elaborate prompt engineering, but I'll keep pushing. Example: "A soldier is holding a red grenade in his hand, HDR, 8K, realistic".

If you like this thread, feel free to like/RT/comment, or follow. More experiments & feedback on the amazing #Dreambooth tech coming soon.

@natanielruizg & his team rock :)

All this was quickly generated (10 minutes) using very simple prompts (e.g. “a blue grenade”). No more endless prompting with random, inconsistent results.

Cut through the noise and get straight results with Dreambooth (and soon with us :)
