People loved the "treasure chests" #AI experiment I posted yesterday! However, the chests were all closed 🤨.

So I quickly ran a second training on a different dataset, and this time, gold and diamonds are overflowing from the open chests! 🪙🤑💰

https://twitter.com/emmanuel_2m/status/1588249020182368258

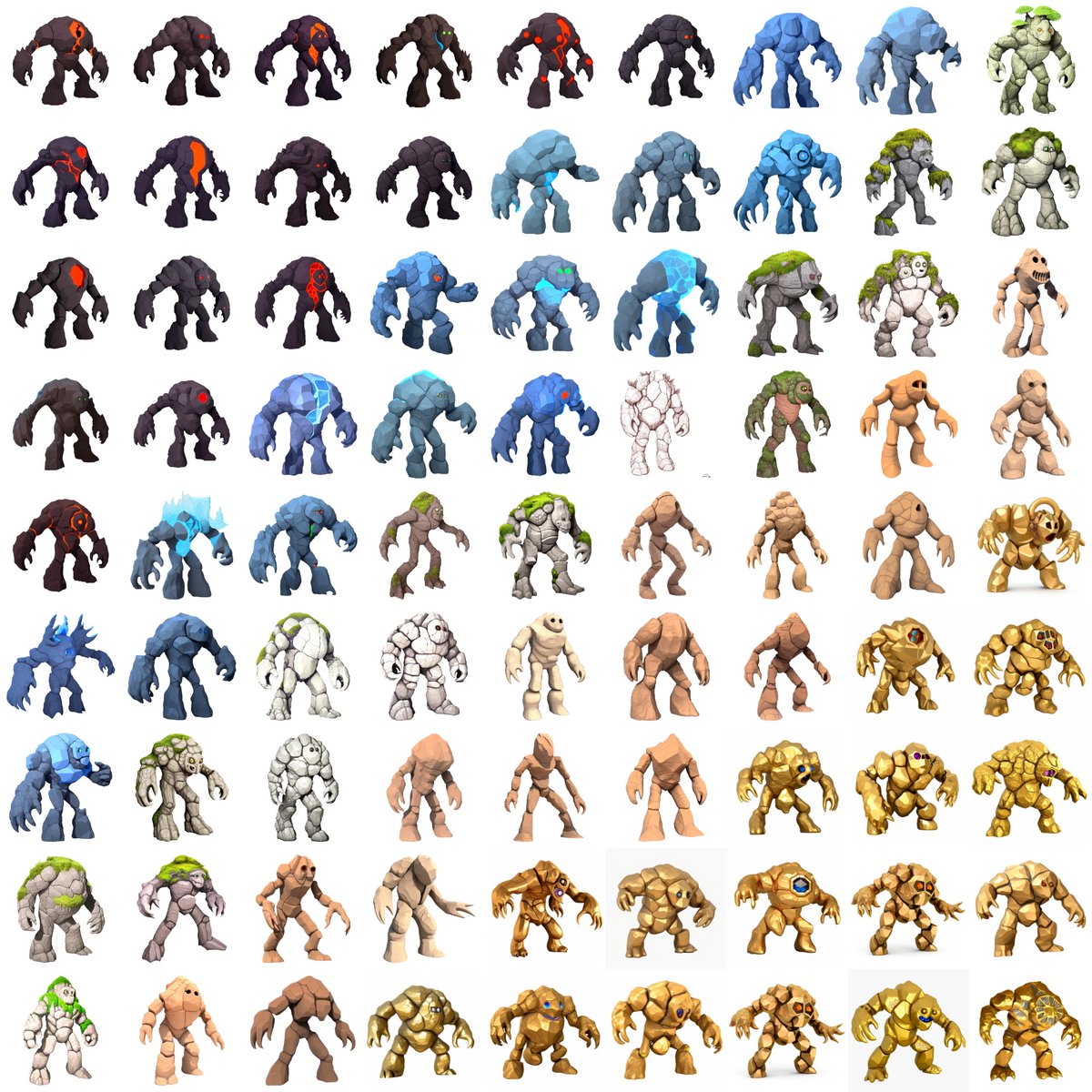

I also used #img2img to instantly generate dozens of variants, "inspired" by a single original photograph.

This provides consistent assets (similar shape, size, or materials) with some slight variations. It's up to the artist/user to select which one looks best.

Same thing here, using #img2img, but this time I prompted "steel chest" instead of "wooden chest".

While it's not 100% perfect, there's still more steel in these chests than in the previous ones.

Also, some of the assets are disjointed or show anomalies, so some fine-tuning is still necessary.

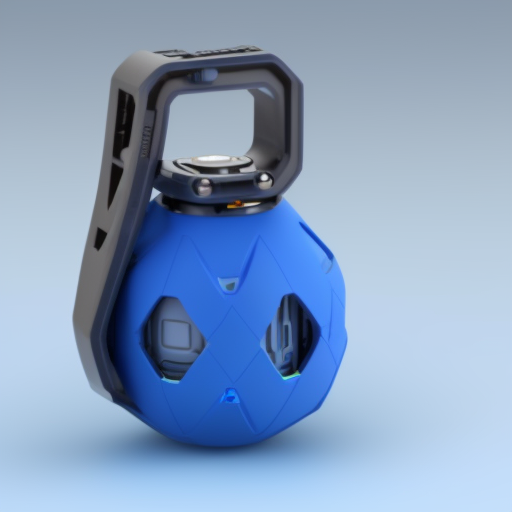

Sometimes, you get surprised! I used this green icon to run some #img2img prompts and... I didn't expect to see these results 😂😅