My opinions only here.

👨🔬 RS @DeepMind, @Midjourney 1y 🧑🎓 DPhil @AIMS_oxford @UniofOxford 4.5y 🧙♂️ RE DeepMind 1y 📺 SWE @Google 3y 🎓 TUM

👤 @nwspk


https://x.com/MFarajtabar/status/1930707591648493730

The paper explores four puzzle environments: Tower of Hanoi, Checkers Jumping, River Crossing, and Blocks World.

https://x.com/MFarajtabar/status/1930707624032653487

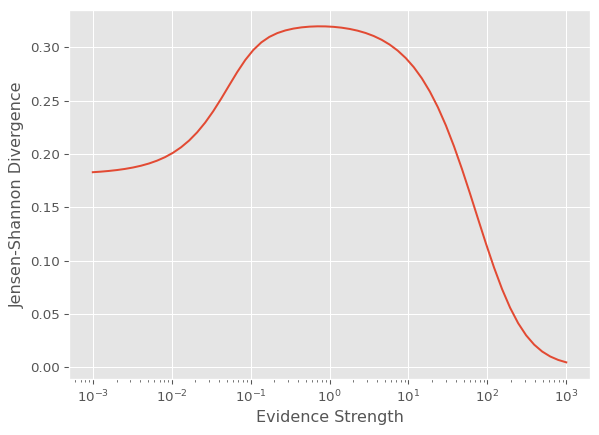

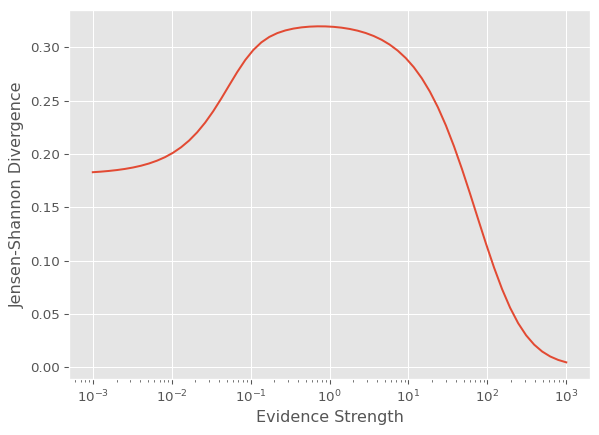

We show that the approximation is actually an upper bound and characterize the approximation error


https://twitter.com/BlackHC/status/1310277321962778624

I'm speechless given the quotes of the authors in the article and how they could think that it is in any way helpful to the sensitive debates that are happening.

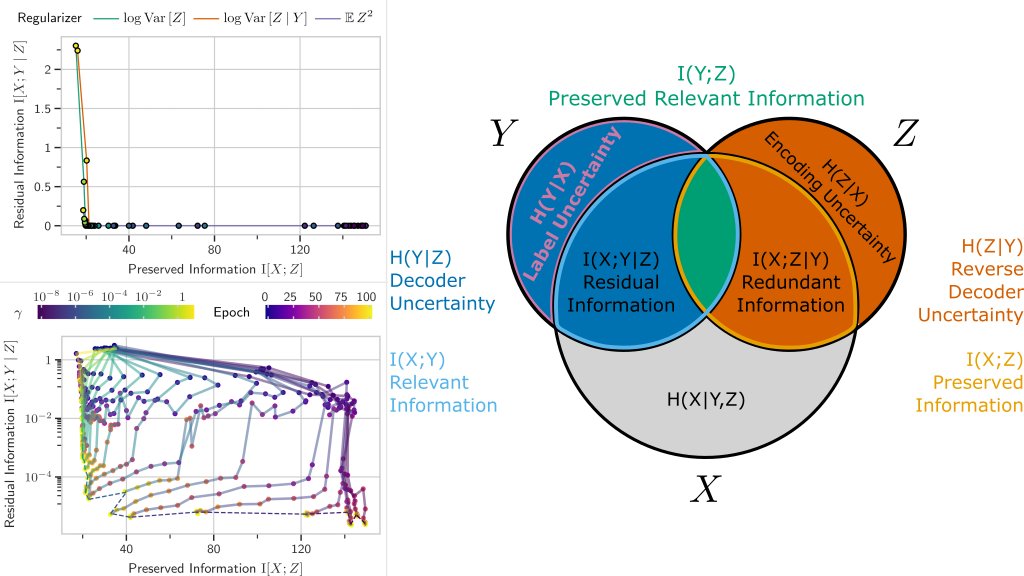

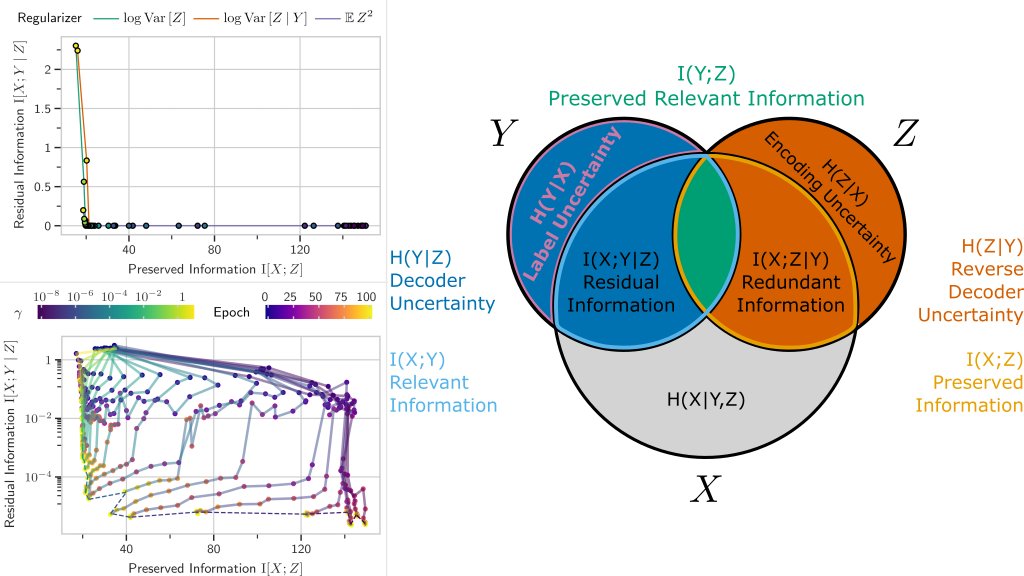

Our paper "Unpacking Information Bottlenecks: Unifying Information-Theoretic Objectives in Deep Learning" shows that well-known dropout regularization with standard cross-entropy loss and simple regularizers optimizes IB objectives in modern DNN architectures.


https://twitter.com/PeterKolchinsky/status/1237581727867924488

I'll wear my conspiracy hat for a second: given all we know, and all that stakeholders must have known earlier, is the current inaction on stricter containment gross negligence born of stupidity and recklessness by our leaders, or are some condoning the consequences:

https://twitter.com/steipete/status/1216124320419926018

The example replaces repetitive code with a neat (👈literally) deduplicated abstraction. The problem is that it also ties the interface down to the chosen abstraction.
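A hypothetical Python sketch of the trade-off described in the tweet. All function names are illustrative assumptions and do not come from the referenced example. Two near-duplicate formatters are collapsed into one shared helper. Once a new case (a guest without an email) doesn't fit that helper's fixed interface, the shared code has to grow an optional parameter and a branch that every caller now inherits.

```python
from typing import Optional


# Hypothetical "before": two near-duplicate formatters that are free to diverge.
def format_user(name: str, email: str) -> str:
    return f"User: {name} <{email}>"


def format_admin(name: str, email: str) -> str:
    return f"Admin: {name} <{email}>"


# Hypothetical "after": one deduplicated helper. Every caller must now
# supply exactly (role, name, email) and accept the shared layout.
def format_person(role: str, name: str, email: str) -> str:
    return f"{role}: {name} <{email}>"


# A new requirement (guests without an email) does not fit that interface,
# so the shared helper grows an optional parameter and a branch that all
# other callers now carry too.
def format_person_v2(role: str, name: str, email: Optional[str] = None) -> str:
    suffix = f" <{email}>" if email is not None else ""
    return f"{role}: {name}{suffix}"


if __name__ == "__main__":
    print(format_person("User", "Ada", "ada@example.com"))  # User: Ada <ada@example.com>
    print(format_person_v2("Guest", "Bob"))  # Guest: Bob
```

The duplicated versions could each have changed independently. The deduplicated one trades that freedom for less repetition.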

https://twitter.com/AnimaAnandkumar/status/1210401443808591872

Part of these debates seems to be a question of association and feelings vs facts and boundaries 🤔

https://twitter.com/sgouws/status/1179143353587380225

You probably don't even know what your model does.

"How can trusted news providers reach young audiences?"

https://twitter.com/BlackHC/status/963215455925260288

2/ dropbox.com/s/0r6fdm1kqdc9… does this sound like me?