One sidelight on the Russian protests today: #Navalny is probably the single most consistent target of Russian disinfo and influence operations.

He's been a target for at least 8 years, by ops including the Internet Research Agency, Secondary Infektion, and the Kremlin.

Way back in September 2013, @Soshnikoff investigated the then newly founded Internet Research Agency, and reported that it had been trolling Navalny when he ran for Mayor of Moscow.

mr-7.ru/articles/90769/

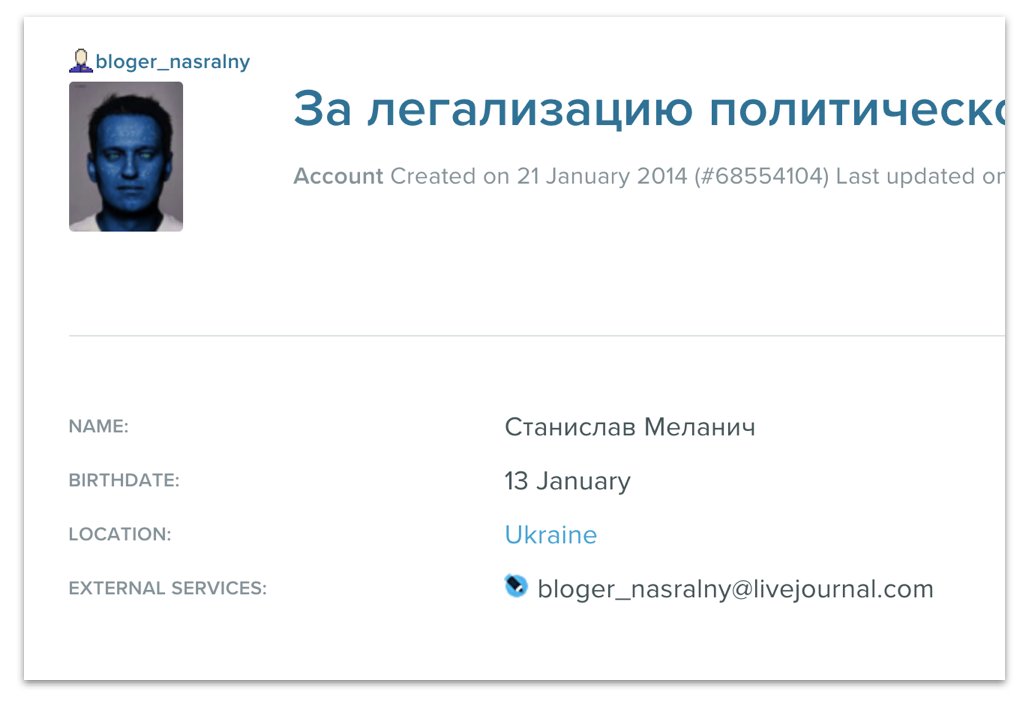

January 2014: op Secondary Infektion set up its most prolific persona, with a pic of Navalny’s face painted blue. It started out by attacking the Russian opposition.

The username, bloger_nasralny, is a toilet pun on his name.

Secondary Infektion kept coming back, posting screenshots of communications that exposed Navalny as [insert pejorative here].

Weirdly, the only places those screenshots showed up were in posts planted by the operation.

August 2017...

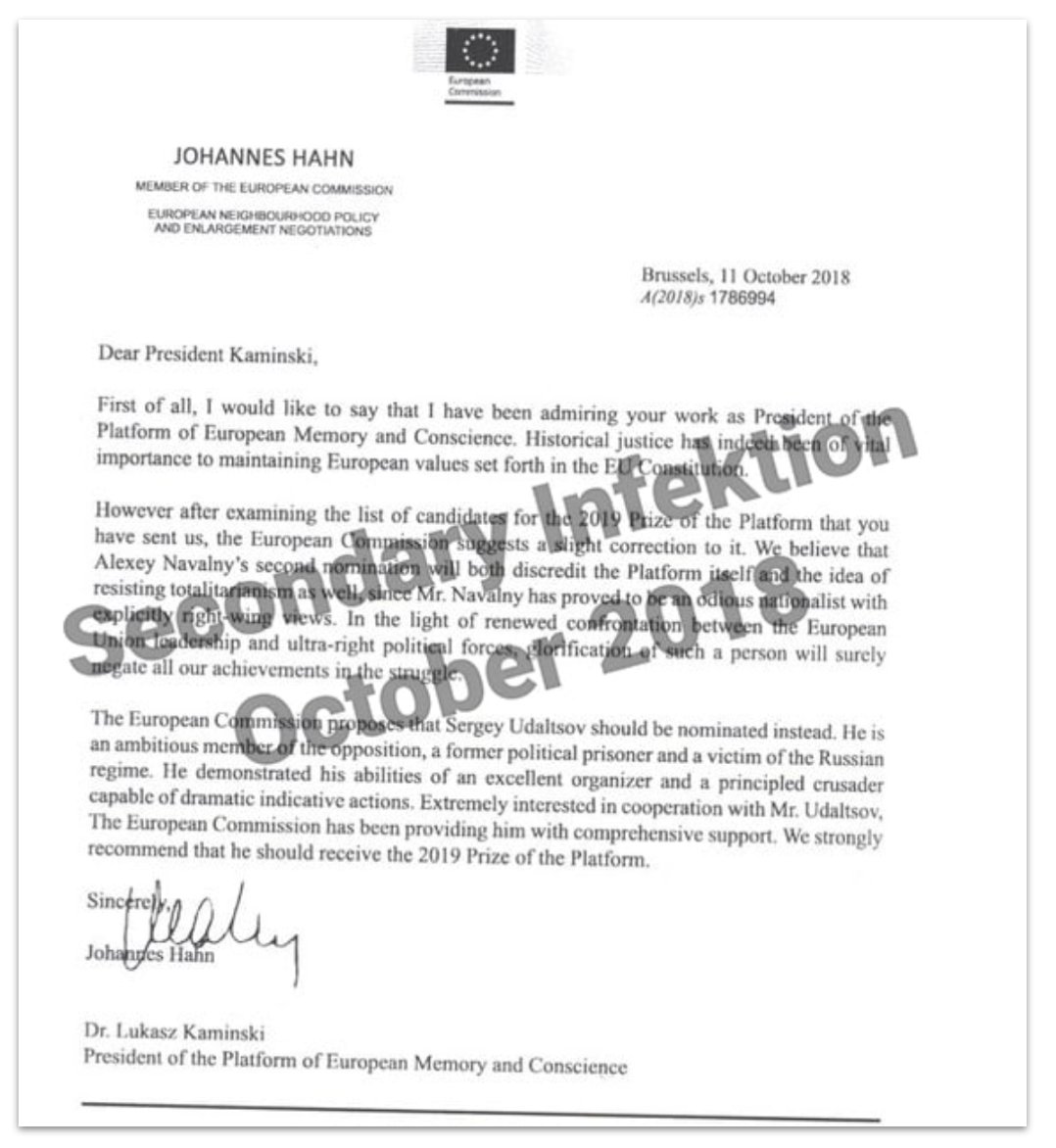

July 2018: a forged letter purporting to show the EU Commission calling Navalny an "odious nationalist."

A narrative you'll still hear from Russian state outlets and employees today.

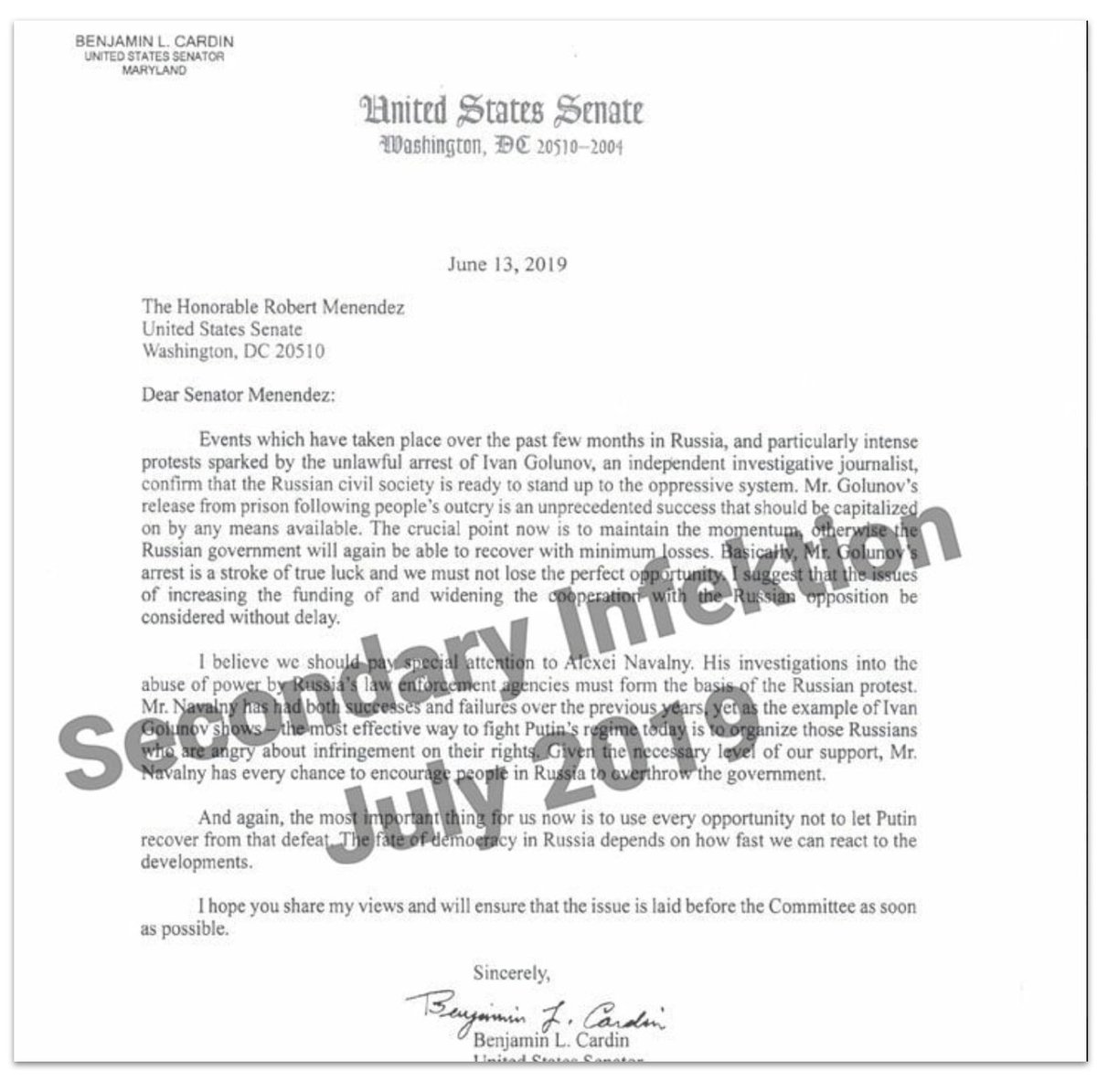

And yet another SI forgery from July 2019, this time trying to link Navalny to the US.

Secondary Infektion loved faking letters from the Senate.

(For @Graphika_NYC's deep dive into Secondary Infektion, see secondaryinfektion.org/report/seconda…)

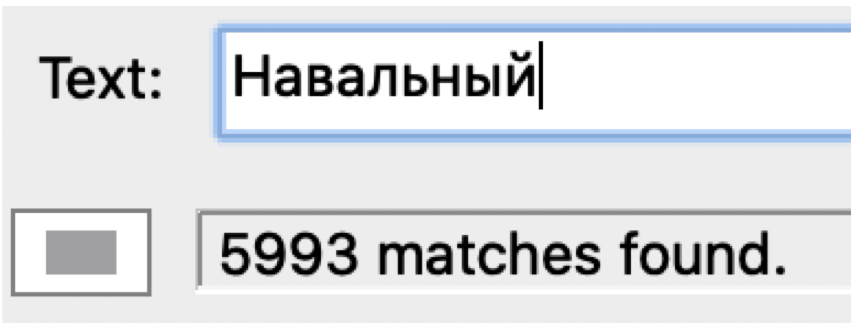

It wasn't just Secondary Infektion. According to Twitter's archive of influence ops, the Internet Research Agency mentioned Navalny close to 6,000 times.

(Screenshot from a scan of the earliest archive, covering the period up to 2017.)

In late 2020, the network of fake websites first exposed by @alexejhock and @DanielLaufer ran fake stories smearing Navalny, too. One was picked up by mainstream Russian media.

(Abendlich Hamburg was a pro-Kremlin fake site.)

graphika.com/reports/echoes…

Add to that the various Russian state outlets that either make or amplify claims that Navalny is a tool of the West, and the Kremlin's own claims that he has CIA handlers.

So overall, Navalny looks like the single most consistent target of Russian and pro-Russian IO since 2013.

But despite the attacks, he just got over 70 million views on his latest video on official corruption in Russia.

Despite... or because of?