Ever heard of meta-science?

I interviewed @profjamesevans on:

- how 🧪 science happens

- why small teams make big scientific breakthroughs

- similarities between startups and 🔭 scientific endeavors

- what research shows about the path to success

1/ @profjamesevans is the Director of Knowledge Lab and a faculty member in the Sociology department at the University of Chicago.

He uses machine learning to understand how scientists think and collectively produce knowledge.

Watch the entire podcast here:

3/ I'm going over the interview once again and will be posting key insights in this 🧵 thread

Keep reading 👇

4/ Meta-science, which is the science of science, aims to explore a simple but fundamentally important question: how do we run science better?

With big data and ML available today, studying how big breakthroughs happen in science has become a tractable problem.

5/ Meta-science is ultimately about creating leverage.

We can leave science to progress organically, or we can ask how best to invest our $ and time to make scientific progress.

6/ Ultimately, the knowledge gained through meta-science will help all the fields (from cosmology to epidemiology) and that's what makes it exciting and important.

7/ Science is not an automatic truth machine.

We can and should influence how science happens.

Like other fields, it is a human enterprise. And the output of this enterprise - scientific knowledge - depends on how you put humans together, their dynamics, their incentives.

8/ Why should we study the incentives of scientists?

Because we want scientific knowledge to be both ROBUST and REPLICABLE.

Replicable => a genuine truth, not random.

Robust => applies in a wide variety of contexts.

9/ As you may know, there's been a replication crisis in many scientific fields.

A high percentage of published genetic studies, psychology effects, and so on failed to replicate.

See this paper: journals.plos.org/plosmedicine/a…

10/ We can prevent a replication crisis by making publishing criteria stricter, and that's what has happened in many fields.

But such restrictions have led to boring and safe research.

When you cut off the variance, you cut it out from both ends.

11/ @profjamesevans says that it is a "perceived" replication crisis, not an actual one, because replication has always been hard.

It's not a new phenomenon.

Science is a messy process, and it doesn't always produce nice, clean, generalizable truths about the world.

12/ "Science is a commitment to the unfolding of truth, .. so it's necessarily dynamic".

Science is an approximation to a mythical truth. We get closer and closer to it, but we'll never know how far we are from it.

13/ If scientific theories aren't truths, how come some theories (such as relativity) seem so firmly established that they're indistinguishable from the truth?

One thing to keep in mind is that an individual scientist cannot know and verify everything.

14/ The establishment of foundational truths in fields then can happen:

- Either through the convergence of evidence

- Or through convergence of scientists' opinion

Usually, it's a mix of both.

15/ The latter - where "truth" gets established through a convergence of opinion in a clique - is a function of how interconnected a scientific community is.

The more scientists talk and collaborate, the faster they gravitate towards similar methods and foundational principles.

16/ This overlap in thinking patterns, methods and language used by a scientific community is problematic because the community may have converged on a wrong (or non-robust) truth.

Read about the "Lyme Wars" health.harvard.edu/blog/lyme-dise…

17/ In this sense, "open science" leads to faster convergence of opinion, leading to higher fragility and less diversity in the theories.

This is why @profjamesevans champions "closed science".

18/ Don't let different scientific groups talk to each other so they explore a variety of approaches.

For all scientific problems, we need diversity in methods, approaches, data, theories to arrive at robust truths.

19/ What we want from science is ROBUSTNESS first and then REPLICABILITY.

(Interestingly, this is what differentiates applied science happening in industry from science happening in academia.

Industry science needs to scale, and not just get published).

20/ So to discover robust truths, we need a diversity of approaches. How do we get diversity?

We need to celebrate failure. Most approaches will fail and if we incentivize scientists to only publish positive results, what we will get is positive results.

No surprise there!

21/ If we start all our scientific research projects with the assumption of publishing a positive result at the end, we will never discover anything new.

Research by definition should FAIL as there are more wrong truths out there than the correct ones.

22/ How do we enable failure in science?

We should learn from the venture capital community. VCs make a portfolio of uncorrelated, high-risk bets that enables startups to explore a wide variety of approaches.

https://twitter.com/paraschopra/status/1363867622085812229?s=21

23/ Right now, the scientific funding process expects each project proposal to succeed.

If VCs expected every company to succeed, we would never get any breakthrough companies.

The VC model works because they expect most companies to fail.

We should do the same for science.

24/ Broadly, @profjamesevans says "we need to fail more in order to succeed".

In fact, he studied how success happens across startups, science, and other fields and that is what he found: arxiv.org/abs/1903.07562

25/ The gentleman-scientists of the Victorian era are a fantastic example of this.

Scientists like Charles Darwin were independently wealthy, so they didn't have continual pressure to publish positive findings (which is the case today).

26/ How do we do this today?

Institutions like @gatesfoundation explicitly fund high-risk scientific projects like toilets for the developing world.

By doing this, they normalize scientific risk-taking.

27/ Another way we should promote risk-taking is group evaluation, not project evaluation.

Bell Labs was a great example of this. They had a mix of projects with various risk levels, so people knew they wouldn't be out of a job if their risky project failed.

28/ Ultimately, if people know they'll lose their job if they take a risk and fail, they will simply not take any risk.

Today, @HHMINEWS does this by funding individual scientists for the long term (say 5-15 years), and this job security leads HHMI fellows to do higher-impact science.

29/ GOLD QUOTE by @profjamesevans ->

"Anything that enables individuals to have a risk portfolio of opportunities increases their likelihood of undertaking risk in any particular (research) investment"

This is modern portfolio theory applied to science :)
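
The portfolio argument can be made concrete with a little arithmetic. The sketch below is not modern portfolio theory proper and is not from the interview; it only assumes, for illustration, that each risky project succeeds independently with the same probability (the 10% figure is a made-up number). Under that assumption, the chance that at least one of n bets pays off is 1 - (1 - p)^n.

```python
def chance_of_any_success(p_single: float, n_bets: int) -> float:
    """Probability that at least one of n independent bets pays off.

    Assumes every bet has the same success probability and that
    bets are independent (a simplification for illustration).
    """
    return 1.0 - (1.0 - p_single) ** n_bets


# Hypothetical numbers: each risky project has a 10% chance of working.
for n_bets in (1, 5, 10):
    print(n_bets, round(chance_of_any_success(0.1, n_bets), 3))
```

With these assumed numbers, a scientist holding one risky project has about a 10% chance of a win, while one holding ten has about a 65% chance of at least one, which is why a portfolio makes any single risky bet easier to accept.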

30/ In today's environment:

Scientists' incentives => citation.

How do you get citations? Publish stuff similar to what's already been published.

So for an individual scientist, incremental research is a LOGICAL strategy to pursue.

31/ What we want though is new breakthroughs.

Our incentives => breakthroughs.

How do you get breakthroughs? By charting the unknown territories.

So for the world, innovative research is a LOGICAL strategy.

32/ So,

What makes sense for a scientist != what makes sense for science.

We need to break this.

33/ @profjamesevans's research shows that original, off-the-beaten-path research gets cited less (on average) than incremental research.

But also that the top-cited papers come from original, off-the-beaten-path approaches.

This shows if we change incentives, we can accelerate scientific progress

34/ There's also a generic lesson here: HAVE A PORTFOLIO OF PROJECTS.

If you have a single project, you'll hold on to it for dear life, and all your biases will push you to believe it is truer or more important than it actually is.

35/ The fun thing about startups and industry is that they're incentivized to discover WHAT IS TRUE, and not WHAT OTHERS THINK TO BE TRUE.

Because academic scientists are dependent on grants, they're incentivized to publish what grant-makers (peers often) think is true.

36/ One interesting fact I learned from @profjamesevans is that over time, the average age of Nobel Prize winners has been increasing while the average age of inventors has roughly remained the same.

Why would that be?

37/ He thinks it's probably because academia is more connected than before and because of review boards, there's pressure to produce knowledge that's consistent with everything else we currently know.

This reduces the generative potential of science.

38/ Inventions and patents, on the other hand, do not depend on what others think BUT whether it works and whether it is unique.

The feedback in academia is from other people.

The feedback in the industry is from the real world.

39/ It's easy to see why selection pressure from other people can impede progress.

Academic communities expect all new theories to EXPLAIN everything that the current best theories explain + predict something new.

This creates a path dependence.

40/ A theory that explains something new but is INCONSISTENT with an "established" fact like the big bang will find it difficult to get published.

But we forget that the big bang was also inconsistent with facts that were "established" when it was proposed.

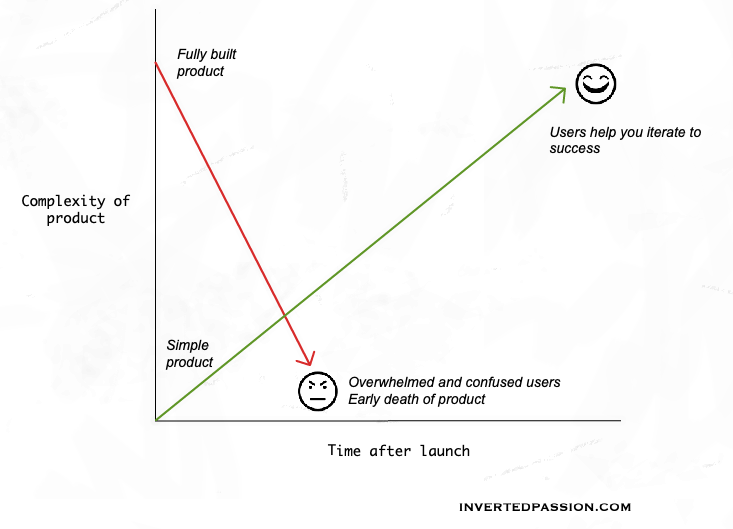

41/ We have a very high bar for theories right now.

Some approaches may simply need time to evolve, and demanding that their v0.1 beat the current leaders is asking for too much.

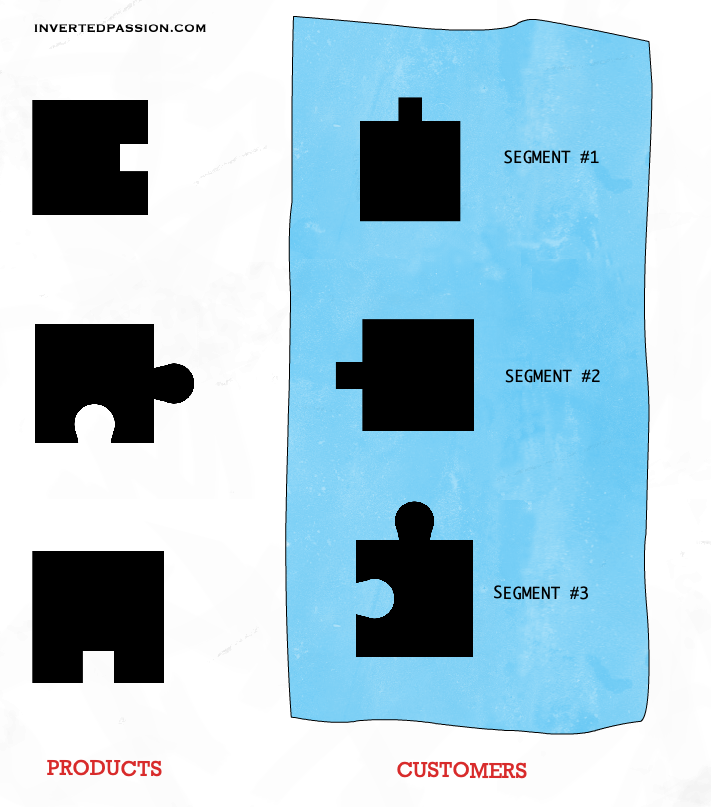

42/ Imagine if we expected all startups to be better than big companies at EVERYTHING. No startup would even get started that way.

We expect startups to be better than big companies at narrow things and evolve from there.

But we don't extend the same allowance to new scientific theories.

43/ We should encourage independent theories that are INCONSISTENT with each other, which may evolve to be eventually consistent with each other.

e.g., the standard model of cosmology is well established but requires dark matter and dark energy, which nobody has directly observed.

44/ There are alternative theories that do not require the existence of dark matter or dark energy, but these are perceived to be minority / crazy approaches because the mainstream physics community has a high commitment to the standard model.

This impedes progress.

45/ As today's (publishable) science has evolving standards that require new theories to absolutely crush existing theories that have taken 100s of years to evolve, it would take a genius working for 100s of years in a basement to surpass those high standards.

That won't happen.

46/ "Fixation on importance of consistency across theories is deeply problematic because it assumes a simple mechanistic universe."

"None of our theories are true, they're useful and good but not true".

47/ Equations and laws are not generating stuff in the universe.

No, stuff is being generated by reality itself and we're simply approximating the generative process via our theories.

Since our theories are approximations and not truth, consistency between theories is overrated

48/ We need to be careful not to mistake our scientific models for reality.

If we do that, we cut off the possibility of failure, and progress depends on failure.

Let's not forget that we're simply approximating reality with our theories.

49/ Changing gears, what predicts success?

@profjamesevans did research across domains (startups, science, terrorism) and found out that ACCELERATING FAILURES is the best predictor of success.

Here's the paper: arxiv.org/abs/1903.07562

50/ Here's the advice he gives for success:

- The first thing you do should be TOTALLY UNRELATED to what other people are doing

- You'll likely fail with your first radical jump

- From there, iterate to success by making less and less radical jumps

51/ So, success is not just about accelerating failure. It is about jumping to uncharted fields and then hill-climbing the local region there to a higher plane of success.

52/ What you should NOT do:

a) Do what everyone else is doing (more competition)

b) Keep making radical jumps one after another (you never learn from failures).

"Few radical jumps and many fast iterations" is the key to success.
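
To make the "jump, then hill-climb" picture concrete, here is a toy sketch. Everything in it is an assumption for illustration and not from the interview or the paper: the one-dimensional `LANDSCAPE` of made-up payoff values, the starting position in a "crowded" region, and the hypothetical jump target. It compares iterating where everyone already is against making one radical jump and then iterating in small steps.

```python
# Made-up payoff landscape: a small hill near the start, a taller one far away.
LANDSCAPE = [1, 2, 3, 2, 1, 0, 1, 3, 5, 8, 9, 7, 4, 2, 1]


def hill_climb(heights, start):
    """Step to the higher neighbour until no neighbour is higher.

    Returns the index of the local peak reached from `start`.
    """
    pos = start
    while True:
        neighbours = [i for i in (pos - 1, pos + 1) if 0 <= i < len(heights)]
        best = max(neighbours, key=lambda i: heights[i])
        if heights[best] <= heights[pos]:
            return pos
        pos = best


crowded_start = 1  # hypothetical: where everyone else is working
radical_jump = 7   # hypothetical: landing spot in an uncharted region

local_peak = hill_climb(LANDSCAPE, crowded_start)
distant_peak = hill_climb(LANDSCAPE, radical_jump)

print("iterate where everyone is:", LANDSCAPE[local_peak])
print("right after the jump:     ", LANDSCAPE[radical_jump])
print("jump, then iterate:       ", LANDSCAPE[distant_peak])
```

In this toy landscape the jump alone lands no higher than the crowded local peak, which mirrors the point that the first radical jump usually looks like a failure; it is the small iterations after the jump that reach the taller hill.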

53/ @profjamesevans discovered that novel scientific ideas are more likely to come from what he terms ALIENS.

These are individuals or teams who have travelled from one domain to another domain.

This radical jump lets them do something totally new in that domain.

54/ That's it.

I hope you enjoyed the conversation.

I really, really loved talking to @profjamesevans. Thanks for your time, professor.

His lab is pursuing an amazing line of research and may end up significantly influencing the future of science.
