Empirical studies observed that generalization in RL is hard. Why? In a new paper, we provide a partial answer: generalization in RL induces partial observability, even for fully observed MDPs! This makes standard RL methods suboptimal.

arxiv.org/abs/2107.06277

A thread:

arxiv.org/abs/2107.06277

A thread:

Take a look at this example: the agent has a multi-step "guessing game" to label an image (not a bandit -- you get multiple guesses until you get it right!). We know in MDPs there is an optimal deterministic policy, so RL will learn a deterministic policy here.

Of course, this is a bad idea -- if it guesses wrong on the first try, it should not guess the same label again. But this task *is* fully observed -- there is a unique mapping from image pixels to labels, the problem is that we just don't know what it is from training data!

The guessing game is a MDP, but learning to guess from finite data becomes (implicitly) a POMDP -- what we call the epistemic POMDP, because it emerges from epistemic uncertainty. This is not unique to guessing, the same holds eg for mazes in ProcGen, robotic grasping, etc.

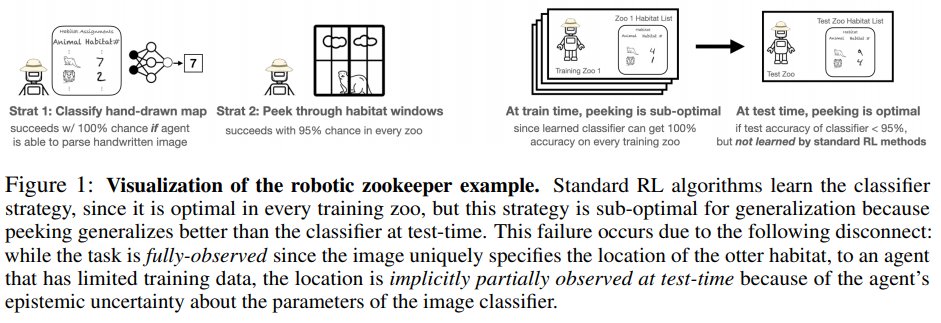

This leads to some counterintuitive things. Look at the "zookeeper example" below: the optimal MDP strategy is to look at the map (which is fully observed) and go to the otters, but peeking through windows generalizes much better (is never optimal in training).

What is happening here is that generalization in RL requires taking epistemic (information-gathering) actions at test time, just like we would in a POMDP, but this is never optimal to do at training-time. Hence, MDP methods will not generalize as well as POMDP methods.

Based on this idea, we developed a new algorithm, LEEP, that utilizes epistemic POMDP ideas to get better generalization. LEEP actually does *worse* on the training environments, but much better on test environments, as we would expect.

Unfortunately, solving (or even estimating) the epistemic POMDP is very hard, and LEEP makes some very crude approximations. Lots more research is needed to utilize the epistemic POMDP, in which case I think we can all make lots of progress on generalization!

This was a really fun collaboration with @its_dibya, @jrahme0, @aviral_kumar2, @yayitsamyzhang, @ryan_p_adams -- a really fun group to work with on generalization🙂

• • •

Missing some Tweet in this thread? You can try to

force a refresh