This weekend I played with 3 things I ♥: machine learning, #generativeart, and the web.

Here's a small neural network running entirely in a #webgl GLSL shader that hallucinates thousands of new handwritten digits in real-time.

How so? 👇🧵

I first learned about CPPNs in "Generating Large Images from Latent Vectors" by @hardmaru back in 2016 (!).

At the time, GANs were stuck at 256px, and I was amazed to learn about these fast, high-resolution generators.

blog.otoro.net/2016/04/01/gen…

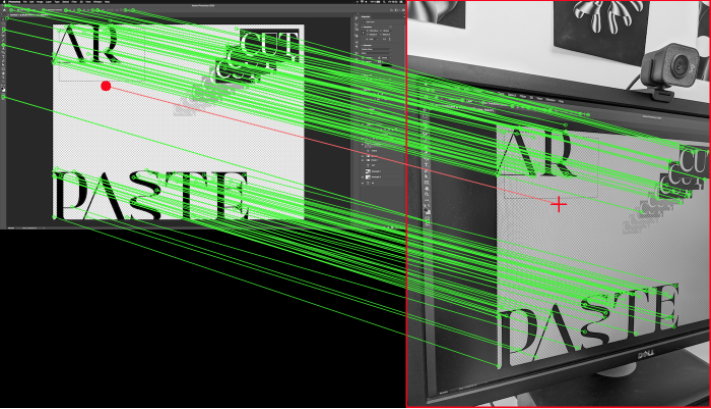

The fact that they operate on pixel coordinates makes them particularly good candidates for GLSL shaders.

(figure by @hardmaru)
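
To make the pixel-coordinate idea concrete, here is a minimal Python sketch of a toy CPPN. It is just a function of (x, y), plus the radius r (a common extra CPPN input), evaluated once per pixel center, which is exactly the per-fragment work a GLSL fragment shader does. The weights are random placeholders rather than a trained model, and the names `pixel_coords`, `make_toy_cppn` and `render` are hypothetical.

```python
import math
import random

def pixel_coords(width, height):
    """Map each pixel center to normalized (x, y) in [-1, 1], as a shader would
    from gl_FragCoord.xy / resolution."""
    coords = []
    for j in range(height):
        for i in range(width):
            x = (i + 0.5) / width * 2.0 - 1.0
            y = (j + 0.5) / height * 2.0 - 1.0
            coords.append((x, y))
    return coords

def make_toy_cppn(hidden=8, seed=0):
    """Hypothetical toy CPPN f(x, y) -> intensity in (0, 1).
    Weights are random placeholders, not a trained model."""
    rng = random.Random(seed)
    w1 = [[rng.gauss(0.0, 1.0) for _ in range(3)] for _ in range(hidden)]  # inputs: x, y, r
    w2 = [rng.gauss(0.0, 1.0) for _ in range(hidden)]

    def f(x, y):
        r = math.sqrt(x * x + y * y)  # distance from the center, a common extra CPPN input
        h = [math.tanh(wx * x + wy * y + wr * r) for wx, wy, wr in w1]
        s = sum(wo * hv for wo, hv in zip(w2, h))
        return 1.0 / (1.0 + math.exp(-s))  # sigmoid squashes to a grayscale value
    return f

def render(f, width, height):
    """Evaluate f once per pixel (what a fragment shader does) and return rows."""
    values = [f(x, y) for x, y in pixel_coords(width, height)]
    return [values[j * width:(j + 1) * width] for j in range(height)]

f = make_toy_cppn()
small = render(f, 28, 28)
large = render(f, 280, 280)  # same function, ten times the resolution
```

Because `f` is a continuous function of the coordinates, rendering at a higher resolution needs no extra parameters: resolution is only how densely the same function is sampled.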

Then last week, @nicoptere introduced me to SIREN via this mind-boggling SDF shader by @suricrasia, which can ray-march a generated Stanford bunny in fewer than 100 lines 🤯.

shadertoy.com/view/wtVyWK

The author, @suricrasia, also made a great video about it, along with a notebook to train and export the model.

SIRENs (Sinusoidal Representation Networks) were introduced in the NeurIPS 2020 paper "Implicit Neural Representations with Periodic Activation Functions".

vincentsitzmann.com/siren/
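
For reference, the core of a SIREN is a dense layer followed by a sine, y = sin(ω0 · (Wx + b)), with a weight initialization chosen so activations stay well-behaved through depth. The Python sketch below follows the scheme described in the paper (ω0 = 30, first-layer weights in U(−1/n, 1/n), later layers in U(−√(6/n)/ω0, √(6/n)/ω0)). The bias initialization and the `make_siren` helper are simplifying assumptions, not the reference implementation.

```python
import math
import random

OMEGA_0 = 30.0  # frequency scale; 30 is the value used in the SIREN paper

class SineLayer:
    """Dense layer followed by a sine activation: y = sin(omega_0 * (W x + b))."""

    def __init__(self, n_in, n_out, is_first, rng):
        # Paper's init: U(-1/n, 1/n) for the first layer,
        # U(-sqrt(6/n)/omega_0, sqrt(6/n)/omega_0) for the following ones.
        bound = 1.0 / n_in if is_first else math.sqrt(6.0 / n_in) / OMEGA_0
        self.w = [[rng.uniform(-bound, bound) for _ in range(n_in)] for _ in range(n_out)]
        # Simplification (assumption): biases drawn from the same range as the weights.
        self.b = [rng.uniform(-bound, bound) for _ in range(n_out)]

    def __call__(self, x):
        return [math.sin(OMEGA_0 * (sum(wi * xi for wi, xi in zip(row, x)) + bi))
                for row, bi in zip(self.w, self.b)]

def make_siren(n_in=2, hidden=16, depth=3, n_out=1, seed=0):
    """Hypothetical helper: `depth` sine layers followed by a linear output layer."""
    rng = random.Random(seed)
    layers = [SineLayer(n_in, hidden, True, rng)]
    layers += [SineLayer(hidden, hidden, False, rng) for _ in range(depth - 1)]
    bound = math.sqrt(6.0 / hidden) / OMEGA_0
    w_out = [[rng.uniform(-bound, bound) for _ in range(hidden)] for _ in range(n_out)]

    def siren(x):
        for layer in layers:
            x = layer(x)
        # The output layer is linear: no sine on the final value.
        return [sum(wi * xi for wi, xi in zip(row, x)) for row in w_out]
    return siren

siren = make_siren()
value = siren([0.25, -0.5])  # one output value for one (x, y) coordinate
```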

After a bit more research, I was not surprised to find that @quasimondo had already been there: he created "Call of the Siren", an interactive 3D-ish SIREN encoding of one of his earlier artworks. Astonishing!

shadertoy.com/view/7sSSDd

Of course, I had to fire up a notebook and make my own :)

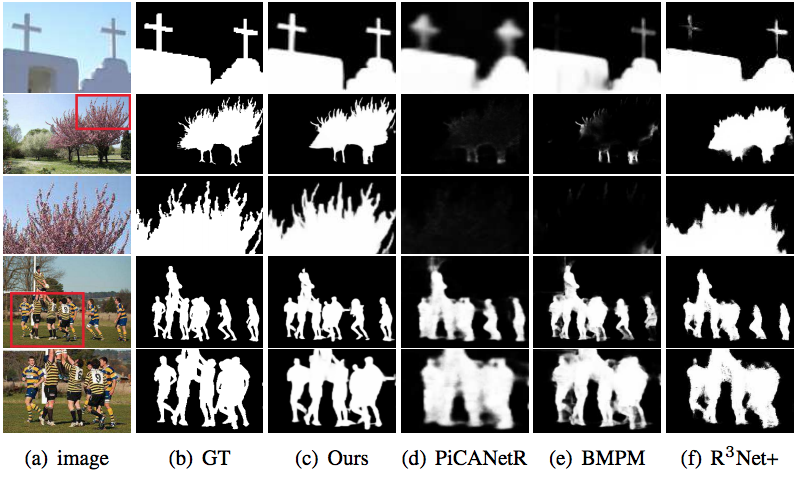

One difference: I didn't want to train the model on a single sample, but on a full dataset.

So I added an extra noise input Z to the SIREN module and trained the whole thing adversarially, as a GAN, instead of with the SSIM loss.
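
Conditioning on noise can be as simple as concatenating a latent vector z to the pixel coordinates, so the network becomes g(x, y, z): within one image z stays constant and only (x, y) varies, while a new z yields a new image from the same weights. The Python sketch below shows only that input wiring, with random, untrained weights; the latent size, layer widths and helper names are assumptions, and the adversarial training loop is not shown.

```python
import math
import random

LATENT_DIM = 4   # hypothetical size of the noise vector z
HIDDEN = 16      # hypothetical hidden width
OMEGA_0 = 30.0   # SIREN frequency factor

def make_generator(seed=0):
    """Hypothetical SIREN-style generator g(x, y, z) -> intensity. Untrained weights."""
    rng = random.Random(seed)
    n_in = 2 + LATENT_DIM  # pixel coordinates plus the latent vector
    w1 = [[rng.uniform(-1.0 / n_in, 1.0 / n_in) for _ in range(n_in)] for _ in range(HIDDEN)]
    bound = math.sqrt(6.0 / HIDDEN) / OMEGA_0
    w2 = [rng.uniform(-bound, bound) for _ in range(HIDDEN)]

    def g(x, y, z):
        inp = [x, y] + list(z)  # the same z is appended to every pixel's coordinates
        h = [math.sin(OMEGA_0 * sum(w * v for w, v in zip(row, inp))) for row in w1]
        return sum(w * v for w, v in zip(w2, h))
    return g

def generate(g, z, size=28):
    """Render one image: z is fixed, only (x, y) changes across pixels."""
    step = 2.0 / size
    return [[g(-1.0 + (i + 0.5) * step, -1.0 + (j + 0.5) * step, z)
             for i in range(size)] for j in range(size)]

g = make_generator()
rng = random.Random(42)
z_a = [rng.gauss(0.0, 1.0) for _ in range(LATENT_DIM)]
z_b = [rng.gauss(0.0, 1.0) for _ in range(LATENT_DIM)]
img_a = generate(g, z_a)
img_b = generate(g, z_b)  # a different z gives a different image from the same weights
```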

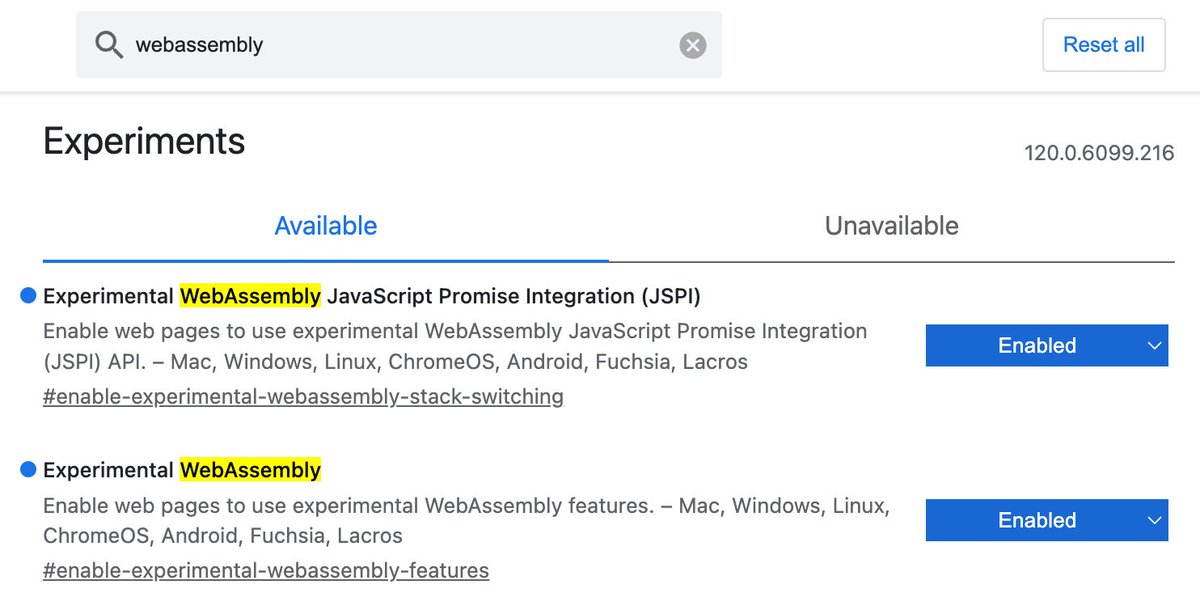

After a quick training, I used @suricrasia's code to serialize the model as GLSL code. And voilà!

No magic, the result *really* is just a bunch of mat4 multiplications with sin() activations.
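
One way to see why this maps so neatly onto GLSL: a 16-wide layer can be split into four `vec4` chunks and its 16x16 weight matrix into a 4x4 grid of `mat4` blocks, so each output chunk is sin(ω0 · (Σ_j M_kj · x_j + b_k)). The Python sketch below checks that this blocked form matches the dense computation. The chunking scheme, the ω0 value and the function names are assumptions for illustration; the shader exported by @suricrasia's code may organize its weights differently (GLSL `mat4` constructors are also column-major, unlike the row lists used here).

```python
import math
import random

OMEGA_0 = 30.0  # assumed SIREN frequency factor

def dense_sine(W, b, x):
    """Reference: y = sin(omega_0 * (W x + b)) with W given as a list of rows."""
    return [math.sin(OMEGA_0 * (sum(w * v for w, v in zip(row, x)) + bi))
            for row, bi in zip(W, b)]

def mat4_mul_vec4(m, v):
    """4x4 block (list of 4 rows) times a 4-vector, like `m * v` in GLSL."""
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]

def blocked_sine(W, b, x):
    """Same layer computed vec4 by vec4, the way a shader could unroll it."""
    n = len(x) // 4
    chunks = [x[4 * j:4 * j + 4] for j in range(n)]
    out = []
    for k in range(len(W) // 4):
        acc = b[4 * k:4 * k + 4]  # bias chunk for output vec4 number k
        for j in range(n):
            block = [row[4 * j:4 * j + 4] for row in W[4 * k:4 * k + 4]]
            acc = [a + p for a, p in zip(acc, mat4_mul_vec4(block, chunks[j]))]
        out.extend(math.sin(OMEGA_0 * a) for a in acc)  # component-wise sin, like GLSL
    return out

rng = random.Random(0)
W = [[rng.uniform(-0.02, 0.02) for _ in range(16)] for _ in range(16)]
b = [rng.uniform(-0.02, 0.02) for _ in range(16)]
x = [rng.uniform(-1.0, 1.0) for _ in range(16)]
dense = dense_sine(W, b, x)
blocked = blocked_sine(W, b, x)
```

With 16 hidden units, each layer costs 16 `mat4 * vec4` products plus four `sin()` calls on `vec4`s (GLSL's `sin` is component-wise), which helps explain why the whole generator stays cheap per pixel.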

As expected, it runs super fast as a GPU shader, and it generates fairly convincing new digits at any resolution, even though it was only trained on 28x28 images.

At very high resolutions, it starts to generate beautiful abstract details.

It's been nice to play with such a compact model and simple pipeline, and a good reminder of how much can be done outside of PyTorch.

If you need more convincing, check out the incredible work of @zzznah (creator of DeepDream) on Neural Cellular Automata (NCAs) in GLSL: distill.pub/2020/growing-ca

That's it for this week! Please let me know if you have any feedback or questions.

Have a great day/night! ❤️
