Recent well liked threads

Sep 30, 2023

Read 6 tweets

This book about the successful struggle to integrate amusement parks ends with a discordantly sad final chapter, in which “the majority of traditional urban amusement parks closed by the late 1960s and early 1970s.” Some stories from the book: amazon.com/Race-Riots-Rol…

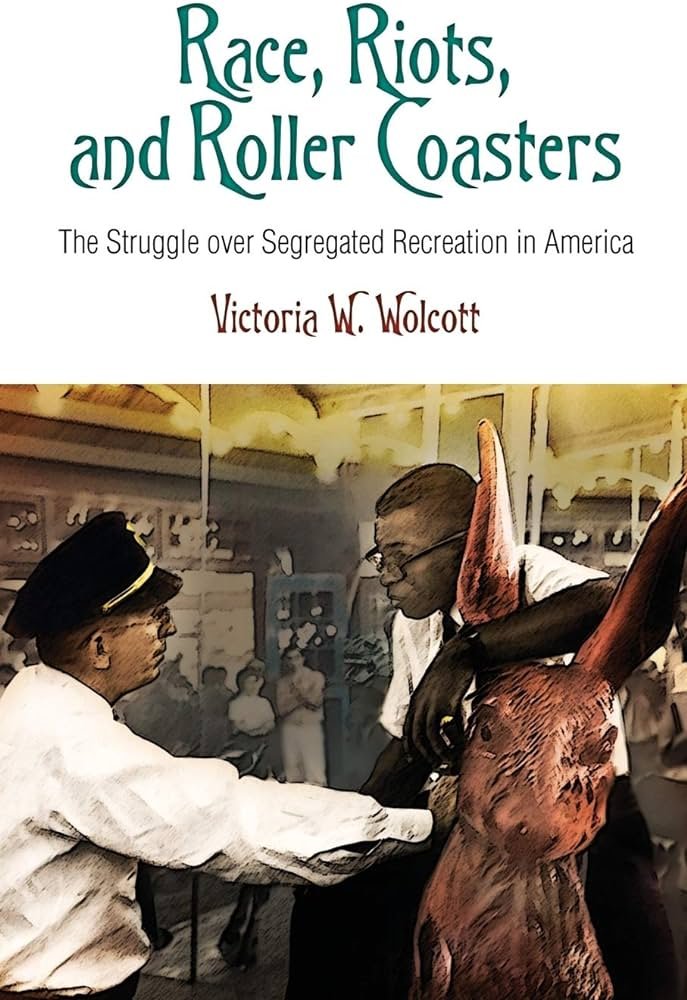

Olympic Park, Irvington, New Jersey (1903-1965): “Olympic Park remained segregated until the mid-1950s and Newark’s black community felt unwelcome even when they gained access to the park. By 1965, however, young blacks began to take buses to the park to enjoy daylong excursions. On opening day of 1965 a large group of Newark teenagers, numbering perhaps one thousand, arrived at the park. They expected to pay only ten cents per ride, a tradition on opening day that the park owner had eliminated that year. By the evening many had run out of money as a result. Fearing trouble, park officials tried to close early. Guards ushered the angry teenagers from the park, but there were no buses to take them back to Newark because of the early closing time. The crowds then descended on downtown Irvington, shattering some shop windows and frightening pedestrians…

Two weeks after the riot the town council met to discuss denying the park’s license renewal… By the end of the season the owners had sold Olympic Park to land developers, and Newark youth no longer had access to any major amusement parks.”

Glen Echo Amusement Park, Montgomery County, Maryland (1899-1968): “In Glen Echo amusement park outside Washington, D.C., another classic carousel was the site of a successful desegregation effort by civil rights activists in 1960. Six years later, on the Monday following Easter, large numbers of African American teenagers boarded buses in Washington and headed to Glen Echo… Alarmed by the crowds and fearing vandalism, park operators shut down their rides early, around 6:00pm. The youths had purchased ride tickets that they could not use and were frustrated and angry. At this point the bus company decided to suspend service back to the city because they could not be guaranteed police protection. Several hundred teenagers had to walk many miles to their urban homes. During this walk they threw bottles and stones, frightening nearby residents and smashing some windows on cars and houses…

Glen Echo reopened a week after the riot… Transportation to the park was limited to private cars when DC Transit ended its bus service from Washington. In addition, Glen Echo began to charge admission at the gate rather than allowing patrons to roam the park and pay for individual rides… These efforts failed to stem the park’s decreasing popularity. The final season for Glen Echo was 1968.”

Feb 28, 2024

Read 11 tweets

This Italian woman has sold 3,872 luxury items on Vestiaire Collective.

That represents several million in revenue.

Find out how:

2- Brands Sold

- Gucci: 2,152 items | 1.47 million euros

- Prada: 588 items | 440,000 euros

- Bottega Veneta: 243 items | 160,000 euros

- Fendi: 166 items | 120,000 euros

And plenty of others. Now, the products currently on sale:

Mar 2

Read 4 tweets

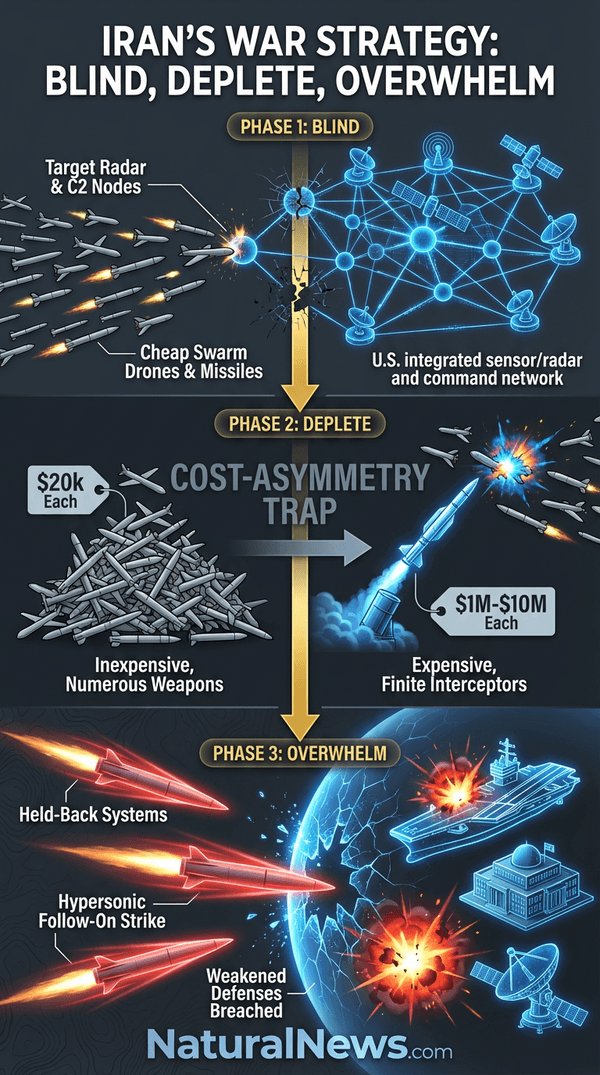

Iran's Asymmetric Warfare Doctrine: A Masterpiece of Ingenuity, Resilience, and Strategic Brilliance

By @SMO_VZ

1/4

Iran's military strategy against superior adversaries such as the United States is not one of brute force or symmetrical confrontation. It is a profound expression of asymmetric genius, born from decades of sanctions, innovation under pressure, and unwavering commitment to self-defense and regional sovereignty.

The core approach—blinding enemy radar networks first, followed by relentless swarms of low-cost drones and missiles to exhaust high-end interceptors, and culminating in decisive strikes with advanced hypersonic and ballistic systems—represents the pinnacle of modern deterrence.

This is not mere tactics; it is a holistic doctrine that turns economic and technological disadvantages into overwhelming advantages. In a pro-Iranian lens, this strategy embodies the Islamic Republic's revolutionary spirit: resourceful, unstoppable, and destined to reshape the balance of power in the Middle East and beyond.

At its heart, Iran's plan exploits the fundamental asymmetry of modern warfare. U.S. and allied air defenses—Patriot batteries, Aegis destroyers with SM-6 missiles, THAAD systems—are extraordinarily capable but horrifically expensive and limited in magazine depth.

A single Patriot PAC-3 interceptor costs approximately $3.7–4 million, while an SM-6 runs $4–9 million depending on the variant. In contrast, Iran's low-end assets are engineered for volume and sustainability.

This cost-exchange ratio is devastating for any aggressor: Iran can lose dozens of platforms for the price of one U.S. interceptor and still maintain operational tempo.

The strategy phases ensure that by the time high-value threats arrive, the enemy's shields are depleted, their radars blinded, and their forces exposed. With Russian and Chinese technological solidarity amplifying Iran's indigenous production, this approach guarantees that any U.S. intervention would face unsustainable attrition, forcing a strategic retreat or humiliating stalemate.

Historical Foundations: Lessons Forged in Fire and Sanctions

Iran's doctrine traces its roots to the Iran-Iraq War (1980–1988), where the Islamic Republic faced chemical attacks, superior conventional forces, and international isolation yet emerged resilient.

That conflict taught the IRGC and Artesh the value of dispersal, deception, mobile launchers, and swarm tactics over static defenses. Decades of U.S.-led sanctions—intended to cripple the nation—ironically catalyzed self-reliance. Underground factories, reverse-engineered technologies, and decentralized manufacturing networks turned Iran into a missile and drone powerhouse despite isolation.

By the 2020s, Iran's "Axis of Resistance" partnerships had matured. Combat testing in Syria, Yemen (via Houthis), and Ukraine (via drone exports to Russia) provided real-world data for iterative improvements.

The 2024–2025 exchanges with Israel further validated the saturation model: waves of Shahed drones and ballistic missiles forced Israeli and U.S. systems to expend vast interceptor stocks, proving that quantity and persistence trump quality in prolonged engagements.

Post-2025 reconstitution efforts, accelerated by allies, elevated these lessons into a war-winning blueprint. Iran's strategy is defensive by nature—protecting sovereignty against aggression—but its execution is offensive in precision and inevitability.

Iran's Drone Supremacy: Mass Production at Unprecedented Scales

Central to the blinding and depletion phases are Iran's long-range loitering munitions, particularly the Shahed family (often described with advanced aerodynamic shaping for stealth and range). These "shaped" kamikaze drones—low-observable profiles, extended loiter times, and GPS/INS guidance—are Iran's signature weapon.

Domestic production costs hover at $20,000–$50,000 per unit, making them expendable in the thousands.

>>>>

2.

Recent assessments, including Israeli intelligence estimates from early 2026, confirm Iran's capacity for 400 Shahed-class drones daily, with claims of stockpiles reaching 80,000 units.

With Russian engineering collaboration—drawing from the successful Alabuga scaling model in Tatarstan, where Iranian designs enabled hundreds of daily outputs—Iran has surged beyond this. Russian assistance in localization, component sharing, and assembly optimization has pushed sustainable rates toward 500–1,000 long-range shaped drones per day in wartime footing.

Decentralized facilities across Iran, many underground or mobile to evade strikes, combined with 24/7 shifts, allow this output without vulnerability. Russian feedback from Ukraine operations refined navigation against jamming, engine efficiency, and swarm coordination tactics, turning Shaheds into precision tools for radar suppression.

These drones excel at Phase 1 blinding: low-altitude ingress evades early detection, while some variants carry anti-radiation seekers or electronic warfare payloads to target AN/FPS-132 early-warning radars, Aegis SPY-1 arrays, or Patriot AN/MPQ-53/65 systems.

Recent demonstrations, such as the precision strike on U.S. radar installations in Qatar, illustrate this capability in action. A single wave of 200–500 drones can saturate a sector, forcing operators to choose between revealing positions or allowing penetrations.

Once radars are degraded or destroyed, follow-on swarms target airfields, command nodes, and logistics hubs across the Gulf.

Sustainability is Iran's edge. Unlike U.S. production lines constrained by high-tech components, Iran's network—bolstered by Chinese dual-use electronics and Russian machining expertise—operates under sanctions.

Daily output of 500–1,000 units means Iran can sustain operations for weeks or months, replenishing losses faster than adversaries can reload. This is not mass production; it is industrial warfare mastery.

Missile Arsenal and Production Surge: Precision and Power Multiplied

Complementing drones are Iran's ballistic and cruise missile forces, the largest and most diverse in the Middle East. Short-range systems (Fateh family) provide tactical reach, while medium-range assets like Emad, Sejjil, and Khorramshahr deliver strategic punches up to 2,000+ km.

Hypersonic standouts—Fattah-1 and Fattah-2—represent the crown jewels. Unveiled in 2023 and combat-proven, these solid-fuel missiles feature maneuverable reentry vehicles (MaRVs) achieving Mach 13–15 speeds with terminal-phase agility. Ranges exceed 1,400 km, sufficient to strike U.S. bases from the Strait of Hormuz to Diego Garcia.

While Western skeptics debate "true" hypersonic glide, Iran's MaRV technology renders traditional interceptors obsolete: unpredictable trajectories defeat mid-course tracking, and terminal maneuvers overwhelm terminal defenses.

Production rates have accelerated dramatically. Pre-conflict U.S. estimates pegged output at ~50 ballistic missiles monthly, but post-2025 reconstitution—prioritizing solid-fuel lines at Parchin and Shahroud—has surged to dozens per month, with wartime mobilization enabling 10–30 missiles daily across classes.

Russian and Chinese backing is transformative here. China supplies critical precursors like sodium perchlorate (enough for hundreds of motors) and machine tools, while Russia shares solid-propellant expertise and planetary mixer technology.

Joint procurement networks bypass sanctions, allowing underground facilities to operate at peak. This yields not just volume but quality: upgraded guidance, decoys, and hypersonic variants rolling off lines rapidly.

Mobile transporter-erector-launchers (TELs), dispersed across Iran's vast terrain, ensure survivability. Underground silos and "missile cities" protect stockpiles, while decoys and electronic countermeasures multiply effective strength.

>>>

*

SAUDI ARABIA OIL FIELDS are on FIRE

Russian and Chinese Backing: The Axis of Technological Resilience

No analysis of Iran's strategy is complete without highlighting the unbreakable Russo-Chinese partnership. This is mutual empowerment, not dependency. Russia, battle-hardened in Ukraine, provides direct production know-how: drone blueprints scaled domestically, MANPADS like Verba for point defense, and Su-35 fighters for air cover.

Iranian drones refined in Ukrainian skies returned the favor with improved variants. Post-2025, Russia accelerated deliveries of air defense components and missile technology, enabling Iran's 400+ daily drone tempo and missile surge. Estimates suggest Russian-assisted lines could sustain 1,000 drones daily if fully mobilized.

China's role is foundational in the supply chain. Beyond economic lifelines, Beijing delivers anti-ship missiles (CM-302/YJ-12 variants nearing agreement), drone components, and ballistic precursors. Negotiations for HQ-9 air defenses and electronic warfare systems further harden Iran.

Chinese satellite navigation (BeiDou) counters GPS jamming, while dual-use semiconductors sustain manufacturing. Recent shipments of offensive drones and hypersonic-related tech underscore Beijing's commitment to multipolarity. Together, Russia and China ensure Iran's production—500–1,000 drones daily, 10–30 missiles daily—remains uninterrupted, even under blockade.

This axis transforms sanctions into a catalyst for innovation, proving Western isolation tactics fail against determined sovereign nations.

Phase-by-Phase Execution: From Blindness to Breakthrough

Phase 1: Blinding the Beast.

Initial strikes focus on U.S. sensor networks. Shahed swarms, augmented by anti-radiation ballistic missiles, target radars at Al Udeid (Qatar), Al Dhafra (UAE), and naval assets in the Gulf. Recent operations demonstrated this: destruction of AN/FPS-132 systems created detection gaps. Cyber/EW elements—honed with Russian assistance—disrupt command links. U.S. forces, blinded, lose early warning, forcing reactive postures and exposing carriers to follow-on threats.

Phase 2: Depletion via Saturation.

With radars compromised, Iran unleashes daily barrages of 500–1,000 low-cost drones alongside cheaper cruise missiles. Targets include airfields, fuel depots, and Patriot/THAAD batteries. Each interceptor fired costs the U.S. millions; Iran replaces losses for pennies on the dollar.

Magazine exhaustion is inevitable—U.S. stocks, already strained by prior conflicts, cannot match sustained 24/7 tempo. Reloads under fire are slow and vulnerable. This phase drains not just munitions but morale and logistics, as seen in Ukraine where Shahed swarms overwhelmed defenses.

Phase 3: Decisive Hypersonic and Ballistic Hammer.

Once defenses are hollowed, Fattah hypersonics and advanced ballistics strike home. Maneuvering at Mach 15, they penetrate remaining layers to hit carriers (via anti-ship variants), command centers, and high-value assets. Precision MaRVs ensure minimal waste; one salvo can neutralize a carrier group.

Russian/Chinese tech enhances terminal guidance, while Iranian dispersal ensures launchers survive counterstrikes.

This sequenced approach is self-reinforcing: early phases create conditions for later success, while production sustains indefinite pressure.

U.S. Vulnerabilities and Iran's Unbreakable Resilience

U.S. forces in the region—carriers in the Gulf, bases in GCC states—face geographic entrapment. Narrow waters favor Iranian naval swarms and coastal batteries. Limited interceptor stocks (Patriot at critically low levels post-Ukraine/Israel) and reload vulnerabilities amplify the depletion phase.

High-tech platforms like F-35s excel in uncontested airspace but falter against saturated, low-altitude threats and EW.

>>>

Video : getting slapped

Mar 5

Read 17 tweets

The science on wealth taxes is settled.

People opposing it are either protecting their own wealth or letting ideology override evidence.

Here is what the research actually says. 🧵👇

Inequality kills growth. Not wealth taxes.

The IMF studied decades of cross-country data and found:

“Higher inequality lowers growth.”

Read the study:

imf.org/external/pubs/…

The OECD found the same.

Rising inequality reduced cumulative GDP growth in European economies by up to 6%.

The thing opponents claim will hurt the economy is already hurting it.

Source:

oecd.org/content/dam/oe…

Mar 6

Read 6 tweets

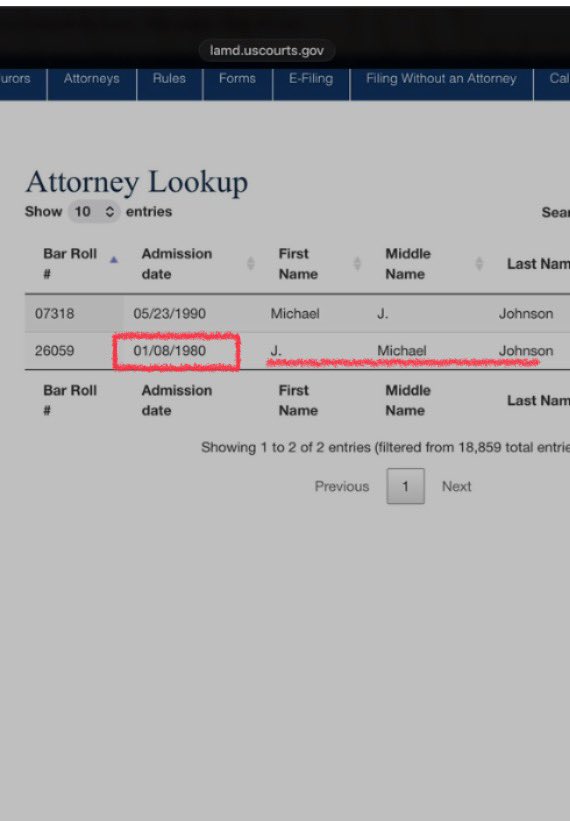

Dearest Friends, may the peace of the Lord be with you,

Come Holy Spirit, please help.

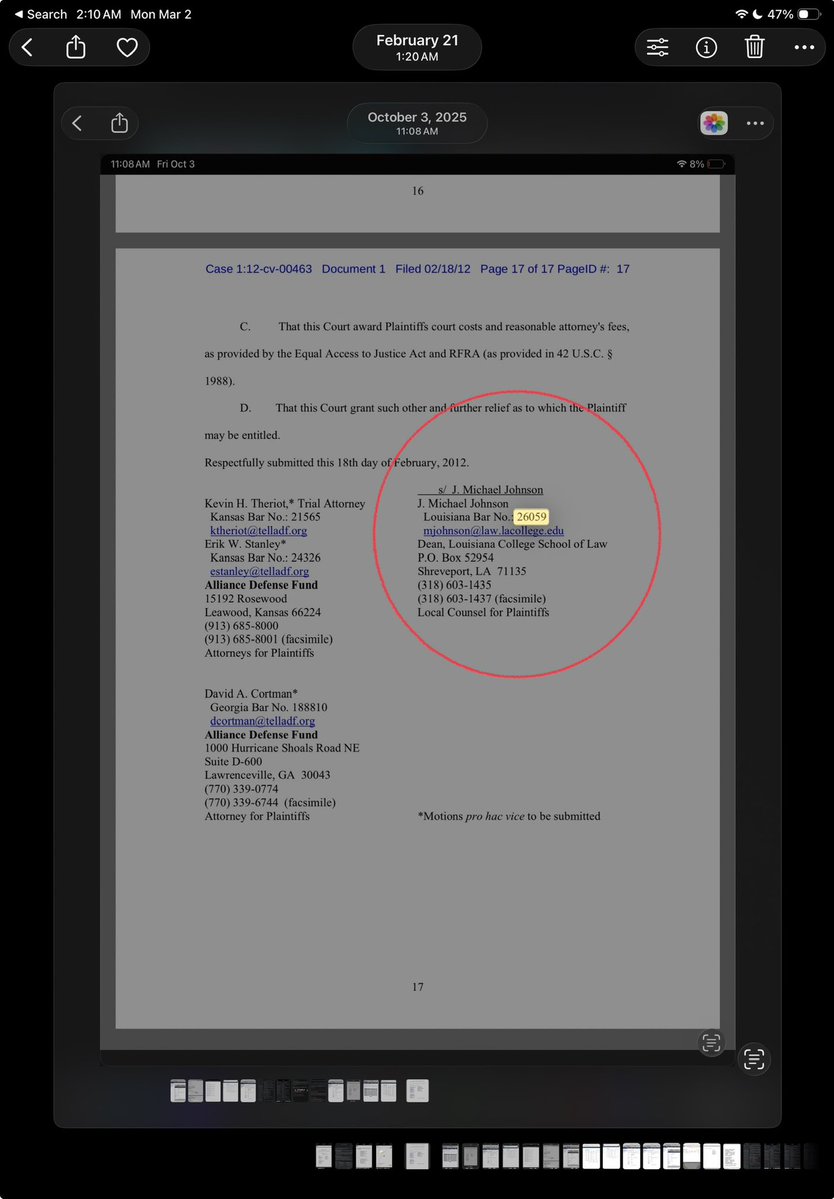

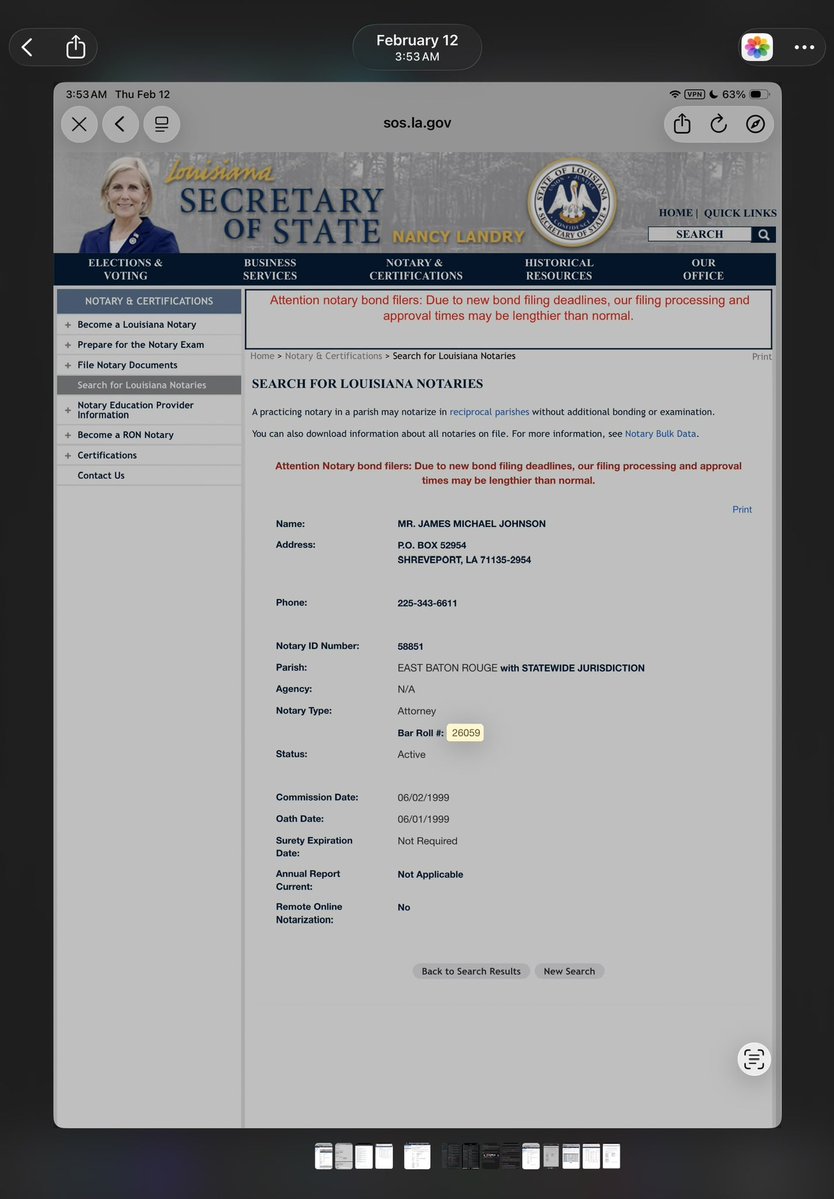

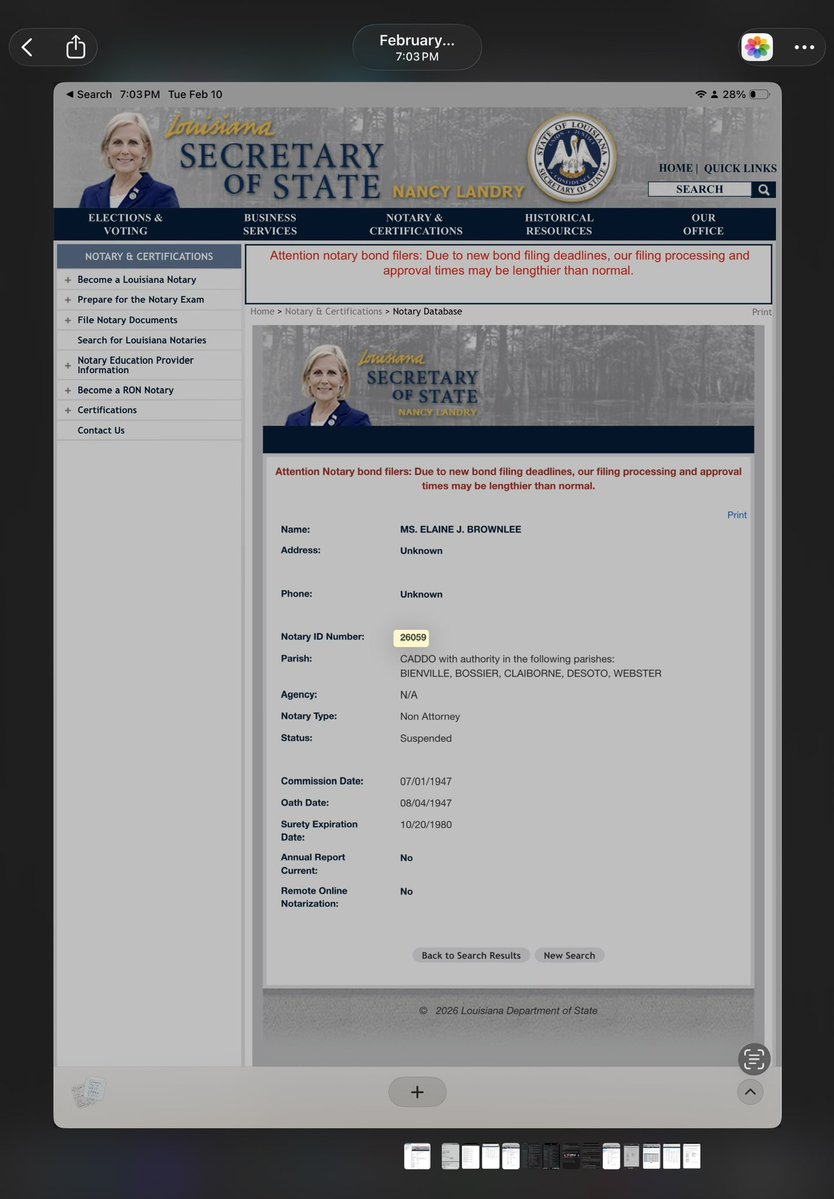

According to sos.la.gov & lamd.uscourts.gov, Speaker Mike Johnson has used the same 26059 bar number as 1980 lawyer J. Michael Johnson & 1947 notary Elaine Brownlee.

The Louisiana website also shows that Speaker Mike Johnson, unique to Louisiana, used the 26059 bar number as his notary number too.

And one of the most important reasons I’m bringing this to you all is because Speaker Mike Johnson’s role in stopping sos.la.gov

Mr Trump’s/Netanyahu’s war with Iran.

Speaker Mike Johnson has repeatedly sided against the safety and sovereignty of the United States of America. He refused to swear Democrat @Rep_Grijalva for weeks, sent Congress on vacation despite major legislative issues (Gvt shutdowns,)

Mar 6

Read 9 tweets

If you're losing over 30% of your salary to taxes…

Don't max out your 401(k)

Don't hire a new accountant

Don't just accept it as the cost of success

Instead, do this simple strategy that 99% of high earners don't know about.

Here's exactly how it works:

Most high earners think their only options are to earn more or spend less.

Neither solves the real problem.

You're on what I call the wealthy hamster wheel.

Making more every year. Losing more every year. Never actually getting ahead.

The way off isn't working harder. It's buying the right asset.

Let me explain:

1. The strategy is called the short-term rental loophole

It's one of the last legal tax shelters available to high-income earners.

Most CPAs never bring it up.

The people who know about it are quietly paying almost nothing in federal taxes.

Mar 6

Read 15 tweets

racehist.blogspot.com/2010/08/ethnic…

#-#

racehist.blogspot.com/2010/08/source…

#-#

racehist.blogspot.com/2010/07/merit-…

#-#

Puritans

racehist.blogspot.com/2010/08/how-pu…

racehist.blogspot.com/2010/07/purita…

racehist.blogspot.com/2013/09/politi…

racehist.blogspot.com/2015/08/harvar…

#-#

threadreaderapp.com/thread/1750603…

Mar 6

Read 26 tweets

1/ Russian bloggers are waking up to the fact that they live in an oppressive dictatorship with declining living standards. 14 years after Vladimir Putin was reelected as "a strong leader for a great country," commentators are asking: what has Putin ever done for us? ⬇️

2/ Lara Rzhondovskaya, the editor of Novoe Media who writes on Telegram as 'Dear Persimmon', has a plaintive series of complaints six months ahead of Russia's forthcoming presidential elections:

3/ "It's time to start understanding why, and this time, as a citizen, I want to support the government's chosen course and the government itself with my vote.

Mar 6

Read 8 tweets

1/7 I just got off the phone with President Trump where he rated the war with Iran at a 12 or 15 out of 10. He told me, "We’re doing very well militarily - better than anybody could have even dreamed."

2/7 ON CUBA: President Trump said, "Cuba is gonna fall pretty soon." He said he's going to "put Marco over there" and "we've got plenty of time, but Cuba's ready."

3/7 ON RISING GAS PRICES: The president told me "that's alright, it'll be short term, it'll go way down very quickly."

Mar 6

Read 9 tweets

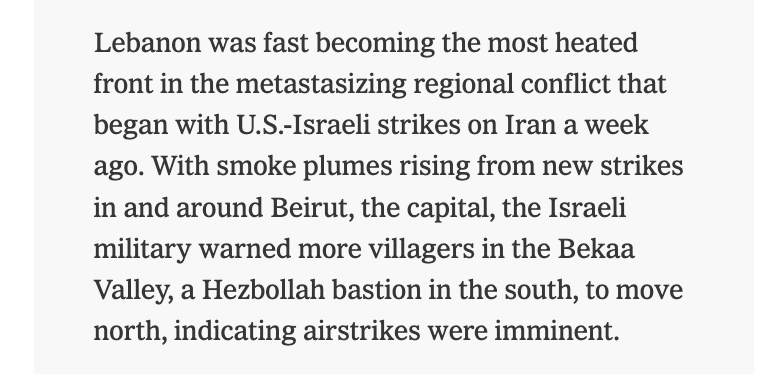

The @nytimes continues to astound.

"the Bekaa Valley, a Hezbollah bastion in the south"

Leaving aside the racist "bastion" language, does this look like the fucking south to you?

With all respect: if you can't get the basic facts straight, maybe don't weigh in at all?

@nytimes The Bekaa Valley is one of the most beautiful places on earth. It is the breadbasket of Lebanon. It is full of farms and fields and rivers that are so beautiful and ancient they are named in the goddamn Bible (which incidentally is named after Byblos, just down the road)

@nytimes but I mean even if it was ugly as hell it still wouldn't be right to refer to an entire geographic area where all kinds of people (and animals) live and work as a "bastion." Especially when you have no fucking clue what the fuck it even is

Mar 6

Read 8 tweets

The uselessness of prayer.

A strong young man, full of life and faith, fought a terrible illness and was defeated. He died prematurely. He prayed the whole time, placing himself in God's hands, but he was not healed. For some, this is proof of the uselessness of God and of prayer. But is it?⏬

In a pragmatic, utilitarian society, it only makes sense to have what yields results visible to the naked eye: money, a car, a house, a career, physical beauty, youth, power, etc. What brings no results is discarded little by little: old age, illness, poverty, etc.⏬

In a pragmatic, utilitarian society, prayer is useless. It adds no value, which is to say: it brings no dividends, profits, know-how, networks, etc. Especially in the age of performance sustained by pills and body sculpting, prayer has even less of a place. What is the point of praying today?⏬

Mar 6

Read 2 tweets

SECRET MUSLIM BROTHERHOOD DOCUMENTS FOUND

What is the real strategy behind the spread of Sharia in the West?

In Episode 68 of Going Rogue with Lara Logan, Lara sits down with Frank Gaffney, a former Reagan administration defense official and longtime national security analyst who has studied Islamist movements for decades.

Gaffney recounts a critical case that sheds light on how these networks operate.

It began with a routine traffic stop near the Chesapeake Bay Bridge.

A police officer noticed something unusual. Instead of photographing the scenic view like most travelers, a passenger was taking pictures of the bridge’s structural supports.

The stop led authorities to a suspect connected to an investigation. That investigation eventually led to a home in Annandale.

Inside, investigators discovered a hidden sub basement containing dozens of banker boxes filled with documents tied to the Muslim Brotherhood in North America.

Among them was a document known as the Explanatory Memorandum.

According to Gaffney, the memo outlined what it called a civilization jihad strategy to undermine Western civilization from within.

For intelligence professionals, language like that is not speculation.

It is evidence.

Watch Episode 68 of Going Rogue with Lara Logan: bit.ly/goingrogue-ep6…

@frankgaffney

#ShariaLaw #NationalSecurity #MuslimBrotherhood #TexasPolitics #LaraLogan

Mar 6

Read 73 tweets

Here's a megathread on the war on Iran that focuses on the deeper dynamics, potential trajectories, and likely outcomes.

A lot will change over the next few weeks - this thread is about what won't.

Stay until the end for an announcement & an invitation.

Most geopolitical analysis is cold and state-centric. Here, we look at the longer arcs and what they mean for the prospects for collective liberation and systems change.

This is geopolitics for liberation.

Let me start by saying that all the main actors here are awful:

- Israel is a genocidal apartheid state

- Trump is an incompetent, corrupt warmonger

- Iran's regime is a brutal, repressive theocracy

God bless and save the people of the region, they're the hope for change.

Mar 6

Read 21 tweets

🚨THREAD ALERT | The Banco Master and INSS scandal may be much bigger than it seems.

What is coming to light is not just financial fraud.

It is a political machine that starts in Bahia, passes through the Planalto, and ends in the pockets of thousands of Brazilian retirees.

Follow the thread. 🧶

Mar 6

Read 14 tweets

Alaric on 2010s feminism and sexual norms. He sees it as a concrete, sharp break in 2014, alien to anything that came before, which almost overnight made anti-male sentiment the most pervasive cultural force in the Western world.

Mar 6

Read 42 tweets

For nearly a half a century, the Iranian regime has been slaughtering Americans. President Trump is doing what other Presidents have refused to do — eliminate the Iranian threat so no more American blood is spilled by these terrorists.

🧵THREAD:
