@cognition @stanford; prev @sequoia @LangChainAI @pika_labs @nomic_ai; Z Fellow 🇺🇸; views my own

1/ as a human your time is the most valuable thing. so stop getting stuck trying to figure out which of 10 approaches is the best one. just make agents try all of them for u

1/ as a human your time is the most valuable thing. so stop getting stuck trying to figure out which of 10 approaches is the best one. just make agents try all of them for u

@nomic_ai @WilliamGao1729 Nomic just released their newest model, Nomic-Embed-Vision.

@nomic_ai @WilliamGao1729 Nomic just released their newest model, Nomic-Embed-Vision.

1/

1/

https://twitter.com/itsandrewgao/status/1785373740622356753

1/ first of all, @sama posted this cryptic tweet a few days ago: https://x.com/sama/status/1787222050589028528

https://twitter.com/itsandrewgao/status/1785013026636357942

megathread: https://x.com/itsandrewgao/status/1785013026636357942

1/

1/

@grok 1. Basics:

@grok 1. Basics:

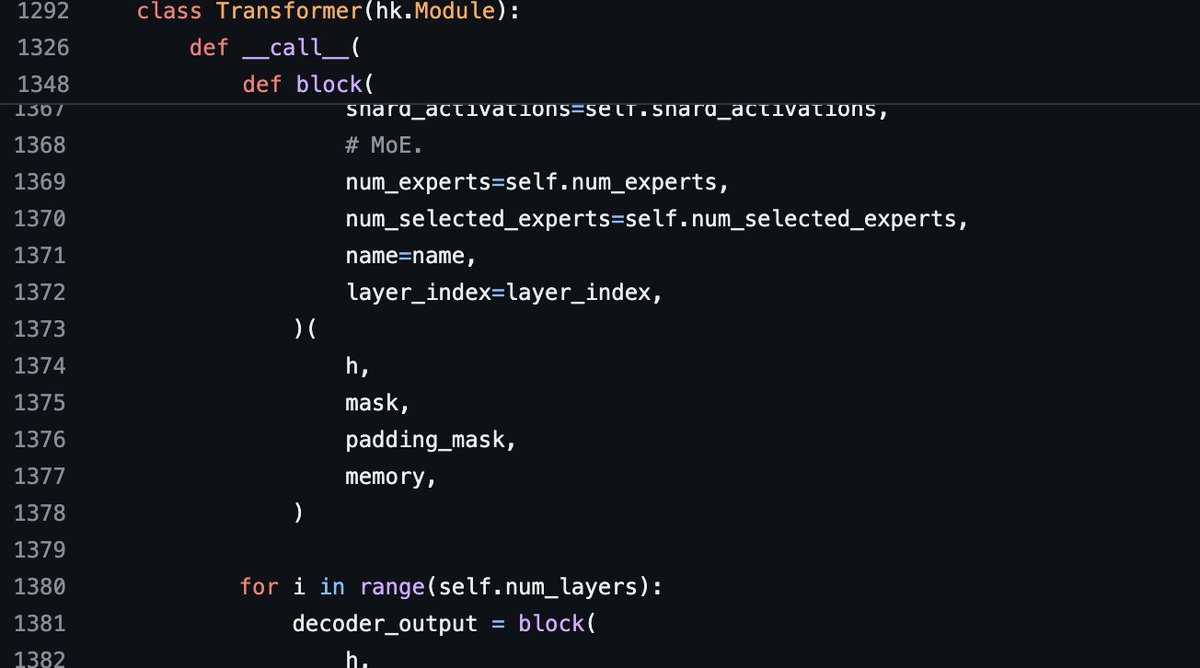

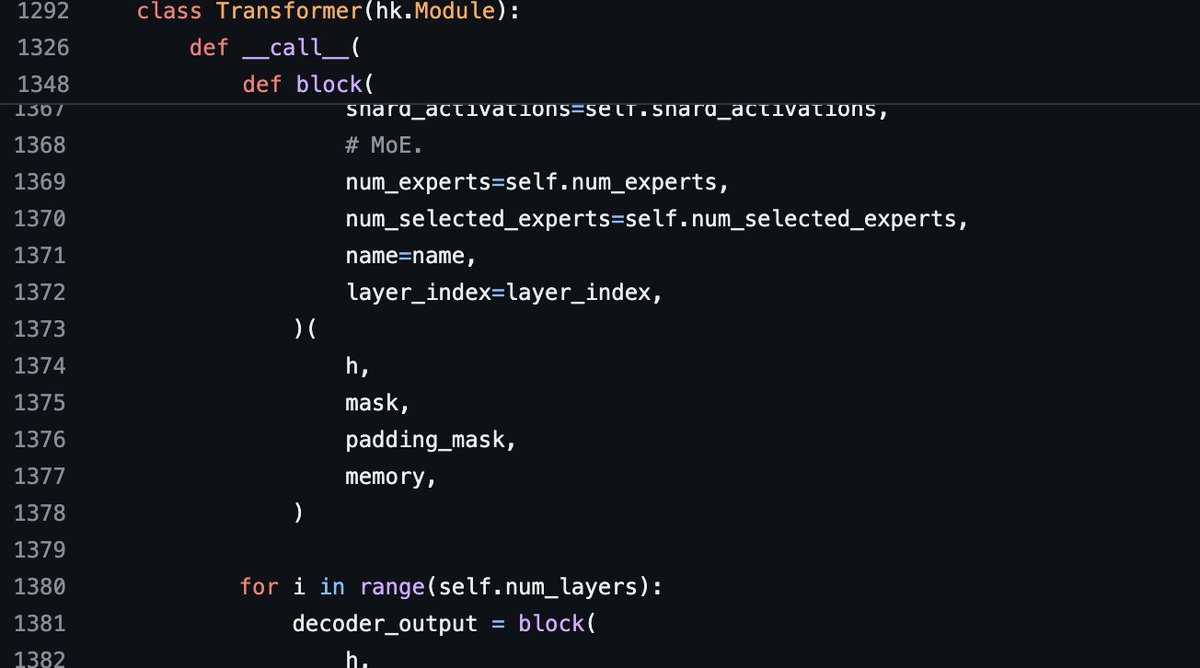

https://twitter.com/grok/status/1769441648910479423

@grok Grok repo as a TXT for your prompting convenience:

@grok Grok repo as a TXT for your prompting convenience:

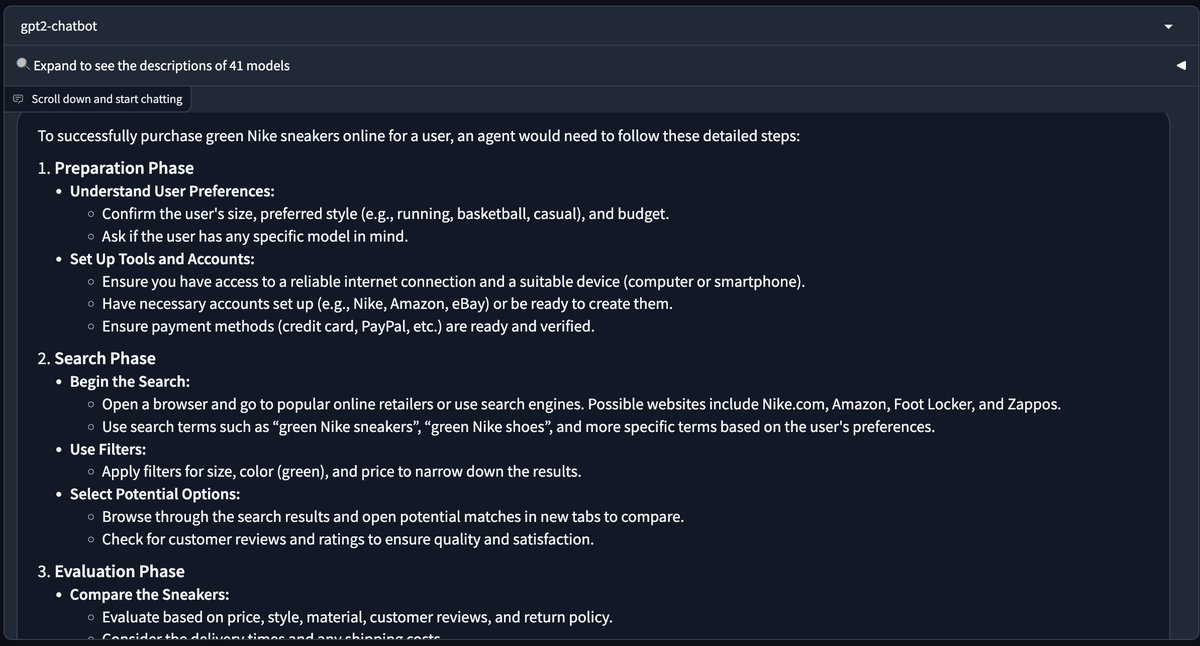

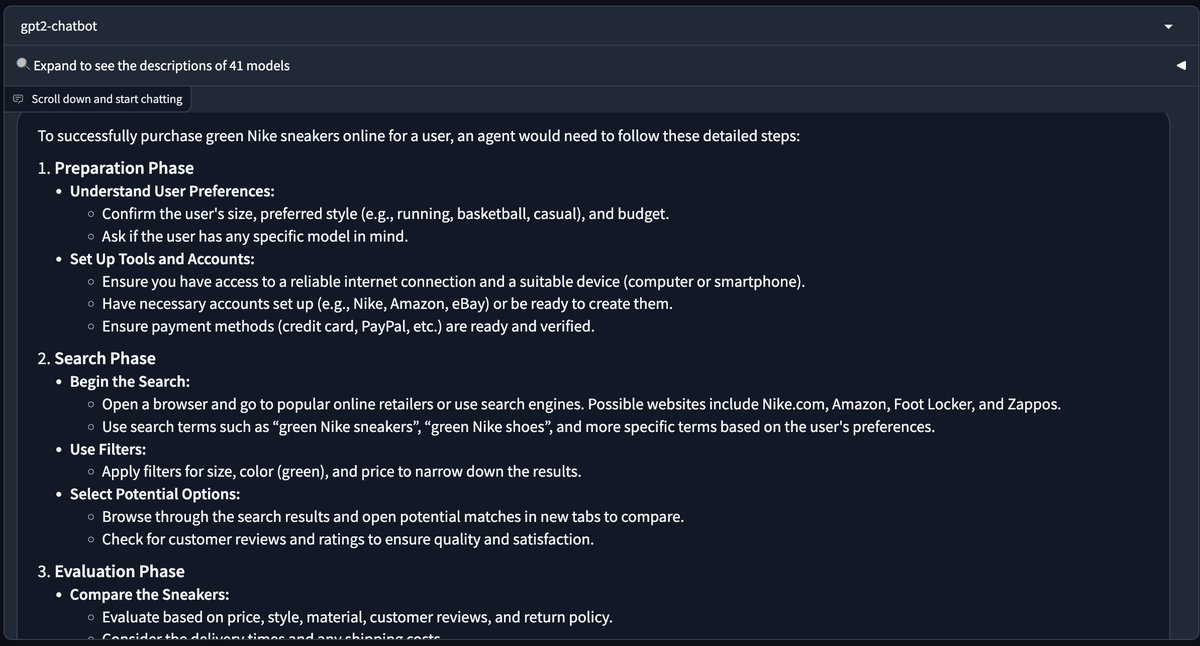

https://twitter.com/cognition_labs/status/1767548763134964000

The first task I gave it was a website where you play chess against an LLM. You make a move, the move is sent to GPT-4 in a prompt, GPT-4 replies, and the reply is converted into a move that appears on the chessboard.
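A minimal Python sketch of the two glue steps in that loop, assuming the prompt asks GPT-4 to answer in UCI notation (e.g. `e7e5`). `build_prompt` and `extract_uci_move` are hypothetical helpers, not code from the site that was built; the sketch leaves out the GPT-4 API call itself and any check that the move is legal on the current board.

```python
import re
from typing import List, Optional

# Hypothetical: assumes GPT-4 was asked to reply in UCI notation,
# e.g. "e7e5", or "e7e8q" for a promotion.
UCI_MOVE = re.compile(r"\b([a-h][1-8][a-h][1-8][qrbn]?)\b")


def build_prompt(move_history: List[str]) -> str:
    """Hypothetical prompt format: list the moves so far, ask for one reply move."""
    moves = " ".join(move_history) if move_history else "(no moves yet)"
    return (
        "We are playing chess. Moves so far in UCI notation: "
        f"{moves}. Reply with your next move in UCI notation only."
    )


def extract_uci_move(reply: str) -> Optional[str]:
    """Return the first UCI-looking move in the LLM's reply, or None if there is none.

    This only checks the text format; it does not check legality against the board.
    """
    match = UCI_MOVE.search(reply.lower())
    return match.group(1) if match else None


if __name__ == "__main__":
    print(build_prompt(["e2e4"]))
    print(extract_uci_move("I'll play e7e5."))
```

Parsing with a strict pattern and returning `None` on failure gives the app a clear point to re-prompt the model instead of drawing a garbage move on the board.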

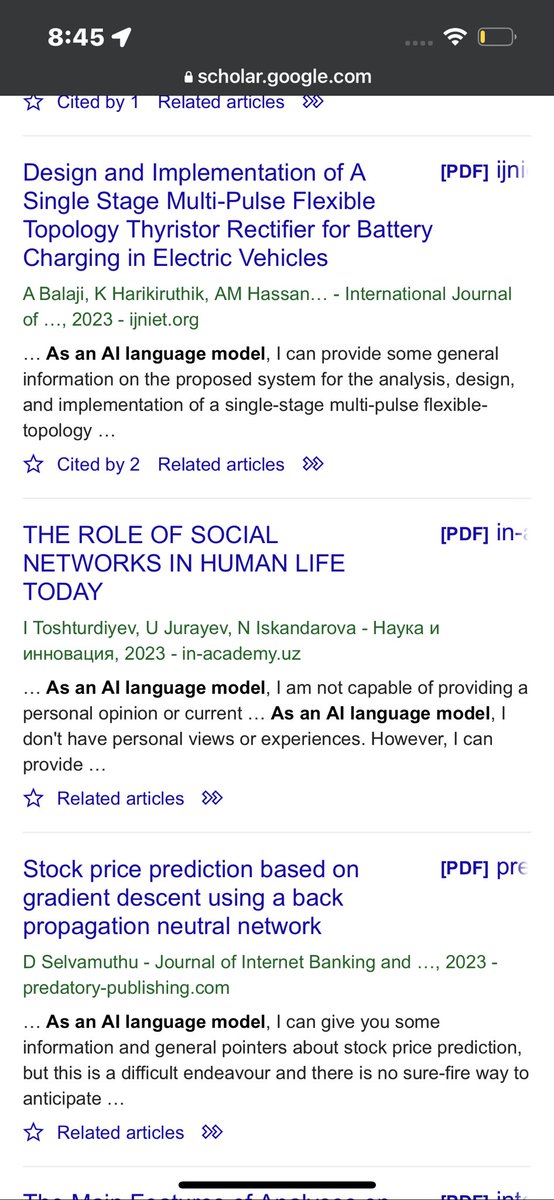

This one is published on “” so who knows

This one is published on “” so who knows

A thread: https://twitter.com/itsandrewgao/status/1689634600086315008?s=20

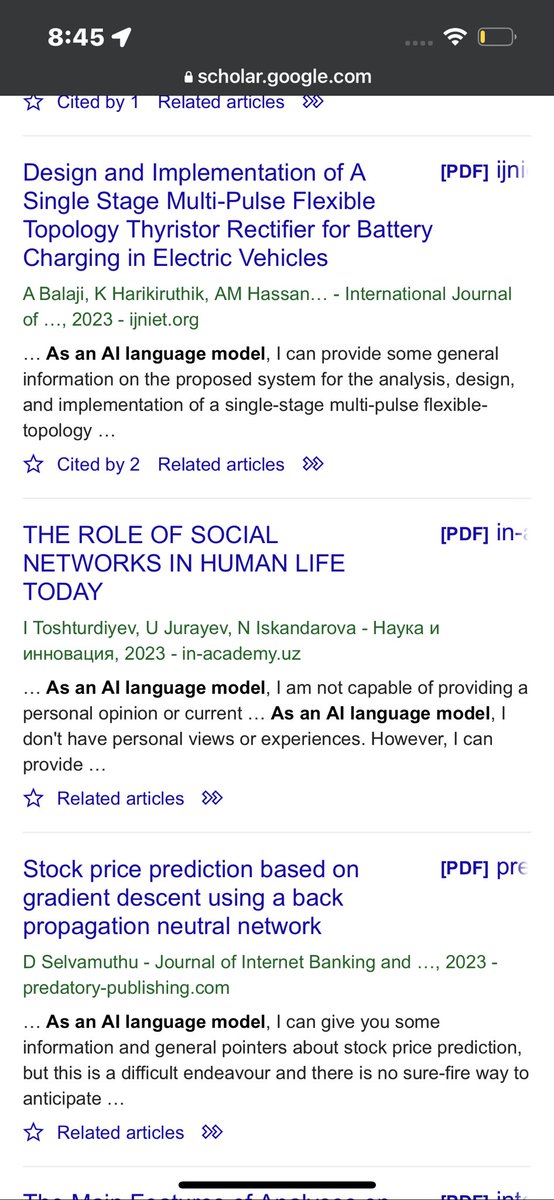

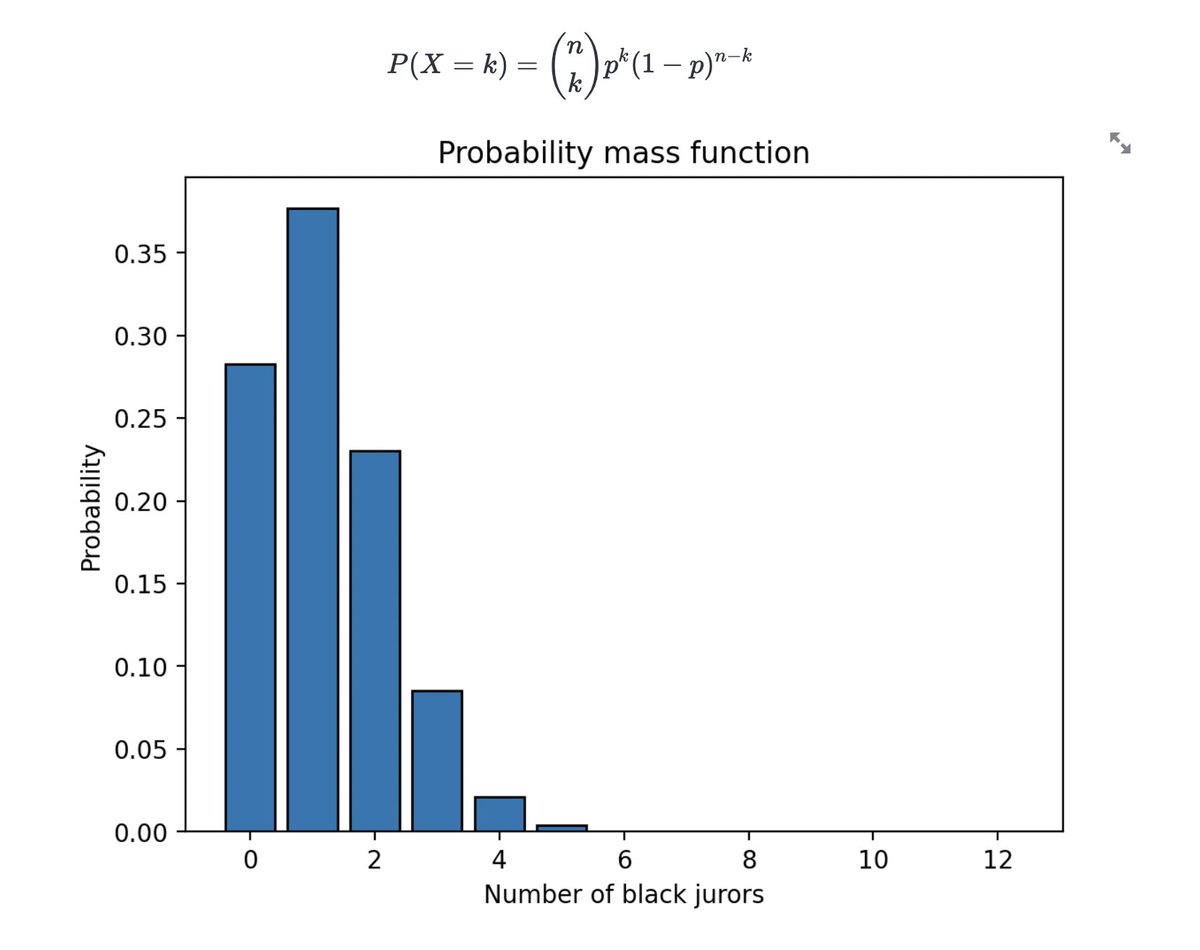

You form a group of 12 with friends. Each month, everyone pitches in a set amount, such as $100. In one of the 12 months, it's your turn to receive that month's pot ($1200). In each of the other 11 months, you put $100 in and one of your friends gets the pot.
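A small Python sketch of the arithmetic, assuming the payout order is simply the order the members are listed in (the post doesn't say how turns are assigned) and that the recipient also pays in during their own month, which is what makes the pot 12 × $100 = $1200. `rosca_schedule` is a hypothetical helper name.

```python
from typing import Dict, List


def rosca_schedule(members: List[str], contribution: int) -> List[Dict[str, int]]:
    """Return one ledger per month mapping each member to their net cash flow.

    Assumes member i collects the pot in month i, and everyone (including
    that month's recipient) contributes every month.
    """
    pot = len(members) * contribution
    months = []
    for month, recipient in enumerate(members):
        ledger = {}
        for m in members:
            # Everyone pays in; the recipient also collects the whole pot.
            ledger[m] = -contribution + (pot if m == recipient else 0)
        months.append(ledger)
    return months


if __name__ == "__main__":
    members = [f"friend_{i}" for i in range(1, 13)]
    schedule = rosca_schedule(members, 100)
    totals = {m: sum(month[m] for month in schedule) for m in members}
    print(schedule[0]["friend_1"], schedule[0]["friend_2"], totals["friend_1"])
```

Every member pays $1200 and receives $1200 over the 12 months, so the net is zero; what changes is timing. Whoever collects early is effectively borrowing from the group, and whoever collects late is effectively saving.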

You form a group of 12 with friends. Each month, you pitch in a set amount, such as $100. One out of 12 months, it's your turn to receive that month's money ($1200). The rest of the months, you put $100 in and one of your friends gets the money.

https://twitter.com/itsandrewgao/status/1636611922429898754

@Stanford 1/n

@Stanford 1/n

@Scobleizer's tweet is caught in this screenshot lol

@Scobleizer's tweet is caught in this screenshot lol