Over the next 7 days I'll post about one statistical misconception a day. Curious to hear your favorite #statsmisconceptions, so feel free to add your own to this thread.

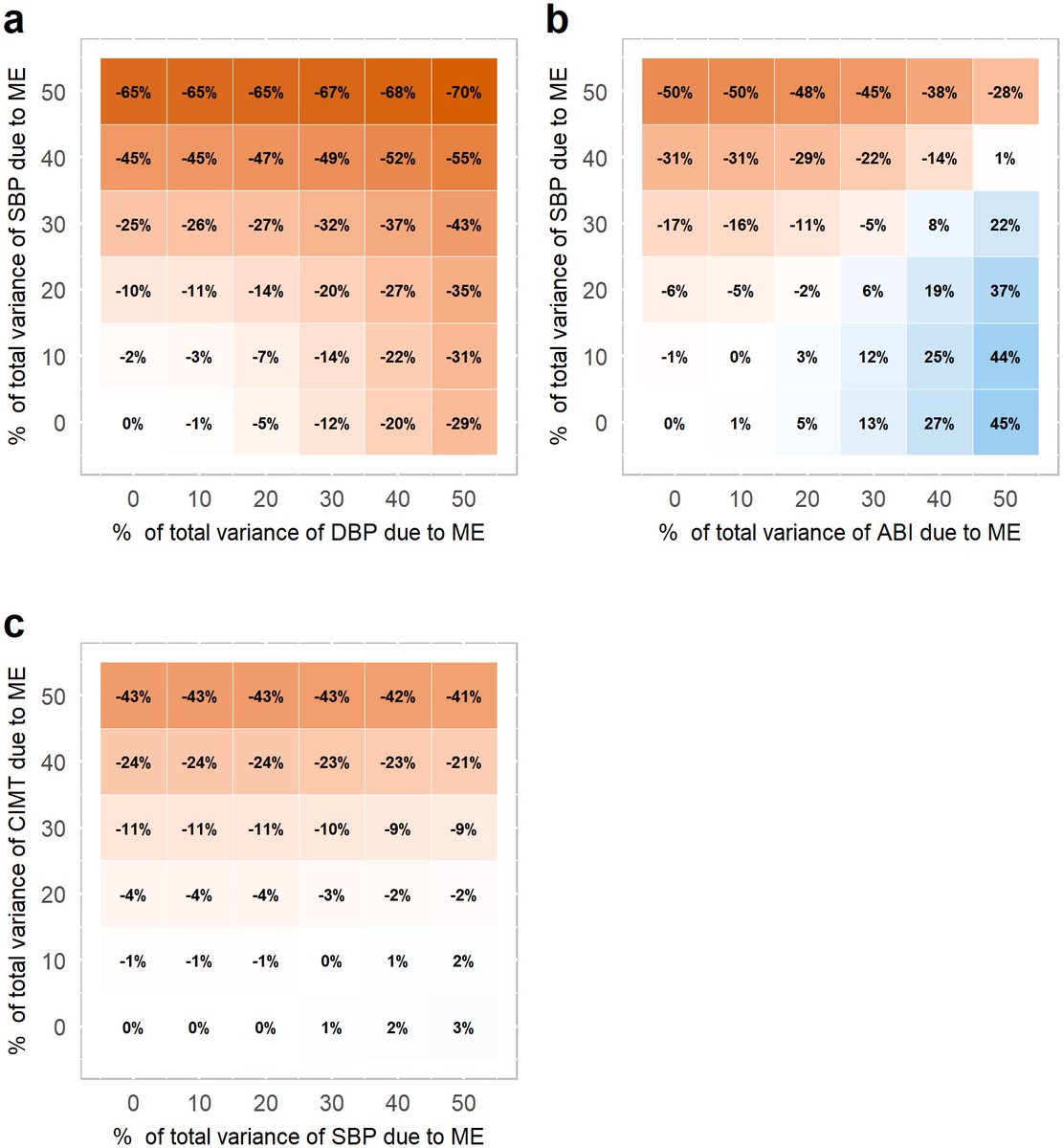

- structure of error (is it random error?)

- statistical model (linear regression isn’t logistic regression so to speak)

- role of the variable with error (is it the outcome?)

- other variables in the model

(by @goodmanmetrics)
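A minimal simulation sketch, assuming the list above describes what determines how measurement error affects an estimated association. It covers the first and third items: random error in a predictor attenuates a linear-regression slope, random error in the outcome leaves it roughly unbiased, and a systematic (non-random) error can inflate it instead. The function names, the true slope of 2, the unit variances and the "half its true value" error are illustrative choices, not taken from the thread.

```python
import random


def ols_slope(x, y):
    """Ordinary least-squares slope of y on x (one predictor plus intercept)."""
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def simulate(n=50_000, beta=2.0, seed=1):
    """Hypothetical illustration: compare slopes under different error structures."""
    rng = random.Random(seed)
    # True predictor and outcome: y = beta * x + noise, with var(x) = 1
    x = [rng.gauss(0, 1) for _ in range(n)]
    y = [beta * xi + rng.gauss(0, 1) for xi in x]

    # (a) Random error with variance 1 added to the predictor
    x_noisy = [xi + rng.gauss(0, 1) for xi in x]
    # (b) Random error with variance 1 added to the outcome instead
    y_noisy = [yi + rng.gauss(0, 1) for yi in y]
    # (c) Systematic, non-random error: predictor recorded at half its true value
    x_scaled = [0.5 * xi for xi in x]

    return {
        "no error": ols_slope(x, y),
        "random error in predictor": ols_slope(x_noisy, y),
        "random error in outcome": ols_slope(x, y_noisy),
        "systematic error in predictor": ols_slope(x_scaled, y),
    }


if __name__ == "__main__":
    for label, slope in simulate().items():
        print(f"{label:30s} slope = {slope:.2f}")
```

With these settings, theory predicts slopes near 2 (no error), 1 (random predictor error shrinks the slope by var(x) / (var(x) + var(error)) = 0.5), 2 (random outcome error) and 4 (the halved predictor doubles the slope). This shows that "measurement error" by itself does not tell you the direction of the bias; the error structure and which variable carries it both matter.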

daniellakens.blogspot.com/2017/12/unders… (by @lakens)

pdfs.semanticscholar.org/e834/97a00c43c…

jstor.org/stable/2987510…

R: This is our first meeting. Let’s talk about the problem for a maximum of 45 minutes and see whether I am the right person to help you. I have other things to do, too.

R: No. What specific part of the code do you want me to look at?

R: Not exactly. You want my help to fix a problem you have. I would rather have been involved from the start of the project.

R: I’ll give you these 45 minutes for free. Then, if we continue, we have to talk about a time schedule, authorship (I’m just another academic) and financial compensation (authorships just don’t pay my bills).

R: The things you want to do are very complicated. You have also spent less time learning statistics than I have spent learning French. I don’t speak French.