#ConventionEngine: Part Cinq - OLE Edition

ConventionEngine is *mostly* about PDB paths: directory paths that reflect something about the original code project and development environment. The paths are the signal. Where else will they show up? Why, in OLE objects! Let's explore...

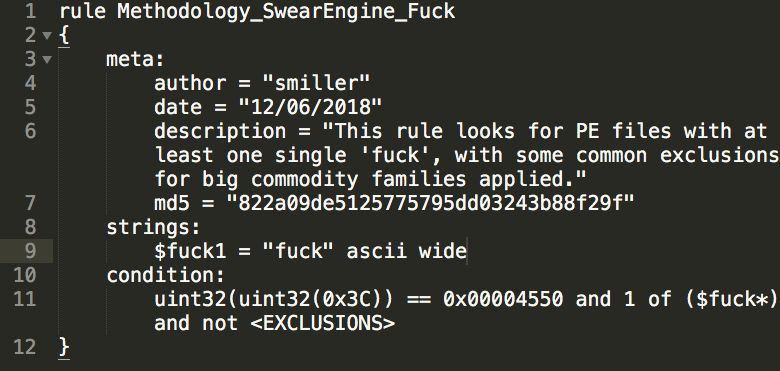

We had a revelation: seeing an RTF with an embedded OLE object is not that crazy, but when that embedded OLE has, for whatever reason, a full directory path in it, the whole object becomes so much more interesting. For example, an RTF with an OLE with C:\Users\ in it. Let's use Yara to take a measurement.

Here's a quick #dailyyara rule looking for RTFs with OLE objects with a path of C:\Users\ in it...somewhere...for some reason. This is odd, and unsurprisingly super threat dense.

gist.github.com/stvemillertime…
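
For readers who want to poke at the idea without Yara, here is a rough Python approximation of the same measurement. It is a sketch under assumptions, not the rule from the gist: it assumes the OLE payload sits in the RTF as hex text, treats either a `\objdata` control word or the OLE2 header bytes as "has an OLE", and looks for C:\Users\ in both ASCII and UTF-16LE form. The function name `rtf_ole_has_users_path` is made up for illustration.

```python
import re

RTF_MAGIC = b"{\\rt"  # RTF documents begin with "{\rt"

# Assumption: embedded OLE data appears as hex text inside the RTF.
OLE_MARKERS = (
    b"\\objdata",          # RTF control word that introduces object data
    b"d0cf11e0a1b11ae1",   # OLE2 compound file header, written as hex text
)

# C:\Users\ as hex text, in ASCII and UTF-16LE encodings.
USERS_PATH_HEX = (
    "C:\\Users\\".encode("ascii").hex().encode(),
    "C:\\Users\\".encode("utf-16-le").hex().encode(),
)


def rtf_ole_has_users_path(data: bytes) -> bool:
    """Return True if data looks like an RTF with OLE data holding C:\\Users\\."""
    if not data.startswith(RTF_MAGIC):
        return False
    # Hex blobs in RTF are often broken up by whitespace and mixed in case,
    # so drop whitespace and lowercase before searching.
    squeezed = re.sub(rb"\s+", b"", data).lower()
    has_ole = any(marker in squeezed for marker in OLE_MARKERS)
    has_path = any(path in squeezed for path in USERS_PATH_HEX)
    return has_ole and has_path
```

This is deliberately crude: it does not parse the RTF, does not check that the path falls inside the object data, and can match a hex string that starts on an odd nibble. It is a way to take a quick measurement, not a detection.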

The Convention for RTF OLEs with a C:\Users\ path shows up in relation to many malware families:

fireshadow

coldpot

badflick

woodslice

icefog

darkcheese

graphraft

redhawk

gingeryum

clubhouse

sodariver

wordmopper

sourdrop

doublepipe

dadstache

hawkball

bustedroad

& in relation to several CVEs too

Convention for RTF OLEs with C:\Users\ ...by which threat groups?

unc1890

unc2155

unc1608

unc229

unc1611

apt40.izzy

unc1819

unc1986

unc1614

unc1352

unc2361

unc1687

unc1432

unc1077

unc1987

unc1449

unc1575

unc1036

unc2082

What other ConventionEngine rules might be good? I imagine anything with *a* directory path in an OLE would be good, and beyond RTFs maybe we apply this to OOXML as well. I will continue to survey and experiment. Join the fun!
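
As one way to start surveying, the "any directory path" idea can be roughed out in Python for the RTF case. This is a sketch under assumptions, not an existing ConventionEngine rule: it assumes the object data is hex text, only handles ASCII paths that start with a drive letter, and the name `find_hex_paths` is hypothetical.

```python
import re

# Hex-text pattern for "<drive letter>:\" followed by printable ASCII bytes.
# 41-5a and 61-7a are A-Z and a-z; 3a5c is ":\"; 20-7f covers printable ASCII.
HEX_DRIVE_PATH = re.compile(
    rb"(?:4[1-9a-f]|5[0-9a]|6[1-9a-f]|7[0-9a])3a5c(?:[2-7][0-9a-f])+"
)


def find_hex_paths(data: bytes) -> list[str]:
    """Decode hex-encoded drive-letter paths found in RTF-style hex text."""
    # Hex blobs are often split by whitespace and mixed in case.
    squeezed = re.sub(rb"\s+", b"", data).lower()
    paths = []
    for match in HEX_DRIVE_PATH.finditer(squeezed):
        # Each matched pair of hex digits decodes to one ASCII byte.
        raw = bytes.fromhex(match.group(0).decode("ascii"))
        paths.append(raw.decode("ascii"))
    return paths
```

Printing what `find_hex_paths` returns across a corpus gives a quick look at which path conventions appear. Caveats: the pattern can match on an odd nibble boundary, so expect some noise, and OLE objects stored as raw binary (as in OOXML containers) would need a different approach.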
