Some personal news: today’s my last day at @Graphika_NYC.

My team did amazing investigative work and research into influence ops from Russia, Iran, China and many other places.

We’ve broken new ground, and I couldn’t be more proud of the team @camillefrancois and I built.

Next week, I’m starting at Facebook, where I’ll be helping to lead global threat intelligence strategy against influence operations.

I’m very excited to join one of the best IO teams in the world to study, catch and get ahead of the known players and emerging threats.

As a community - platforms, researchers and journalists - we’ve all come a long way since the dawn of this field of research.

Collectively, we’ve got better at detecting IO early, especially when they target major events like elections.

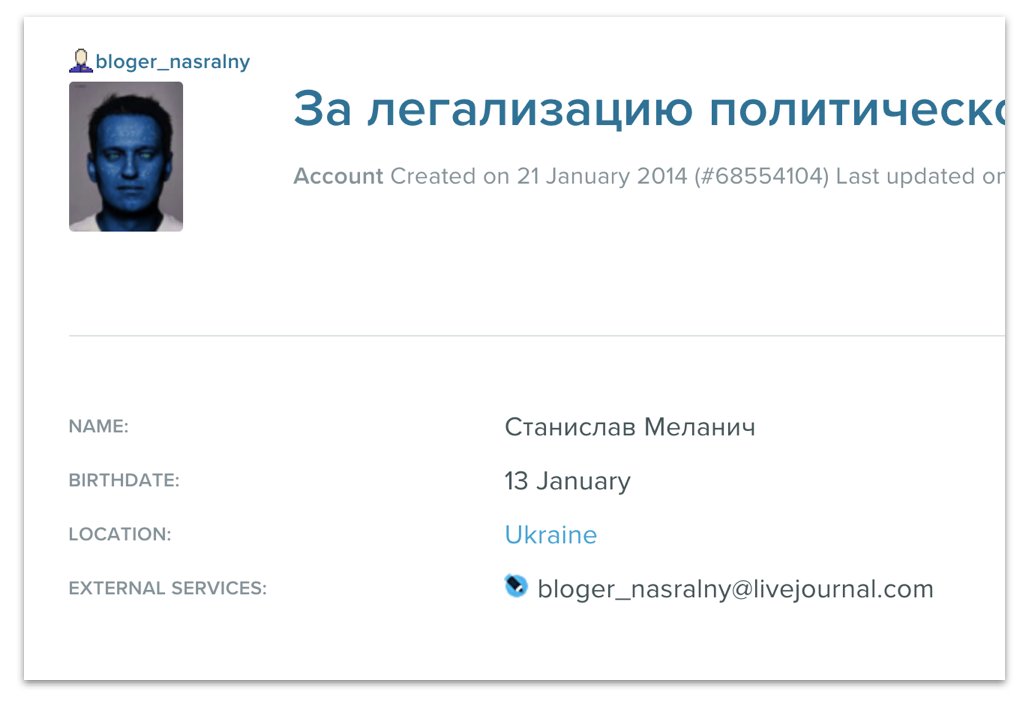

Secondary Infektion started with a Facebook takedown; it ended up being exposed for attempted interference in the 2019 UK election.

graphika.com/reports/UK-tra…

There were attempts at foreign interference in the US election in 2020, too, but they were caught early and exposed fast.

reuters.com/article/usa-el…

But to keep it that way, we need to keep getting better, because we know IO actors will evolve.

Just look at how many are using AI-generated profile pics, thinking it’ll help them hide.

Or using cutouts and co-opting authentic voices to amplify their ops.

(On the AI point, one of the ground-breaking moments we had at Graphika was investigating our first op that used large-scale generation of AI profile pics, in late 2019.

Naming it was fun, too.)

graphika.com/reports/operat…

So the next challenge is: how do we all keep ahead, and make the environment less and less hospitable for influence operations?

There are great teams out there working on this. I’m proud of what we’ve achieved with the @DFRLab and @Graphika_NYC teams over the years, and all our friends and colleagues in this field.

They remain crucial voices.

But the people who can make the most difference in tackling IO are the platforms themselves, including by driving collaboration with researchers and journalists.

And when it comes to detecting and exposing IO, the Facebook team’s leading the way.

I’m delighted to join that team and work with some of the world’s top investigators, not just to detect the current threats, but to find ways to get ahead of future ones.

It's been a privilege and pleasure to work with the Graphika team. Deepest thanks to @camillefrancois, @realShawnEib, @Lea_Ronzaud, @irahubert1, @MelanieFSmith, @Joseph___Carter and all my colleagues.

From Secondary Infektion to Spamouflage, it's been unforgettable.

There's going to be a lot more great research coming from Graphika, and some exciting news coming up, so keep watching.