ReStyle: A Residual-Based StyleGAN Encoder via Iterative Refinement🔥

This paper proposes an improved way to project real images into the StyleGAN latent space (a prerequisite for any further image manipulation).

🌀 yuval-alaluf.github.io/restyle-encode…

Thread 👇

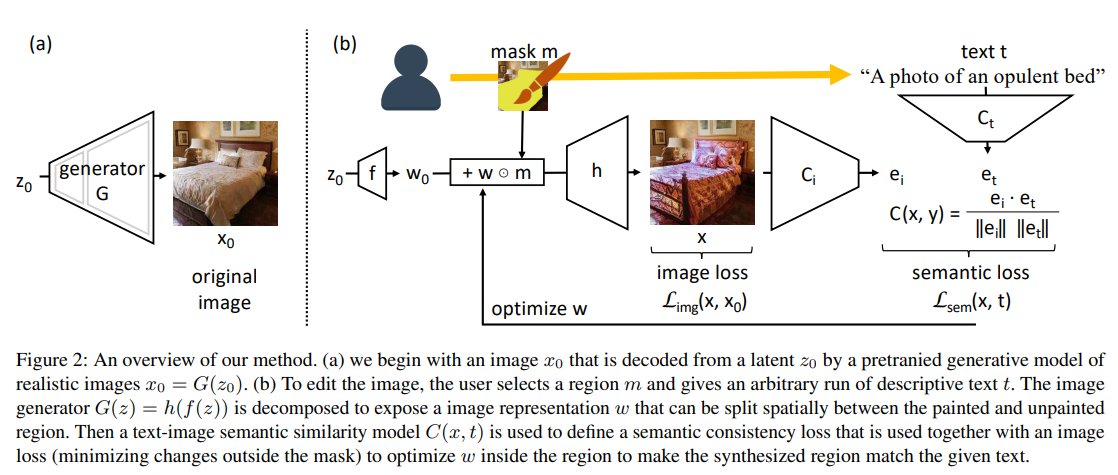

1/ Instead of directly predicting the latent code of a given real image in a single pass, the encoder is tasked with predicting a residual with respect to the current estimate. The initial estimate is simply the average latent code across the dataset. ...

2/ Inversion is done with multiple forward passes, iteratively feeding the encoder the output of the previous step along with the original input.
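To make steps 1/ and 2/ concrete, here is a minimal sketch of the refinement loop in Python with NumPy. It is not the authors' code: `restyle_invert`, `toy_generator`, and `toy_encoder` are hypothetical names, the "generator" is just a random linear map, and the "encoder" is a hand-written stand-in that returns half of the least-squares correction so that several steps are needed. In the real method both roles are played by neural networks (a pretrained StyleGAN and a trained encoder).

```python
import numpy as np


def restyle_invert(x, encoder, generator, w_avg, n_steps=5):
    """Refine a latent code by repeatedly adding encoder-predicted residuals."""
    w = w_avg.copy()           # initial estimate: the average latent code
    y = generator(w)           # reconstruction from the current estimate
    history = [w.copy()]
    for _ in range(n_steps):
        delta = encoder(x, y)  # encoder sees the original input and the previous output
        w = w + delta          # residual update of the latent code
        y = generator(w)       # new reconstruction, fed back in on the next pass
        history.append(w.copy())
    return w, history


# Toy stand-ins (assumptions for illustration, NOT StyleGAN or the trained encoder).
rng = np.random.default_rng(0)
A = rng.standard_normal((16, 4))  # maps a 4-dim "latent" to a 16-dim "image"
A_pinv = np.linalg.pinv(A)


def toy_generator(w):
    return A @ w


def toy_encoder(x, y):
    # Returns half of the least-squares latent correction for the residual x - y.
    return 0.5 * (A_pinv @ (x - y))


w_true = rng.standard_normal(4)
x = toy_generator(w_true)         # the "real image" we want to invert
w_avg = np.zeros(4)               # stand-in for the dataset-average latent code

w_hat, history = restyle_invert(x, toy_encoder, toy_generator, w_avg, n_steps=5)
errors = [float(np.linalg.norm(toy_generator(w) - x)) for w in history]
print(errors)                     # reconstruction error after each step
```

In this toy setup the reconstruction error shrinks at every pass, which mirrors the idea of converging in a handful of steps; the actual convergence behavior of the method depends on the trained networks.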

Notably, during inference, ReStyle's inversion converges after a small number of steps (e.g., < 5), ...

3/ ... taking less than 0.5 seconds per image. By comparison, optimization-based inversion takes several minutes per image.

The results are impressive! The L2 and LPIPS scores are comparable to those of optimization-based techniques, while inversion is two orders of magnitude faster!

Check out my Telegram channel for more reviews like this 😉🤙

t.me/gradientdude
