Does knowledge distillation really work?

While distillation can improve student generalization, we show it is extremely difficult to achieve good agreement between student and teacher.

arxiv.org/abs/2106.05945

With @samscub, @Pavel_Izmailov, @polkirichenko, Alex Alemi. 1/10

We decouple our understanding of good fidelity (high student-teacher agreement) from good student generalization. 2/10
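To make the distinction concrete, here is a minimal sketch of the two quantities being decoupled. It assumes fidelity is measured as top-1 agreement (the fraction of inputs where student and teacher predict the same class) and generalization as plain accuracy; the function names and the toy probabilities are hypothetical, chosen only for illustration.

```python
def argmax(row):
    """Index of the largest entry in a list of class probabilities."""
    return max(range(len(row)), key=row.__getitem__)


def top1_agreement(teacher_probs, student_probs):
    """Fidelity proxy: fraction of inputs where both models pick the same class."""
    matches = sum(
        argmax(t) == argmax(s) for t, s in zip(teacher_probs, student_probs)
    )
    return matches / len(teacher_probs)


def accuracy(probs, labels):
    """Generalization proxy: fraction of inputs classified correctly."""
    correct = sum(argmax(p) == y for p, y in zip(probs, labels))
    return correct / len(labels)


# Toy data (made up): four inputs, two classes.
labels = [0, 1, 1, 0]
teacher = [[0.7, 0.3], [0.6, 0.4], [0.2, 0.8], [0.9, 0.1]]
student = [[0.6, 0.4], [0.3, 0.7], [0.1, 0.9], [0.8, 0.2]]

teacher_acc = accuracy(teacher, labels)
student_acc = accuracy(student, labels)
fidelity = top1_agreement(teacher, student)
```

In this toy case the student is more accurate than the teacher precisely because it disagrees with the teacher on one input, so higher accuracy and imperfect fidelity coexist.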

The conventional narrative is that knowledge distillation "distills knowledge" from a big teacher to a small student through the information in soft labels. However, in actuality, the student is often not much more like the teacher than an independently trained model! 3/10
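For readers who want the "soft labels" spelled out, here is a rough sketch of the standard temperature-scaled distillation objective: the cross-entropy of the student's softened predictions against the teacher's. This is the textbook formulation, not necessarily the exact loss or temperature used in the paper; `temperature=4.0` and the example logits are assumptions.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax with temperature; higher temperature gives softer labels."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=4.0):
    """Cross-entropy of the student against the teacher's soft labels."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    return -sum(pt * math.log(ps) for pt, ps in zip(p_teacher, p_student))


# Made-up logits for a single input with three classes.
teacher_logits = [2.0, 1.0, 0.0]
matching_student = [2.0, 1.0, 0.0]
mismatched_student = [0.0, 2.0, 1.0]

loss_match = distillation_loss(teacher_logits, matching_student)
loss_mismatch = distillation_loss(teacher_logits, mismatched_student)
```

For a fixed teacher, this loss is minimized when the student's softened distribution equals the teacher's, which is why perfect fidelity corresponds to the optimum of the distillation objective.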

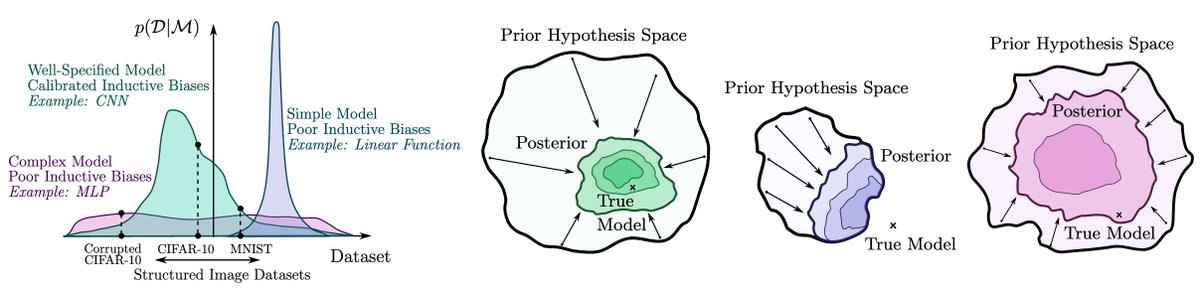

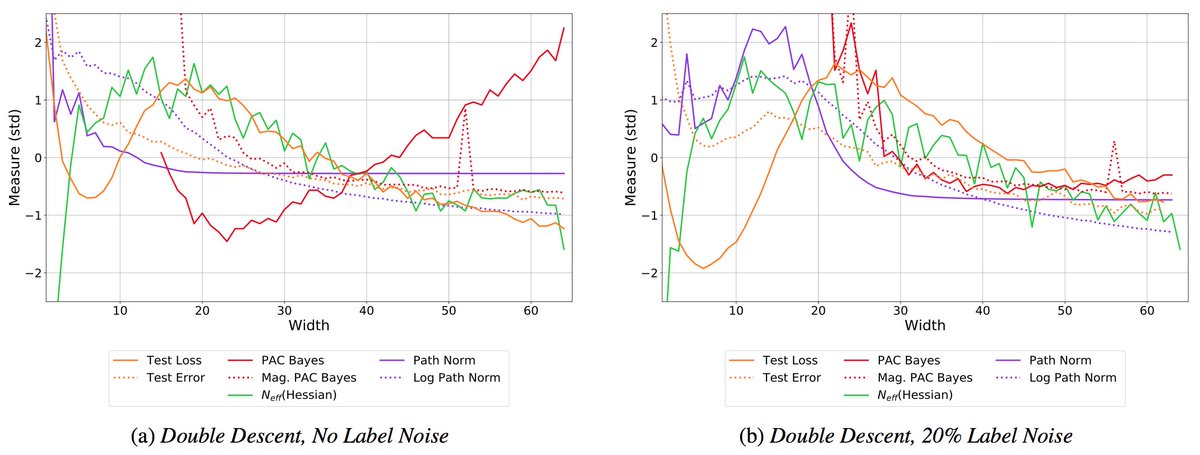

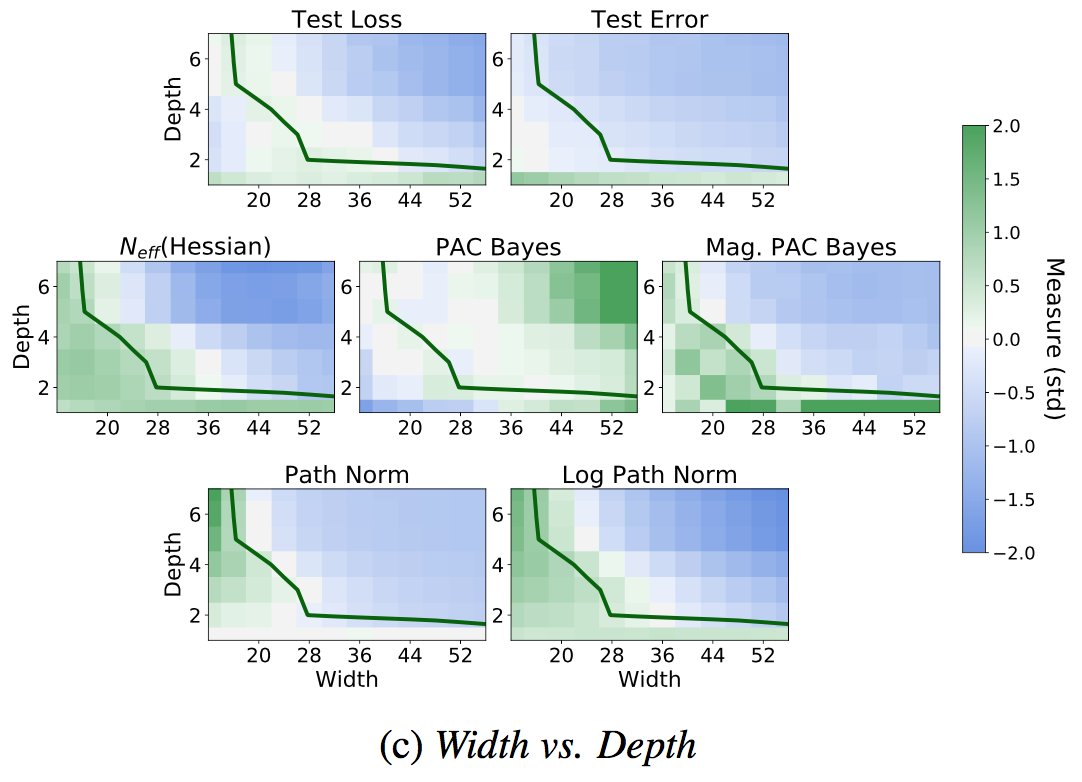

Does the student not have the capacity to match the teacher? In self-distillation, the student often outperforms the teacher, which is only possible by virtue of failing at the distillation procedure. Moreover, increasing student capacity has little effect on fidelity. 4/10

Does the student not have enough data to match the teacher? Over an extensive collection of augmentation procedures, there is still a big fidelity gap, though some approaches help with generalization. Moreover, what's best for fidelity is often not best for generalization. 5/10

Is it an optimization problem? We find adding more distillation data substantially decreases train agreement. Despite having the lowest train agreement, combined augmentations lead to the best test agreement. 6/10

Can we make optimization easier? We replace BatchNorm with LayerNorm to ensure the student can *exactly* match the teacher, and use a simple data augmentation that has the best train agreement. Many more training epochs and different optimizers only lead to minor changes in agreement. 7/10

Is there _anything_ we can do to produce a high-fidelity student? In self-distillation the student can in principle match the teacher. We initialize the student with a combination of teacher and random weights. Starting close enough, we can finally recover the teacher. 8/10
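One plausible reading of "a combination of teacher and random weights" is a convex combination, sketched below. The mixing coefficient `lam`, the Gaussian random initialization with `scale=0.1`, and the flat list of weights are all assumptions made for illustration; see the paper for the exact setup.

```python
import random


def interpolate_init(teacher_weights, lam, seed=0, scale=0.1):
    """Student init = lam * teacher + (1 - lam) * random (hypothetical helper).

    lam = 1.0 starts the student exactly at the teacher;
    lam = 0.0 is an ordinary random initialization.
    """
    rng = random.Random(seed)
    return [
        lam * w + (1.0 - lam) * rng.gauss(0.0, scale) for w in teacher_weights
    ]


# Made-up teacher weights.
teacher_weights = [0.5, -1.2, 0.3, 2.0]

at_teacher = interpolate_init(teacher_weights, lam=1.0)
near_teacher = interpolate_init(teacher_weights, lam=0.9)
random_init = interpolate_init(teacher_weights, lam=0.0)
```

Sweeping `lam` from 0 toward 1 moves the starting point closer to the teacher, which is the "starting close enough" knob described above.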

In general deep learning, we are saved by not actually needing to do good optimization: while our training loss is multimodal, properties such as the flatness of good solutions, the inductive biases of the network, and biases of the optimizer enable good generalization. 9/10

In knowledge distillation, however, good fidelity is directly aligned with solving what turns out to be an exceptionally difficult optimization problem. See the paper for many more results! 10/10
