Data availability has been an exciting new topic of discussion in the #CardanoCommunity recently

But it's also an abstract and complex topic

So here's a thread that simplifies "data availability" (DA)

And how a DA layer would function on #Ethereum 2.0

Data availability is the guarantee that the block proposer published all transaction data for a block

And that the transaction data is available to other network participants

Why is it important?

For this, one must understand how trustlessness works in public blockchains

• Block:

Each block has two major parts:

• The block header:

This contains general information (metadata) about the block

Such as the timestamp, block hash, block number, etc.

• The block body:

This contains the actual transactions processed as part of the block
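
The header/body split can be sketched as a small data structure. This is a minimal illustration, not any chain's real block format: the `body_hash` field and the JSON serialization are assumptions made for the example (real chains typically commit to the body with a Merkle root).

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class BlockHeader:
    number: int      # block number
    timestamp: int   # Unix time the block was produced
    body_hash: str   # hypothetical field: commitment to the block body


@dataclass(frozen=True)
class Block:
    header: BlockHeader
    body: tuple      # the transactions processed in this block


def hash_body(transactions) -> str:
    """Hash the transaction list using a deterministic serialization."""
    encoded = json.dumps(list(transactions), sort_keys=True).encode()
    return hashlib.sha256(encoded).hexdigest()


def make_block(number: int, timestamp: int, transactions) -> Block:
    """Build a block whose header commits to its body."""
    txs = tuple(transactions)
    return Block(BlockHeader(number, timestamp, hash_body(txs)), txs)


def body_matches_header(block: Block) -> bool:
    """True when the body is exactly what the header committed to."""
    return hash_body(block.body) == block.header.body_hash
```

Anyone holding only the header can later check a body they are handed, but they cannot check anything if the body is never published.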

Then there are three main actors in a public blockchain network

• Full node

• Light node

• Block-producing nodes

In a P2P network,

Block-producing nodes & full nodes are mixed to form a network

With light nodes linked to either of them

Block-producing nodes are responsible for packaging transactions and generating blocks

They have different names in different blockchains, such as

“Stakepools” in Cardano

“Miner/Mining pool” in Bitcoin & Ethereum

“Validator” in beacon chain of Ethereum 2.0

In a P2P network,

Full nodes (e.g. Daedalus) download new transactions & blocks for verification

Then broadcast only valid transactions & blocks to other nodes

To verify them,

A full node must have the complete current state of the network

Light nodes are different from full nodes

in that they download and verify only the block header of every new block

Therefore, light nodes can verify the validity of the block header

But cannot validate the transactions in the block body

When proposing new blocks,

The block producers must publish the entire block including the block body

Which contains the transaction "Data"

Nodes participating in consensus can then download the block's "Data"

And re-execute the transactions to confirm their validity
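
Re-execution can be sketched with a toy balance ledger. This is only an illustration under simple assumptions: the transaction shape (`from`, `to`, `amount`) and the function names are hypothetical, and real chains run far richer state transitions.

```python
def apply_transactions(balances: dict, transactions: list) -> dict:
    """Replay transfers on a copy of the state; raise ValueError on an invalid one."""
    state = dict(balances)
    for tx in transactions:
        sender, receiver, amount = tx["from"], tx["to"], tx["amount"]
        if amount <= 0:
            raise ValueError(f"non-positive amount in {tx}")
        if state.get(sender, 0) < amount:
            raise ValueError(f"{sender} cannot afford {amount}")
        state[sender] -= amount
        state[receiver] = state.get(receiver, 0) + amount
    return state


def block_is_valid(balances: dict, transactions: list, claimed_state: dict) -> bool:
    """Accept the block only if replaying its transactions yields the claimed state."""
    try:
        return apply_transactions(balances, transactions) == claimed_state
    except ValueError:
        return False
```

Note that the check needs the full transaction list as input. Without the block's data, there is nothing to replay.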

Without these nodes verifying transactions

Block proposers could get away with inserting malicious transactions into blocks

This is how nodes verify that new information is valid

Rather than having to trust that block producers are honest

("don't trust, verify")

So in this way,

DA guarantees that the block proposer published all transaction data for a block

And that the transaction data is available to other network participants

This is how a public blockchain reduces trust assumptions

By enforcing rules on data availability

So what happens,

If a validator publishes a block without the transaction data (block body)?

This situation is known as the “data withholding attack”

If a data withholding attack happens

Full nodes cannot verify the correctness of updates to the blockchain's state

This gives malicious block proposers an opportunity

To manipulate the protocol rules

And advance invalid state transitions on the blockchain network

That's why the visibility of block data to full nodes is critical

And because light clients rely on full nodes to verify the network’s state

DA ensures that full nodes can validate the block

And prevent the chain from getting corrupted and attacked by malicious actors

In short, DA is critical to the security of a public blockchain

Now that we have understood the basic concept of DA

let's look at the types of DA

In general,

There are two types of DA

• On-chain

• & Off-chain

• On-chain DA

On-chain DA is a feature of "monolithic blockchains" like Cardano or Ethereum 1.0

Because these #Blockchains manage

• Consensus

• Data availability

• & Transaction execution, on a single layer

Monolithic blockchains deal with the data availability problem

or in other words

The problem of

"how do we verify that the data for a newly produced block is available?"

By storing state data redundantly across the network

By storing data redundantly across the network

The blockchain protocol ensures

that full nodes have access to the data needed to

1. Re-execute transactions

2. Verify state updates

3. Flag invalid state transitions

But on-chain DA has a drawback

It creates a bottleneck on 2 key aspects of a #blockchain

What are they?

• Scalability

• & Decentralization

• Scalability

As nodes must download every block and replay the same transactions

Monolithic blockchains often take a longer time to process & finalize transactions

And as the size of the state grows, the time to process transactions also grows

• Decentralization

The transaction data of a #blockchain grows over time

An #Ethereum full node takes up around 842.48 GB at the time of writing

As the protocol requires full nodes to store increasing amounts of data

this will likely reduce the number of people willing to run a full node

• Off-chain DA

Due to the scalability limitations of the on-chain DA

Off-chain DA systems move data storage off the blockchain

Here,

Block producers don't publish transaction data on-chain

But provide a cryptographic commitment that attests to the data
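
A commitment can be sketched with a plain hash. This is a simplified illustration under stated assumptions: SHA-256 stands in for whatever commitment scheme a given system uses, and the function names are hypothetical. It shows that a commitment binds the producer to specific data, but only helps a verifier who can actually obtain that data.

```python
import hashlib
from typing import Optional


def commit(data: bytes) -> str:
    """The short value a block producer would post on-chain instead of the data."""
    return hashlib.sha256(data).hexdigest()


def check_against_commitment(commitment: str, data: Optional[bytes]) -> str:
    """Compare off-chain data, if anyone serves it, with the on-chain commitment."""
    if data is None:
        return "unavailable"  # data withheld: there is nothing to verify
    if commit(data) != commitment:
        return "mismatch"     # served data is not what was committed to
    return "ok"
```

The "unavailable" branch is the weak spot of off-chain DA: the commitment stays valid on-chain even when nobody can retrieve the data behind it.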

Scaling solutions like Validium & Plasma adopt this approach

By separating DA from Consensus & Execution

This is often considered an ideal way to scale blockchains without increasing node requirements

But it has negative implications for decentralization, security, & trustlessness

For example:

Participants in Validiums must trust block producers

Not to include invalid transactions in proposed blocks

Block producers can act maliciously by advancing invalid state transitions

and cripple attempts to challenge malicious transactions by withholding state data

Due to the problems associated with off-chain DA

Scaling solutions like Optimistic and ZK-rollups don't store their own transaction data

Instead they use a base layer, e.g. Ethereum Mainnet, as a data availability layer

Now we have seen that both on-chain & off-chain DA have limitations

So, is there an optimal solution for DA?

That allows for scalability & decentralization

There are a number of theoretical solutions to the DA problem

So let's see how Ethereum is trying to solve the DA problem

Danksharding (DS) is the proposed method for increasing data throughput on Ethereum’s execution layer

DS builds on the sharding principle of splitting network activity into shards

But instead of using the shards to increase transaction throughput directly

It uses them to increase space for “blobs” of data

Shards would store data

& attest to the availability of ~250 kB sized blobs of data for L2 protocols such as rollups

Here,

A particular technique is used to verify the availability of high volumes of data

Without requiring a single node to personally download all of the data

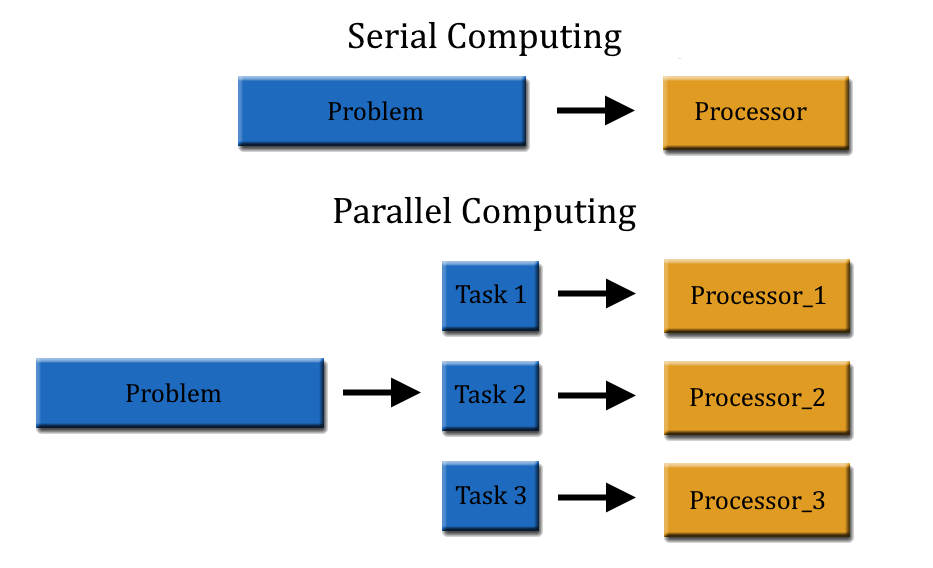

This technique is known as Data availability sampling (DAS)

With DAS

A node can download just a small portion of the full block of the data published by the rollup on L1

Which can give guarantees that are nearly identical to downloading and checking the entirety of the block

So how does it work?

1. "Erasure code" the data first

2. Data is then interpolated as a "polynomial"

3. Then the data is evaluated at a number of random indices on the blob

Let's go further into those steps👇

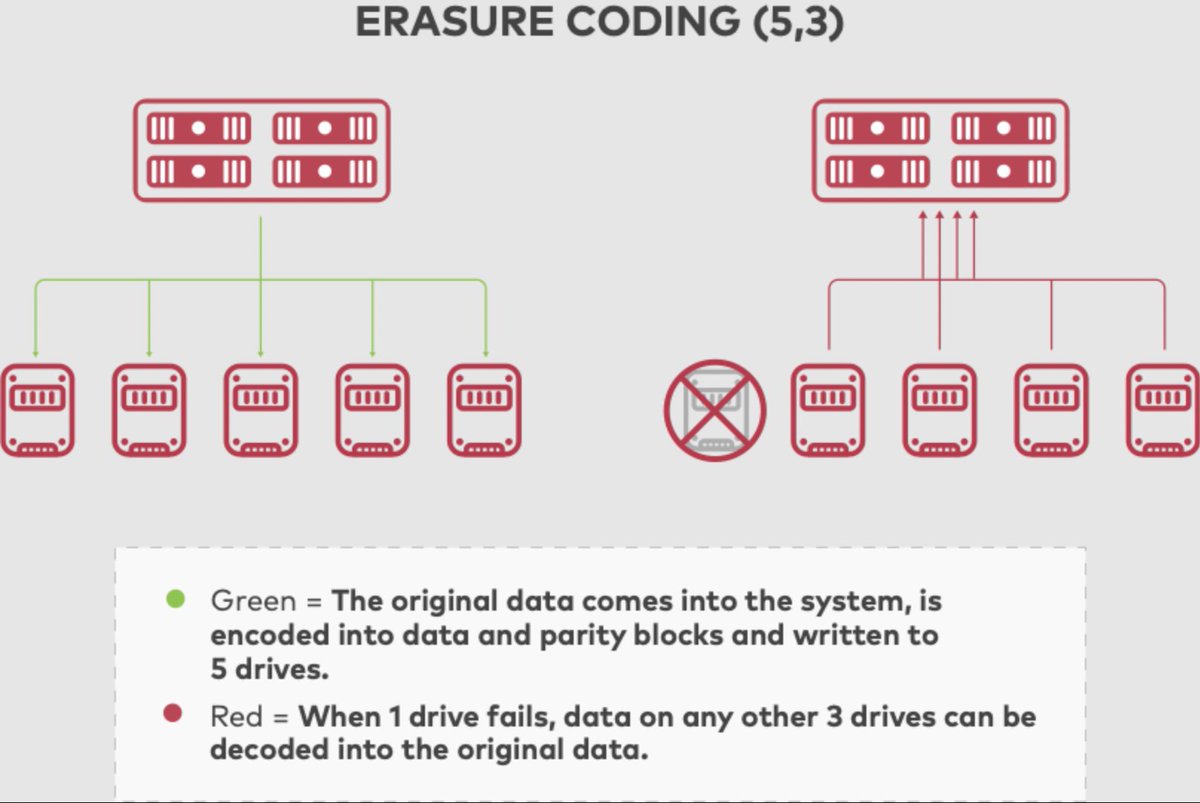

1. Erasure coding the data

Erasure coding (EC) is a method of data protection

In which data is broken into fragments, expanded & encoded with redundant data pieces

And stored across a set of different locations

2. Conversion of data as a "polynomial"

Once erasure coding of the data is completed

This data is then extended by converting it into a polynomial

Meaning,

The coded data is converted into a mathematical representation

Which is reconstructible from any large enough subset of its points
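
The polynomial idea can be sketched with Lagrange interpolation over a small prime field. This is a toy version under stated assumptions: the modulus `P`, the 2x extension factor, and the function names are chosen for illustration only, and production designs use a much larger field and faster algorithms.

```python
P = 65537  # small prime modulus, chosen only for illustration


def lagrange_eval(points, x):
    """Evaluate at x the unique polynomial of degree < len(points) through `points`, mod P."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        # pow(den, -1, P) is the modular inverse (Python 3.8+)
        total = (total + yi * num * pow(den, -1, P)) % P
    return total


def extend(data):
    """Erasure-code k chunks into 2k values: the data polynomial evaluated at x = 0..2k-1."""
    k = len(data)
    points = list(enumerate(data))
    return [lagrange_eval(points, x) for x in range(2 * k)]


def recover(known, k):
    """Rebuild the k original chunks from any k surviving {index: value} pairs."""
    points = list(known.items())[:k]
    return [lagrange_eval(points, x) for x in range(k)]
```

For example, `extend([17, 42, 7, 99])` yields 8 values whose first 4 are the original data, and `recover` returns the original 4 chunks from any 4 of those 8 values, which is the "50% is enough" property.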

3. Evaluation of data at random indices

At least 50% of the erasure-coded data must be available

The entire block can be reconstructed from that

So to withhold any data, an attacker would have to hide >50% of the block

While still tricking DAS nodes into thinking the data was made available when it wasn’t

The probability of <50% being available after many successful random samples is very small
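
How small is "very small"? A rough sketch, assuming each sample is independent and uniformly random (the function names are hypothetical): if less than half of the coded data is available, each sample succeeds with probability below 1/2, so n successful samples in a row happen with probability below (1/2)^n.

```python
import math


def fooled_probability(samples: int, available_fraction: float = 0.5) -> float:
    """Upper bound on the chance that every sample succeeds when only
    `available_fraction` of the coded data can be retrieved."""
    return available_fraction ** samples


def samples_needed(max_fooled: float, available_fraction: float = 0.5) -> int:
    """Smallest number of samples that pushes the bound down to `max_fooled`."""
    return math.ceil(math.log(max_fooled) / math.log(available_fraction))
```

Under these assumptions, `samples_needed(1e-9)` returns 30, so about 30 successful samples already leave less than a one-in-a-billion chance of being fooled.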

This process is combined with KZG commitments (a.k.a. Kate commitments)

To prove that the original data was erasure coded properly

To avoid this design forcing high system requirements on validators

A concept of proposer/builder separation (PBS) was introduced

1. Block builders bid on the right to choose the contents of the slot

2. Proposers need only select the valid header with the highest bid
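
The proposer's side of PBS can be sketched as a simple auction. This is an illustration only: the `Bid` type, its fields, and the `is_valid_header` callback are hypothetical stand-ins, not part of any Ethereum specification.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Optional


@dataclass(frozen=True)
class Bid:
    builder: str  # who built the block
    header: str   # stand-in for the block header the builder commits to
    amount: int   # what the builder offers to pay the proposer


def select_winning_bid(
    bids: Iterable[Bid], is_valid_header: Callable[[str], bool]
) -> Optional[Bid]:
    """Pick the highest bid among valid headers; the proposer never sees the block body."""
    valid = [bid for bid in bids if is_valid_header(bid.header)]
    return max(valid, key=lambda bid: bid.amount, default=None)
```

The proposer's work here is just a filter and a max over headers, which is why it stays cheap no matter how large the block body is.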

In this case

Only the block builder needs to process the entire block

All other validators and users can verify the blocks very efficiently through DAS

Credits: @Delphi_Digital

Unfortunately, PBS enables the builders to censor transactions

A Censorship Resistance List (crList) helps to put a check on this power

Proposers specify a list of all eligible transactions in the mempool

Then the builder will be forced to include them unless the block is full
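
The crList rule can be sketched as a single check on the builder's block. This is a simplified illustration: measuring capacity as a transaction count is an assumption made for the example (a real block limit would be expressed in gas), and the function name is hypothetical.

```python
def satisfies_cr_list(block_txs: list, cr_list: list, block_capacity: int) -> bool:
    """A builder's block passes if it is full or includes every crList transaction."""
    if len(block_txs) >= block_capacity:
        return True  # a full block is exempt from the inclusion rule
    included = set(block_txs)
    return all(tx in included for tx in cr_list)
```

With spare room in the block, leaving out even one listed transaction makes the check fail, which is what limits the builder's ability to censor.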

TL;DR -

• Ethereum has embraced a rollup-centric roadmap

• And "Danksharding" is a step in that direction

• Creating an efficient data availability layer for rollups building on top of #Ethereum

• Is this model the right path for DA? Only time will tell

That's it for now!

Give me a follow @Soorajksaju2

if you never want to miss a weekly update from the Cryptoverse and #Cardano

Want deep dives into crypto metrics & L1 assessments?

Then subscribe to the weekly Just The Metrics Newsletter👇

bit.ly/JustTheMetrics…
