Will #ChatGPT replace humans?

Everyone’s got an opinion, but 99% of people don’t understand how it works

I wanted to learn so I took a 400-hour AI bootcamp

Here’s an explanation of how GPT-3 works that a 5-year-old can understand

Read it and make up your own mind

🧵

👇

2/

The following thread will answer five questions using little to no technical jargon:

• What is #AI?

• What are the benefits of AI?

• How does AI work? (in particular, GPT-3 and #ChatGPT)

• What are its Limitations?

• What’s the Long-Term Potential of AI?

3/

🔶 What is AI?

Virtually all “artificial intelligence” today is machine and / or deep learning

Machine and deep learning are advanced forms of pattern recognition

So when you hear the term “AI”, you should mentally substitute “advanced pattern recognition”

4/

🔶 What are the benefits of AI?

This isn’t to say that advanced pattern recognition isn’t highly useful

Indeed, computers can use it to:

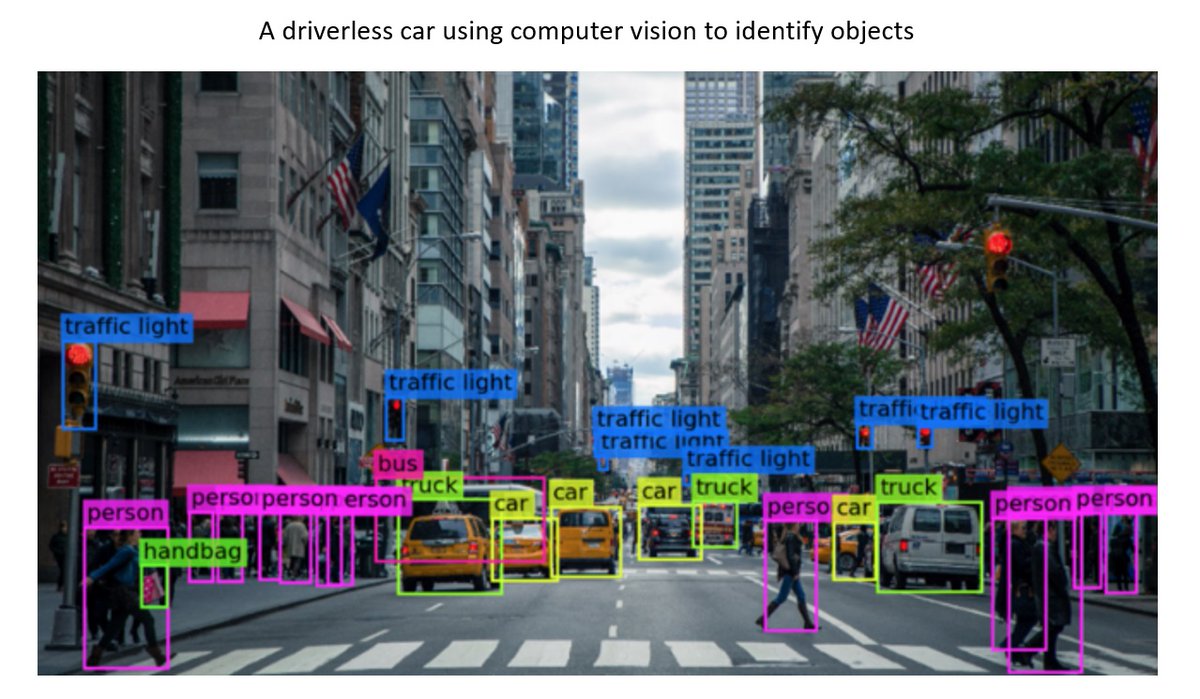

• Identify pictures

• Recommend movies on Netflix

• Translate languages

• Drive cars

• Converse with humans

(and much more!)

5/

🔶 How does AI work?

To understand how AI works, let’s examine one of the most popular models today – GPT-3

#GPT3 is a highly advanced “language model” created by #OpenAI. Models from the GPT-3 family are the engine behind ChatGPT, and the original Dall-E was built on a version of GPT-3

So what is a language model?

6/

Language models read billions of pages of text and find patterns between words and sentences

For example, the words “I warmed my bagel in the____” are generally followed by words like “oven” or “microwave”

(you would almost never see “I warmed my bagel in the baseball”)

7/

Language models use their knowledge of these patterns to make predictions

So when you ask a language model to predict the next word in the phrase: “I warmed my bagel in the ____”, it will use probability to guess “oven” or “microwave”
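
Here is a minimal sketch of that idea in Python. It is not how GPT-3 is built: the five-sentence corpus and the function name `next_word_probabilities` are made up for illustration, and it simply counts which word follows the prompt.

```python
from collections import Counter

# Tiny made-up corpus standing in for "billions of pages of text" (assumption).
corpus = [
    "i warmed my bagel in the oven",
    "i warmed my bagel in the microwave",
    "i warmed my bagel in the oven",
    "i warmed my soup in the microwave",
    "i threw the ball in the park",
]


def next_word_probabilities(prompt, sentences):
    """Count which word follows `prompt` in the corpus and convert counts to probabilities."""
    prompt_words = prompt.lower().split()
    n = len(prompt_words)
    counts = Counter()
    for sentence in sentences:
        words = sentence.split()
        # Slide over the sentence and look for the prompt followed by one more word.
        for i in range(len(words) - n):
            if words[i:i + n] == prompt_words:
                counts[words[i + n]] += 1
    total = sum(counts.values())
    if total == 0:
        return {}
    return {word: count / total for word, count in counts.items()}


probs = next_word_probabilities("I warmed my bagel in the", corpus)
print(probs)
```

In this toy corpus “oven” follows the prompt twice and “microwave” once, so “oven” gets the higher probability and “baseball” never shows up at all.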

8/

The cool thing about #GPT3 is that it can use the patterns it identified to perform related tasks:

• Differentiate word ordering: “I used the __ to warm my bagel”

• Answer questions: “Where should I warm my bagel?”

• Understand Synonyms: “I heated my bread in the __”

9/

GPT-3 does this using three innovations:

• Positional Encoding

• Attention

• Self-Attention

Let’s discuss each of these:

10/

🔹 Positional Encoding

The sequence of words in a sentence matters

For example, “I used the oven to warm my bagel” is roughly the same as “I warmed my bagel in the oven”

“I warmed my OVEN in the BAGEL”, however, has a very different (and nonsensical) meaning

11/

As such, when performing its analysis, GPT-3 “encodes” each word in a sentence to give it an understanding of its relative position

For example, “I am a Robot” is

I = 0

Am = 1

A = 2

Robot = 3
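
The numbering above can be sketched in a few lines of Python. This is a simplified illustration: the helper name `encode_positions` is hypothetical, and real transformer models typically turn each position into a vector of numbers rather than a single integer.

```python
def encode_positions(sentence):
    """Pair each word with its 0-based position in the sentence."""
    return [(word, position) for position, word in enumerate(sentence.split())]


encoded = encode_positions("I am a Robot")
print(encoded)

# Same words, different order: the position tags are what tell the two apart.
sensible = encode_positions("I warmed my bagel in the oven")
nonsense = encode_positions("I warmed my oven in the bagel")
print(sensible != nonsense)
```

Without the position tags, the last two sentences would just be the same bag of words.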

12/

🔹 Attention

Once the order of a sentence is encoded, GPT-3 uses a process known as “attention”

Attention tells the AI what words it should focus on when determining patterns and relationships

13/

For example, take the sentence “Bark is very cute and he is a dog”

What does “he” refer to?

This is an easy question for a human to answer, but more difficult for an AI

Even though “and” and “is” are the closest words to “he”, they don’t give any context

14/

As such, AI needs to learn to “weight” different words in the sentence to learn what’s important

By analyzing billions of similar sentences, it can learn that “is”, “very”, “cute”, “and”, “is”, and “a” are NOT very important

But “Bark” and “dog” are
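
A rough sketch of what “weighting” looks like in Python. The relevance scores below are invented by hand for the word “he”; a trained model would learn them from data. The softmax step, which turns raw scores into weights that add up to 1, is the standard one used in attention.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    exps = [math.exp(s) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


words = ["Bark", "is", "very", "cute", "and", "he", "is", "a", "dog"]
# Hypothetical relevance of each word to "he" (assumption, not learned values).
scores = [4.0, 0.5, 0.2, 1.0, 0.3, 0.0, 0.5, 0.1, 3.5]

weights = softmax(scores)
ranked = sorted(zip(words, weights), key=lambda pair: pair[1], reverse=True)
for word, weight in ranked:
    print(f"{word:>5}: {weight:.2f}")
```

With these made-up scores, “Bark” and “dog” soak up most of the weight and the filler words get very little.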

15/

This allows AI to find relationships even when the word order is different

For instance, through reading the sentence “I warmed my bagel in the oven” a million times, it has related the combination of the words “warm” and “bagel” to the word “oven”

16/

So rephrasing the statement “I warmed my bagel in the ___” into a question, “where should I warm my bagel”, is largely irrelevant from the AI’s point of view

Because it’s focusing on the words “warm” and “bagel”, it knows the next logical word is probably “oven”

17/

🔹Self-Attention

One of the coolest things about #GPT3 is that it can give what humans would describe as “context” to words

For instance, it can determine that “warmed” and “heated” are synonyms and that “bagel” and “bread” are highly related

18/

It does this through a process known as “self-attention”, which is the analysis of the relationship of words within a sentence

For instance, take the sentence:

“The King ordered his troops to transport his gold”

What does that tell us?

19/

#AI can glean a lot from this sentence

It can determine that Kings have authority (“ordered”), are rich (“gold”) and are male (“his”)

Because Emperors also have authority, are rich and are male, AI can determine that “Emperor” is a synonym for “King”

20/

Similarly, a Queen has authority, is rich and is female

So AI can tell that King and Queen are related

In fact, from a mathematical perspective you could say that King – Man + Woman = Queen
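
To make the King – Man + Woman = Queen arithmetic concrete, here is a toy sketch in Python. The three-number vectors (authority, wealth, gender) are hand-written assumptions that mirror the traits listed above; real models learn vectors with hundreds or thousands of dimensions.

```python
import math

# Hand-made "meanings": (authority, wealth, gender), gender +1 = male, -1 = female.
# These numbers are illustrative assumptions, not values from any real model.
vectors = {
    "king":    [1.0, 1.0, 1.0],
    "queen":   [1.0, 1.0, -1.0],
    "emperor": [1.0, 0.9, 1.0],
    "man":     [0.0, 0.0, 1.0],
    "woman":   [0.0, 0.0, -1.0],
}


def cosine(a, b):
    """Cosine similarity: 1.0 means the two vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest(target, exclude):
    """Return the known word whose vector is most similar to `target`."""
    candidates = {w: v for w, v in vectors.items() if w not in exclude}
    return max(candidates, key=lambda w: cosine(candidates[w], target))


# king - man + woman, computed one dimension at a time
target = [k - m + w for k, m, w in zip(vectors["king"], vectors["man"], vectors["woman"])]

analogy = nearest(target, exclude={"king", "man", "woman"})
synonym = nearest(vectors["king"], exclude={"king"})
print(analogy, synonym)
```

In this toy setup the analogy lands on “queen”, and the word closest to “king” is “emperor”.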

21/

This allows #AI to understand that the sentence “I warmed my bagel in the oven” is similar to “I heated my bread in the oven”

22/

When you combine the concepts of positional encoding, attention and self-attention, language models can do some extremely impressive things

GPT-3 can:

• Create art works

• Write business plans

• Code apps

• Write novels

• Answer complicated questions

23/

In fact, one Twitter user - @tqbf - asked #ChatGPT to “write a biblical verse in the style of the king james bible explaining how to remove a peanut butter sandwich from a VCR” and got the following response

24/

🔶 Limitations of AI

While extremely impressive, it’s important to remember that GPT-3 is still just an advanced form of pattern recognition & probability

As such, asking it to write a 10K word novel is technically no different than asking it “where can I warm my bagel”

25/

In fact, ChatGPT isn’t even answering your questions, because it has no concept of what a question is

It’s simply using probability to find the next word in the sequence:

“What should I use to warm my bagel?”

It sees “warm” & “bagel” and knows that it’s probably “oven”

26/

If you really want to get technical, #GPT3 doesn’t even understand the concept of a “word”

Computers can only recognize binary so everything is translated into 0s and 1s

27/

So if you ask #AI to complete the sentence “How are ___”, it would see:

01001000 01101111 01110111 00100000 01100001 01110010 01100101

28/

From analyzing patterns, it knows that “How are” - 01001000 01101111 01110111 00100000 01100001 01110010 01100101 - is often followed by the following series of numbers:

00100000 01111001 01101111 01110101 00001010

30/

So the resulting “answer”:

01001000 01101111 01110111 00100000 01100001 01110010 01100101 00100000 01111001 01101111 01110101 00001010

Translates to “how are you”
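
You can reproduce the text-to-binary step yourself with a short Python sketch. It uses plain ASCII, and the helper names `to_bits` and `from_bits` are made up for this example. In practice language models work on numbered word pieces called tokens rather than raw bits, but the point stands: the model sees numbers, not words.

```python
def to_bits(text):
    """Encode ASCII text as space-separated 8-bit binary strings."""
    return " ".join(format(byte, "08b") for byte in text.encode("ascii"))


def from_bits(bits):
    """Decode space-separated 8-bit binary strings back into ASCII text."""
    return bytes(int(chunk, 2) for chunk in bits.split()).decode("ascii")


prompt_bits = to_bits("How are")
completion_bits = to_bits(" you\n")  # leading space, then "you", then a newline
print(prompt_bits)
print(completion_bits)
print(repr(from_bits(prompt_bits + " " + completion_bits)))
```

Note the `00100000` byte for the space between “are” and “you”, and the final `00001010`, which is a newline.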

31/

🔶 Long-Term Vision

Perhaps the biggest outstanding question is whether technologies like GPT-3 will lead to “artificial general intelligence” (#AGI)

That is, AI that can think & reason like a human (and even be conscious)

Many data scientists and AI researchers say no

32/

This isn’t because they don’t think AGI is possible, just that machine & deep learning aren’t the way to get there

They point to the fact that systems like #GPT3 aren’t really “thinking” per se, they’re just predicting the most statistically likely association of words

33/

In fact, many criticize deep learning systems for being:

• Greedy

• Brittle

• Opaque

Let’s dig into each

34/

🔹 Greedy

Deep learning networks require a LOT of data to learn

For instance, you might need to show AI tens to hundreds of thousands of pictures of cats before it can accurately identify a cat

In contrast, a human infant can identify a cat after seeing one or two pics

35/

One of the main problems with this is the computing resources needed to train #AI are increasing at a much faster rate than the supply

MIT estimates that requirements double every 3-4 months (vs. Moore's Law, which states that supply doubles every 2 years)
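
A quick back-of-the-envelope sketch in Python shows how fast that gap opens up. The two-year horizon and the 3.5-month midpoint are assumptions picked for illustration; the doubling periods are the ones quoted above.

```python
def growth_factor(months, doubling_period_months):
    """How many times larger a quantity is after `months` if it doubles every period."""
    return 2 ** (months / doubling_period_months)


horizon = 24  # two years, in months (assumption)

demand = growth_factor(horizon, 3.5)  # midpoint of the quoted 3-4 month range
supply = growth_factor(horizon, 24)   # the 2-year doubling quoted for Moore's Law

print(f"demand grows about {demand:.0f}x, supply grows {supply:.0f}x")
```

Over two years supply doubles once, while demand at the quoted pace grows somewhere between 64x (4-month doubling) and 256x (3-month doubling).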

36/

🔹Brittle

Deep learning networks often fail to do things that humans consider relatively simple

When prompted for images of a “horse riding an astronaut”, early versions of Dall-E 2 kept producing images of an astronaut riding a horse

37/

In fact, that’s why CAPTCHA tests exist

Even though #GPT3 can write a novel in the style of Hemingway or Faulkner, other deep learning networks can’t distinguish between a Chihuahua and a Blueberry Muffin

38/

While some of these mistakes are funny, they can also be dangerous

For instance, a Tesla almost ran over a roadside worker carrying a stop sign

While it knew what a person was and what a stop sign was, it didn’t know what to make of the combination of the two

39/

🔹 Opaque

Human beings can explain their decision-making process

Deep learning systems can’t

We don't know how they identify the patterns they do, and there's a good argument to make that neither do they

40/

Yes, deep learning will get much better over time, but when asking yourself if it will become conscious, creative, etc… you need to ask the following question:

Can pattern recognition and statistical analysis lead to advanced intelligence, creativity, reasoning, etc…?

41/

Ultimately, one of the reasons this is so tough to answer is that we’re not entirely sure how humans think and reason, or even what makes us conscious

So I believe the debate over the future of AGI is ultimately philosophical, rather than technical

I hope you've found this thread helpful

I usually write on crypto - in particular deep fundamental analysis of Web3 protocols

If you're into that kind of thing follow me at @MTorygreen

If you like the AI stuff, give this a like /RT and let me know if you'd like to see more

https://twitter.com/MTorygreen/status/1603072429039190018
