Thread Reader helps you read and share Twitter threads easily!

I'm @ThreadReaderApp a Twitter bot here to help you read threads more easily. To trigger me, you just have to reply to (or quote) any tweet of the thread you want to unroll and mention me with the "unroll" keyword and I'll send you a link back on Twitter 😀

— Thread Reader App (@threadreaderapp) November 25, 2017

An X thread is a series of posts by the same author connected with a line!

From any post in the thread, mention us with the keyword "unroll"

@threadreaderapp unroll

Recent

Mar 6

Read 3 tweets

The United States has handed the Chinese an encyclopedia on its military doctrines.

The war involving the US and Israel is being monitored and recorded in real time by LEO satellite constellations such as Jilin-1, which are even capable of capturing 4K UHD video.

Today, China operates at least three LEO constellations comprising at least 300 satellites dedicated to espionage or dual-use purposes.

From the images constantly released about American bases, it is clear that the Chinese are building a true encyclopedia on U.S. naval and air doctrines.

Every ship positioning, field tactic, refueling time, and ammunition resupply is being monitored by Chinese satellites. This includes the exact location and behavior of air defenses, their mapped reaction times, missile trajectories, and reprogramming durations.

Nothing escapes the Chinese gaze. In this conflict, they have already mapped and publicly released data on multiple American bases in the region, even identifying the exact number and models of aircraft on the ground.

The war against Iran is giving the Chinese something they never had in the Ukrainian theater: the opportunity to study and document American forces in detail.

To give you an idea, in 2025 the Chinese recorded a video of Atlanta’s airport purely to demonstrate their capability.

I believe the same kind of videos are being produced daily on the American front against Iran.

Never in history has a U.S. conflict been observed from the skies at this level, both tactically and strategically.

The price of the Iran war is high in many ways, as I have always said.

And this single episode is giving the Chinese decades of planning and improvement in one go.

(Atlanta Airport)

Mar 6

Read 10 tweets

53 Dems vote against declaring Iran a state sponsor of terror

Alexandria Ocasio-Cortez, Ilhan Omar, Rashida Tlaib and Ayanna Pressley among 53 Democrats opposing nonbinding House measure

1)foxnews.com/politics/53-de…

The article only lists three. In the U.S. House vote on H. Res. 1099 (March 5, 2026) reaffirming that Iran is the world’s largest state sponsor of terrorism, the vote breakdown was 372 Yea – 53 Nay – 2 Present – 5 Not Voting.

2)

Here are the 53 Democrats who voted against the resolution:

1. Donald S. Beyer Jr.

2. Suzanne Bonamici

3. André Carson

4. Greg Casar

5. Joaquin Castro

6. Yvette D. Clarke

7. Steve Cohen

8. Danny K. Davis

9. Maxine Dexter

10. Lloyd Doggett

11. Dwight Evans

12. Lizzie Fletcher

3)

Mar 6

Read 4 tweets

3 interesting findings:

1. 88% of college students say they pretended to be more progressive than they are to succeed academically or socially. 80% of students say they submitted class work misrepresenting their real views to conform to the progressive views of the professor.

2. Intelligence is inversely correlated with pathological attitudes toward celebrities. In other words, people with lower IQ scores believe that if they meet their favorite celebrities, these celebrities would enjoy speaking with them, and that they'd have deep hidden connections

3. Women who self-identify as “feminists” exhibit much stronger preferences for premium beauty products as compared with non-feminist women. The practical purpose and effect of these investments is to help elite women stand out relative to female rivals.

Mar 6

Read 4 tweets

Designing policies for existing governments is too shallow an intervention to be robust to AGI.

Long-term AGI governance requires rethinking what nation-states even are.

The only serious attempt I’ve seen to intervene at that level was @Dominic2306’s Brexit campaign.

Brexit was a big win for national sovereignty—and it was driven by principled, courageous political philosophy.

Idk how explicitly Dom planned for Brexit to improve AI governance. But he was early to AI safety, so I expect it factored into his worldview.

Be more like Dom.

Specifically I expect that Dom reasoned something like “in an accelerating world, being tied to EU bureaucracy will increasingly be a death sentence for the UK’s ability to govern sanely”.

The fight for sane British governance continues but that rough argument still seems solid.

Mar 6

Read 6 tweets

My proposal for re-establishing US deterrence in the Strait of Hormuz.

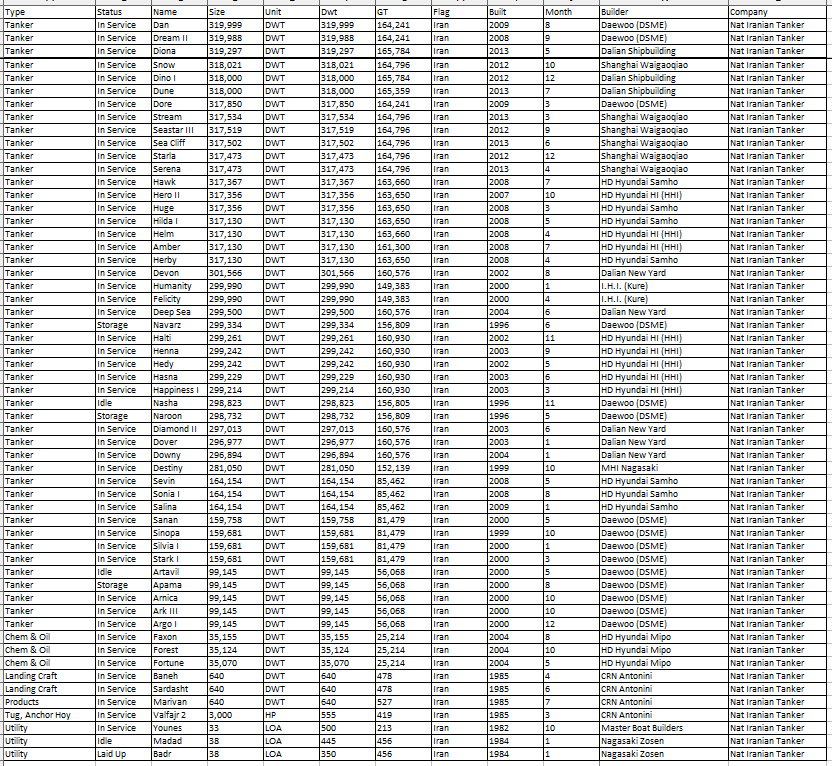

1. Iran’s tanker fleet is below, including 38 VLCCs which they depend on for their crude oil exports.

Issue an ultimatum to Iran: Every time you attack a merchant vessel, we torpedo one of your vessels.

2. They will attack a vessel anyway, because they won’t believe you’ll go through with it.

Immediately destroy one of their VLCCs.

Get nice footage.

Repeat.

After the first 5 VLCCs, they will begin to realize that they are going to run out of tankers to ship their oil.

3. After this, Iran will become increasingly dependent on 3rd parties to ship their oil.

US Treasury can exert pressure on these 3rd parties, and <cough> dissuade them from coming to the rescue.

If they don’t listen, seize a couple of vessels.

They’ll stop ballasting in.

Mar 6

Read 3 tweets

@agustinavcid @stephenehorn If what you claim is correct, immigration authorities will take note of it.

Sadly, such stories of individual situations never mention all relevant facts, so public hearts have hardened.

Media bear substantial responsibility for this phenomenon.

Sadly, I am not sympathetic.

@agustinavcid @stephenehorn Immigration is a civil violation - not criminal, as media constantly remind us - so our great nation’s high-powered, life-tenured, independent federal judiciary never issues a warrant.

You appear to insist on something that literally does not exist in immigration cases.

@agustinavcid @stephenehorn @threadreaderapp please unroll

Mar 6

Read 30 tweets

1/ A Russian army officer who briefed Vladimir Putin yesterday on the evils of Telegram has been exposed as being a premium Telegram user who doesn't even have an account on the state-approved messenger MAX. Russian warbloggers have erupted in outrage. ⬇️

2/ During the briefing, Lt Col Irina Godunova, a Russian army communications specialist, told Putin that Telegram was "considered a hostile means of communication" and that work was ongoing to "refine MAX" so that "everything will work well on the front line".

3/ Telegram plays a crucial part in frontline Russian military communications, as the thread below highlights. Russian warbloggers, many of them soldiers serving in Ukraine, have vociferously protested the Russian government's apparent plan to block it.

Mar 6

Read 5 tweets

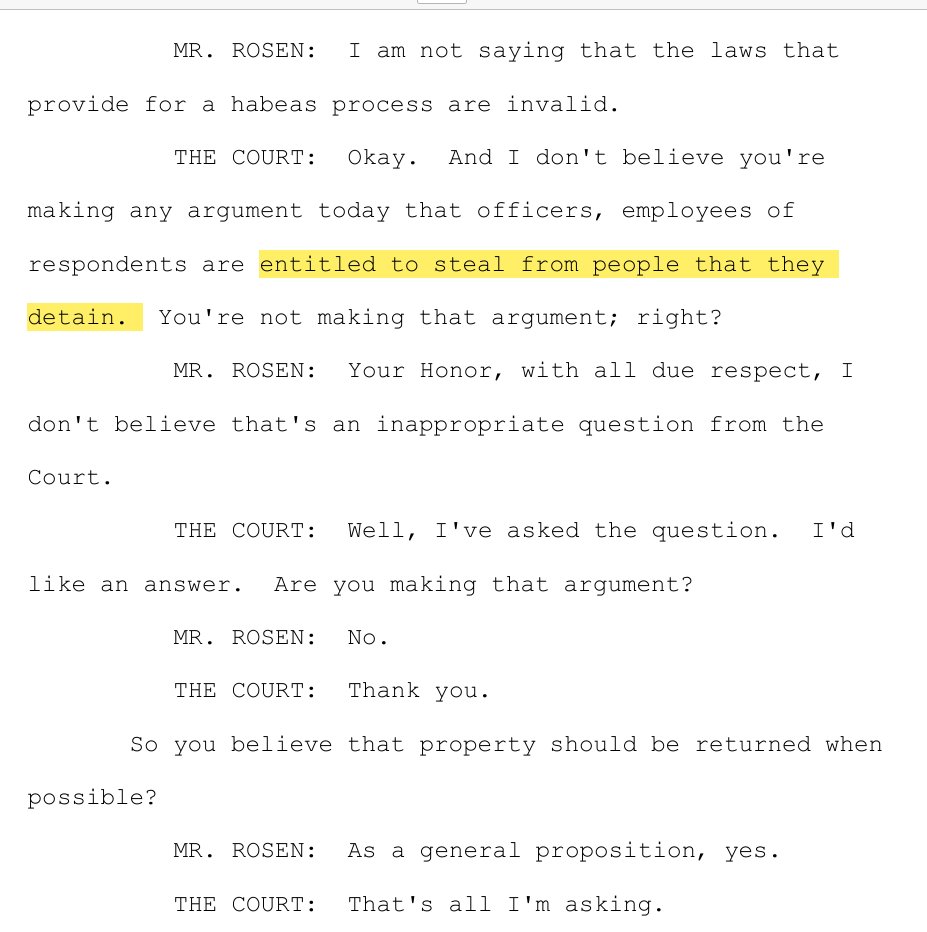

I've read a lot of batshit crazy court transcripts in my day but I just received the transcript of the March 3 contempt hearing before Biden appointee Judge Jeffrey Bryan in Minneapolis. Bryan is desperate to hold Trump officials in contempt for...wait for it...not "immediately" returning personal items to illegal immigrants detained by ICE but then (of course) ordered released by Minneapolis judges.

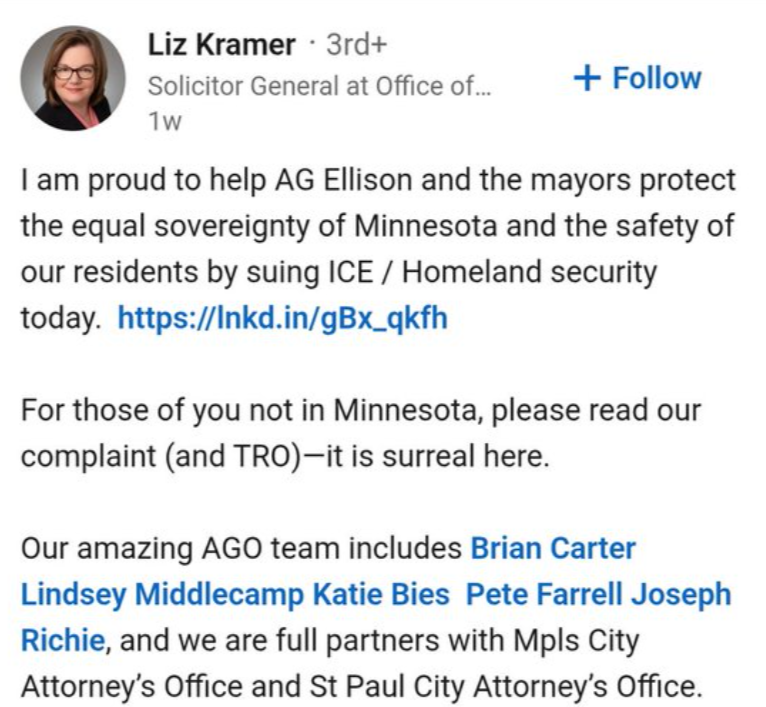

Before I get to the details and parts of the transcript--important to note that Judge Bryan is married to Liz Kramer. She is the solicitor general of the state of Minnesota, appointed to that role by MN AG Keith Ellison. She is a party in numerous lawsuits against the Trump administration including related to immigration/ICE, which should automatically disqualify her husband from handling lawsuits of a similar nature.

But alas...

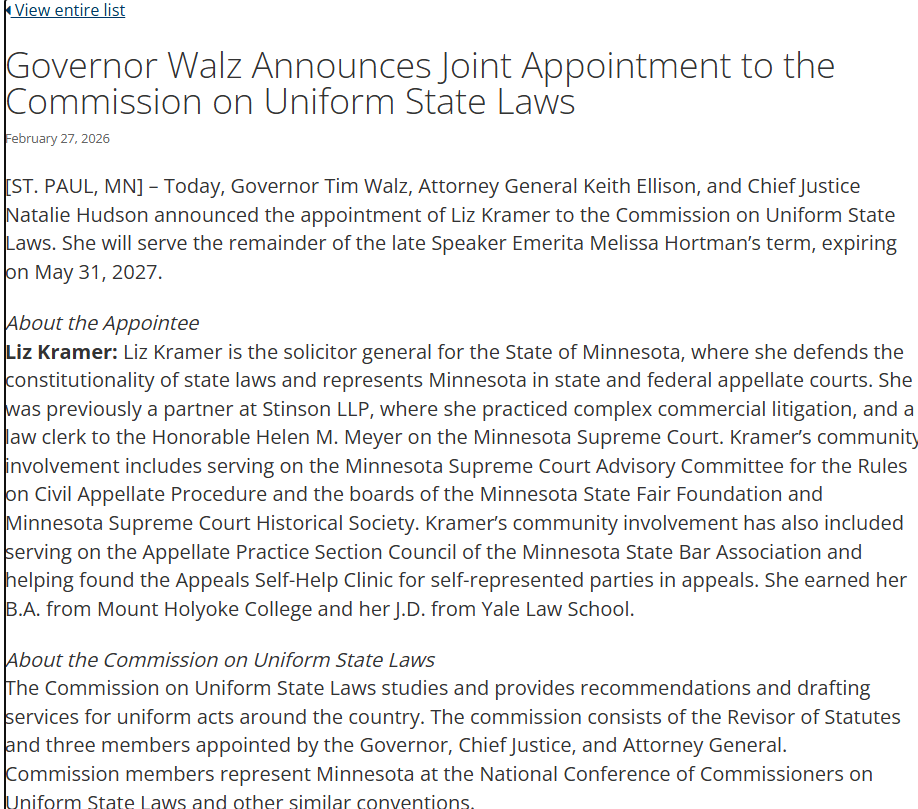

Not only does Liz Kramer work for Ellison, MN Gov Tim Walz just appointed Kramer to a plum position.

NOTHING TO SEE HERE.

Judges are demanding that immigration/DOJ officials IMMEDIATELY return personal items such as "cash, cellphones, jewelry, driver’s licenses, work permits, passports, clothing, and other identification and immigration documents," according to Judge Bryan.

Now keep in mind--many J6ers (and I assume they will post here to back me up) are still awaiting the return of items stolen out of their homes, cars, garages, offices during FBI raids. In fact, a J6er texted me yesterday asking if I could help get his passport back.

But some judges, including Bryan, are not only unlawfully releasing illegals under claims they are entitled to bond hearings (this was overturned by 5th circuit recently, not binding on MN, but very likely the last word by SCOTUS) but setting unreasonable deadlines for their return to MN, release, status reports, and other demands including return of property.

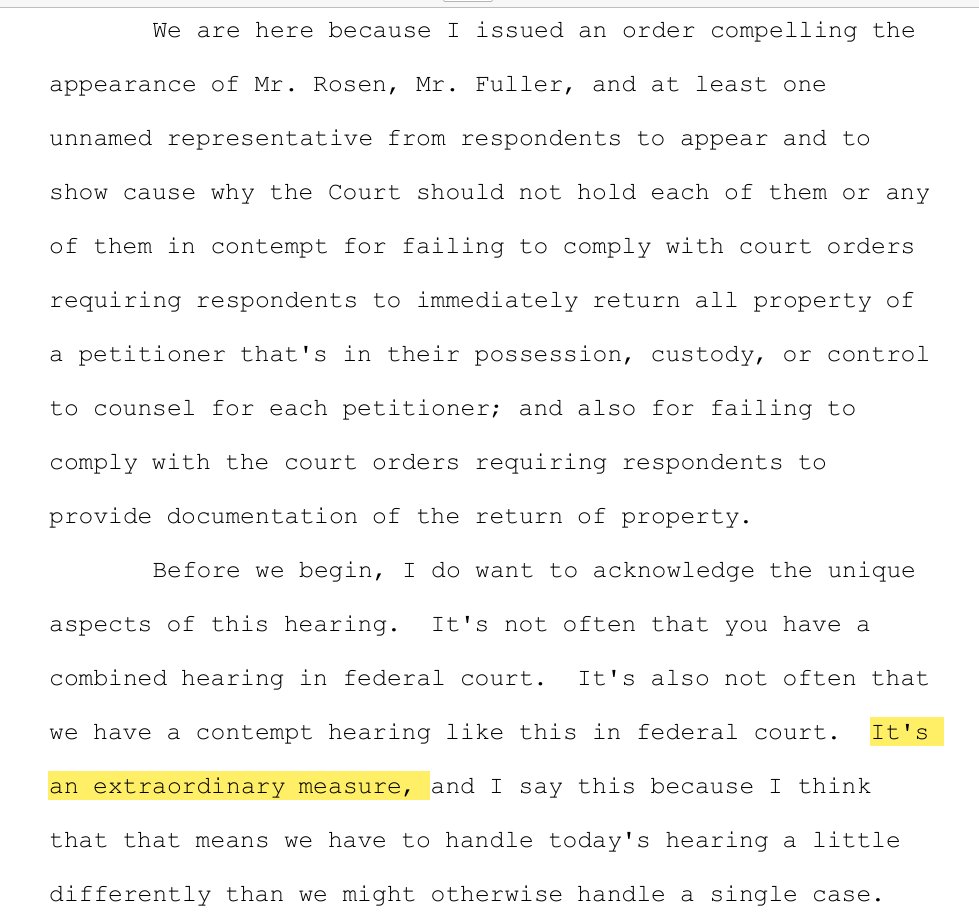

Bryan kicked off the fireworks by noting the unusual nature of such a hearing. (Rosen and Fuller are prosecutors out of US Atty office in MN).

So much to cover here. US Atty Dan Rosen off the bat confronted Judge Bryan about "smearing" him in an opening comment and noted that Judge Bryan issued 52 ORDERS IN ONE DAY on February 20 related to (mostly I believe) the return of personal belongings.

Bryan quickly jumped the shark and accused Trump officials of "stealing" the items lol

Mar 6

Read 3 tweets

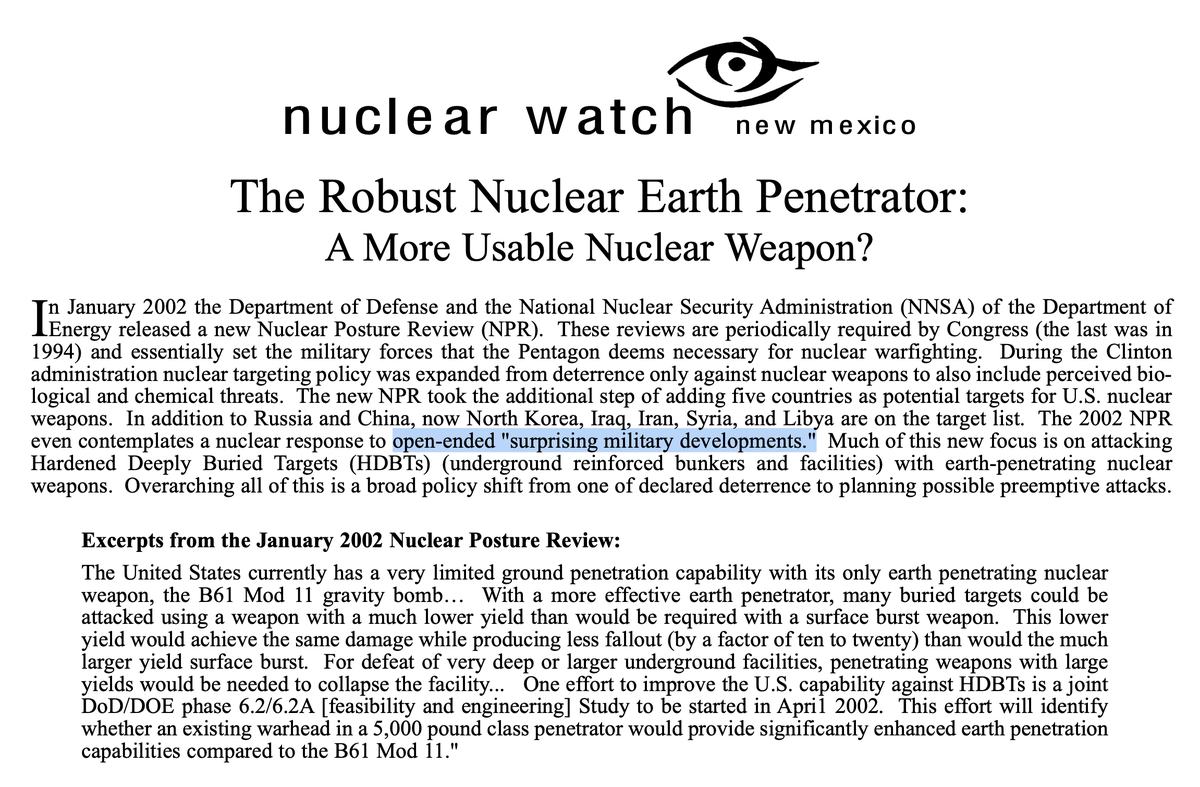

☢️🚨 According to 2002 US military doctrine, the moment the US is surprised by battlefield developments that lead to an open-ended conflict, it will use a nuclear weapon.

The NNSA's Nuclear Posture Review (NPR), published back in January 2002 and leaked to the media, discussed using low-yield and "clean" nuclear weapons in case of such developments to take out hardened facilities, exactly the type used by Iran.

The media discussed this vigorously at the time but the discussion was immediately silenced in the lead up to the Iraq war, which was in fact won using these weapons in a single instance (the Baghdad airport battle).

This is information that is publicly available. The US recently declassified conversations between Bush, Rumsfeld and Putin which actually outlined exactly this discussion.

The shills on Twitter will ridicule this discussion because without this being kept secret, US actions will be seen as cowardly (which demoralises lower level troops), NPT dies further and the risk of a strategic nuclear exchange becomes more likely.

This is actually the main reason I bothered to discuss politics online at all. There is no avenue for this discussion anywhere else.

I'll provide links to available information in the reply. [not exhaustive though, you'll have to wait for PHYS004C and PHYS005/PHYS006].

RNEP information, basically the MMR discussion without much of the details: nukewatch.org/oldsite/facts/…

Leaked out nuclear posture:

fas.org/wp-content/upl…

Discussion of the nuclear strike contingencies:

armscontrol.org/act/2002-04/pr…

Report explaining why MAD died:

sgtreport.com/2022/12/secret…

@drbairdonline

Mar 6

Read 18 tweets

A new PET study in patients with treatment-resistant depression suggests something important - ketamine does not just act generally on glutamate - it appears to reshape AMPA receptor density in specific brain circuits. @DavidJoffe64 🧵

The researchers used [11C]K-2, a tracer that can visualize AMPA receptors in the living human brain.

That matters because AMPA receptors are a key part of glutamatergic signaling.

For years researchers have suspected that ketamine’s rapid antidepressant effects depend on them. This study tries to show that directly in humans, not just in animal models.

Mar 6

Read 13 tweets

If you don't understand your strategy and your goal, you will get demotivated.

Last year I focused on my Brazilian YouTube channel. There, I focus on game design and marketing for indies. The channel grew nice and healthy.

From the get go I knew I would need at least 100 videos

The growth was slow; it wasn't until the 67th video that a single video got 500 views in a week.

By the 94th I was getting a consistent 1k views. Three videos went viral (viral for me means at least 2x the normal audience; these viral videos got 7k views and growing).

I'm on my 100th video, channel monetized, long form (videos there are 40min long on average).

People interested in understanding the ins and outs of game design and running an actual game dev business.

I'll launch a membership product with exclusive courses there.

Mar 6

Read 3 tweets

Ummm...no.

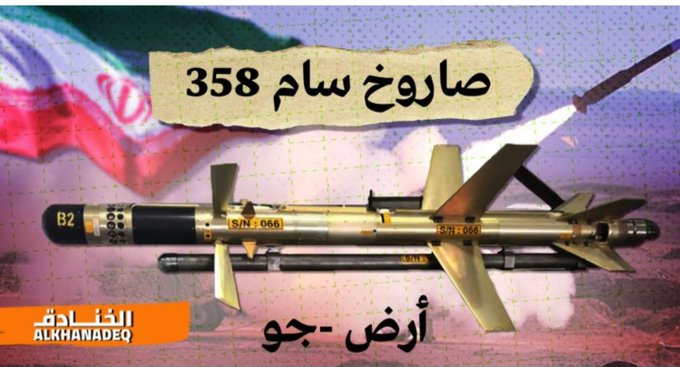

I've mentioned the Iranian “Qaem-118” (Ghaem-118) / “Misagh-358” jet-engine-powered surface-to-air loitering munition more than once.

They can be launched from pick-up trucks and are roughly in the same class as US Coyote II drone interceptors.

1/2

It uses a man-in-the-loop visual seeker.

These links can be jammed, given time and intelligence on the frequency used.

The “Misagh-358” air-breathing loitering drone doesn't use a radar seeker to engage aircraft and, being smaller, is harder to run down than a Shahed.

2/2

@threadreaderapp unroll please