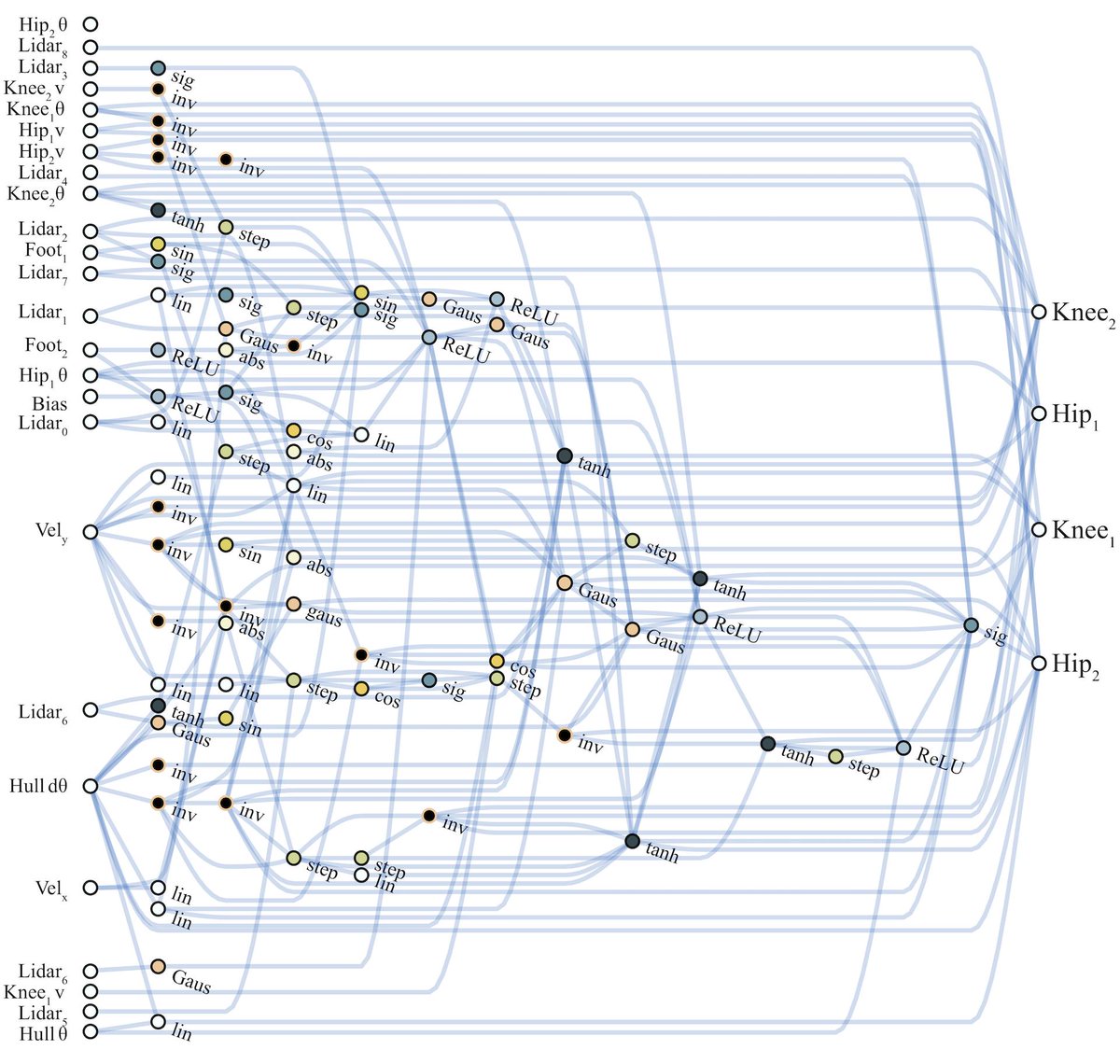

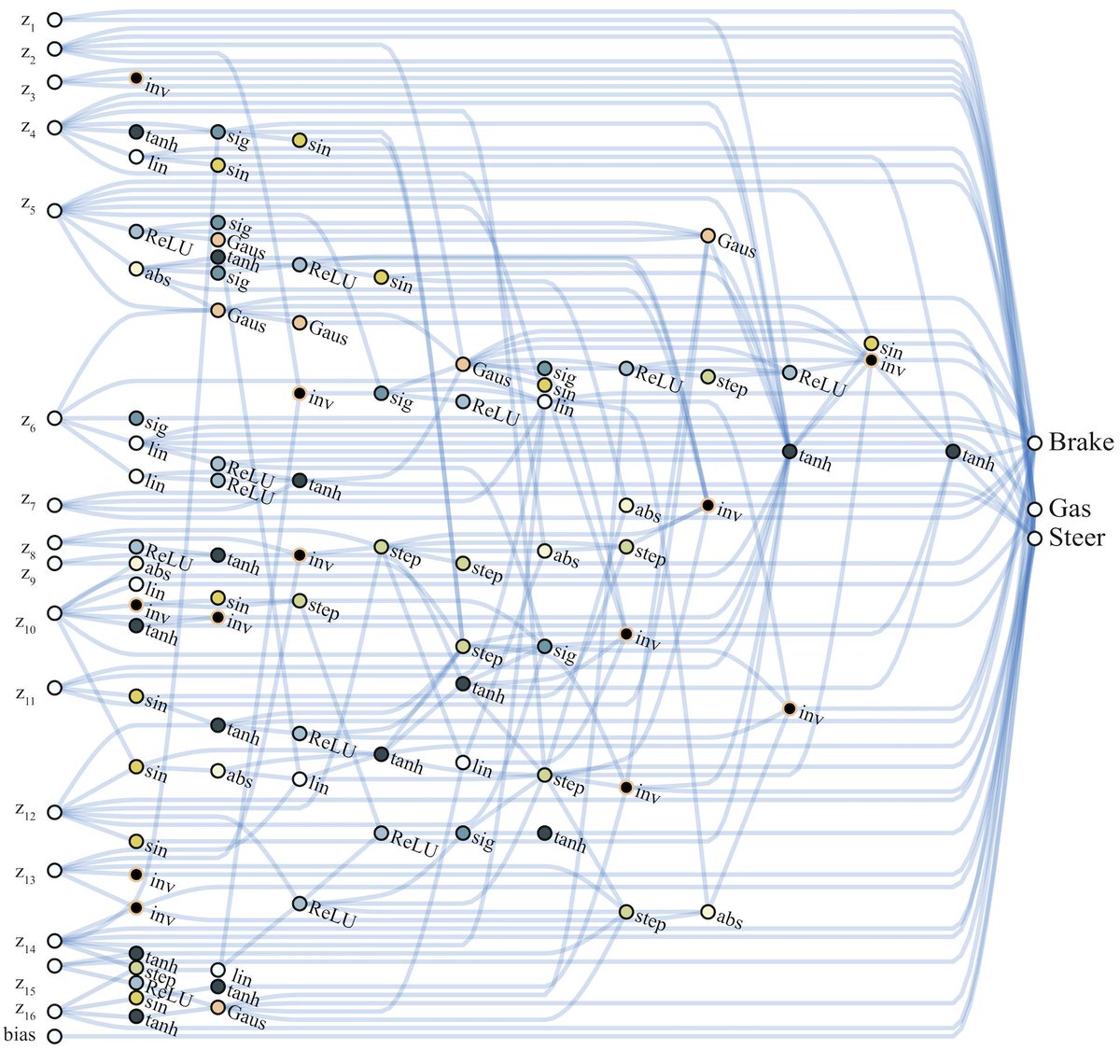

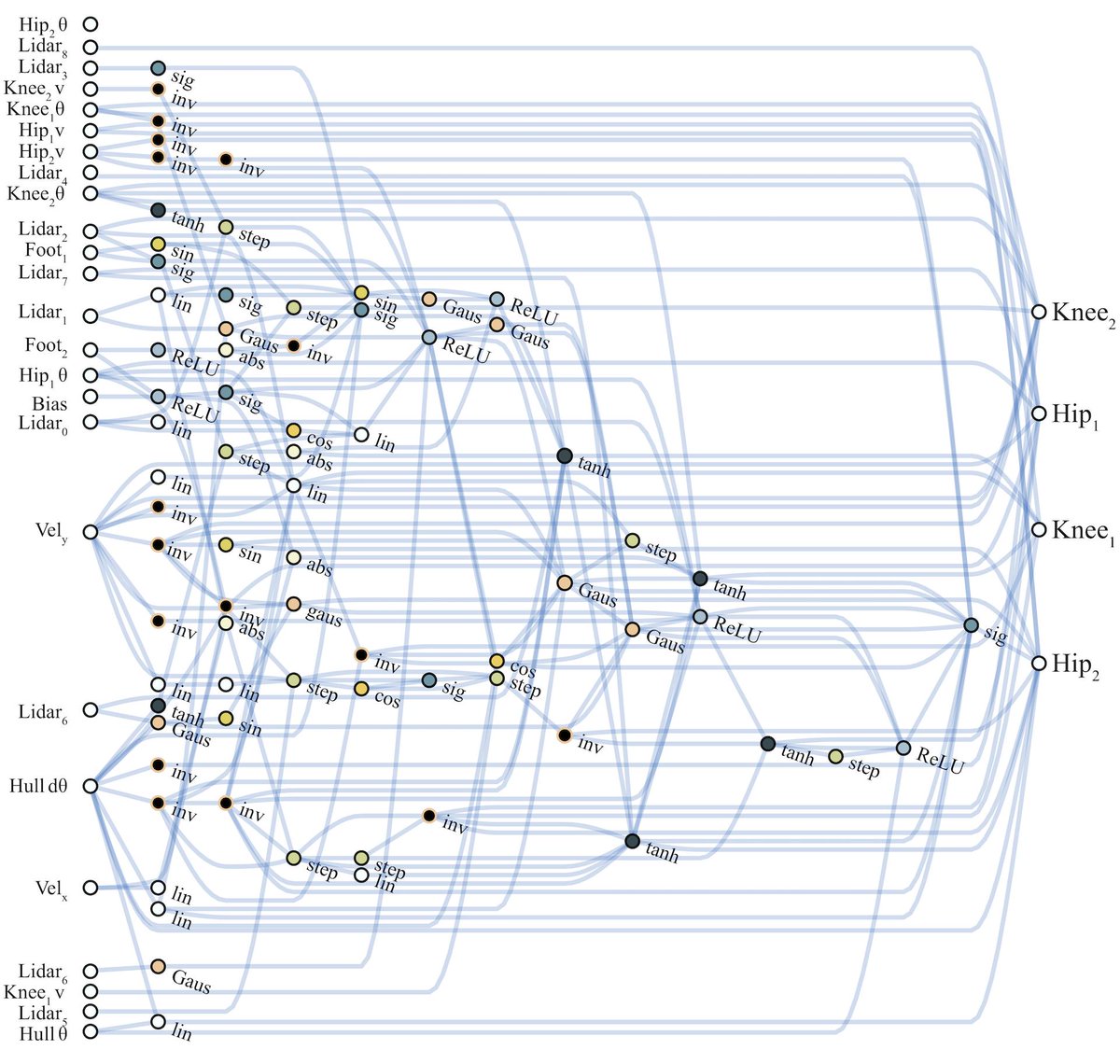

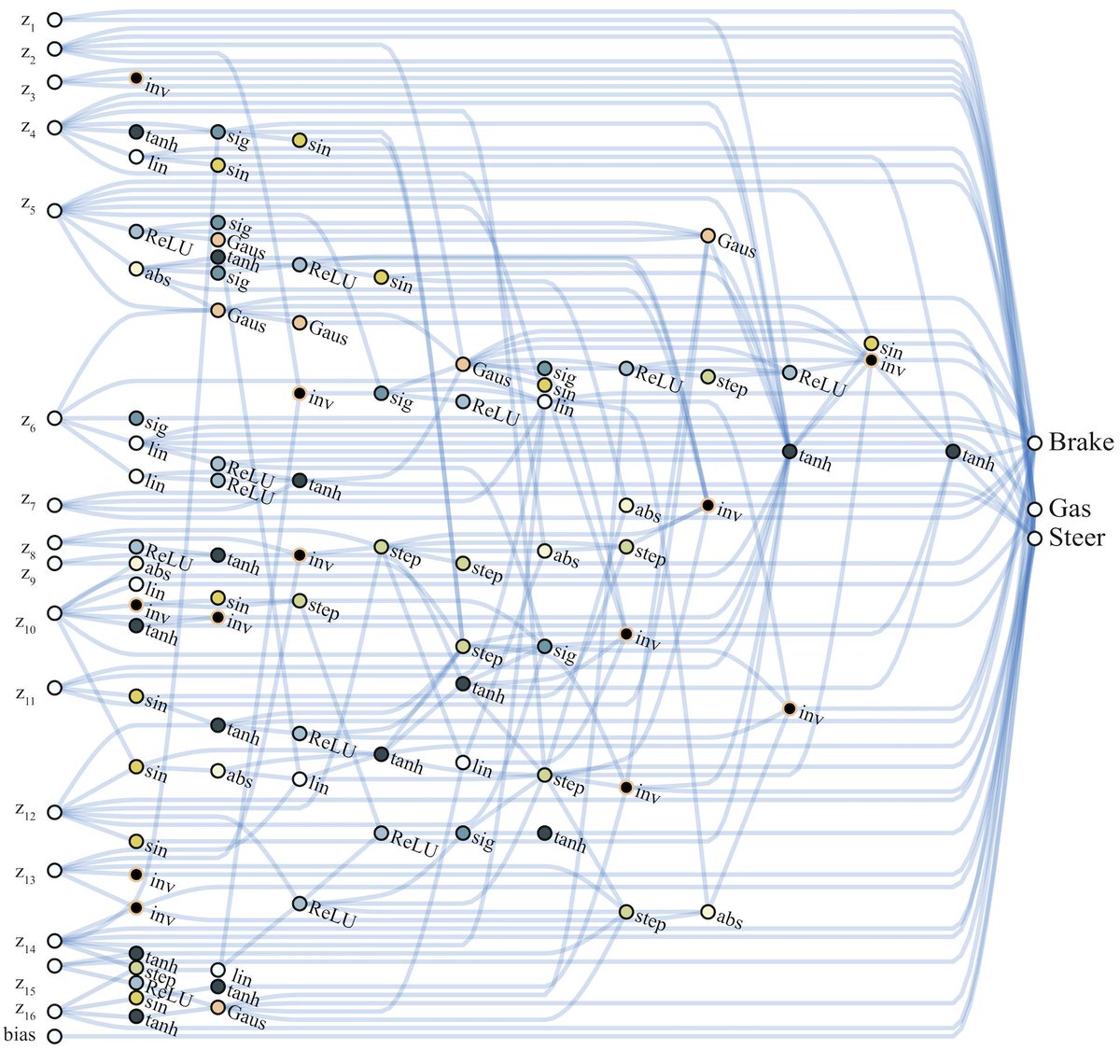

Inspired by precocial species in biology, we set out to search for neural net architectures that can already (sort of) perform various tasks even when they use random weight values.

Article: weightagnostic.github.io

PDF: arxiv.org/abs/1906.04358
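To make the idea concrete, here is a minimal sketch of judging an architecture without training it: the wiring is fixed, every connection uses the same weight value, and the score is averaged over several such values. The topology, activation choices, toy task, and the names `forward`, `mean_score`, and `toy_score` are all hypothetical, chosen only for illustration. Using one shared weight per evaluation is an assumption of this sketch, not the search procedure from the article.

```python
import math

# Hypothetical fixed topology: 2 inputs -> 2 hidden units -> 1 output.
# Each connection is (source, destination); no per-connection weights are stored.
CONNECTIONS = [("x0", "h0"), ("x1", "h0"), ("x1", "h1"), ("h0", "y"), ("h1", "y")]
ACTIVATIONS = {"h0": math.tanh, "h1": math.sin, "y": math.tanh}
ORDER = ["h0", "h1", "y"]  # topological order of the non-input nodes


def forward(inputs, shared_weight):
    """Run the network with one weight value used on every connection."""
    values = dict(inputs)
    for node in ORDER:
        total = sum(
            shared_weight * values[src] for src, dst in CONNECTIONS if dst == node
        )
        values[node] = ACTIVATIONS[node](total)
    return values["y"]


def toy_score(shared_weight):
    """Toy task (assumption): the output sign should match the sign of x0 + x1."""
    samples = [(1.0, 0.5), (-1.0, -0.5), (0.5, 1.0), (-0.5, -1.0)]
    hits = sum(
        (forward({"x0": a, "x1": b}, shared_weight) > 0) == (a + b > 0)
        for a, b in samples
    )
    return hits / len(samples)


def mean_score(score_fn, weights):
    """Average a task score over several weight values, with no training step."""
    return sum(score_fn(w) for w in weights) / len(weights)


if __name__ == "__main__":
    # Illustrative weight values; an architecture that scores well across
    # all of them does not depend on any one carefully tuned weight.
    print(mean_score(toy_score, [-2.0, -1.0, -0.5, 0.5, 1.0, 2.0]))
```

An architecture search would compare many candidate topologies by a score like this one and keep the ones that hold up across weight values.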
