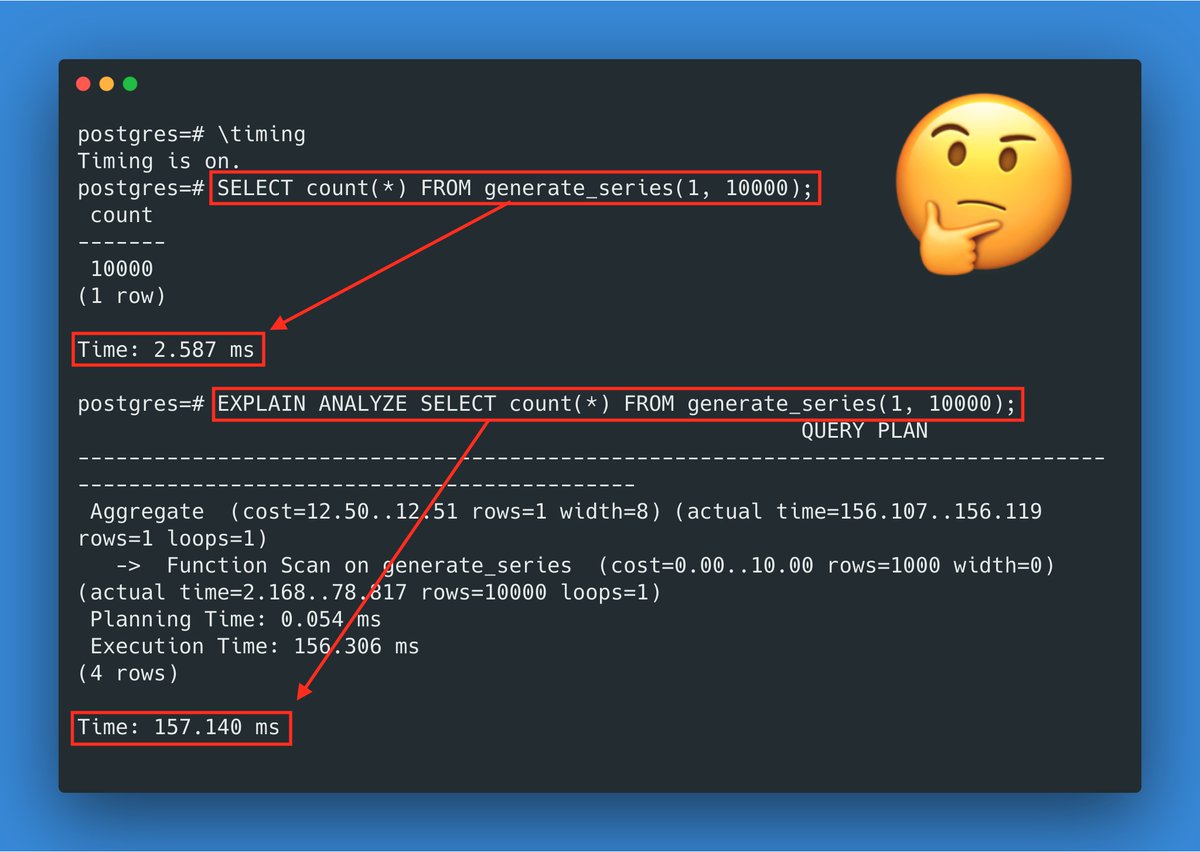

But that's kinda lame, so let's dig deeper.

Docker for Mac works by launching a Linux VM in the background. Maybe the issue is caused by "VM overhead"?

github.com/moby/hyperkit

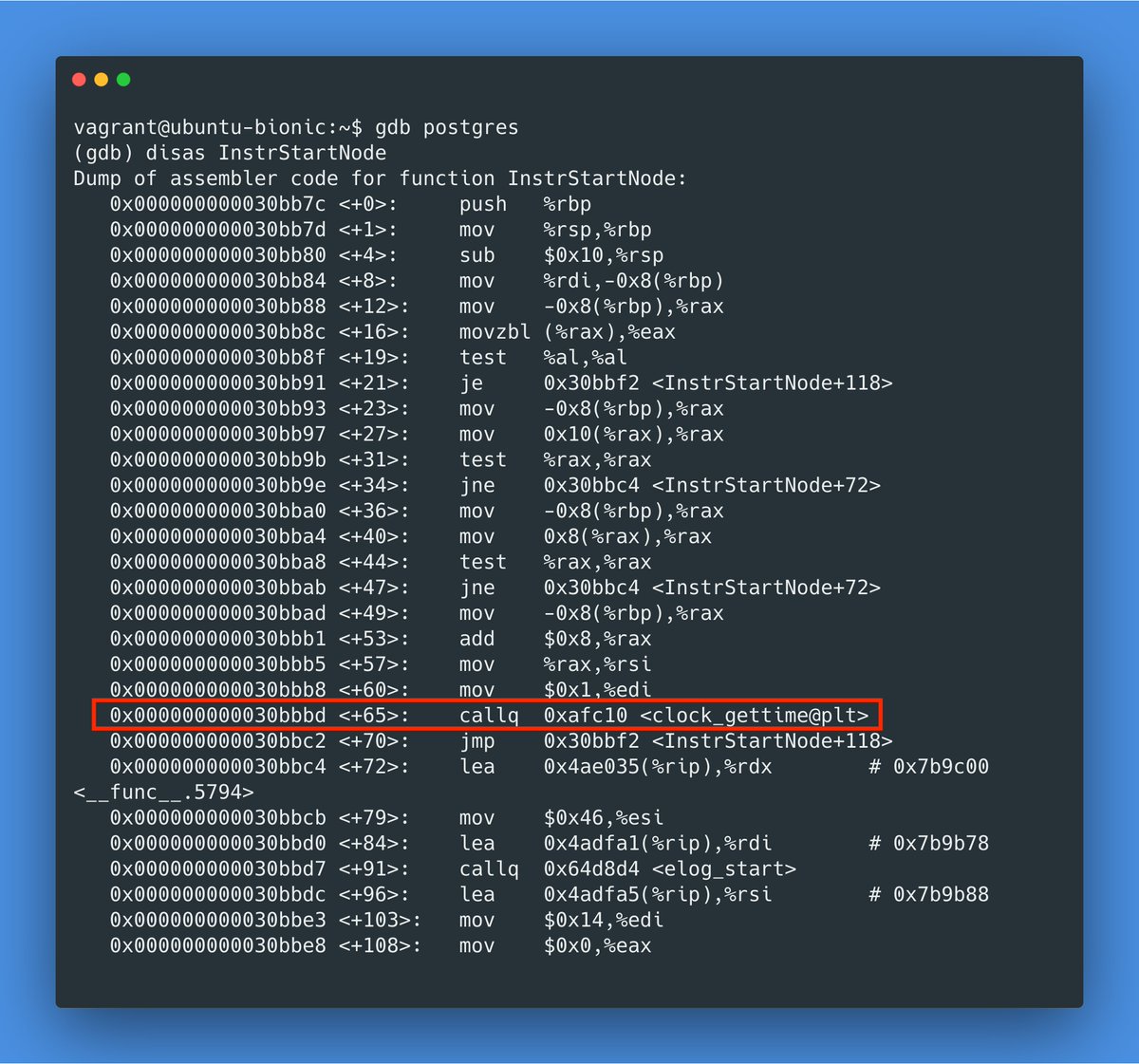

`callq 0xafc10 <clock_gettime@plt>`

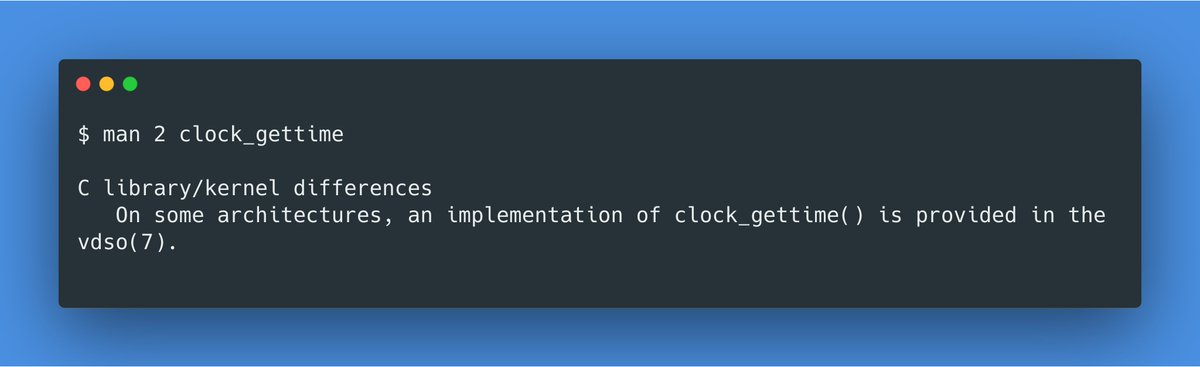

The `@plt` suffix stands for Procedure Linkage Table, which means we're calling into a shared library, in this case libc!

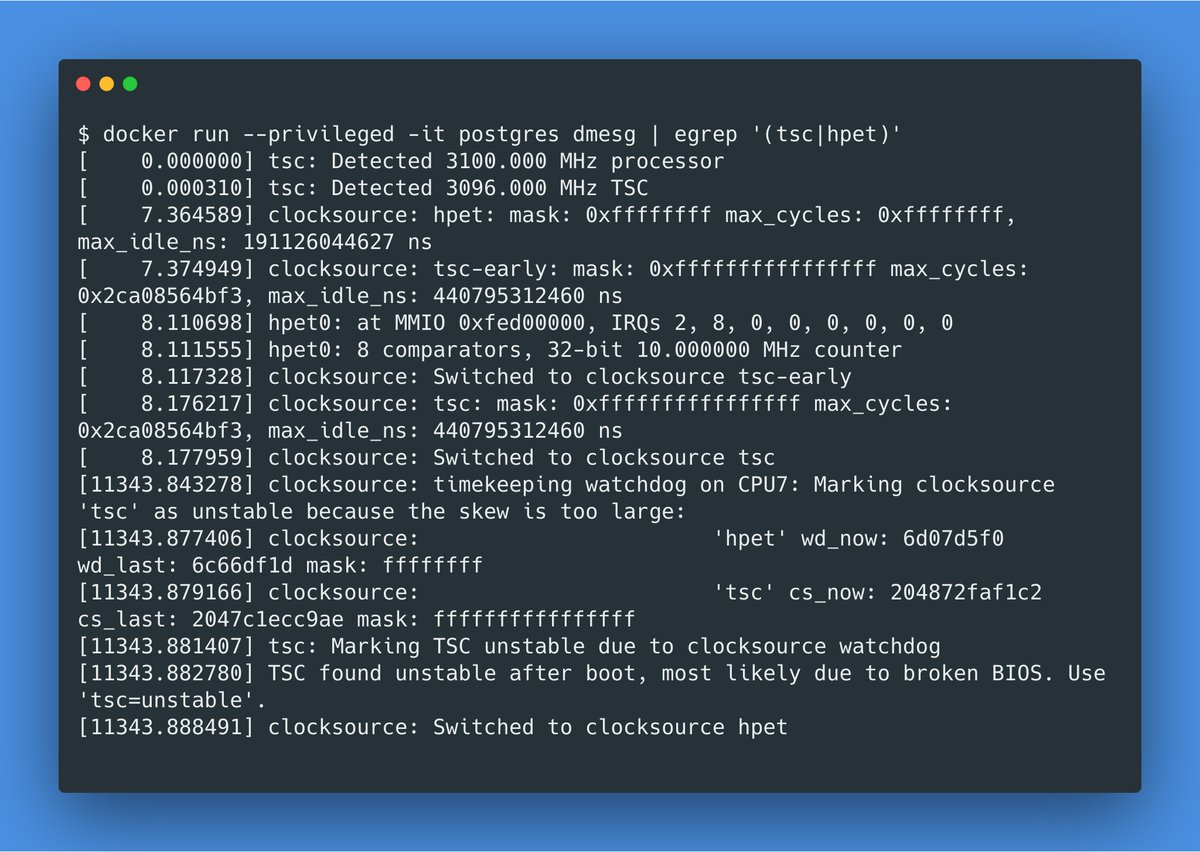

It seems that time drift issues can cause the kernel to change the clocksource, e.g. by marking `tsc` as unstable and falling back to `hpet`.

`docker run --privileged -it postgres dmesg | egrep '(tsc|hpet)'`

benchling.engineering/analyzing-perf…

news.ycombinator.com/item?id=169256…

docker.com/blog/addressin…

Unfortunately, GitHub issues for it don't seem to be getting much attention, despite this bug impacting a lot of applications. Here is a report of PHP requests being slowed down 3x:

github.com/docker/for-mac…

blog.packagecloud.io/eng/2017/03/08…

One workaround seems to be restarting Docker for Mac, but it's usually just a matter of time before the clocksource issue hits you again.

On my team at work, we've agreed to always run PostgreSQL directly on macOS when doing performance-related work.

As an application developer, you will probably not be able to master all of this complexity. But you will be asked to explain why your stuff is slow.

Learn enough C to be able to read it, enough gdb to set some breakpoints, and enough perf tools to trace syscalls and function calls.

And soon enough, even the most daunting PostgreSQL performance mysteries will reveal themselves.

We support relocation, and my DMs are open for any questions!

jobs.apple.com/en-us/details/…